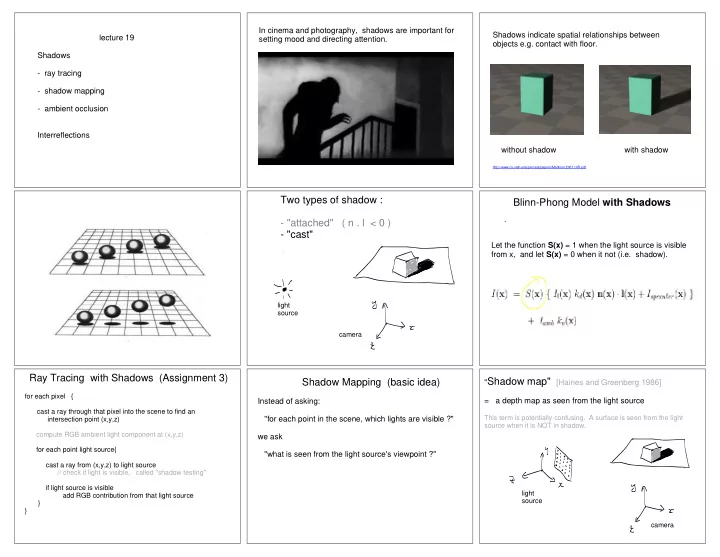

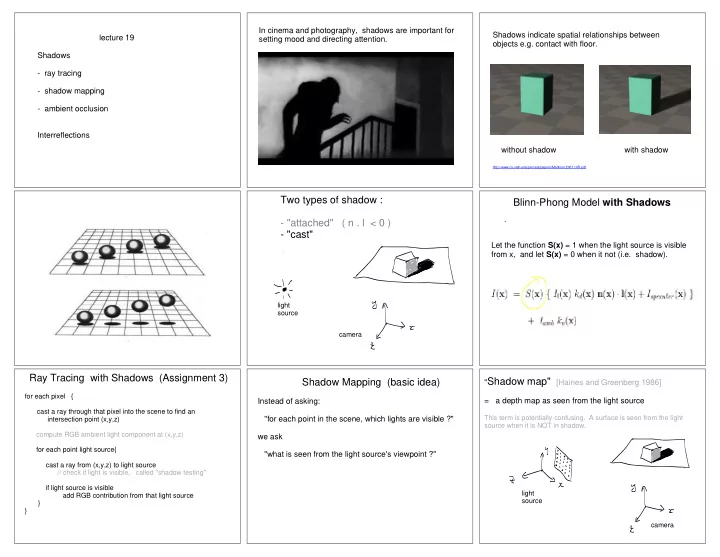

lecture 19

Shadows
- ray tracing
- shadow mapping
- ambient occlusion

Interreflections

In cinema and photography, shadows are important for setting mood and directing attention.

Shadows indicate spatial relationships between objects, e.g. contact with the floor.

[Figure: the same scene rendered without shadow and with shadow]
http://www.cs.utah.edu/percept/papers/Madison:2001:UIS.pdf

Two types of shadow:
- "attached" ( n . l < 0 )
- "cast"

Blinn-Phong Model with Shadows

Let the function S(x) = 1 when the light source is visible from x, and let S(x) = 0 when it is not (i.e. x is in shadow).

[Figure: light source and camera]

Ray Tracing with Shadows (Assignment 3)

    for each pixel {
        cast a ray through that pixel into the scene to find an
            intersection point (x,y,z)
        compute RGB ambient light component at (x,y,z)
        for each point light source {
            cast a ray from (x,y,z) to light source   // check if light is visible, called "shadow testing"
            if light source is visible
                add RGB contribution from that light source
        }
    }

Shadow Mapping (basic idea)

Instead of asking:
"for each point in the scene, which lights are visible ?"
we ask
"what is seen from the light source's viewpoint ?"

[Figure: light source and camera]

"Shadow map" [Haines and Greenberg 1986]
= a depth map as seen from the light source

This term is potentially confusing. A surface is seen from the light source when it is NOT in shadow.
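The ray tracing loop with shadow testing can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Assignment 3 solution: the occluders are spheres, there is a single point light, only an ambient and a diffuse term are used (the specular part of Blinn-Phong is left out), and the names `hit_sphere`, `light_visible` and `shade` are made up for this example.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(a):
    n = math.sqrt(dot(a, a))
    return tuple(x / n for x in a)

def hit_sphere(origin, direction, center, radius):
    """Smallest t > 0 with origin + t*direction on the sphere, else None.
    direction is assumed to be a unit vector."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:          # small offset avoids hitting the start point itself
            return t
    return None

def light_visible(point, light_pos, spheres):
    """Shadow test: S(x) = 1 if no sphere blocks the segment point -> light, else 0."""
    to_light = sub(light_pos, point)
    dist = math.sqrt(dot(to_light, to_light))
    direction = normalize(to_light)
    for center, radius in spheres:
        t = hit_sphere(point, direction, center, radius)
        if t is not None and t < dist:
            return 0
    return 1

def shade(point, normal, light_pos, spheres, ambient=0.1, k_d=0.9):
    """Ambient term plus a diffuse term that is switched off by S(x) in shadow."""
    s = light_visible(point, light_pos, spheres)
    n_dot_l = max(0.0, dot(normal, normalize(sub(light_pos, point))))
    return ambient + s * k_d * n_dot_l

# Hypothetical scene: one sphere hanging between the light and the ground y = 0.
spheres = [((0.0, 5.0, 0.0), 1.0)]
light = (0.0, 10.0, 0.0)
up = (0.0, 1.0, 0.0)
in_shadow = shade((0.0, 0.0, 0.0), up, light, spheres)   # directly below the sphere
lit = shade((5.0, 0.0, 0.0), up, light, spheres)         # off to the side
```

The point under the sphere receives only the ambient term, which is the cast shadow case; an attached shadow would instead show up as `n_dot_l` being clamped to zero.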

Coordinate Systems

[Figure: a light source, a surface that casts a shadow, and a surface that receives the shadow. On the shadow map projection plane at (x_shadow, y_shadow), z_light = z_shadow for the surface that casts the shadow and z_light > z_shadow for the surface that receives it.]

Notation:

Let (x_light, y_light, z_light) be light source coordinates.

Let (x_shadow, y_shadow, z_shadow) be shadow map coordinates, with z_shadow having 16 bits per pixel.

In both cases, assume the points have been projectively transformed, and coordinates are normalized to [0, 1] x [0, 1] x [0, 1].

[Figure: left, the "shadow map" z_shadow(x_shadow, y_shadow) from the light source viewpoint; right, the RGB image (with shadows) I(x_p, y_p) from the camera viewpoint]
http://www.opengl-tutorial.org/intermediate-tutorials/tutorial-16-shadow-mapping/

This illustration shows a perspective view, but in fact the comparisons are done in normalized coordinates (i.e. after the projective transform).

Shadow Mapping algorithm (sketch)

    for each camera image pixel (x_p, y_p) {
        find depth z_p of closest visible surface   // using whatever method
        transform (x_p, y_p, z_p) to light coordinates (x_light, y_light, z_light)
        compare z_light to z_shadow(x_shadow, y_shadow) to decide if
            3D point is in shadow
    }

Shadow Mapping: a two-pass algorithm (and more details)

Pass 1:

    Compute only a shadow map z_shadow.
    // Assume just one light source (can be generalized)

Pass 2: compute RGB

    for each camera image pixel (x_p, y_p) {
        find depth z_p of closest visible surface
        transform (x_p, y_p, z_p) to light coordinates (x_light, y_light, z_light)
        (x_shadow, y_shadow) = discretize(x_light, y_light)   // to pixel positions
                                                              // in shadow map
        if z_light > z_shadow(x_shadow, y_shadow)
            S(x_p, y_p) = 0   // light source is not visible from point
                              // i.e. point in shadow
        else
            S(x_p, y_p) = 1   // light source is visible from point
        calculate RGB value e.g. using Blinn-Phong model with shadow
    }

[Figure: scene point (x, y, z), the shadow map in front of the light source, and pixel (x_p, y_p) in front of the camera]

Shadow Mapping and Aliasing

[Figure: rendered image with aliasing artifacts in the shadows]
http://www.opengl-tutorial.org/intermediate-tutorials/tutorial-16-shadow-mapping/

What causes the aliasing shown on the previous slide? Consider the 2D xz example below.

- (x_light, z_light) is on a continuous visible surface (blue curve).
- (x_shadow, z_shadow) is in the discretized shadow map (black points).

The shadow condition, z_shadow(x_shadow) < z_light, is supposed to be false because all points on the surface are visible. However, because of discretization, the condition is often true and the algorithm mistakenly concludes that some points are shadowed.

[Figure: z_light and z_shadow plotted against x_shadow]

To conclude that (x_light, z_light) is in shadow, in the presence of discretization, we require that a stronger condition is met, where ε is a small positive bias:

z_shadow(x_shadow) < z_light - ε

However, as we show on the next slide, this can lead us to conclude that a point is not in shadow when in fact it is in shadow.

[Figure: z_shadow plotted against x_shadow for a vertical wall standing on the ground]

In fact, there is no gap between the ground and the vertical wall. Yet the algorithm allows light to leak under the wall. It fails to detect this shadow.
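The aliasing problem and the effect of a bias can be reproduced with a small 2D xz sketch in Python. Everything here is an assumption made for illustration: the light looks straight down an orthographic z axis, the surface is a hypothetical slanted line that is fully visible from the light, and the names `surface_depth`, `build_shadow_map`, `visibility` and `count_false_shadows` are invented for this example.

```python
def surface_depth(x):
    """Depth (distance from the light) of a hypothetical slanted surface.
    Every point on it is visible from the light, so none should be shadowed."""
    return 0.2 + 0.5 * x

def build_shadow_map(depth_fn, n_texels):
    """Pass 1: store the depth of the closest surface at each texel center."""
    return [depth_fn((i + 0.5) / n_texels) for i in range(n_texels)]

def visibility(x_light, z_light, shadow_map, bias=0.0):
    """Pass 2 test: S = 0 (in shadow) if z_light > z_shadow + bias, else S = 1."""
    n = len(shadow_map)
    x_shadow = min(int(x_light * n), n - 1)   # discretize to a texel index
    return 0 if z_light > shadow_map[x_shadow] + bias else 1

def count_false_shadows(n_texels, n_samples, bias):
    """Number of visible surface samples that the test wrongly marks as shadowed."""
    shadow_map = build_shadow_map(surface_depth, n_texels)
    xs = [(k + 0.5) / n_samples for k in range(n_samples)]
    return sum(1 - visibility(x, surface_depth(x), shadow_map, bias) for x in xs)

no_bias = count_false_shadows(n_texels=8, n_samples=64, bias=0.0)
with_bias = count_false_shadows(n_texels=8, n_samples=64, bias=0.05)

# The price of the bias: a point that really is behind an occluder, but by
# less than the bias, is reported as lit ("light leaking").
shadow_map = build_shadow_map(surface_depth, 8)
leak = visibility(0.0625, shadow_map[0] + 0.02, shadow_map, bias=0.05)
```

Without a bias, the samples that fall on the far side of each texel center are deeper than the stored depth and are marked as shadowed even though the surface is fully lit. A bias larger than the depth variation inside a texel removes these false shadows, but the last line shows the trade-off: a truly occluded point whose depth gap is smaller than the bias is treated as visible.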

Real Time Rendering

How are shadows computed in the OpenGL pipeline ?

[Pipeline diagram: vertices -> vertex processor -> "primitive assembly" & clipping -> rasterization -> fragments -> fragment processor -> pixels]

Pass 1: make shadow map and store as a texture

Pass 2: make RGB image using shadow map

Pass 1 (Compute shadow map)

vertex shader
- transform vertices to light coordinates (x_light, y_light, z_light), in clip coordinates

rasterizer
- for each light source pixel (x_shadow, y_shadow), find depth z_shadow of closest surface

fragment shader
- store depths as a texture z_shadow(x_shadow, y_shadow)

Pass 2 (make RGB image with shadows)

vertex shader
- transform vertex to camera coordinates (x_p, y_p, z) and to light source coordinates (x_light, y_light, z_light)   // as in pass 1

rasterizer
- generate fragments (each fragment needs (x_p, y_p), n, z, x_shadow, y_shadow, z_light)

The rasterizer does not have access to the shadow map computed in the first pass. The fragment shader (below) has access to it.

fragment shader
- if z_shadow(x_shadow, y_shadow) + ε < z_light, compute RGB using ambient only   // in shadow
- else compute RGB using Blinn-Phong   // not in shadow

lecture 19

shadows and interreflections
- shadow maps
- ambient occlusion
- global illumination: interreflections

How to handle 'diffuse lighting' ?
- outdoors on an overcast day
- uniformly illuminated indoor scene, e.g. classroom, factory, office, retail store

Visibility of the "sky"

"Sky" visibility varies throughout the scene.

Solution 1: (cheap) use attached shadows only.

I skipped this in the lecture because I thought I was running out of time. See Exercises.

Assume:
- the light source is a uniform distant hemisphere
- the fraction of the source that is visible is determined only by the surface normal.

Key limitation: the model on the previous slide cannot account for cast shadows, e.g. illumination variations along the ground plane or along the planar side of the gully, since the normal is constant on a plane.

What is the difference between this model and a "sunny day" ?

http://http.developer.nvidia.com/GPUGems/gpugems_ch17.html

Solution 2: Ray tracing (expensive)

    for each pixel in the image {
        cast a ray through the scene point to find the nearest
            surface (x,y,z)
        shoot out rays from (x, y, z) into the hemisphere
            and check which of them reach the "sky"
            i.e. infinity or some finite distance
        add up environment contribution of rays that reach the "sky"
            // you could have a non-uniform sky
    }

Solution 3: Ambient Occlusion [Zhukov,1998]

    // precompute
    for each vertex x {
        shoot out rays into the hemisphere and calculate the
            fraction of rays, S(x) in [0, 1], that reach the "sky"
        // S(x) is an attribute of x, along with n and material
        // If you are willing to use more memory, then store
        // a boolean map S(x, l), where l is direction of light
        // See Exercises.
    }
    // We say that S(x) is "baked" into the surface.

This allows for real-time rendering (moving the camera).

[Figure: I_diffuse(x) = n(x) . l] This example has no shadows, a point source at upper right, and uniform reflectance.

[Figure: I_diffuse(x) = S(x)] Ambient occlusion can replace the n(x) . l term in a more general Blinn-Phong model. Compare the far leg here with the above example.

Examples of ambient occlusion

http://www.adamlhumphreys.com/gallery/lighting_ca/19

Q: How to do ambient occlusion with indoor scenes ?

A: For each vertex, compute S(x) in [0, 1] by considering only surfaces within some distance of x.

Ambient Occlusion in My Own Research

No, I will not ask about this (or the next two slides) on the final exam.

Ph.D. thesis
- "Shape from shading on a cloudy day" (Langer & Zucker, 1994)

I independently discovered the principle of ambient occlusion. I used it to introduce a new version of a classic computer vision problem (shape from shading).

[Figure: input image, computed mesh, true mesh]

Post-doctoral Research [Langer & Buelthoff, 2000]
- I carried out the first shape perception experiments that compared images rendered with vs. without ambient occlusion.

[Figure: mesh rendered with ambient occlusion]
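The precomputation in Solution 3 can be sketched as a small Monte Carlo estimate in Python. This is a sketch under assumptions, not the method used to make the images above: occluders are spheres, ray directions are drawn uniformly over the hemisphere (so S(x) is literally the fraction of rays that escape), and the function names and the `max_dist` parameter are invented here. Setting `max_dist` to a finite value corresponds to the indoor variant, where only surfaces within some distance of x count as occluders.

```python
import math
import random

def sample_hemisphere(normal, rng):
    """Uniform random unit direction d with dot(d, normal) >= 0."""
    while True:
        d = tuple(rng.gauss(0.0, 1.0) for _ in range(3))
        length = math.sqrt(sum(c * c for c in d))
        if length > 1e-9:
            d = tuple(c / length for c in d)
            break
    if sum(a * b for a, b in zip(d, normal)) < 0:
        d = tuple(-c for c in d)     # flip into the hemisphere around the normal
    return d

def ray_hits_sphere(origin, direction, center, radius, max_dist):
    """True if the ray hits the sphere at a distance t with 0 < t < max_dist."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = sum(a * d for a, d in zip(oc, direction))
    c = sum(a * a for a in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return False
    root = math.sqrt(disc)
    return any(1e-6 < t < max_dist for t in (-b - root, -b + root))

def ambient_occlusion(x, normal, spheres, n_rays=2000, max_dist=math.inf, seed=0):
    """Estimate S(x) in [0, 1]: the fraction of hemisphere rays that reach the 'sky'."""
    rng = random.Random(seed)
    reached = 0
    for _ in range(n_rays):
        d = sample_hemisphere(normal, rng)
        if not any(ray_hits_sphere(x, d, c, r, max_dist) for c, r in spheres):
            reached += 1
    return reached / n_rays

# Hypothetical scene: a unit sphere hovering above the ground plane y = 0.
spheres = [((0.0, 1.5, 0.0), 1.0)]
up = (0.0, 1.0, 0.0)
s_under = ambient_occlusion((0.0, 0.0, 0.0), up, spheres)    # directly below the sphere
s_far = ambient_occlusion((10.0, 0.0, 0.0), up, spheres)     # far from the sphere
s_local = ambient_occlusion((0.0, 0.0, 0.0), up, spheres, max_dist=0.4)
```

In a renderer the resulting value would be stored per vertex ("baked") next to the normal and material, so that moving the camera requires no new rays. A cosine-weighted sampling of directions is a common alternative to the uniform sampling assumed here.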
