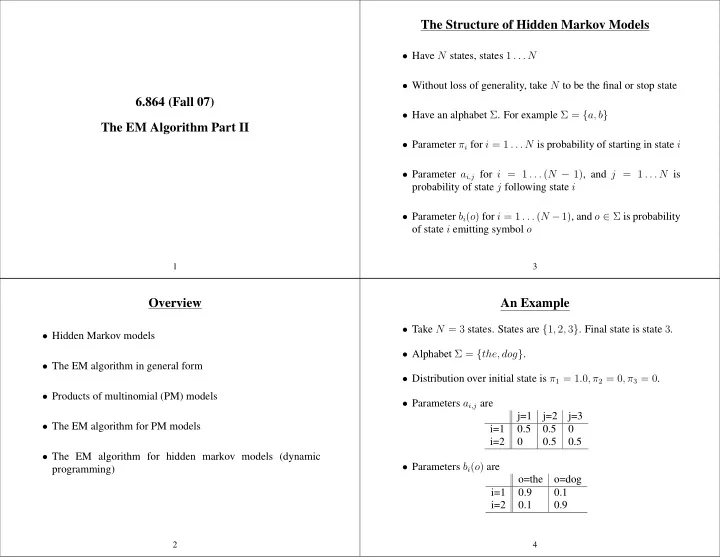

The Structure of Hidden Markov Models • Have N states, states 1 . . . N • Without loss of generality, take N to be the final or stop state 6.864 (Fall 07) • Have an alphabet Σ . For example Σ = { a, b } The EM Algorithm Part II • Parameter π i for i = 1 . . . N is probability of starting in state i • Parameter a i,j for i = 1 . . . ( N − 1) , and j = 1 . . . N is probability of state j following state i • Parameter b i ( o ) for i = 1 . . . ( N − 1) , and o ∈ Σ is probability of state i emitting symbol o 1 3 Overview An Example • Take N = 3 states. States are { 1 , 2 , 3 } . Final state is state 3 . • Hidden Markov models • Alphabet Σ = { the, dog } . • The EM algorithm in general form • Distribution over initial state is π 1 = 1 . 0 , π 2 = 0 , π 3 = 0 . • Products of multinomial (PM) models • Parameters a i,j are j=1 j=2 j=3 • The EM algorithm for PM models i=1 0.5 0.5 0 i=2 0 0.5 0.5 • The EM algorithm for hidden markov models (dynamic • Parameters b i ( o ) are programming) o=the o=dog i=1 0.9 0.1 i=2 0.1 0.9 2 4

A Generative Process Hidden Markov Models • Pick the start state s 1 to be state i for i = 1 . . . N with • An HMM specifies a probability for each possible ( x, y ) pair, probability π i . where x is a sequence of symbols drawn from Σ , and y is a sequence of states drawn from the integers 1 . . . ( N − 1) . The sequences x and y are restricted to have the same length. • Set t = 1 • E.g., say we have an HMM with N = 3 , Σ = { a, b } , and with • Repeat while current state s t is not the stop state ( N ): some choice of the parameters Θ . Take x = � a, a, b, b � and – Emit a symbol o t ∈ Σ with probability b s t ( o t ) y = � 1 , 2 , 2 , 1 � . Then in this case, – Pick the next state s t +1 as state j with probability a s t ,j . P ( x, y | Θ) = π 1 a 1 , 2 a 2 , 2 a 2 , 1 a 1 , 3 b 1 ( a ) b 2 ( a ) b 2 ( b ) b 1 ( b ) – t = t + 1 5 7 Probabilities Over Sequences Hidden Markov Models • An output sequence is a sequence of observations o 1 . . . o T In general, if we have the sequence x = x 1 , x 2 , . . . x n where where each o i ∈ Σ each x j ∈ Σ , and the sequence y = y 1 , y 2 , . . . y n where each e.g. the dog the dog dog the y j ∈ 1 . . . ( N − 1) , then • A state sequence is a sequence of states s 1 . . . s T where each n n � � s i ∈ { 1 . . . N } P ( x, y | Θ) = π y 1 a y n ,N a y j − 1 ,y j b y j ( x j ) e.g. 1 2 1 2 2 1 j =2 j =1 • HMM defines a probability for each state/output sequence pair e.g. the/1 dog/2 the/1 dog/2 the/2 dog/1 has probability π 1 b 1 ( the ) a 1 , 2 b 2 ( dog ) a 2 , 1 b 1 ( the ) a 1 , 2 b 2 ( dog ) a 2 , 2 b 2 ( the ) a 2 , 1 b 1 ( dog ) a 1 , 3 6 8

A Hidden Variable Problem Overview • We have an HMM with N = 3 , Σ = { e, f, g, h } • Hidden Markov models • We see the following output sequences in training data • The EM algorithm in general form e g e h • Products of multinomial (PM) models f h f g • The EM algorithm for PM models • The EM algorithm for hidden markov models (dynamic • How would you choose the parameter values for π i , a i,j , and programming) b i ( o ) ? 9 11 Another Hidden Variable Problem EM: the Basic Set-up • We have some data points—a “sample”— x 1 , x 2 , . . . x m . • We have an HMM with N = 3 , Σ = { e, f, g, h } • For example, each x i might be a sentence such as “the • We see the following output sequences in training data dog slept”: this will be the case in EM applied to hidden Markov models (HMMs) or probabilistic context-free- e g h (Note that in this case each x i is a grammars (PCFGs). e h sequence , which we will sometimes write x i 1 , x i 2 , . . . x i n i where f h g n i is the length of the sequence.) f g g e h • Or in the three coins example (see the lecture notes), each x i might be a sequence of three coin tosses, such as HHH , THT , or TTT . • How would you choose the parameter values for π i , a i,j , and b i ( o ) ? 10 12

• Given the sample x 1 , x 2 , . . . x m , we define the likelihood as • We have a parameter vector Θ . For example, see the description of HMMs in the previous section. As another m m example, in a PCFG, Θ would contain the probability P ( α → L ′ (Θ) = � P ( x i | Θ) = � � P ( x i , y | Θ) β | α ) for every rule expansion α → β in the context-free i =1 i =1 y grammar within the PCFG. and we define the log-likelihood as m m L (Θ) = log L ′ (Θ) = log P ( x i | Θ) = P ( x i , y | Θ) � � � log y i =1 i =1 13 15 • We have a model P ( x, y | Θ) : A function that for any x, y, Θ • The maximum-likelihood estimation problem is to find triple returns a probability, which is the probability of seeing Θ ML = arg max Θ ∈ Ω L (Θ) x and y together given parameter settings Θ . • This model defines a joint distribution over x and y , but that we where Ω is a parameter space specifying the set of allowable can also derive a marginal distribution over x alone, defined parameter settings. In the HMM example, Ω would enforce as the restrictions � N j =1 π j = 1 , for all j = 1 . . . ( N − 1) , � P ( x | Θ) = P ( x, y | Θ) � N k =1 a j,k = 1 , and for all j = 1 . . . ( N − 1) , � o ∈ Σ b j ( o ) = 1 . y 14 16

The EM Algorithm Overview • Θ t is the parameter vector at t ’th iteration • Hidden Markov models • Choose Θ 0 (at random, or using various heuristics) • The EM algorithm in general form • Products of multinomial (PM) models • Iterative procedure is defined as Θ t = argmax Θ Q (Θ , Θ t − 1 ) • The EM algorithm for PM models where • The EM algorithm for hidden markov models (dynamic Q (Θ , Θ t − 1 ) = P ( y | x i , Θ t − 1 ) log P ( x i , y | Θ) � � programming) i y ∈Y 17 19 The EM Algorithm Products of Multinomial (PM) Models t = argmax Θ Q (Θ , Θ t − 1 ) , where • Iterative procedure is defi ned as Θ • In a PCFG, each sample point x is a sentence, and each y is a � � Q (Θ , Θ t − 1 ) = P ( y | x i , Θ t − 1 ) log P ( x i , y | Θ) possible parse tree for that sentence. We have i y ∈Y n � P ( x, y | Θ) = P ( α i → β i | α i ) • Key points: i =1 – Intuition: fi ll in hidden variables y according to P ( y | x i , Θ) assuming that ( x, y ) contains the n context-free rules α i → β i – EM is guaranteed to converge to a local maximum, or saddle-point, for i = 1 . . . n . of the likelihood function – In general, if � argmax Θ log P ( x i , y i | Θ) • For example, if ( x, y ) contains the rules S → NP VP , i NP → Jim , and VP → sleeps , then has a simple (analytic) solution, then P ( x, y | Θ) = P ( S → NP VP | S ) × P ( NP → Jim | NP ) × P ( VP → sleeps | VP ) � � P ( y | x i , Θ t − 1 ) log P ( x i , y | Θ) argmax Θ i y also has a simple (analytic) solution. 18 20

Products of Multinomial (PM) Models Overview • HMMs define a model with a similar form. Recall the • Hidden Markov models example in the section on HMMs, where we had the following probability for a particular ( x, y ) pair: • The EM algorithm in general form P ( x, y | Θ) = π 1 a 1 , 2 a 2 , 2 a 2 , 1 a 1 , 3 b 1 ( a ) b 2 ( a ) b 2 ( b ) b 1 ( b ) • Products of multinomial (PM) models • The EM algorithm for PM models • The EM algorithm for hidden markov models (dynamic programming) 21 23 Products of Multinomial (PM) Models The EM Algorithm for PM Models • We will use Θ t to denote the parameter values at the t ’th • In both HMMs and PCFGs, the model can be written in the iteration of the algorithm. following form Θ Count ( x,y,r ) � P ( x, y | Θ) = r • In the initialization step, some choice for initial parameter r =1 ... | Θ | settings Θ 0 is made. Here: • The algorithm then defines an iterative sequence of parameters – Θ r for r = 1 . . . | Θ | is the r ’th parameter in the model Θ 0 , Θ 1 , . . . , Θ T , before returning Θ T as the final parameter – Count ( x, y, r ) for r = 1 . . . | Θ | is a count corresponding settings. to how many times Θ r is seen in the expression for P ( x, y | Θ) . • Crucial detail: deriving Θ t from Θ t − 1 • We will refer to any model that can be written in this form as a product of multinomials (PM) model. 22 24

Recommend

More recommend