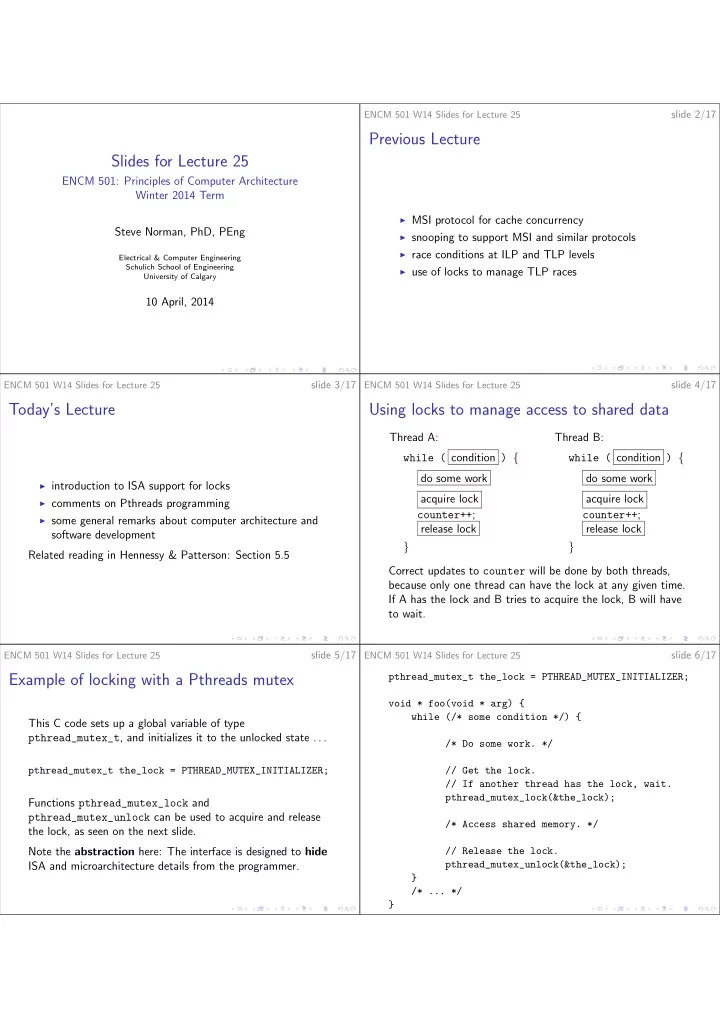

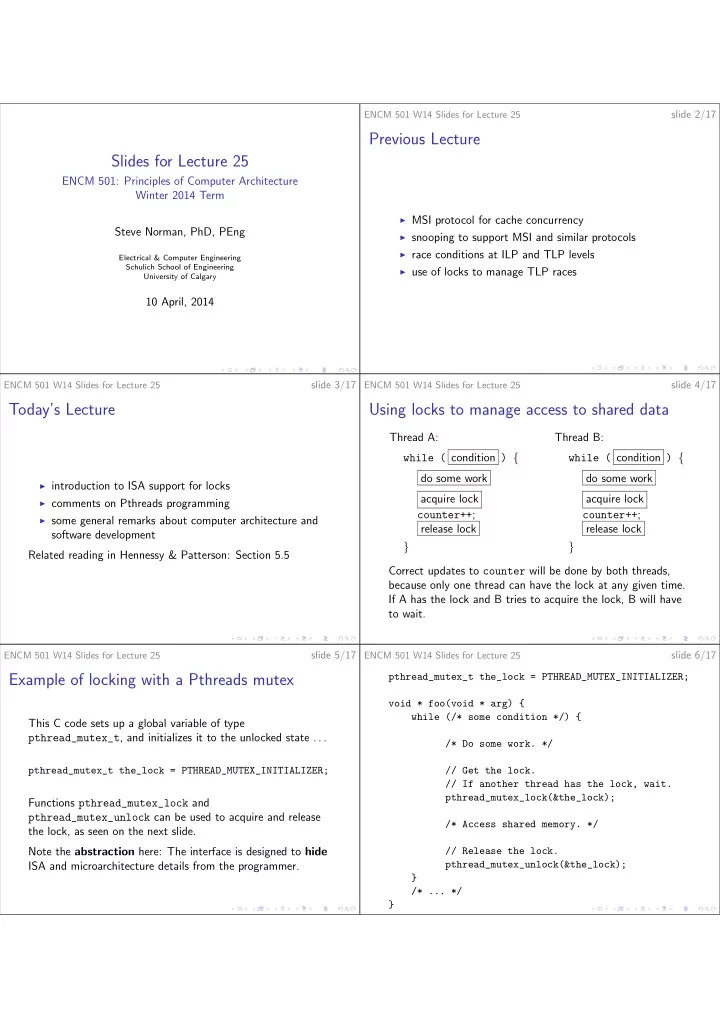

ENCM 501 W14 Slides for Lecture 25

Slides for Lecture 25
ENCM 501: Principles of Computer Architecture
Winter 2014 Term
Steve Norman, PhD, PEng
Electrical & Computer Engineering
Schulich School of Engineering
University of Calgary
10 April, 2014

Slide 2/17: Previous Lecture

◮ MSI protocol for cache concurrency
◮ snooping to support MSI and similar protocols
◮ race conditions at ILP and TLP levels
◮ use of locks to manage TLP races

Slide 3/17: Today’s Lecture

◮ introduction to ISA support for locks
◮ comments on Pthreads programming
◮ some general remarks about computer architecture and software development

Related reading in Hennessy & Patterson: Section 5.5

Slide 4/17: Using locks to manage access to shared data

    Thread A:                    Thread B:
    while ( condition ) {        while ( condition ) {
      do some work                 do some work
      acquire lock                 acquire lock
      counter++ ;                  counter++ ;
      release lock                 release lock
    }                            }

Correct updates to counter will be done by both threads, because only one thread can have the lock at any given time. If A has the lock and B tries to acquire the lock, B will have to wait.

Slide 5/17: Example of locking with a Pthreads mutex

This C code sets up a global variable of type pthread_mutex_t, and initializes it to the unlocked state . . .

    pthread_mutex_t the_lock = PTHREAD_MUTEX_INITIALIZER;

Functions pthread_mutex_lock and pthread_mutex_unlock can be used to acquire and release the lock, as seen on the next slide.

Note the abstraction here: The interface is designed to hide ISA and microarchitecture details from the programmer.

Slide 6/17

    pthread_mutex_t the_lock = PTHREAD_MUTEX_INITIALIZER;

    void * foo(void * arg)
    {
      while (/* some condition */) {
        /* Do some work. */

        // Get the lock.
        // If another thread has the lock, wait.
        pthread_mutex_lock(&the_lock);

        /* Access shared memory. */

        // Release the lock.
        pthread_mutex_unlock(&the_lock);
      }
      /* ... */
    }
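The slides show only fragments. The following is a minimal, self-contained sketch of the Thread A / Thread B pattern from slide 4, written with the Pthreads calls from slides 5 and 6. It assumes a POSIX system and linking with `-pthread`. The names `add_to_counter` and `run_two_threads` are hypothetical and chosen only for illustration.

```c
#include <assert.h>
#include <pthread.h>

/* Shared data and the mutex that protects it, set up as on slide 5. */
static long counter = 0;
static pthread_mutex_t the_lock = PTHREAD_MUTEX_INITIALIZER;

/* Body of Thread A and Thread B; arg points to the iteration count. */
static void *add_to_counter(void *arg)
{
    long iterations = *(const long *)arg;
    for (long i = 0; i < iterations; i++) {
        /* "do some work" would go here, outside the lock. */
        pthread_mutex_lock(&the_lock);    /* acquire lock (may wait) */
        counter++;                        /* only one thread at a time */
        pthread_mutex_unlock(&the_lock);  /* release lock */
    }
    return NULL;
}

/* Hypothetical driver: runs two threads and returns the final count. */
long run_two_threads(long iterations)
{
    pthread_t a, b;
    counter = 0;
    int rc = pthread_create(&a, NULL, add_to_counter, &iterations);
    assert(rc == 0);
    rc = pthread_create(&b, NULL, add_to_counter, &iterations);
    assert(rc == 0);
    (void)rc;  /* silence unused-variable warnings under NDEBUG */
    pthread_join(a, NULL);
    pthread_join(b, NULL);
    return counter;
}
```

With the mutex in place, `run_two_threads(1000000)` returns 2000000 every time. If the lock and unlock calls are deleted, the two threads' `counter++` read-modify-write sequences can interleave, and updates can be lost.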

Slide 7/17: How NOT to set up a lock

    int my_lock = 0; // 0: unlocked; 1: locked.

    void get_lock(void)
    {
      // Keep trying until my_lock == 0.
      while (my_lock != 0)
        ;

      // Aha! The lock is available!
      my_lock = 1;
    }

Why is this approach totally useless?

Slide 8/17: ISA and microarchitecture support for concurrency

Instruction sets have to provide special atomic instructions to allow software implementation of synchronization facilities such as mutexes (locks) and semaphores.

An atomic RMW (read-modify-write) instruction (or a sequence of instructions that is intended to provide the same kind of behaviour, such as MIPS LL/SC) typically works like this:

◮ memory read is attempted at some location;
◮ some kind of write data is generated;
◮ memory write to the same location is attempted.

Slide 9/17

The key aspects of atomic RMW instructions are:

◮ the whole operation succeeds or the whole operation fails, in a clean way that can be checked after the attempt was made;
◮ if two or more threads attempt the operation, such that the attempts overlap in time, one thread will succeed, and all the other threads will fail.

Slide 10/17: MIPS LL and SC instructions

LL (load linked): This is like a normal LW instruction, but it also gets the processor ready for an upcoming SC instruction.

SC (store conditional): The assembler syntax is

    SC GPR1, offset(GPR2)

If SC succeeds, it works like SW, but also writes 1 into GPR1. If SC fails, there is no memory write, and GPR1 gets a value of 0.

The hardware ensures that if two or more threads attempt LL/SC sequences that overlap in time, SC will succeed in only one thread.
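One way to repair `get_lock` from slide 7 is to make the test of `my_lock` and the write of 1 into a single atomic RMW operation. The sketch below assumes a C11 compiler with `<stdatomic.h>`. `atomic_compare_exchange_weak` is allowed to fail spuriously, much like an SC that fails, so it is retried in a loop. On MIPS, compilers typically implement it with an LL/SC sequence. On x86-64, they typically use a LOCK-prefixed CMPXCHG. The helper names `release_lock`, `worker`, and `run_spinlock_demo` are hypothetical.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>

/* 0: unlocked; 1: locked (same encoding as my_lock on slide 7). */
static atomic_int my_lock = 0;

void get_lock(void)
{
    for (;;) {
        /* Read-only spin while the lock is held (compare LL + BNE in the
           MIPS lock code); waiting threads generate no writes here. */
        while (atomic_load_explicit(&my_lock, memory_order_relaxed) != 0)
            ;
        /* Atomic RMW: store 1 only if my_lock is still 0. If another
           thread got there first, or the weak form fails spuriously,
           go around the loop again. */
        int expected = 0;
        if (atomic_compare_exchange_weak(&my_lock, &expected, 1))
            return;
    }
}

/* Hypothetical unlock: an atomic store of 0 releases the lock. */
void release_lock(void)
{
    atomic_store(&my_lock, 0);
}

static long shared_count = 0;

static void *worker(void *arg)
{
    long n = *(const long *)arg;
    for (long i = 0; i < n; i++) {
        get_lock();
        shared_count++;  /* protected by my_lock */
        release_lock();
    }
    return NULL;
}

/* Hypothetical driver: two threads, returns the final count. */
long run_spinlock_demo(long iterations)
{
    pthread_t t1, t2;
    shared_count = 0;
    int rc = pthread_create(&t1, NULL, worker, &iterations);
    assert(rc == 0);
    rc = pthread_create(&t2, NULL, worker, &iterations);
    assert(rc == 0);
    (void)rc;
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return shared_count;
}
```

The default (sequentially consistent) ordering on the compare-exchange and the store also keeps the compiler and hardware from moving `shared_count++` outside the locked region.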
Slide 11/17: Use of LL and SC to lock a mutex

Suppose R9 points to a memory word used to hold the state of a mutex: 0 for unlocked, 1 for locked. Here is code for MIPS, with delayed branch instructions.

    L1: LL   R8, (R9)
        BNE  R8, R0, L1
        ORI  R8, R0, 1
        SC   R8, (R9)
        BEQ  R8, R0, L1
        NOP

Let’s add some comments to explain how this works.

What would the code be to unlock the mutex?

Slide 12/17: Spinlocks

The example on the last slide demonstrates spinning in a loop to acquire a lock.

Suppose Thread A is spinning, waiting to acquire a lock. Then Thread A is occupying a core, using energy, and not really doing any work.

That’s fine if the lock will soon be released. However, if the lock may be held for a long time, a more sophisticated algorithm is better:

◮ Thread spins, but gives up after some fixed number of iterations.
◮ Thread makes a system call to the OS kernel, asking to sleep until the lock is available.
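The spin-then-sleep strategy from slide 12 can be approximated at the Pthreads level. First, call `pthread_mutex_trylock` a bounded number of times. Then fall back to `pthread_mutex_lock`, which blocks the caller until the mutex is available. This is a sketch under assumptions. `SPIN_LIMIT` and the function names are hypothetical. Whether a blocked thread actually sleeps in the kernel is up to the Pthreads implementation; glibc on Linux uses futex system calls for contended mutexes.

```c
#include <assert.h>
#include <errno.h>
#include <pthread.h>

/* Hypothetical tuning parameter: attempts to make before blocking. */
#define SPIN_LIMIT 100

/* Spin a bounded number of times, then let the Pthreads library block. */
void spin_then_block_lock(pthread_mutex_t *m)
{
    for (int i = 0; i < SPIN_LIMIT; i++) {
        int rc = pthread_mutex_trylock(m);
        if (rc == 0)
            return;           /* acquired the lock while spinning */
        assert(rc == EBUSY);  /* EBUSY means another thread holds it */
    }
    /* Give up spinning: block until the mutex is released. */
    pthread_mutex_lock(m);
}

static long spin_counter = 0;
static pthread_mutex_t spin_mutex = PTHREAD_MUTEX_INITIALIZER;

static void *spin_worker(void *arg)
{
    long n = *(const long *)arg;
    for (long i = 0; i < n; i++) {
        spin_then_block_lock(&spin_mutex);
        spin_counter++;
        pthread_mutex_unlock(&spin_mutex);
    }
    return NULL;
}

/* Hypothetical driver: two threads, returns the final count. */
long run_spin_then_block_demo(long iterations)
{
    pthread_t t1, t2;
    spin_counter = 0;
    int rc = pthread_create(&t1, NULL, spin_worker, &iterations);
    assert(rc == 0);
    rc = pthread_create(&t2, NULL, spin_worker, &iterations);
    assert(rc == 0);
    (void)rc;
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return spin_counter;
}
```

A good `SPIN_LIMIT` depends on how long the lock is usually held compared with the cost of a kernel round trip. The value 100 is only a placeholder.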

Slide 13/17: SC and similar instructions have long latencies

In a multicore system, SC, and instructions that are intended for similar purposes in other ISAs (see, for example, CMPXCHG in x86 and x86-64), will necessarily have to inspect some shared global state to determine success or failure.

There is no way to make a safe decision about SC simply by looking within a private cache with one core!

Execution of SC by a core must therefore cause a many-cycle stall in that core. It’s only one instruction, but that doesn’t mean it’s cheap in terms of time!

Slide 14/17: Locks aren’t free, but are often necessary

It should be clear that any kind of variable or data structure that could be written to by two or more threads must be protected by a lock.

Consider a program in which only one thread writes to a variable or data structure, but many threads read that variable or data structure from time to time.

Why might a lock be necessary in this one-writer, many-reader case? What kind of modification to the lock design might improve program efficiency?

Slide 15/17: Comments on Pthreads programming

There’s not much time left, so I won’t try to provide any new details beyond what appeared in assignments and a tutorial.

Some very general comments:

◮ Pthreads was chosen for thread examples in ENCM 501 because the basics can be understood if you have reasonable understandings of C and the concept of virtual address spaces for processes.
◮ To be effective with Pthreads, you need to learn a lot more than just the very basics given in ENCM 501.
◮ There are a lot of alternatives and a lot of innovation currently underway. If your application needs threads, do research on multiple languages and libraries!

Slide 16/17: Useful quotes

This course has mostly been about hardware designs to enhance instruction throughput, and a little about tradeoffs with energy use, chip area, and so on. But that does not mean that speed is important in every part of every system . . .

“The best performance improvement is the transition from the nonworking state to the working state.” (John Ousterhout)

“Premature optimization is the root of all evil (or at least most of it) in programming.” (Donald Knuth)

A little Web search regarding the Knuth quote is very worthwhile: what does it mean, exactly, and what does it not say?

Slide 17/17: Some of the things I hope you take away from this course

Better appreciation for the intense design efforts that have given us today’s astonishingly powerful, complex, and cheap processor chips and memory systems.

A starting point for research on ways to get maximum performance out of current and future hardware, when maximum performance is needed.

A starting point for research, if you are trying to choose the best processor and memory system for a high-performance embedded application.
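For slide 14's one-writer, many-reader case, one possible modification is a reader-writer lock. It lets any number of readers hold the lock at once, while a writer gets exclusive access. A lock is still needed because a reader could otherwise see a half-finished update. The hypothetical `pair_t` below has two fields that the writer keeps consistent (`y == 2 * x`). The sketch uses the POSIX `pthread_rwlock_t` API. `NUM_READERS`, the struct types, and the driver function are illustrative assumptions, not part of the course material.

```c
/* Request POSIX.1-2008 declarations (including rwlocks) from the headers. */
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>

/* Hypothetical shared data; the writer maintains y == 2 * x. */
typedef struct {
    long x;
    long y;
} pair_t;

static pair_t shared_pair = {0, 0};
static pthread_rwlock_t pair_lock = PTHREAD_RWLOCK_INITIALIZER;

/* The single writer: takes the lock exclusively for each update. */
static void *pair_writer(void *arg)
{
    long n = *(const long *)arg;
    for (long i = 1; i <= n; i++) {
        pthread_rwlock_wrlock(&pair_lock);
        shared_pair.x = i;       /* without the lock, a reader could run */
        shared_pair.y = 2 * i;   /* between these two stores */
        pthread_rwlock_unlock(&pair_lock);
    }
    return NULL;
}

typedef struct {
    long reads;  /* how many snapshots to take */
    long bad;    /* snapshots that violated y == 2 * x */
} reader_job_t;

/* A reader: many readers may hold the read lock at the same time. */
static void *pair_reader(void *arg)
{
    reader_job_t *job = arg;
    job->bad = 0;
    for (long i = 0; i < job->reads; i++) {
        pthread_rwlock_rdlock(&pair_lock);
        pair_t snap = shared_pair;
        pthread_rwlock_unlock(&pair_lock);
        if (snap.y != 2 * snap.x)
            job->bad++;
    }
    return NULL;
}

#define NUM_READERS 4

/* Hypothetical driver: returns the total number of inconsistent snapshots. */
long run_rwlock_demo(long writes, long reads_per_reader)
{
    pthread_t w, r[NUM_READERS];
    reader_job_t jobs[NUM_READERS];
    long bad = 0;
    int rc = pthread_create(&w, NULL, pair_writer, &writes);
    assert(rc == 0);
    for (int i = 0; i < NUM_READERS; i++) {
        jobs[i].reads = reads_per_reader;
        rc = pthread_create(&r[i], NULL, pair_reader, &jobs[i]);
        assert(rc == 0);
    }
    (void)rc;
    pthread_join(w, NULL);
    for (int i = 0; i < NUM_READERS; i++) {
        pthread_join(r[i], NULL);
        bad += jobs[i].bad;
    }
    return bad;
}
```

Whether a reader-writer lock actually beats a plain mutex depends on how long readers hold the lock and on the implementation's overhead. It is worth measuring rather than assuming, in the spirit of the Knuth quote.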