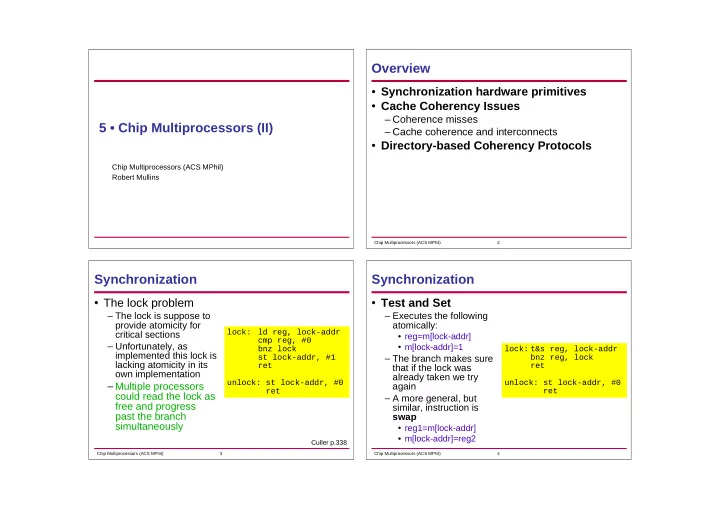

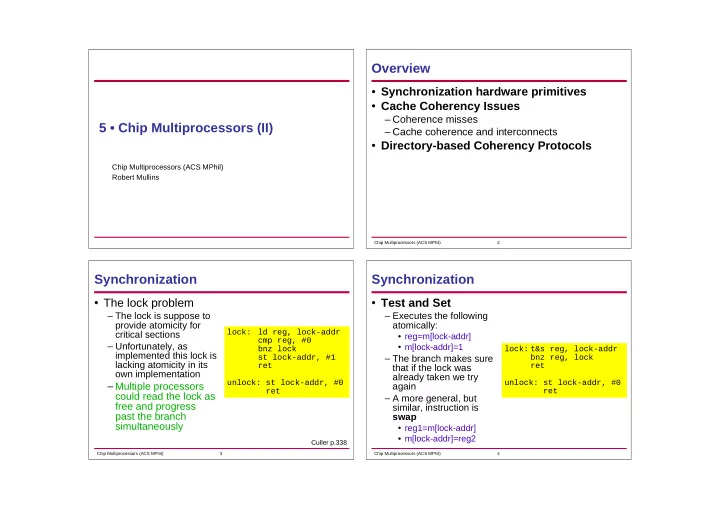

Overview • Synchronization hardware primitives • Cache Coherency Issues – Coherence misses 5 • Chip Multiprocessors (II) – Cache coherence and interconnects • Directory-based Coherency Protocols Chip Multiprocessors (ACS MPhil)‐ Robert Mullins Chip Multiprocessors (ACS MPhil)‐ 2 Synchronization Synchronization • The lock problem • Test and Set – The lock is suppose to – Executes the following provide atomicity for atomically: lock: ld reg, lock-addr critical sections • reg=m[lock-addr] cmp reg, #0 – Unfortunately, as • m[lock-addr]=1 bnz lock lock: t&s reg, lock-addr implemented this lock is st lock-addr, #1 – The branch makes sure bnz reg, lock lacking atomicity in its ret ret that if the lock was own implementation already taken we try unlock: st lock-addr, #0 unlock: st lock-addr, #0 – Multiple processors again ret ret could read the lock as – A more general, but free and progress similar, instruction is past the branch swap simultaneously • reg1=m[lock-addr] • m[lock-addr]=reg2 Culler p.338 Chip Multiprocessors (ACS MPhil)‐ 3 Chip Multiprocessors (ACS MPhil)‐ 4

Synchronization Synchronization • We could implement test&set with two bus • If we assume an invalidation-based CC protocol with transactions a WB cache, a better approach is to: – A read and a write transaction – Issue a read exclusive (BusRdX) transaction then perform the read and write (in the cache)‐ without – We could lock down the bus for these two cycles to giving up ownership ensure the sequence is atomic – Any incoming requests to the block are buffered until – More difficult with a split-transaction bus the data is written in the cache • performance and deadlock issues • Any other processors are forced to wait Culler p.391 Chip Multiprocessors (ACS MPhil)‐ 5 Chip Multiprocessors (ACS MPhil)‐ 6 Synchronization LL/SC • Other common synchronization instructions: • LL/SC – swap – Load-Linked (LL) • Read memory – fetch&op • Set lock flag and put address in lock register • fetch&inc – Intervening writes to the address in the lock register will • fetch&add cause the lock flag to be reset – compare&swap – Store-Conditional (SC) – Many x86 instructions can be prefixed with the • Check lock flag to ensure an intervening conflicting write “lock” modifier to make them atomic has not occurred • A simpler general purpose solution? • If lock flag is not set, SC will fail If (atomic_update) then mem[addr]=rt, rt=1 else rt=0 Chip Multiprocessors (ACS MPhil)‐ 7 Chip Multiprocessors (ACS MPhil)‐ 8

LL/SC LL/SC reg2=1 lock: ll reg1, lock-addr bnz reg1, lock ; lock already taken? sc lock-addr, reg2 beqz lock ; if SC failed goto lock ret unlock: st lock-addr, #0 ret This SC will fail as the lock flag will be reset by the store from P2 Culler p.391 Chip Multiprocessors (ACS MPhil)‐ 9 Chip Multiprocessors (ACS MPhil)‐ 10 LL/SC LL/SC • LL/SC can be implemented using the CC protocol: • Need to ensure forward progress. May prevent LL giving up M state for n cycles (or after repeated fails – LL – loads cache line with write permission (issues guarantee success, i.e. simply don't give up M state)‐ BusRdX, holds line in state M)‐ • We normally implement a restricted form of LL/SC – SC – Only succeeds if cache line is still in state M, called RLL/RSC: otherwise fails – SC may experience spurious failures • e.g. due to context switches and TLB misses – We add restrictions to avoid cache line (holding lock variable)‐ from being replaced • Disallow memory memory-referencing instructions between LL and SC • Prohibit out-of-order execution between LL and SC Chip Multiprocessors (ACS MPhil)‐ 11 Chip Multiprocessors (ACS MPhil)‐ 12

Coherence misses True sharing • Remember your 3 C's! • A block typically contains many words ( e.g. 4- 8)‐. Coherency is maintained at the granularity – Compulsory of cache blocks • Cold-start of first-reference misses – Capacity – True sharing miss • Misses that arise from the communication of data • If cache is not large enough to store all the blocks needed during the execution of the program • e.g., the 1 st write to a shared block (S)‐ will causes an – Conflict (or collision)‐ invalidation to establish ownership • Additionally, subsequent reads of the invalidated block • Conflict misses occur due to direct-mapped or set by another processor will also cause a miss associative block placement strategies • Both these misses are classified as true sharing if data – Coherence is communicated and they would occur irrespective of • Misses that arise due to interprocessor communication block size. Chip Multiprocessors (ACS MPhil)‐ 13 Chip Multiprocessors (ACS MPhil)‐ 14 False sharing Cache coherence and interconnects • False sharing miss • Broadcast-based snoopy protocols – Different processors are writing and reading – These protocols rely on bus-based interconnects different words in a block, but no communication is • Buses have limited scalability taking place • Energy and bandwidth implications of broadcasting • e.g. a block may contain words X and Y – They permit direct cache-to-cache transfers • P1 repeatedly writes to X, P2 repeatedly writes to Y • Low-latency communication • The block will be repeatedly invalidated (leading to – 2 “hops” cache misses)‐ even though no communication is taking » 1. broadcast place » 2. receive data from remote cache – These are false misses and are due to the fact that • Very useful for applications with lots of fine-grain the block contains multiple words sharing • They would not occur if the block size = a single word For more details see “Coherence miss classification for performance debugging in multi-core processors”, Venkataramani et al. Interact-2013 Chip Multiprocessors (ACS MPhil)‐ 15 Chip Multiprocessors (ACS MPhil)‐ 16

Cache coherence and interconnects Cache coherence and interconnects • Totally-ordered interconnects – All messages are delivered to all destinations in the same order. Totally-ordered interconnects often employ a centralised arbiter or switch – e.g. a bus or pipelined broadcast tree – Traditional snoopy protocols are built around the concept of a bus (or virtual bus)‐: • (1)‐ Broadcast - All transactions are visible to all components connected to the bus • (2)‐ The interconnect provides a total order of messages A pipelined broadcast tree is sufficiently similar to a bus to support traditional snooping protocols. [Reproduced from Milo Martin's PhD thesis (Wisconsin)‐] The centralised switch guarantees a total ordering of messages, i.e. messages are sent to the root switch then broadcast. Chip Multiprocessors (ACS MPhil)‐ 17 Chip Multiprocessors (ACS MPhil)‐ 18 Cache coherence and interconnects Directory-based cache coherence • Unordered interconnects • The state of the blocks in each cache in a snoopy protocol is maintained by broadcasting – Networks (e.g. mesh, torus)‐ can't typically provide strong ordering guarantees, i.e. nodes don't perceive all memory operations on the bus transactions in a single global order. • We want to avoid the need to broadcast. So • Point-to-point ordering maintain the state of each block explicitly – Networks may be able to ensure messages sent – We store this information in the directory between a pair of nodes are guaranteed not to be – Requests can be made to the appropriate directory reordered. entry to read or write a particular block – e.g. a mesh with a single VC and deterministic – The directory orchestrates the appropriate actions dimension ordered (XY)‐ routing necessary to satisfy the request Chip Multiprocessors (ACS MPhil)‐ 19 Chip Multiprocessors (ACS MPhil)‐ 20

Directory-based cache coherence Broadcast-based directory protocols • The directory provides a per-block ordering • A number of recent coherence protocols broadcast transactions over unordered interconnects: point to resolve races – Similar to snoopy coherence protocols – All requests for a particular block are made to the – They provide a directory, or coherence hub, that serves as same directory. The directory decides the order an ordering point. The directory simply broadcasts requests the requests will be satisfied. Directory protocols to all nodes (no sharer state is maintained)‐ can operate over unordered interconnects – The ordering point also buffers subsequent coherence requests to the same cache line to prevent races with a request in progress – e.g. early example AMD's Hammer protocol – High bandwidth requirements, but simple, no need to maintain/read sharer state Chip Multiprocessors (ACS MPhil)‐ 21 Chip Multiprocessors (ACS MPhil)‐ 22 Directory-based cache coherence Directory-based cache coherence • The directory keeps track of who has a copy of Read Miss to a the block and their states block in a modified state in a cache – Broadcasting is replaced by cheaper point-to-point (Culler, Fig. 8.5)‐ communications by maintaining a list of sharers – The number of invalidations on a write is typically small in real applications, giving us a significant reduction in communication costs (esp. in systems with a large number of processors)‐ An example of a simple protocol. This is only meant to introduce the concept of a directory Chip Multiprocessors (ACS MPhil)‐ 23 Chip Multiprocessors (ACS MPhil)‐ 24

Recommend

More recommend