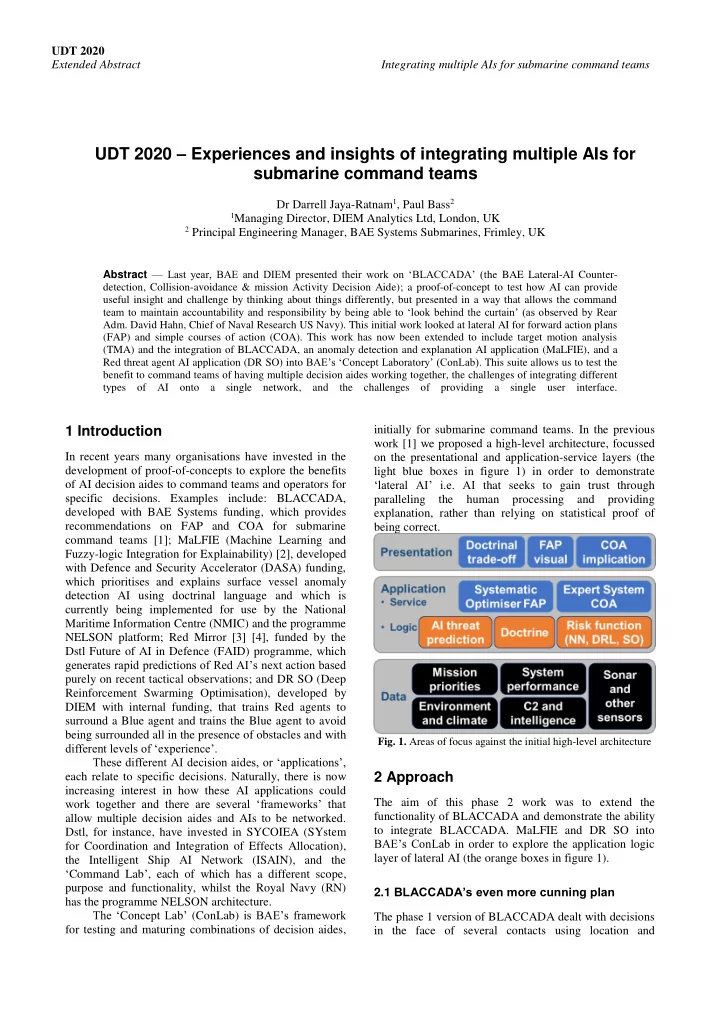

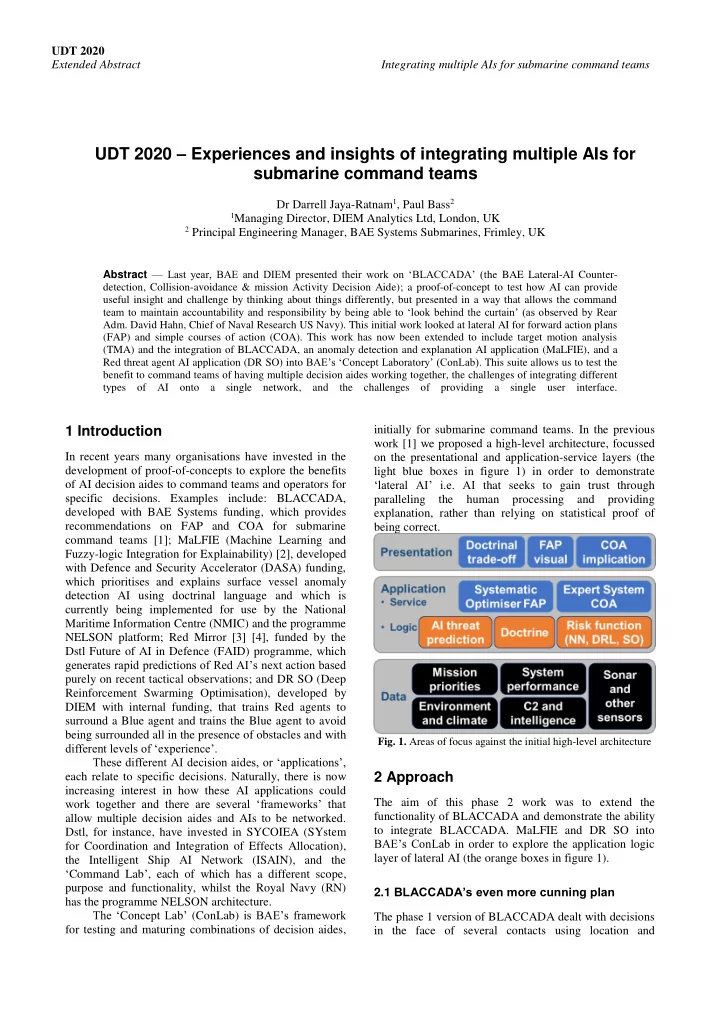

UDT 2020 Extended Abstract Integrating multiple AIs for submarine command teams

UDT 2020 – Experiences and insights of integrating multiple AIs for submarine command teams

Dr Darrell Jaya-Ratnam 1, Paul Bass 2

1 Managing Director, DIEM Analytics Ltd, London, UK
2 Principal Engineering Manager, BAE Systems Submarines, Frimley, UK

Abstract: Last year, BAE and DIEM presented their work on ‘BLACCADA’ (the BAE Lateral-AI Counter-detection, Collision-avoidance & mission Activity Decision Aide), a proof-of-concept to test how AI can provide useful insight and challenge by thinking about things differently, while presenting its results in a way that allows the command team to maintain accountability and responsibility by being able to ‘look behind the curtain’ (as observed by Rear Adm. David Hahn, Chief of Naval Research, US Navy). This initial work looked at lateral AI for forward action plans (FAP) and simple courses of action (COA). This work has now been extended to include target motion analysis (TMA) and the integration of BLACCADA, an anomaly detection and explanation AI application (MaLFIE), and a Red threat agent AI application (DR SO) into BAE’s ‘Concept Laboratory’ (ConLab). This suite allows us to test the benefit to command teams of having multiple decision aides working together, the challenges of integrating different types of AI onto a single network, and the challenges of providing a single user interface.

1 Introduction

In recent years many organisations have invested in the development of proofs of concept to explore the benefits of AI decision aides to command teams and operators for specific decisions. Examples include: BLACCADA, developed with BAE Systems funding, which provides recommendations on FAP and COA for submarine command teams [1]; MaLFIE (Machine Learning and Fuzzy-logic Integration for Explainability) [2], developed with Defence and Security Accelerator (DASA) funding, which prioritises and explains the output of surface vessel anomaly detection AI using doctrinal language and which is currently being implemented for use by the National Maritime Information Centre (NMIC) and the programme NELSON platform; Red Mirror [3] [4], funded by the Dstl Future of AI in Defence (FAID) programme, which generates rapid predictions of Red AI’s next action based purely on recent tactical observations; and DR SO (Deep Reinforcement Swarming Optimisation), developed by DIEM with internal funding, which trains Red agents to surround a Blue agent and trains the Blue agent to avoid being surrounded, all in the presence of obstacles and with different levels of ‘experience’.

These different AI decision aides, or ‘applications’, each relate to specific decisions. Naturally, there is now increasing interest in how these AI applications could work together, and there are several ‘frameworks’ that allow multiple decision aides and AIs to be networked. Dstl, for instance, have invested in SYCOIEA (SYstem for Coordination and Integration of Effects Allocation), the Intelligent Ship AI Network (ISAIN), and the ‘Command Lab’, each of which has a different scope, purpose and functionality, whilst the Royal Navy (RN) has the programme NELSON architecture.

The ‘Concept Lab’ (ConLab) is BAE’s framework for testing and maturing combinations of decision aides, initially for submarine command teams. In the previous work [1] we proposed a high-level architecture, focussed on the presentational and application-service layers (the light blue boxes in figure 1), in order to demonstrate ‘lateral AI’, i.e. AI that seeks to gain trust through paralleling the human processing and providing explanation, rather than relying on statistical proof of being correct.

Fig. 1. Areas of focus against the initial high-level architecture

2 Approach

The aim of this phase 2 work was to extend the functionality of BLACCADA and demonstrate the ability to integrate BLACCADA, MaLFIE and DR SO into BAE’s ConLab in order to explore the application logic layer of lateral AI (the orange boxes in figure 1).

2.1 BLACCADA’s even more cunning plan

The phase 1 version of BLACCADA dealt with decisions in the face of several contacts using location and movement data, and taking into account uncertainty of these parameters. The FAP then indicated safe and unsafe zones over time, based on these contact parameters, to explain the minimum risk route to a mission essential location to the submarine command team. Once the desired location had been reached, the COA provided tactical recommendations on the specific actions to take as each new piece of information on nearby contacts was received.

For phase 2 a number of updates were made. TMA recommendations, based on the Ekelund ranging method, were added to both the FAP and COA in order to decrease the uncertainty of the location and movement inputs for a particular contact. Functions to handle a larger number of contacts, update and confirm mission details, and record and save specific locations in a route were incorporated in the FAP. Finally, the option of ‘going deep’ was added as a potential COA. Figure 2 shows screen-shots from the phase 2 FAP and COA.

Fig. 2. Screen shot of the updated BLACCADA FAP (top) and COA (bottom) including TMA and ‘go deep’ COAs

2.2 MaLFIE anomaly detection and explanation

The contact details input to BLACCADA include location, movement and type. All of these have uncertainty associated with them. The MaLFIE application was chosen as a potential means of reducing this uncertainty. MaLFIE phase 1 was a DASA funded proof-of-concept which takes AIS (Automatic Identification System) data, uses bespoke or standard ‘anomaly detection’ algorithms to establish patterns of life (POL) for different vessel types, and then provides an explanation and prioritisation across all the anomalous surface vessels identified by these algorithms. The key features of the MaLFIE explanation are that it can generate explanations for any existing anomaly detection system (it has been tested with clustering and Deep Reinforcement Learning (DRL) algorithms) and that it generates ‘explanations’ in a narrative/doctrinal language that military operators can understand and use, whereas other ‘AI explanation’ techniques, e.g. RETAIN and LIME, seek to ‘explain’ through numbers (sensitivities and probabilities) which are only useful to data scientists. Figure 3 shows the MaLFIE phase 1 front end, indicating colour coding of vessels of different levels of ‘anomality’, the prioritisation of the anomaly, and the natural language explanation of the driving factors of the anomaly scores output by the AIs chosen.

Fig. 3. Screen shot of the phase 1 MaLFIE application front end (user-interface developed by BMT under contract to DIEM)

For this project the backend algorithms of MaLFIE phase 1, developed by DIEM, were integrated into ConLab so that they could use any sensor data, e.g. AIS and radar, in order to provide the submarine command team with insights into the pattern of life of different types of vessels, the extent to which an individual vessel’s behaviour is anomalous, and why, so that they may better ‘weight’ the different zones and COAs from BLACCADA.

2.3 DR SO threat agent simulation

BLACCADA uses the observed contact movements fed in from the ConLab environment. Currently these contact movements are driven by pre-described scenarios or simple behaviour rules from, for instance, the ‘Command Modern Air/Naval Operations’ game. DR SO was developed by DIEM to provide a ‘Red threat agent’ for use on ‘counter-AI AI’ studies such as ‘Red Mirror’ [3]. It was incorporated into the ConLab to provide an automated threat which can be trained to deal with specific scenarios and missions.

Figure 4 illustrates the key features of DR SO. It trains multiple Red agents (the red circles) to ‘swarm’ a single Blue agent (the blue circle) in the presence of obstacles (the large black circles). Simultaneously, the single Blue agent learns to avoid being swarmed. Note that in the DR SO context, swarming refers to ‘surrounding’ Blue so that it is trapped, whereas other ‘swarming’ algorithms are actually used to ‘flock’.

The DR SO algorithm was integrated into the ConLab to simulate challenging Red threats representing, for instance, multiple torpedoes coordinating an attack, or multiple ASW vessels, e.g. frigates, future ASW drones and dipping-sonar platforms, coordinating a submarine search.
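To make the distinction between ‘surrounding’ and ‘flocking’ concrete, the following is a minimal sketch of one possible geometric test for whether a Blue agent is surrounded. It is not the DR SO reward or termination condition, which is not described here; the function names, the 120-degree gap threshold and the range cut-off are all illustrative assumptions, and obstacles are ignored.

```python
import math

def largest_bearing_gap(blue, reds):
    """Largest angular gap (radians) between neighbouring Red bearings seen from Blue."""
    bearings = sorted(math.atan2(ry - blue[1], rx - blue[0]) for rx, ry in reds)
    if len(bearings) < 2:
        # Zero or one Red agent leaves the whole circle open.
        return 2 * math.pi
    gaps = [b2 - b1 for b1, b2 in zip(bearings, bearings[1:])]
    # Close the circle: gap from the last bearing back round to the first.
    gaps.append(2 * math.pi - (bearings[-1] - bearings[0]))
    return max(gaps)

def is_surrounded(blue, reds, max_gap_deg=120.0, max_range=5.0):
    """Hypothetical criterion: Blue is 'surrounded' when the nearby Red agents
    leave no escape sector wider than max_gap_deg (assumed values, no obstacles)."""
    close = [r for r in reds if math.dist(blue, r) <= max_range]
    return largest_bearing_gap(blue, close) < math.radians(max_gap_deg)

if __name__ == "__main__":
    blue = (0.0, 0.0)
    ring = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0)]
    one_side = [(1.0, 0.0), (1.0, 1.0), (1.0, -1.0)]
    print(is_surrounded(blue, ring))      # Reds on all four sides
    print(is_surrounded(blue, one_side))  # Reds grouped on one side only
```

Under this assumed criterion, a group of Red agents that merely ‘flocks’ together on one side of Blue leaves a wide open sector and does not count as surrounding it, whereas Red agents spread around Blue do.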
