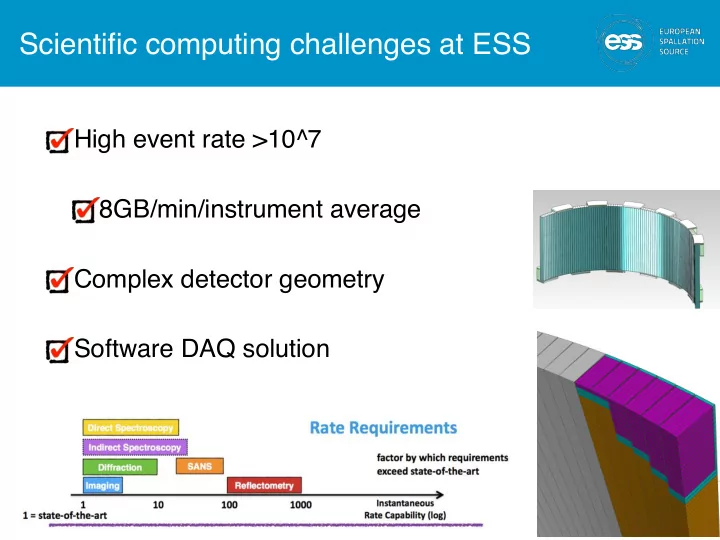

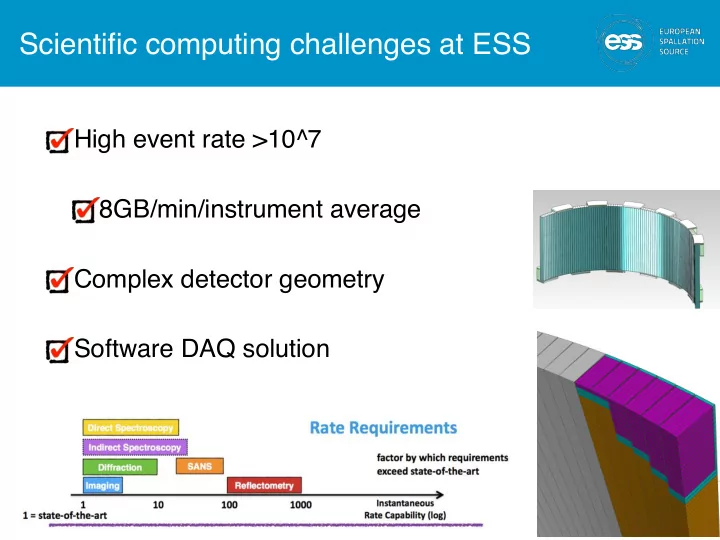

Scientific computing challenges at ESS
• High event rate >10^7
• 8 GB/min/instrument average
• Complex detector geometry
• Software DAQ solution

Mantid development at the ESS
• Construction phase
  • Core framework development
  • MPI distribution
  • Maintainability
  • Integration with data acquisition & experiment control
  • Contributions to project (4.0 development)
• Operations (post 2019)
  • Instrument / class functionality development
  • Deployment
  • ESS specific data corrections

ESS Mantid usage
• User workbench for
  • Data reduction for event mode and histograms
  • Data visualisation & inspection
  • Simple fitting
• Integration with ECP
• Integration with cluster based reduction

DMSC Organisation, construction phase
[Organisation chart. Groups under DMSC: Data Systems & Technologies; DAQ & Data Management; Instrument Data; Data Analysis & Modeling; Project admin. FTE figures on the chart: 6, 13, 8, 11, 3.]
• Responsibilities shown on the chart: Copenhagen Data Centre; DMSC servers in Lund; Clusters, Workstations; Disks, Parallel File System, Database Servers; Networks (incl. Lund – CPH); Data transfer, Back-up & Archive; External facing Servers; Instrument Control User Interfaces; Detector readout; Data Acquisition; File writers (NeXus); Live Visualization; Data reduction (MANTID); Data curation; User Program Software – Proposal & Scheduling Systems; Analysis codes; MCSTAS support + dev.; Project support; Budget & schedule; Meeting organisation
• Construction budget 20 million euro + SINE2020 (40pm) + BrightnESS (23py)

Moving into operations
[Organisation chart. Group labels under DMSC: Data Systems & Technologies; Experiment control; Data curation; Data reduction and data analysis; Scientific modelling and simulation; Project admin (Petra Aulin); User office support. FTE figures on the chart: 10, 13, 10, +10, 4, 3, 4.]
• Responsibilities shown on the chart: storage and compute support; data centre operations (Lund and CPH); inter site connection network; security; software deployment; readout, DAQ and control support and maintenance; data management tools; data curation; data reduction & visualisation support and development; data analysis support & development; MD and a-priori simulation; project support; budget & schedule; meeting organisation; user office solution; user database & proposal system; visit system; sample tracking
• Construction - Build capacity and capability to support a user programme
• Operations - Deliver a supported user programme

Data flow from detectors, SE, choppers and motion control to disk, Mantid & analysis
[Diagram. Labels recoverable from the figure:]
• Detectors, FEA - FE-BE, detector data signal interface, event formation unit(s) (pixel positioning)
• Aggregation of frames of events & meta data: Apache Kafka aggregator
• Fast SE readout and fast SE & motion data interface, i.e. data with a latency that is outside of spec for EPICS
• Time stamped signals from Control Box (motion axis) and ChiC (choppers), ERC, IOC(s)
• Accelerator data, PSS status, sample environment PLC, instrument PV access gateway
• Kafka consumers: file writing service (NeXus file) and Mantid subscriber
• Data reduction: high speed automated reduction, data correction, visualisation of reduced data; API config
• Processed neutron data and meta data, analysis interface, analysis file, data analysis codes layer, visualisation of analysed data
• Python based experiment control system & visualisation of detector data; WEB; DAQ and file writing service control & configuration
• Reduction / analysis catalogue, DOI, PFS, DB, User Office DB
• ICS software: CCDB, IOC factory, naming
• Group labels: ICS, DM, ID, SCR, DAM, DST, Detector group

ESS data pipeline
[Diagram. Labels recoverable from the figure:]
• Instrument hardware: Detector, Event Formation Unit, Detector Backend, Chopper, Motion
• Links: 10 GB/s, MRF fibre, EPICS, MRF EPICS Bridge
• DMSC hardware: Data Aggregation (Kafka), NeXus File Writing, Mantid reduction (MPI + Kafka listener)
• Outputs: Mantid automatic reduction, instrument view of live data, live feedback to experiment control

BrightnESS is funded by the European Union’s Horizon 2020 research and innovation programme under grant agreement No. 676548

Proof of concept
• Streaming a NeXus file through Kafka to Mantid
• Scalable performance ~4x10^7 events/s
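The batch-wise streaming idea behind the proof of concept can be illustrated with a minimal sketch. It is not the ESS implementation: an in-process `queue.Queue` stands in for the Kafka topic, and the event layout `(detector_id, tof)`, the batch size and the function names `produce` and `consume` are all assumptions made for illustration.

```python
from collections import Counter
from queue import Queue


def produce(events, batch_size, topic):
    """Publish (detector_id, tof) events to the queue in fixed-size batches."""
    for start in range(0, len(events), batch_size):
        topic.put(events[start:start + batch_size])
    topic.put(None)  # sentinel marking the end of the run (assumption)


def consume(topic):
    """Accumulate per-detector counts batch by batch until the sentinel arrives."""
    counts = Counter()
    while True:
        batch = topic.get()
        if batch is None:
            break
        counts.update(det_id for det_id, _tof in batch)
    return counts


# Ten synthetic events spread over four hypothetical detector IDs.
events = [(i % 4, 1000.0 + i) for i in range(10)]
topic = Queue()  # stand-in for a Kafka topic
produce(events, batch_size=3, topic=topic)
counts = consume(topic)
```

The consumer never needs the whole run in memory, which is the property that lets a live listener keep up with an event stream.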

The Mantid Project
• Neutron specific data treatment framework
• Standardised beyond data format
• Event data capable
• Live view
• Complete instrument geometry
• nD data visualisation
• Data and software curation
• App based UI
• Python interface
• Jupyter notebook
• MPL graphing

Software Sustainability Institute review of Mantid
• Keep the GUI - improve it
• Increase maintainability
• Increase stability & performance

Mantid performance requirements
• Live data reduction for an event rate of >10^7
• Filter good events from bad events
• Capability for handling of complex geometries
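One common way to separate good events from bad ones is to keep only events whose pulse time falls inside known-good time intervals, for example periods when a sample environment value was within range. The following is a minimal sketch of that idea, not Mantid's implementation; the event layout `(pulse_time, tof)`, the interval list and the function name `filter_events` are assumptions.

```python
from bisect import bisect_right


def filter_events(events, good_intervals):
    """Keep events whose pulse time lies in a half-open [start, stop) interval.

    events: list of (pulse_time, tof) tuples (hypothetical layout).
    good_intervals: sorted, non-overlapping (start, stop) pairs.
    """
    starts = [start for start, _stop in good_intervals]
    kept = []
    for pulse_time, tof in events:
        # Index of the last interval starting at or before this pulse.
        idx = bisect_right(starts, pulse_time) - 1
        if idx >= 0 and pulse_time < good_intervals[idx][1]:
            kept.append((pulse_time, tof))
    return kept


events = [(0.5, 1200.0), (1.5, 900.0), (2.5, 4000.0), (3.0, 100.0)]
good = [(0.0, 1.0), (2.0, 3.0)]
kept = filter_events(events, good)
```

The binary search keeps the per-event cost logarithmic in the number of intervals, which matters at event rates of the order quoted above.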

Safety first
• Functional safety
• Developer freedom
• The road to success is paved with …

HistogramData
• Started by Simon Heybrock
• Bug found in ConvertUnits
• Improves speed and memory overhead
• Conceptual correctness and type safety
• Introduced in Mantid 3.8 (October 2016)
• Now rolled out to the Mantid framework and to be distributed as part of Mantid 3.10.0, with a large collaboration effort
In collaboration with STFC

Mantid development objectives
• Mantid has
  • Geometry
  • Data types
  • Algorithms
• Create a common MPI implementation
• Introduce type safety

Initial MPI tests
• MPI more effective than threads
• A number of ways to achieve load balance
• We balance on spectra
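Balancing on spectra means each MPI rank owns a subset of the spectra and the events in them. The slide does not say which partitioning scheme is used; the sketch below assumes a simple round-robin assignment and simulates the ranks in plain Python rather than calling an MPI library. The names `spectra_for_rank` and `rank_loads` are hypothetical.

```python
def spectra_for_rank(n_spectra, n_ranks, rank):
    """Round-robin assignment: rank r owns spectra r, r + n_ranks, ..."""
    return list(range(rank, n_spectra, n_ranks))


def rank_loads(event_counts, n_ranks):
    """Total number of events each rank would process under this assignment."""
    n_spectra = len(event_counts)
    return [
        sum(event_counts[i] for i in spectra_for_rank(n_spectra, n_ranks, rank))
        for rank in range(n_ranks)
    ]


# Synthetic per-spectrum event counts with a strongly illuminated region.
event_counts = [100, 90, 80, 70, 10, 10, 10, 10]
loads = rank_loads(event_counts, n_ranks=4)
# Ratio of the busiest rank to the mean load; 1.0 would be perfect balance.
imbalance = max(loads) / (sum(loads) / len(loads))
```

Interleaving spreads neighbouring, similarly illuminated spectra over different ranks; a contiguous block split of the same data would put all four bright spectra on two ranks.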

MPI bottlenecks, Instrument 2.0
• Instrument stores geometry and meta data
  • r,t,p, spectrum ID map, isMasked…
• Complex detector geometries
  • Described within the framework (PixID # to Spectrum # map)
• Current Instrument implementation is rather not nice
  • Parameter map is large / complex
  • Organically developed

Instrument 2.0 refactor
• Reengineered parameter map implementation
  • For current & future use cases
  • MPI uses in particular
  • Scanning instruments
• Cached info layers
  • Detector
  • Spectra
  • Mask
• Cleaner interface for developers
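The idea of cached info layers can be sketched as flat, index-based arrays that are computed once and then queried cheaply, instead of walking a parameter map on every lookup. The classes below are hypothetical illustrations, not Mantid's interfaces; they assume the sample sits at the origin and that a spectrum counts as masked only when all of its detectors are masked.

```python
import math


class DetectorLayer:
    """Per-detector data cached in flat lists indexed by detector index."""

    def __init__(self, positions):
        # Sample-to-detector distance, computed once (sample assumed at origin).
        self._l2 = [math.sqrt(x * x + y * y + z * z) for x, y, z in positions]
        self._masked = [False] * len(positions)

    def l2(self, index):
        return self._l2[index]

    def is_masked(self, index):
        return self._masked[index]

    def set_masked(self, index, masked=True):
        self._masked[index] = masked


class SpectrumLayer:
    """Per-spectrum view built on the detector layer and a spectrum-to-detector map."""

    def __init__(self, detectors, spectrum_definitions):
        self._detectors = detectors
        self._definitions = spectrum_definitions  # list of detector index lists

    def l2(self, spectrum):
        indices = self._definitions[spectrum]
        return sum(self._detectors.l2(i) for i in indices) / len(indices)

    def is_masked(self, spectrum):
        return all(self._detectors.is_masked(i) for i in self._definitions[spectrum])


detectors = DetectorLayer([(0.0, 0.0, 1.0), (0.0, 3.0, 4.0), (0.0, 0.0, 2.0)])
spectra = SpectrumLayer(detectors, [[0, 1], [2]])
detectors.set_masked(2)
```

Because the layers hold plain arrays, they are also straightforward to partition or copy across MPI ranks.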

So far so good…
• Highlights the cost of refactoring
• Significant improvements across Mantid
• ILL can load interlaced scans from D2B into Matrix
• Direct geometry workflow x2 faster
[Figure: runtime comparisons for masking detectors and for unit conversion]

Step Scanning Instruments
• Core framework changes complete as of March 2017
• Performed as part of sub collaboration with ILL
• Intended to be shipped as part of Mantid 3.10.0 in June 2017
In collaboration with STFC

Next steps
• Production MPI design
  • Matrix workspace
  • MD workspace
• Integration of DAQ into ECP
• User experience
  • How will users interact with our DAQ, reduction and analysis systems at ESS?
