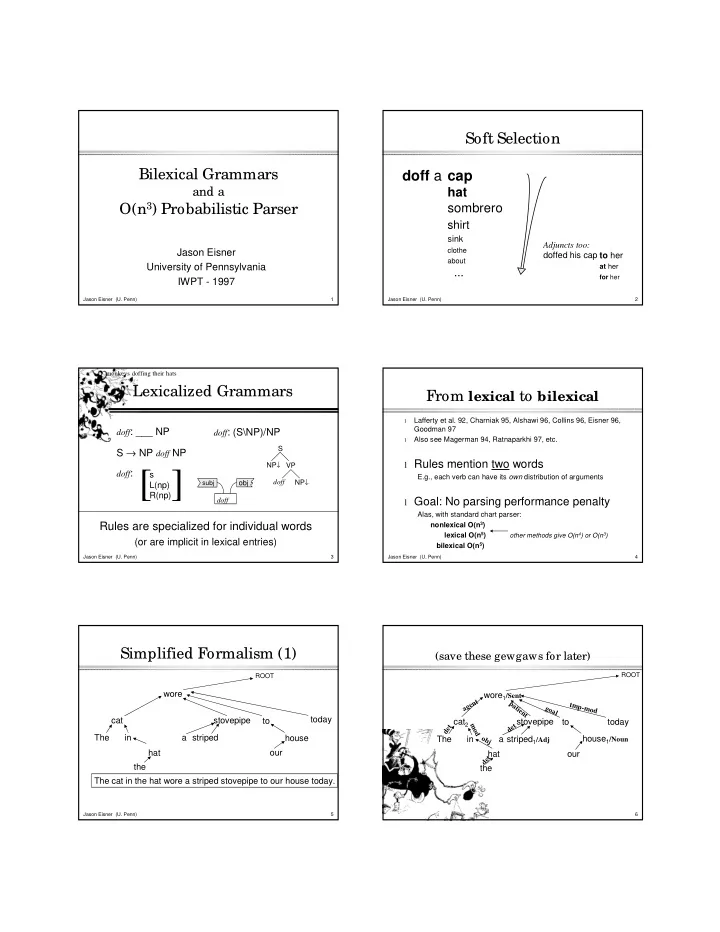

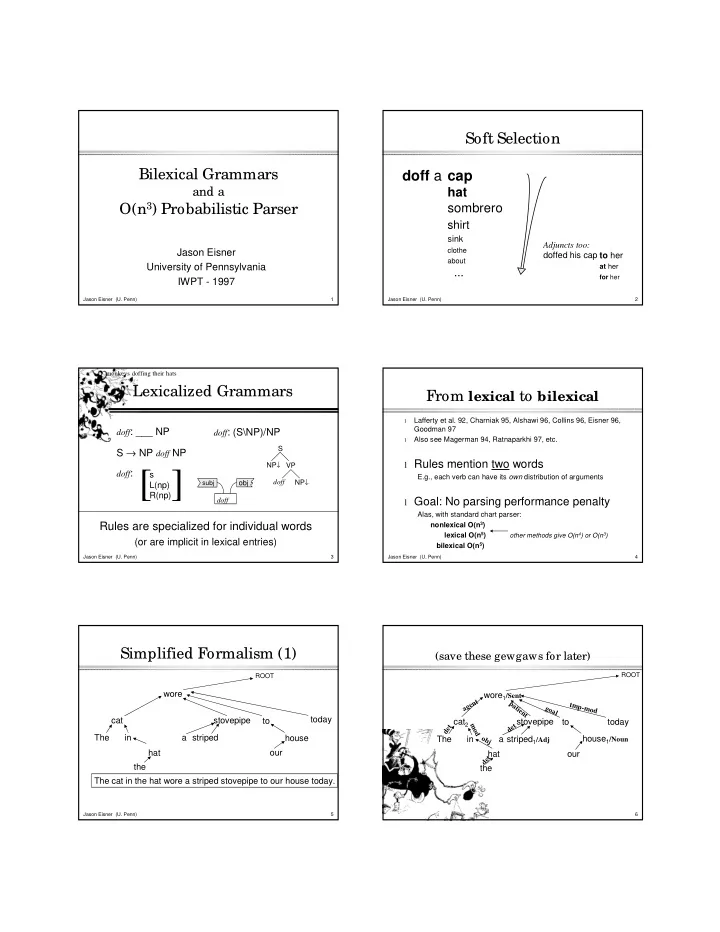

**Bilexical Grammars and an $O(n^3)$ Probabilistic Parser**

Jason Eisner, University of Pennsylvania. IWPT-1997.

**Soft Selection**

- doff: a cap, a hat, a sombrero, a shirt, a sink; clothe
- Adjuncts too: doffed his cap to her / at her / for her / about ...
- (Picture: monkeys doffing their hats.)

**Lexicalized Grammars**

- Rules are specialized for individual words (or are implicit in lexical entries), e.g., for *doff*:
  - doff: ___ NP
  - doff: (S\NP)/NP
  - S → NP doff NP
  - an elementary tree S → NP↓ VP with VP → doff NP↓
  - doff: subj L(np), obj R(np)

**From Lexical to Bilexical**

- Rules mention two words.
- E.g., each verb can have its own distribution of arguments.
- Lafferty et al. 92, Charniak 95, Alshawi 96, Collins 96, Eisner 96, Goodman 97; also see Magerman 94, Ratnaparkhi 97, etc.
- Goal: no parsing performance penalty.
- Alas, with a standard chart parser:
  - nonlexical: $O(n^3)$
  - lexical: $O(n^5)$ (other methods give $O(n^4)$ or $O(n^3)$)
  - bilexical: $O(n^5)$

**Simplified Formalism (1)**

Dependency tree for "The cat in the hat wore a striped stovepipe to our house today.":

- ROOT
  - wore
    - cat
      - The
      - in
        - hat
          - the
    - stovepipe
      - a
      - striped
    - to
      - house
        - our
    - today

(Save these gewgaws for later: the full version also labels the arcs and the words. The arc labels include agent (cat), patient (stovepipe), goal (to), tmp-mod (today), det, mod, and obj. The word labels include wore₁/Sent, house₁/Noun, striped₁/Adj, and cat₂.)
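
The tree above can be read off as a head array. From that array we can list the dependents on each word's left and on its right: the sequences its lexical entry has to license. Below is a minimal Python sketch. `WORDS`, `HEADS`, and `dependent_sequences` are illustrative names. Listing each side outward from the head (nearest dependent first) is our assumption about the reading order.

```python
from typing import Dict, List, Tuple

# The slide's example sentence and its dependency tree.
WORDS = ["The", "cat", "in", "the", "hat", "wore", "a", "striped",
         "stovepipe", "to", "our", "house", "today"]
# HEADS[i] is the index of word i's parent; -1 stands for ROOT.
HEADS = [1, 5, 1, 4, 2, -1, 8, 8, 5, 5, 11, 9, 5]


def dependent_sequences(
    words: List[str], heads: List[int]
) -> Dict[int, Tuple[List[str], List[str]]]:
    """Map each word index to its (left, right) dependent sequences.

    Each sequence runs outward from the head, nearest dependent first.
    """
    seqs: Dict[int, Tuple[List[str], List[str]]] = {
        i: ([], []) for i in range(len(words))
    }
    # Visiting dependents in order of distance from their head fills every
    # list nearest-first (sorted() is stable, so ties keep index order).
    for d in sorted(range(len(words)), key=lambda i: abs(i - heads[i])):
        h = heads[d]
        if h < 0:
            continue  # the word attached to ROOT has no lexical head
        left, right = seqs[h]
        (left if d < h else right).append(words[d])
    return seqs


if __name__ == "__main__":
    for i, (left, right) in dependent_sequences(WORDS, HEADS).items():
        if left or right:
            print(f"{WORDS[i]}: left={left} right={right}")
```

For *wore* this gives left `['cat']` and right `['stovepipe', 'to', 'today']`. For *stovepipe*, whose dependents both precede it, left is `['striped', 'a']`.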

**Simplified Formalism (2)**

- Every lexical entry lists 2 idiosyncratic DFAs, a left DFA and a right DFA. These accept the dependent sequences the word likes.
- E.g., in the tree for "The cat in the hat wore ...", the left DFA of *wore* must accept "cat" and its right DFA must accept "stovepipe to today".

**Weighting the Grammar**

- Transitive verb *doff*: its right DFA accepts Noun Adv\*.
  - likes: hat nicely now (e.g., "[Bentley] doffed [his hat] [nicely] [just now]")
  - hates: sink countably (e.g., #"Bentley doffed [the sink] [countably]")
- Need a flexible mechanism to score the possible sequences of dependents.
- The weighted DFA for *doff* has arc weights hat(8), sink(1), in(−5), nicely(2), now(2), and countably(0), plus state weights −4 and 3:
  - hat nicely now: 8 + 2 + 2 + 3 = 15
  - sink countably: 1 + 0 + 3 = 4

**Why CKY is slow**

1. visiting relatives is boring
2. visiting relatives wear funny hats
3. visiting relatives, we got bored and stole their funny hats

- *visiting relatives*: NP(visiting), NP(relatives), AdvP, ...
- A CFG says that all NPs are interchangeable, so we only have to use the generic or best NP.
- But a bilexical grammar disagrees: e.g., NP(visiting) is a poor subject for *wear*.
- We must try combining each analysis with its context.

**Generic Chart Parsing (1)**

- Interchangeable analyses have the same signature.
- "Analysis" = tree or dotted tree or ...
- If there are ≤ S signatures, keep ≤ S analyses per substring.
- (Figure: several NP and VP analyses of [cap spending at \$300 million], with scores 5, 2, 17, 12, and 4.)

**Generic Chart Parsing (2)**

- for each of the $O(n^2)$ substrings,
  - for each of $O(n)$ ways of splitting it,
    - for each of ≤ S analyses of the first half,
      - for each of ≤ S analyses of the second half,
        - for each of ≤ c ways of combining them:
          - combine, and add the result to the chart if it is the best for its signature.

Total: $O(n^3 S^2 c)$. E.g., [cap spending] + [at \$300 million] = [[cap spending] [at \$300 million]]. Combining ≤ S analyses with ≤ S analyses gives ≤ cS² analyses, of which we keep ≤ S.

**Headed constituents ... have too many signatures**

- How bad is $\Theta(n^3 S^2 c)$?
- For unheaded constituents, S is constant: NP, VP, ... (similarly for dotted trees). So $\Theta(n^3)$.
- But when different heads ⇒ different signatures, the average substring has $\Theta(n)$ possible heads and $S = \Theta(n)$ possible signatures. So $\Theta(n^3 \cdot n^2) = \Theta(n^5)$.
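
The *doff* arithmetic can be replayed with a small weighted automaton. Below is a minimal Python sketch under stated assumptions. The two-state layout is ours: q0 waits for the object noun, and q1 loops on adverbs and can halt with weight 3. The names `WeightedDFA` and `DOFF_RIGHT` are also ours. Only the arc weights that enter the two sums above are included. The in(−5) arc and the −4 state weight are left out because the slide does not say where they attach.

```python
import math
from typing import Dict, List, Tuple

NEG_INF = -math.inf


class WeightedDFA:
    """A deterministic automaton whose arcs and halting states carry weights."""

    def __init__(self, start: str,
                 arcs: Dict[Tuple[str, str], Tuple[str, float]],
                 halt: Dict[str, float]) -> None:
        self.start = start
        self.arcs = arcs  # (state, dependent) -> (next state, arc weight)
        self.halt = halt  # state -> halting weight; absent = cannot halt

    def score(self, dependents: List[str]) -> float:
        """Total weight of a dependent sequence, or -inf if it is rejected."""
        state, total = self.start, 0.0
        for dep in dependents:
            if (state, dep) not in self.arcs:
                return NEG_INF  # no arc for this dependent: rejected
            state, weight = self.arcs[(state, dep)]
            total += weight
        return total + self.halt.get(state, NEG_INF)


# Hypothetical right DFA for "doff", accepting Noun Adv*: q0 expects the
# object noun; q1 loops on adverbs and is the only state that can halt.
DOFF_RIGHT = WeightedDFA(
    start="q0",
    arcs={
        ("q0", "hat"): ("q1", 8), ("q0", "sink"): ("q1", 1),
        ("q1", "nicely"): ("q1", 2), ("q1", "now"): ("q1", 2),
        ("q1", "countably"): ("q1", 0),
    },
    halt={"q1": 3},
)

if __name__ == "__main__":
    print(DOFF_RIGHT.score(["hat", "nicely", "now"]))  # 8 + 2 + 2 + 3
    print(DOFF_RIGHT.score(["sink", "countably"]))     # 1 + 0 + 3
```

The two calls print 15.0 and 4.0. Any sequence the automaton cannot read, or any run that stops in a state with no halting weight, scores −∞.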

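The nested loops of Generic Chart Parsing (2) translate almost directly into code. Below is a minimal Python sketch. It assumes binary combination and a caller-supplied `combine(sig1, sig2)` that returns at most c (signature, weight) pairs. The names `chart_parse` and `keep_best` and the toy grammar are hypothetical. Each cell keeps one best analysis per signature, so the loops cost $O(n^3 S^2 c)$.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Tuple

Cell = Dict[str, Tuple[float, object]]  # signature -> (best score, tree)


def keep_best(cell: Cell, sig: str, score: float, tree: object) -> None:
    """Keep only the best-scoring analysis for each signature."""
    if sig not in cell or score > cell[sig][0]:
        cell[sig] = (score, tree)


def chart_parse(words: List[str],
                lexicon: Callable[[str], Iterable[Tuple[str, float]]],
                combine: Callable[[str, str], Iterable[Tuple[str, float]]]
                ) -> Dict[Tuple[int, int], Cell]:
    n = len(words)
    chart: Dict[Tuple[int, int], Cell] = defaultdict(dict)
    for i, w in enumerate(words):
        for sig, score in lexicon(w):
            keep_best(chart[(i, i + 1)], sig, score, (sig, w))
    for length in range(2, n + 1):                 # O(n^2) substrings ...
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):              # ... O(n) split points
                for s1, (w1, t1) in chart[(i, k)].items():      # <= S
                    for s2, (w2, t2) in chart[(k, j)].items():  # <= S
                        for sig, w in combine(s1, s2):          # <= c
                            keep_best(chart[(i, j)], sig, w1 + w2 + w,
                                      (sig, t1, t2))
    return chart


# Toy CFG whose signatures are bare nonterminals (all NPs interchangeable).
LEX = {"visiting": [("V", 0.0), ("Adj", 0.0)], "relatives": [("N", 0.0)],
       "wear": [("V", 0.0)], "hats": [("N", 0.0)]}
RULES = {("Adj", "N"): [("NP", 2.0)], ("V", "N"): [("VP", 1.0), ("NP", 0.0)],
         ("NP", "VP"): [("S", 0.0)]}

if __name__ == "__main__":
    chart = chart_parse("visiting relatives wear hats".split(),
                        lambda w: LEX.get(w, []),
                        lambda a, b: RULES.get((a, b), []))
    print(chart[(0, 4)]["S"])
```

Here the signatures are bare nonterminals. The two NP readings of *visiting relatives* (Adj N and the gerund V N) therefore collapse into one NP with score 2.0, and the best S over the whole string scores 3.0. Adding the head word to each signature, as a bilexical grammar needs, is what drives S up to Θ(n).
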
**Forget heads, think hats!**

Solution: don't assemble the parse from constituents. Assemble it from spans instead.

**Spans vs. constituents**

Two kinds of substring, illustrated on "The cat in the hat wore a stovepipe. ROOT":

- Constituent of the tree: links to the rest only through its head (e.g., *The cat in the hat*, through *cat*).
- Span of the tree: links to the rest only through its endwords (e.g., *cat in the hat wore*, through *cat* and *wore*).

**Decomposing a tree into spans**

- [The cat in the hat wore a stovepipe. ROOT] = [The cat] + [cat in the hat wore a stovepipe. ROOT]
- [cat in the hat wore a stovepipe. ROOT] = [cat in the hat wore] + [wore a stovepipe. ROOT]
- [cat in the hat wore] = [cat in] + [in the hat wore]
- [in the hat wore] = [in the hat] + [hat wore]

Each pair of adjacent spans shares one endword.

**Maintaining weights**

- Seed the chart with word pairs x y: with an arc x → y, an arc y → x, or no arc.
- Step of the algorithm: a ... b + b ... c = a ... b ... c. We can add an arc between a and c only if a and c are both parentless.
- weight(a ... b ... c) = weight(a ... b) + weight(b ... c) + weight of the c arc from a's right-DFA state + weights of stopping in b's left and right states.

**Analysis**

- The algorithm is $O(n^3 S^2)$ time, $O(n^2 S)$ space. What is S?
- a ... b + b ... c = a ... b ... c (adding an arc from a to c), where:
  - b gets a parent from exactly one side;
  - neither a nor c previously had a parent;
  - a's right DFA accepts c, and b's DFAs can halt.
- The signature of a ... b has to specify the parental status and DFA state of a and b.
- ∴ $S = O(t^2)$, where t is the maximum number of states of any DFA.
- S is independent of n because all of a substring's analyses are headed in the same place: at the ends!

**Improvement**

- Can reduce S from $O(t^2)$ to $O(t)$.
- The halt weight for each half is independent of the other half. The state of b's left automaton tells us the weight of halting, and likewise for b's right automaton.
- Add every span to both the left chart and the right chart. In a ... b + b ... c, draw a ... b from the left chart and b ... c from the right chart.
- The copy of a ... b in the left chart has the halt weight for b already added, so its signature needn't mention the state of b's automaton.
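
The span idea is easiest to try out in its best-known special case, the arc-factored ("first-order") variant. There, each arc h → d has a fixed weight, which roughly corresponds to DFAs with a single state. A span's signature then only needs to record which endword heads it and whether its endwords were just linked. The Python sketch below is that swapped-in simplification, not the full DFA-state bookkeeping of these slides. It also places ROOT at index 0 instead of at the right end, and `eisner` is a hypothetical name. It still runs in $O(n^3)$: $O(n^2)$ spans times $O(n)$ split points.

```python
import math
from typing import List, Tuple

NEG_INF = -math.inf


def eisner(score: List[List[float]]) -> Tuple[float, List[int]]:
    """Best projective dependency tree under arc-factored weights.

    score[h][d] is the weight of an arc from head h to dependent d; node 0 is
    ROOT.  Returns (best total weight, heads) with heads[0] == -1.
    """
    n = len(score)
    # comp[s][t][1]: every word in s..t descends from s; comp[s][t][0]: from t.
    # inc[s][t][1]: s..t just closed by an arc s -> t; inc[s][t][0]: by t -> s.
    comp = [[[0.0, 0.0] for _ in range(n)] for _ in range(n)]
    inc = [[[NEG_INF, NEG_INF] for _ in range(n)] for _ in range(n)]
    comp_bp = [[[0, 0] for _ in range(n)] for _ in range(n)]
    inc_bp = [[0] * n for _ in range(n)]

    for length in range(1, n):            # O(n^2) spans ...
        for s in range(n - length):
            t = s + length
            # ... times O(n) split points: join two adjacent spans, link s and t.
            r = max(range(s, t), key=lambda k: comp[s][k][1] + comp[k + 1][t][0])
            base = comp[s][r][1] + comp[r + 1][t][0]
            inc[s][t][0] = base + score[t][s] if s > 0 else NEG_INF  # ROOT has no head
            inc[s][t][1] = base + score[s][t]
            inc_bp[s][t] = r
            # Extend a just-linked span with a finished span sharing endword k.
            r0 = max(range(s, t), key=lambda k: comp[s][k][0] + inc[k][t][0])
            r1 = max(range(s + 1, t + 1), key=lambda k: inc[s][k][1] + comp[k][t][1])
            comp[s][t][0] = comp[s][r0][0] + inc[r0][t][0]
            comp[s][t][1] = inc[s][r1][1] + comp[r1][t][1]
            comp_bp[s][t] = [r0, r1]

    heads = [-1] * n

    def backtrack(s: int, t: int, d: int, complete: bool) -> None:
        if s == t:
            return
        if complete:
            k = comp_bp[s][t][d]
            if d == 0:
                backtrack(s, k, 0, True)
                backtrack(k, t, 0, False)
            else:
                backtrack(s, k, 1, False)
                backtrack(k, t, 1, True)
        else:
            if d == 0:
                heads[s] = t
            else:
                heads[t] = s
            k = inc_bp[s][t]
            backtrack(s, k, 1, True)
            backtrack(k + 1, t, 0, True)

    backtrack(0, n - 1, 1, True)
    return comp[0][n - 1][1], heads
```

For instance, `eisner([[0, 5, 1], [0, 0, 3], [0, 2, 0]])` returns `(8.0, [-1, 0, 1])`. The tree ROOT → 1 → 2 (5 + 3) beats both ROOT → 1 plus ROOT → 2 (5 + 1) and ROOT → 2 → 1 (1 + 2). The column of arcs into ROOT and the diagonal are never read.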

**Embellishments**

- More detailed parses:
  - Labeled edges
  - Tags (part of speech, word sense, ...)
  - Nonterminals
- How to encode probability models

**More detailed parses (1): Labeled arcs**

(Figure: "The cat in the hat" with labeled arcs: cat = agent, The = det, in = adj, hat = obj, the = det.)

- Grammar: DFAs must accept strings of word-role pairs, e.g., (cat, agent) or (hat, obj).
- Parser: when we stick two spans together, consider covering them with nothing, an obj arc in either direction, an agent arc in either direction, etc.
- Time penalty: $O(m)$, where m is the number of label types.

**More detailed parses (2): Optimize tagging & parsing at once**

(Figure: The₁ cat₃ in₆ the₁ hat₂.)

- Grammar: every input token denotes a confusion set, e.g., cat = {cat₁, cat₂, cat₃, cat₄}. Choice of cat₃ adds a certain weight to the parse.
- Parser: there are more possibilities for seeding the chart. The tags of b must match in a ... b + b ... c. The signature of a ... b must specify the tags of a and b.
- Time penalty: $O(g^4)$, where g is the max number of tags per input word. This is because S goes up by $g^2$ and the running time grows with $S^2$. It can be reduced to $O(g^3)$ by considering only appropriate b ... c.

**Nonterminals**

(Figure: an articulated phrase with nonterminals A, B, C projected by head a corresponds one-to-one to a flat dependency phrase with head $a_{A,B,C}$ and dependents b, b′, c, c′; cf. Collins 96.)

- The bilexical DFAs for $a_{A,B,C}$ insist that its kids come in order A, B, C.
- Use the fast bilexical algorithm, then convert the result to a nonterminal tree.
- Want a small (and finite) set of tags like $a_{A,B,C}$. (Guaranteed by X-bar theory: doff = {$\text{doff}_{V,V,VP}$, $\text{doff}_{V,V,VP,S}$}.)

**Using the weights**

(Figure: the DFA for *doff* again, with arcs for hat, sink, in, nicely, and now.)

- Deterministic grammar: all weights 0 or $-\infty$.
- Generative model: $\log \Pr(\text{next kid} = \textit{nicely} \mid \textit{doff} \text{ in state } 2)$.
- Comprehension model: $\log \Pr(\text{next kid} = \textit{nicely} \mid \textit{doff} \text{ in state } 2,\ \textit{nicely} \text{ present})$.
- Eisner 1996 compared several models and found significant differences.

**String-local constraints**

- Seed the chart with word pairs like x y. We can choose to exclude some such pairs.
- Example: k-gram tagging (here k = 3), i.e., tagging with part-of-speech trigrams, in "one cat in the hat":
  - Seeding *in the* with *the* tagged N P Det has weight $\log \Pr(\textit{the} \mid \text{Det})\,\Pr(\text{Det} \mid \text{N}, \text{P})$.
  - Excluded bigram: *in* tagged Det V P next to *the* tagged N P Det, because the 2 words disagree on the tag for "cat".
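
The trigram-tagging example can be made concrete as a seed filter. Our reading of the figure, which is an assumption, is that each word carries a tag triple: the tag of the word two back, the tag of the previous word, and its own tag. A seed x y is then kept only if the last two entries of x's triple equal the first two of y's. Below is a minimal Python sketch. `seed_weight`, `EMIT`, `TRANS`, and the probabilities are hypothetical, and sentence-boundary seeds are not handled.

```python
import math
from typing import Dict, Optional, Tuple

# A seed tag records the tags of the current word and the two before it:
# (tag of word i-2, tag of word i-1, tag of word i).
Tag = Tuple[str, str, str]


def seed_weight(right_word: str, left_tag: Tag, right_tag: Tag,
                emit: Dict[Tuple[str, str], float],
                trans: Dict[Tuple[str, str, str], float]) -> Optional[float]:
    """Log-weight of seeding two adjacent words with these tags, or None.

    emit[(word, tag)] = Pr(word | tag);
    trans[(prev2, prev1, tag)] = Pr(tag | prev2, prev1).
    """
    if left_tag[1:] != right_tag[:2]:
        return None  # the words disagree about tags they both record
    prev2, prev1, tag = right_tag
    return math.log(emit[(right_word, tag)]) + math.log(trans[(prev2, prev1, tag)])


# Hypothetical probabilities for "one cat in the hat".
EMIT = {("the", "Det"): 0.5}
TRANS = {("N", "P", "Det"): 0.4}

if __name__ == "__main__":
    # "in" tagged (Det, N, P) and "the" tagged (N, P, Det): consistent seed.
    print(seed_weight("the", ("Det", "N", "P"), ("N", "P", "Det"), EMIT, TRANS))
    # "in" tagged (Det, V, P) says cat is V; "the" says cat is N: excluded.
    print(seed_weight("the", ("Det", "V", "P"), ("N", "P", "Det"), EMIT, TRANS))
```

The first call returns log 0.5 + log 0.4 = log 0.2. The second returns None because *in* claims *cat* is V while *the* claims it is N.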

**Conclusions**

- Bilexical grammar formalism: how much do 2 words want to relate?
  - Flexible: encode your favorite representation.
  - Flexible: encode your favorite probability model.
- Fast parsing algorithm: assemble spans, not constituents.
  - $O(n^3)$, not $O(n^5)$. Precisely, $O(n^3 t^2 g^3 m)$, where t = max DFA size, g = max senses per word, m = number of label types.
  - These grammar factors are typically small.
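
One way to see where the precise bound comes from is to multiply the factors introduced on earlier slides:

- $O(n^3)$ span and split-point combinations;
- $S = O(t)$ signatures for each of the two halves, after the left-chart/right-chart improvement;
- $O(g^3)$ tag choices for a, b, and c, once b's tag must match;
- $O(m)$ label choices per new arc.

This accounting is our reconstruction from those slides:

$$
O(n^3)\cdot O(t)^2\cdot O(g^3)\cdot O(m) \;=\; O(n^3\, t^2\, g^3\, m)
$$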
