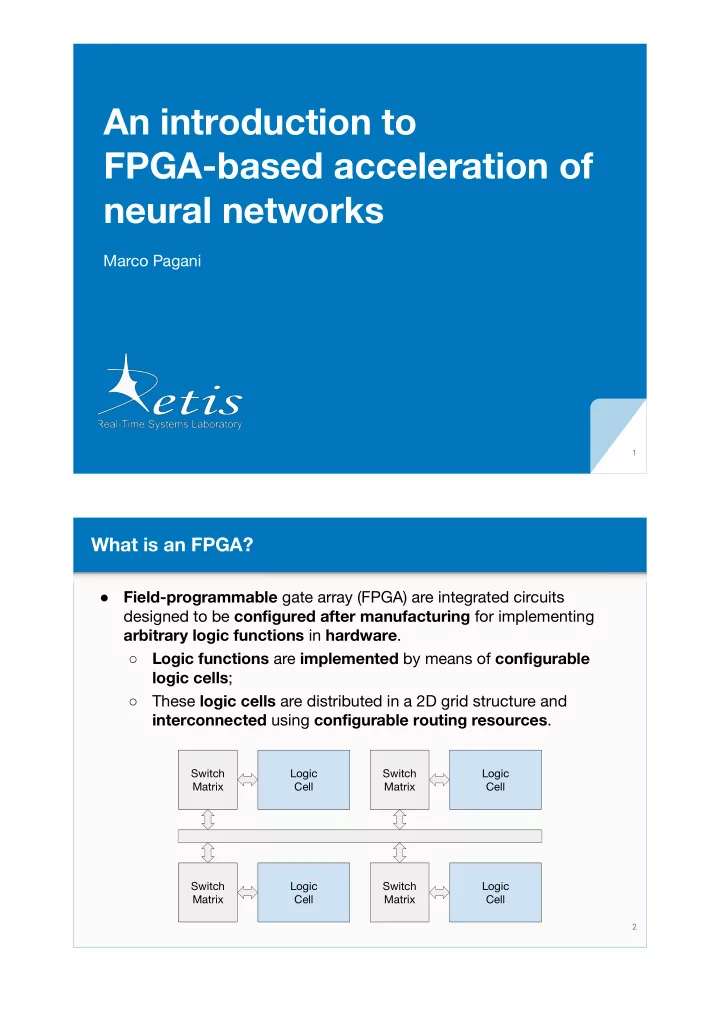

An introduction to FPGA-based acceleration of neural networks
Marco Pagani

What is an FPGA?

● Field-programmable gate arrays (FPGAs) are integrated circuits designed to be configured after manufacturing to implement arbitrary logic functions in hardware.
  ○ Logic functions are implemented by means of configurable logic cells;
  ○ These logic cells are distributed in a 2D grid structure and interconnected using configurable routing resources.

[Figure: 2D grid of logic cells interconnected through switch matrices]

● Logic cells are typically built around a look-up table (LUT).
  ○ An n-input LUT is a logic circuit that can be configured to implement any combinational logic function with n inputs and a single output.

[Figure: 2-input LUT. An SRAM configuration memory holds one output bit per input combination (00 → 0, 01 → 0, 10 → 0, 11 → 1); a multiplexer (MUX) driven by In0 and In1 selects the bit routed to Out.]

● Logic cells are built by combining one or more look-up tables with flip-flops (FFs) capable of storing a state.
  ○ In this way, they can also implement sequential logic.
● In modern state-of-the-art FPGAs, logic cells include several LUTs, FFs, multiplexers, arithmetic and carry chains, and shift registers.

[Figure: logic cell combining the LUT (SRAM configuration memory and MUX, inputs In0 and In1) with a flip-flop (FF)]
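
The LUT and logic cell described above can be mimicked in a few lines of software. The following is only a behavioural sketch, not how any vendor models its cells: the class names `LUT` and `LogicCell` and the choice of In0 as the most significant select bit are assumptions made for illustration.

```python
from typing import List, Sequence


class LUT:
    """n-input look-up table: 2**n configuration bits plus a multiplexer."""

    def __init__(self, n_inputs: int, config_bits: Sequence[int]) -> None:
        if len(config_bits) != 2 ** n_inputs:
            raise ValueError("an n-input LUT needs exactly 2**n configuration bits")
        self.n_inputs = n_inputs
        # Stands in for the SRAM configuration memory.
        self.config: List[int] = list(config_bits)

    def evaluate(self, *inputs: int) -> int:
        if len(inputs) != self.n_inputs:
            raise ValueError("wrong number of inputs")
        # The inputs act as multiplexer select lines (assumption: In0 is the MSB).
        address = 0
        for bit in inputs:
            address = (address << 1) | (bit & 1)
        return self.config[address]


class LogicCell:
    """One LUT followed by a flip-flop, giving a registered output."""

    def __init__(self, lut: LUT) -> None:
        self.lut = lut
        self.ff = 0  # flip-flop state, held between clock edges

    def clock(self, *inputs: int) -> int:
        """Simulate one clock edge: the flip-flop captures the LUT output."""
        self.ff = self.lut.evaluate(*inputs)
        return self.ff


# Truth table from the slide: only the input combination 11 yields 1 (AND).
and_lut = LUT(2, [0, 0, 0, 1])
# Same circuit, different configuration bits: XOR.
xor_lut = LUT(2, [0, 1, 1, 0])
```

The point of the sketch is that `and_lut` and `xor_lut` share the same structure and differ only in their configuration bits, while `LogicCell` adds the state that makes sequential logic possible.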

● Besides logic cells, FPGAs also include special-purpose cells:
  ○ Digital signal processing (DSP) / arithmetic cells;
  ○ Dense memory cells (BRAMs);
  ○ Input/output cells.
● Hence, the fabric of modern FPGAs is a heterogeneous structure.

[Figure: FPGA fabric as a grid with columns of logic cells (LC), memory cells (MC), and arithmetic cells (AC), surrounded by I/O cells]

FPGAs as computing devices

● FPGAs are different from software-programmable processors.
  ○ They do not rely on a predefined microarchitecture or an ISA to be programmed;
  ○ “Programming” an FPGA means designing the datapath itself and the control logic that will be used to process the data.

[Figure: layer stacks compared. Software-programmable processor: software stack, ISA, hardware microarchitecture. FPGA: software stack (optional), ISA (optional), logic design, FPGA fabric.]

● Traditionally, “hardware programming” has been carried out using hardware description languages (HDLs) such as VHDL or Verilog.
  ○ This is a different paradigm with respect to software programming.
  ○ An HDL allows specifying sets of operations that are performed (or evaluated) concurrently when their activation conditions are met, which may in turn trigger other operations.
  ○ Design and debugging are costly and time-consuming.

[Figure: FPGA stack: software stack (optional), ISA (optional), logic design, FPGA fabric]

● In the last decades, high-level synthesis (HLS) tools have emerged.
  ○ HLS tools translate an algorithmic description of a procedure, written in a system programming language such as C or C++, into an HDL implementation;
  ○ The result is less efficient than native HDL, but productivity is higher in both coding and testing.

[Figure: ease of development versus performance. FPGA HLS ranks higher on ease of development, FPGA HDL higher on performance.]

FPGA design flow

● A modern FPGA design flow consists of a design and integration phase followed by a synthesis and implementation phase.
  ○ Logic design units (HDL) are often packaged into IPs to promote portability and reusability.
  ○ At the end, the process produces one or more bitstreams.

[Figure: design flow. HLS sources pass through the HLS tool; its output and the HDL sources go through synthesis, implementation, and bitstream generation.]

● A bitstream configuration file contains the actual data for configuring the SRAM memory cells that define the function of the logic cells and the routing resources.
  ○ Note: unlike a CPU program, an FPGA configuration does not specify a set of operations that will be interpreted and executed by the FPGA, but rather contains the data for configuring the fabric.

[Figure: bits of the bitstream loaded into the SRAM configuration memory of a logic cell]
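
To make the note above concrete, here is a toy sketch of "configuration as data". It is purely illustrative: real bitstream formats are vendor-specific and far more complex, and the one-byte layout, the helper names `configure` and `evaluate`, and the fixed 2-input LUT size are all assumptions invented for this example.

```python
from typing import List


def configure(bitstream: bytes, n_inputs: int = 2) -> List[List[int]]:
    """Split a toy bitstream into truth tables, one per LUT (hypothetical layout)."""
    table_size = 2 ** n_inputs
    # Unpack each byte into bits, most significant bit first.
    bits = [(byte >> shift) & 1 for byte in bitstream for shift in range(7, -1, -1)]
    return [bits[i:i + table_size]
            for i in range(0, len(bits) - table_size + 1, table_size)]


def evaluate(table: List[int], *inputs: int) -> int:
    """Read the configured bit selected by the inputs, as a LUT multiplexer would."""
    address = 0
    for bit in inputs:
        address = (address << 1) | (bit & 1)
    return table[address]


# 0x16 = 0001 0110: the first LUT becomes AND, the second becomes XOR.
fabric = configure(bytes([0x16]))

# 0x7E = 0111 1110: the same two LUTs now behave as OR and NAND.
reconfigured = configure(bytes([0x7E]))
```

Nothing in `bitstream` is an instruction to be executed: the bytes only fill the tables, and the behaviour of the "fabric" follows from their content.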

Heterogeneous SoC-FPGA

● The price of FPGA hardware programmability is higher silicon area consumption and lower clock frequency compared to “hard” CPUs.
  ○ SoC-FPGAs are heterogeneous platforms that have emerged to take the best of both worlds.
    ■ They tightly couple a SoC platform with FPGA fabric.

[Figure: SoC-FPGA block diagram: four CPUs with a last-level cache, an interconnect, a memory controller with DRAM, and an FPGA hosting hardware accelerators and memory]

Why use FPGAs for accelerating neural networks?

● FPGAs have been used to accelerate the inference process for artificial neural networks (NNs).
  ○ From data centers to cyber-physical systems applications;
  ○ Currently, the training phase is still typically performed on GPUs.
● Many industrial players are moving towards heterogeneous SoC-FPGAs for NN acceleration and parallel I/O processing.
● Amazon AWS FPGA instances.
● Microsoft Azure FPGA.

● The inference process for neural networks can be performed using different computing devices.
  ○ These alternatives can be classified along different dimensions:
    ■ Performance, energy efficiency, ease of programming.

[Figure: spectrum of devices, from easy to program / less efficient to more efficient / less flexible: CPU and GPU, FPGA, ASIC (TPU)]

● CPU, GPU: general-purpose devices implementing an ISA and having a programmable datapath.
  ○ GPUs are oriented towards data-parallel (SIMD/SIMT) processing.
● FPGA: reconfigurable fabric implementing digital logic.
  ○ Limited clock frequency.
● ASIC (TPU): specialized digital logic having the highest efficiency.
  ○ Can't be reconfigured when the needs change.

● The main advantage of FPGAs is their flexibility.
● Potentially, it is possible to implement the inference process using a hardware accelerator custom-designed for the specific NN.
  ○ Efficient datapath tailored for the specific inference process.
  ○ If the network changes, the accelerator can be redesigned.
    ■ The same chip fits different types of machine learning models.
● As a result, FPGA-accelerated inference of NNs is typically more energy-efficient compared to CPUs and GPUs.
  ○ High throughput without ramping up the clock frequency.

[Figure: ease of development versus performance per watt for CPU, GPU, FPGA HLS, and FPGA HDL]

● Another important advantage of FPGA-based hardware acceleration is time predictability.
  ○ This is especially important in the context of real-time systems.
● Currently, only minimal information on the internal architecture and resource management logic is publicly available for state-of-the-art GPUs.
● FPGA-based hardware accelerators are intrinsically more open, offering the possibility to simulate and even describe their internal logic at clock-level granularity.

[Figure: logic simulation view (GTKWave)]

Accelerating neural networks on FPGA

● The main drawback of FPGA-based NN acceleration is complexity.
  ○ Custom designing an accelerator in HDL, or even with HLS, is an expensive and time-consuming process.

[Figure: a trained network model (L0 Conv., L1 Conv., L2 Max Pool., L3 Fully conn., L4 Fully conn.) is mapped to control code running on the CPU (load(); start(); wait();) and to HW accelerators on the FPGA of a SoC-FPGA platform]
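
The load(); start(); wait(); sequence in the figure suggests what the CPU-side control code looks like. The sketch below is a pure software simulation under stated assumptions: `SimulatedAccelerator` and `run_inference` are hypothetical names, the kernels are trivial stand-ins for real layers, and on real hardware `start()` would return immediately while `wait()` would block or poll until the accelerator signals completion.

```python
from typing import Callable, List, Optional

Vector = List[float]


class SimulatedAccelerator:
    """Software stand-in for one FPGA accelerator (hypothetical interface)."""

    def __init__(self, name: str, kernel: Callable[[Vector], Vector]) -> None:
        self.name = name
        self._kernel = kernel
        self._input: Optional[Vector] = None
        self._output: Optional[Vector] = None

    def load(self, data: Vector) -> None:
        """Copy the input into the accelerator's buffer."""
        self._input = list(data)
        self._output = None

    def start(self) -> None:
        """Launch the computation (done synchronously in this simulation)."""
        if self._input is None:
            raise RuntimeError("load() must be called before start()")
        self._output = self._kernel(self._input)

    def wait(self) -> Vector:
        """Return the result once the accelerator has finished."""
        if self._output is None:
            raise RuntimeError("start() must be called before wait()")
        return self._output


def run_inference(layers: List[SimulatedAccelerator], data: Vector) -> Vector:
    """CPU control code: feed each layer's output to the next accelerator."""
    for layer in layers:
        layer.load(data)
        layer.start()
        data = layer.wait()
    return data


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


def max_pool_1d(x: Vector) -> Vector:
    # Window and stride of 2, chosen only for illustration.
    return [max(x[i:i + 2]) for i in range(0, len(x), 2)]


pipeline = [SimulatedAccelerator("relu", relu),
            SimulatedAccelerator("max_pool", max_pool_1d)]
result = run_inference(pipeline, [-1.0, 2.0, 3.0, -4.0])
```

Even in this toy form, the control code is the easy part; the cost named on the slide lies in designing the accelerators behind `start()`.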

● Hence, as for GPUs, specific frameworks have been developed to ease the development of FPGA-based HW acceleration for NNs.

[Figure: a framework core takes the trained network model (L0 Conv., L1 Conv., L2 Max Pool., L3 Fully conn., L4 Fully conn.) and produces control code for the CPU (load(); start(); wait();) and HW accelerators for the FPGA, drawing on a framework library that contains code templates and library accelerators]

● In order to achieve effective HW acceleration, some characteristics of FPGAs must be taken into account:
  ○ Floating-point operations are expensive on current-generation FPGA fabric (this may change in the future).
    ■ Integer and binary operations are far more efficient.
  ○ On-fabric memory (BRAMs and LUT-RAMs) has higher throughput and lower latency than external memory such as DDR.
    ■ When data must be fetched from external memory, the parallel processing potential of FPGA hardware may be hampered, resulting in a memory-bound design.

[Figure: fabric columns of logic cells (LC), memory cells (MC), and arithmetic cells (AC)]
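
A quick way to see when a design becomes memory-bound is a roofline-style bound, a standard estimate that is not taken from the slides: attainable throughput is the smaller of the peak compute rate and the memory bandwidth multiplied by the operations performed per byte fetched. The sketch below assumes a fully connected layer whose weights are streamed from external memory and used once per inference; all figures are hypothetical and chosen only to keep the arithmetic simple.

```python
def attainable_ops_per_s(peak_ops_per_s: float,
                         bandwidth_bytes_per_s: float,
                         ops_per_byte: float) -> float:
    """Roofline-style bound: limited by either compute or memory traffic."""
    return min(peak_ops_per_s, bandwidth_bytes_per_s * ops_per_byte)


def is_memory_bound(peak_ops_per_s: float,
                    bandwidth_bytes_per_s: float,
                    ops_per_byte: float) -> bool:
    """True when memory traffic, not the datapath, caps the throughput."""
    return bandwidth_bytes_per_s * ops_per_byte < peak_ops_per_s


# Hypothetical figures, not measurements of any real device.
PEAK_OPS = 1e12        # operations per second the datapath could sustain
DDR_BANDWIDTH = 10e9   # bytes per second from external memory
OPS_PER_WEIGHT = 2     # one multiply and one add per weight

# float32 weights: 4 bytes each, so 0.5 operations per byte fetched.
float32_rate = attainable_ops_per_s(PEAK_OPS, DDR_BANDWIDTH, OPS_PER_WEIGHT / 4)
# int8 weights: 1 byte each, so 2 operations per byte fetched.
int8_rate = attainable_ops_per_s(PEAK_OPS, DDR_BANDWIDTH, OPS_PER_WEIGHT / 1)
```

With these numbers both variants stay far below the peak compute rate, so the parallel datapath sits idle waiting for weights; shrinking the weights raises the bound fourfold, and keeping them in on-fabric memory removes the external-memory limit altogether.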

● From these characteristics, some general design principles for accelerating NNs on FPGAs can be derived:
  ○ Whenever possible, use integer or binary operations;
  ○ Whenever possible, use on-chip memory to store the parameters.
● NN parameters can contain a lot of redundant information.
  ○ This can be exploited using quantization, i.e., moving from floating-point to low-precision integer arithmetic.
● Moving to a quantized representation fits both design principles:
  ○ Integer operations require significantly fewer logic resources, allowing increased compute density;
  ○ Network parameters may fit entirely into on-chip memory.

● Moving from floating-point arithmetic to low-precision integer arithmetic has a limited impact on NN accuracy.
  ○ The network can be retrained to improve the accuracy.
● Advanced frameworks for FPGA-based acceleration of NNs will manage the quantization and retraining steps.

[Figure: framework pipeline: network model transformation, quantization and retraining, hardware generation / code generation, supported by a framework library]
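
As a rough illustration of quantization, the sketch below applies symmetric linear quantization to 8-bit integers and computes a dot product using integer arithmetic only. This is one common scheme, picked here as an assumption; the slides do not say which scheme a given framework uses, and the function names and sample values are invented for the example.

```python
from typing import List, Tuple


def quantize(values: List[float], n_bits: int = 8) -> Tuple[List[int], float]:
    """Map floats to signed integers sharing a single scale factor."""
    qmax = 2 ** (n_bits - 1) - 1          # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer level and clamp to the representable range.
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the integer representation."""
    return [q * scale for q in quantized]


def int_dot(a: List[int], b: List[int]) -> int:
    """Dot product using integer multiply-accumulate only."""
    return sum(x * y for x, y in zip(a, b))


# Illustrative values, not taken from any real network.
weights = [0.5, -1.0, 0.25, 0.75]
activations = [1.0, 0.5, -0.5, 2.0]

q_w, scale_w = quantize(weights)
q_a, scale_a = quantize(activations)

exact = sum(w * a for w, a in zip(weights, activations))
# A single floating-point rescale converts the integer result back.
approx = int_dot(q_w, q_a) * scale_w * scale_a
```

The whole accumulation runs on integers, with one rescale at the end, and each 8-bit weight occupies a quarter of the storage of a 32-bit float, which is what lets more parameters fit into on-chip memory.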
