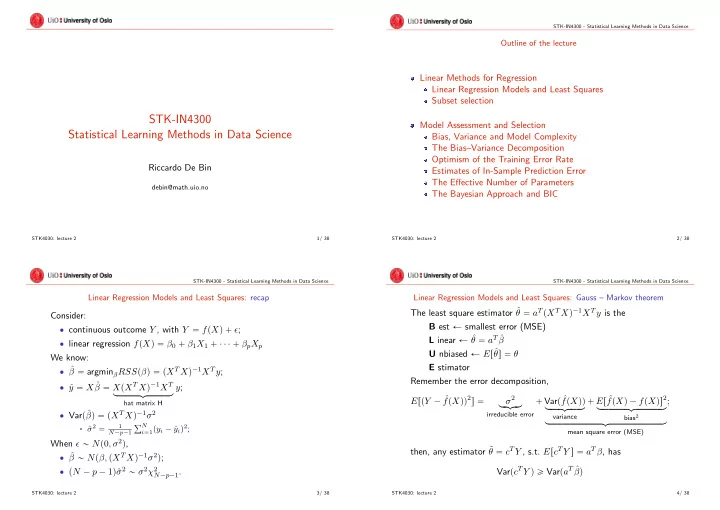

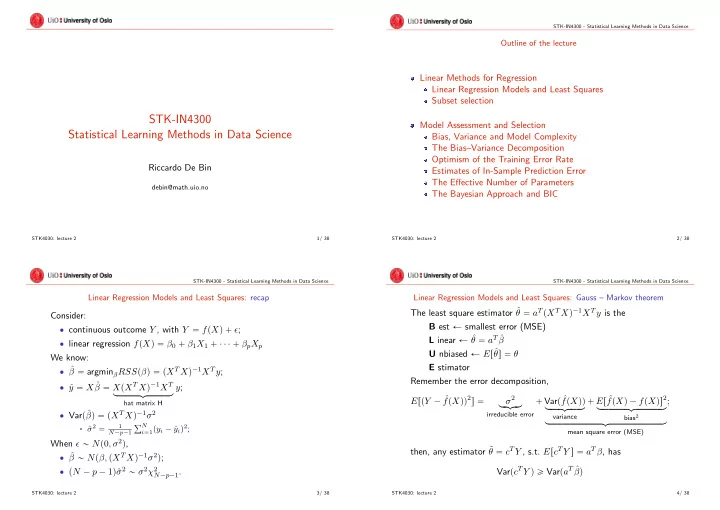

STK-IN4300 - Statistical Learning Methods in Data Science

Riccardo De Bin (debin@math.uio.no)

STK4030: lecture 2

Outline of the lecture

- Linear Methods for Regression
  - Linear Regression Models and Least Squares
  - Subset selection
- Model Assessment and Selection
  - Bias, Variance and Model Complexity
  - The Bias–Variance Decomposition
  - Optimism of the Training Error Rate
  - Estimates of In-Sample Prediction Error
  - The Effective Number of Parameters
  - The Bayesian Approach and BIC

Linear Regression Models and Least Squares: recap

Consider:
- a continuous outcome $Y$, with $Y = f(X) + \epsilon$;
- the linear regression $f(X) = \beta_0 + \beta_1 X_1 + \cdots + \beta_p X_p$.

We know:
- $\hat\beta = \operatorname{argmin}_\beta \mathrm{RSS}(\beta) = (X^T X)^{-1} X^T y$;
- $\hat y = X\hat\beta = X (X^T X)^{-1} X^T y = H y$, where $H = X (X^T X)^{-1} X^T$ is the hat matrix;
- $\mathrm{Var}(\hat\beta) = (X^T X)^{-1}\sigma^2$, where $\sigma^2$ is estimated by
  - $\hat\sigma^2 = \frac{1}{N-p-1}\sum_{i=1}^N (y_i - \hat y_i)^2$.

When $\epsilon \sim N(0, \sigma^2)$, then
- $\hat\beta \sim N\big(\beta, (X^T X)^{-1}\sigma^2\big)$;
- $(N-p-1)\,\hat\sigma^2 \sim \sigma^2 \chi^2_{N-p-1}$.

Linear Regression Models and Least Squares: Gauss–Markov theorem

The least squares estimator $\hat\theta = a^T (X^T X)^{-1} X^T y$ of $\theta = a^T\beta$ is the BLUE:
- Best: smallest error (MSE);
- Linear: $\hat\theta = a^T\hat\beta$;
- Unbiased: $E[\hat\theta] = \theta$;
- Estimator.

Remember the error decomposition,
$$E\big[(Y - \hat f(X))^2\big] = \underbrace{\sigma^2}_{\text{irreducible error}} + \underbrace{\underbrace{\mathrm{Var}(\hat f(X))}_{\text{variance}} + \underbrace{\big(E[\hat f(X)] - f(X)\big)^2}_{\text{bias}^2}}_{\text{mean square error (MSE)}}.$$

For an unbiased estimator the bias term vanishes, so its MSE equals its variance. The theorem states that any linear estimator $\tilde\theta = c^T y$ such that $E[c^T y] = a^T\beta$ has
$$\mathrm{Var}(c^T y) \ge \mathrm{Var}(a^T\hat\beta).$$
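
The recap formulas can be checked numerically with a short NumPy sketch. It assumes simulated data and an intercept column prepended to the inputs (so the design matrix has $p+1$ columns); the function name `ols_fit` is hypothetical. Inverting $X^T X$ explicitly mirrors the formulas but is less numerically stable than a QR-based solver, so this is for illustration only.

```python
import numpy as np

def ols_fit(X, y):
    """Least squares quantities from the recap; X is (N, p) without intercept, y is (N,)."""
    N, p = X.shape
    Xd = np.column_stack([np.ones(N), X])          # prepend the intercept column
    XtX_inv = np.linalg.inv(Xd.T @ Xd)             # (X^T X)^{-1}
    beta_hat = XtX_inv @ Xd.T @ y                  # (X^T X)^{-1} X^T y
    H = Xd @ XtX_inv @ Xd.T                        # hat matrix
    y_hat = H @ y                                  # fitted values, equal to Xd @ beta_hat
    sigma2_hat = np.sum((y - y_hat) ** 2) / (N - p - 1)  # unbiased estimate of sigma^2
    var_beta_hat = XtX_inv * sigma2_hat            # estimated Var(beta_hat)
    return beta_hat, H, y_hat, sigma2_hat, var_beta_hat

# Simulated data (assumption): intercept 1, slopes 2, 0, -1.5, noise sd 0.5
rng = np.random.default_rng(0)
N, p = 100, 3
X = rng.normal(size=(N, p))
y = 1.0 + X @ np.array([2.0, 0.0, -1.5]) + rng.normal(scale=0.5, size=N)

beta_hat, H, y_hat, sigma2_hat, var_beta_hat = ols_fit(X, y)
std_err = np.sqrt(np.diag(var_beta_hat))          # estimated sd of each coefficient
print(beta_hat, std_err, sigma2_hat)
```

Since $H$ is a projection onto the column space of the design matrix, it is idempotent ($HH = H$) and its trace equals $p+1$, which gives a quick sanity check of the implementation.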

Linear Regression Models and Least Squares: hypothesis testing

To test $H_0: \beta_j = 0$, we use the Z-score statistic,
$$z_j = \frac{\hat\beta_j - 0}{\mathrm{sd}(\hat\beta_j)} = \frac{\hat\beta_j}{\hat\sigma\sqrt{[(X^T X)^{-1}]_{j,j}}}.$$
- When $\sigma^2$ is unknown, under $H_0$, $z_j \sim t_{N-p-1}$, where $t_k$ is a Student t distribution with $k$ degrees of freedom.
- When $\sigma^2$ is known (and $\sigma$ replaces $\hat\sigma$ in $z_j$), under $H_0$, $z_j \sim N(0, 1)$.

To test $H_0: \beta_j = \beta_k = 0$, we use
$$F = \frac{(\mathrm{RSS}_0 - \mathrm{RSS}_1)/(p_1 - p_0)}{\mathrm{RSS}_1/(N - p_1 - 1)},$$
where the subscripts 1 and 0 refer to the larger and the smaller model, respectively, and $p_1$, $p_0$ are their numbers of predictors.

Subset selection: variable selection

Why choose a sparser model (fewer variables)?
- prediction accuracy (smaller variance);
- interpretability (easier to understand the model);
- portability (easier to use in practice).

Classical approaches:
- forward selection;
- backward elimination;
- stepwise and stepback selection;
- best subset;
- stagewise selection.

Subset selection: classical approaches

Forward selection:
- start with the null model, $Y = \beta_0 + \epsilon$;
- among a set of possible variables, add the one that reduces the unexplained variability the most
  - e.g., after the first step, $Y = \beta_0 + \beta_2 X_2 + \epsilon$;
- repeat iteratively until a stopping criterion (p-value larger than a threshold $\alpha$, increasing AIC, ...) is met.

Backward elimination:
- start with the full model, $Y = \beta_0 + \beta_1 X_1 + \cdots + \beta_p X_p + \epsilon$;
- remove the variable that contributes the least to explaining the outcome variability
  - e.g., after the first step, $Y = \beta_0 + \beta_2 X_2 + \cdots + \beta_p X_p + \epsilon$;
- repeat iteratively until a stopping criterion (p-values of all remaining variables smaller than $\alpha$, increasing AIC, ...) is met.

Stepwise and stepback selection:
- mixture of forward and backward selection;
- allow both adding and removing variables at each step:
  - starting from the null model: stepwise selection;
  - starting from the full model: stepback selection.

Best subset:
- compute all the $2^p$ possible models (each variable in/out);
- choose the model which minimizes a loss function (e.g., AIC).

Stagewise selection:
- similar to forward selection;
- at each step, the specific regression coefficient is updated using only the information related to the corresponding variable:
  - slow to converge in low dimensions;
  - turned out to be effective in high-dimensional settings.
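
Two of the classical approaches, forward selection and best subset, can be sketched in NumPy on simulated data. Assumptions not taken from the slides: forward selection stops when the partial $F$ statistic of the best candidate falls below a fixed "F-to-enter" threshold of 4 (used here instead of a p-value threshold $\alpha$), and best subset ranks models by the Gaussian AIC up to an additive constant, $N\log(\mathrm{RSS}/N) + 2(k+1)$. All function names are hypothetical.

```python
import itertools
import numpy as np

def rss(X, y, cols):
    """RSS of the least squares fit of y on an intercept plus the columns in `cols`."""
    Xd = np.column_stack([np.ones(len(y))] + [X[:, j] for j in cols])
    beta, *_ = np.linalg.lstsq(Xd, y, rcond=None)
    resid = y - Xd @ beta
    return float(resid @ resid)

def forward_selection(X, y, f_to_enter=4.0):
    """Greedy forward selection with a partial-F stopping rule (threshold is an assumption)."""
    N, p = X.shape
    selected = []
    current_rss = rss(X, y, selected)          # null model: intercept only
    while len(selected) < p:
        candidates = [j for j in range(p) if j not in selected]
        # fit every one-variable extension and keep the one with the smallest RSS
        rss_new = {j: rss(X, y, selected + [j]) for j in candidates}
        best_j = min(rss_new, key=rss_new.get)
        p1 = len(selected) + 1                 # predictors in the larger model
        # partial F statistic for adding one variable (p1 - p0 = 1)
        F = (current_rss - rss_new[best_j]) / (rss_new[best_j] / (N - p1 - 1))
        if F < f_to_enter:
            break                              # best candidate is not worth adding
        selected.append(best_j)
        current_rss = rss_new[best_j]
    return selected

def best_subset_aic(X, y):
    """Fit all 2^p subsets and return the one with the smallest Gaussian AIC."""
    N, p = X.shape
    best_cols, best_aic = [], np.inf
    for k in range(p + 1):
        for cols in itertools.combinations(range(p), k):
            # AIC up to an additive constant; k + 1 coefficients including the intercept
            aic = N * np.log(rss(X, y, list(cols)) / N) + 2 * (k + 1)
            if aic < best_aic:
                best_cols, best_aic = list(cols), aic
    return best_cols, best_aic

# Simulated example (assumption): only X_0 and X_3 affect the outcome
rng = np.random.default_rng(1)
N, p = 200, 5
X = rng.normal(size=(N, p))
y = 1.0 + 3.0 * X[:, 0] - 2.0 * X[:, 3] + rng.normal(size=N)

fwd = forward_selection(X, y)
best, best_aic = best_subset_aic(X, y)
print("forward selection:", fwd)
print("best subset (AIC):", best)
```

Best subset fits all $2^p$ models, so it becomes infeasible quickly as $p$ grows, while forward selection fits a number of models of order $p^2$ at most.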

Model Assessment and Selection: introduction

- Model Assessment: evaluate the performance (e.g., in terms of prediction) of a selected model.
- Model Selection: select the best model for the task (e.g., best for prediction).
- Generalization: a (prediction) model must be valid in broad generality, not tailored to a specific dataset.

Bias, Variance and Model Complexity: definitions

Define:
- $Y$ = target variable;
- $X$ = input matrix;
- $\hat f(X)$ = prediction rule, trained on a training set $\mathcal{T}$.

The error is measured through a loss function $L(Y, \hat f(X))$, which penalizes differences between $Y$ and $\hat f(X)$.

Typical choices for continuous outcomes are:
- $L(Y, \hat f(X)) = (Y - \hat f(X))^2$, the quadratic loss;
- $L(Y, \hat f(X)) = |Y - \hat f(X)|$, the absolute loss.

Bias, Variance and Model Complexity: categorical variables

Similar story for categorical variables:
- $G$ = target variable, which takes $K$ values in $\mathcal{G}$.

Typical choices for the loss function in this case are:
- $L(G, \hat G(X)) = 1(G \neq \hat G(X))$, the 0-1 loss;
- $L(G, \hat p(X)) = -2 \log \hat p_G(X)$, the deviance.

- $\log \hat p_G(X) = \ell(\hat f(X))$ is general and can be used for every kind of outcome (binomial, Gamma, Poisson, log-normal, ...);
- the factor $-2$ is added to make the loss function equal to the squared loss in the Gaussian case: with unit variance,
$$L(\hat f(X)) = \frac{1}{\sqrt{2\pi}}\exp\left\{-\frac{1}{2}\,(Y - \hat f(X))^2\right\}, \qquad \ell(\hat f(X)) = -\frac{1}{2}(Y - \hat f(X))^2 + \text{const},$$
so $-2\,\ell(\hat f(X)) = (Y - \hat f(X))^2$ up to an additive constant.

Bias, Variance and Model Complexity: test error

The test error (or generalization error) is the prediction error over an independent test sample,
$$\mathrm{Err}_{\mathcal{T}} = E\big[L(Y, \hat f(X)) \mid \mathcal{T}\big],$$
where both $X$ and $Y$ are drawn randomly from their joint distribution.

The specific training set $\mathcal{T}$ used to derive the prediction rule is fixed, so the test error refers to the error for this specific $\mathcal{T}$.

In general, we would like to minimize the expected prediction error (expected test error),
$$\mathrm{Err} = E\big[L(Y, \hat f(X))\big] = E[\mathrm{Err}_{\mathcal{T}}].$$
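
The loss functions above take only a few lines of NumPy. This sketch assumes class labels coded as integers $0, \dots, K-1$ and predicted class probabilities stored as an $N \times K$ array; the function names are hypothetical. The last function computes $-2\ell$ for a Gaussian with unit variance, including the constant, so its difference from the squared loss should be $\log(2\pi)$ in every entry.

```python
import numpy as np

def quadratic_loss(y, f_hat):
    return (y - f_hat) ** 2

def absolute_loss(y, f_hat):
    return np.abs(y - f_hat)

def zero_one_loss(g, g_hat):
    # 1 when the predicted class differs from the observed one, 0 otherwise
    return (g != g_hat).astype(float)

def deviance_loss(g, p_hat):
    # -2 log of the probability assigned to the observed class;
    # g: (N,) labels in {0, ..., K-1}, p_hat: (N, K) predicted probabilities
    return -2.0 * np.log(p_hat[np.arange(len(g)), g])

def gaussian_deviance(y, f_hat):
    # -2 times the log-likelihood of y under N(f_hat, 1), constant included
    loglik = -0.5 * np.log(2 * np.pi) - 0.5 * (y - f_hat) ** 2
    return -2.0 * loglik

y = np.array([1.0, 2.0, 3.5])
f_hat = np.array([1.5, 2.0, 2.5])
g = np.array([0, 2, 1])
g_hat = np.array([0, 1, 1])
p_hat = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.3, 0.6],
                  [0.2, 0.5, 0.3]])

print(quadratic_loss(y, f_hat))    # values 0.25, 0, 1
print(absolute_loss(y, f_hat))     # values 0.5, 0, 1
print(zero_one_loss(g, g_hat))     # values 0, 1, 0
print(deviance_loss(g, p_hat))     # -2 log of 0.7, 0.6, 0.5
print(gaussian_deviance(y, f_hat) - quadratic_loss(y, f_hat))  # log(2*pi) in every entry
```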

Bias, Variance and Model Complexity: training error

- We would like to get Err, but we only have information on the single training set (we will see later how to solve this issue);
- our goal here is to estimate $\mathrm{Err}_{\mathcal{T}}$.

The training error
$$\overline{\mathrm{err}} = \frac{1}{N}\sum_{i=1}^N L(y_i, \hat f(x_i))$$
is NOT a good estimator of $\mathrm{Err}_{\mathcal{T}}$.

Bias, Variance and Model Complexity: prediction error

We do not want to minimize the training error:
- by increasing the model complexity, we can always decrease it;
- overfitting issues:
  - the model is specific to the training data;
  - it generalizes very poorly.

Bias, Variance and Model Complexity: data split

In an ideal situation (= a lot of data), the best option is to randomly split the data into three independent sets:
- training set: data used to fit the model(s);
- validation set: data used to identify the best model;
- test set: data used to assess the performance of the best model (must be completely ignored during model selection).

NB: it is extremely important to use the sets fully independently!

Example with k-nearest neighbour:
- in the training set: fit kNN with different values of $k$;
- in the validation set: select the model with the best performance (choose $k$);
- in the test set: evaluate the prediction error of the model with the selected $k$.
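
The three-way split and the kNN example can be sketched as follows, assuming a one-dimensional simulated regression problem, squared-error loss and an arbitrary 50/25/25 split; `knn_predict` is a hypothetical helper, not a library function. The sketch also illustrates why the training error is a poor estimate: with $k = 1$ each training point is its own nearest neighbour, so the training error is exactly zero.

```python
import numpy as np

def knn_predict(x_train, y_train, x_new, k):
    """1-D kNN regression: average the outcomes of the k closest training points."""
    d = np.abs(x_new[:, None] - x_train[None, :])   # (n_new, n_train) distances
    idx = np.argsort(d, axis=1)[:, :k]              # indices of the k nearest neighbours
    return y_train[idx].mean(axis=1)

def mse(y, y_hat):
    return float(np.mean((y - y_hat) ** 2))

# Simulated data (assumption): smooth signal plus Gaussian noise
rng = np.random.default_rng(2)
N = 300
x = rng.uniform(0, 1, N)
y = np.sin(2 * np.pi * x) + rng.normal(scale=0.3, size=N)

# Random split into three independent sets (50% / 25% / 25%, an arbitrary choice)
perm = rng.permutation(N)
tr, va, te = perm[:150], perm[150:225], perm[225:]

ks = [1, 3, 5, 10, 20, 50]
# training set: fit kNN for each k (and record the optimistic training error)
train_err = {k: mse(y[tr], knn_predict(x[tr], y[tr], x[tr], k)) for k in ks}
# validation set: choose k
val_err = {k: mse(y[va], knn_predict(x[tr], y[tr], x[va], k)) for k in ks}
k_best = min(ks, key=val_err.get)
# test set: used once, only for the selected k
test_err = mse(y[te], knn_predict(x[tr], y[tr], x[te], k_best))
print(train_err, val_err, k_best, test_err)
```

Here smaller $k$ means a more complex model: the training error keeps shrinking as $k$ decreases, whereas the validation error is what drives the choice of $k$.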
