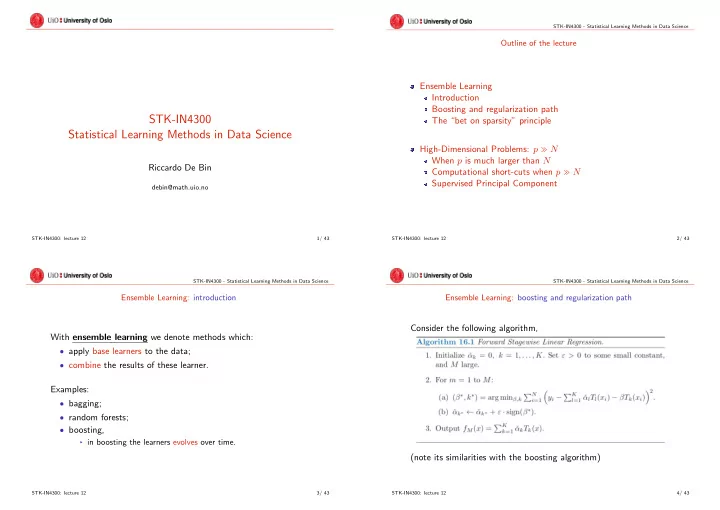

STK-IN4300 - Statistical Learning Methods in Data Science

Riccardo De Bin, debin@math.uio.no

STK-IN4300: lecture 12

Outline of the lecture

- Ensemble Learning
  - Introduction
  - Boosting and regularization path
  - The "bet on sparsity" principle
- High-Dimensional Problems: $p \gg N$
  - When $p$ is much larger than $N$
  - Computational short-cuts when $p \gg N$
  - Supervised Principal Component

Ensemble Learning: introduction

With ensemble learning we denote methods which:
- apply base learners to the data;
- combine the results of these learners.

Examples:
- bagging;
- random forests;
- boosting,
  - in boosting the learners evolve over time.

Ensemble Learning: boosting and regularization path

Consider the following algorithm (note its similarities with the boosting algorithm):

[Algorithm 16.1: forward stagewise linear regression]
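The forward stagewise idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the course's implementation: the base learners are taken to be the columns of $X$ ($T_k(x) = x_k$, an assumption), the loss is squared error, and the function name `forward_stagewise` is hypothetical. Each iteration fits every learner to the current residuals, keeps the best one and moves its coefficient by a small step $\varepsilon$ in the direction of the fitted coefficient.

```python
def forward_stagewise(X, y, eps=0.01, n_steps=1000):
    """Forward stagewise linear regression with base learners T_k(x) = x_k
    (an assumption of this sketch).

    X is a list of N rows with K floats each (no all-zero columns), y a list
    of N floats. Returns the K coefficients after at most n_steps updates.
    """
    n, n_learners = len(X), len(X[0])
    alpha = [0.0] * n_learners                 # step 1: alpha_k = 0 for all k
    resid = list(y)                            # y_i - sum_k alpha_k T_k(x_i)
    col_ss = [sum(row[k] ** 2 for row in X) for k in range(n_learners)]
    for _ in range(n_steps):
        # step 2(a): the least-squares fit of the residuals on T_k alone has
        # beta_k = <r, x_k> / ||x_k||^2 and lowers the RSS by <r, x_k>^2 / ||x_k||^2;
        # keep the learner k* with the largest decrease.
        best_k, best_beta, best_gain = 0, 0.0, -1.0
        for k in range(n_learners):
            inner = sum(resid[i] * X[i][k] for i in range(n))
            gain = inner ** 2 / col_ss[k]
            if gain > best_gain:
                best_k, best_beta, best_gain = k, inner / col_ss[k], gain
        if best_beta == 0.0:
            break                              # residuals orthogonal to every learner
        # step 2(b): move alpha_{k*} by eps in the direction of sign(beta*)
        step = eps if best_beta > 0 else -eps
        alpha[best_k] += step
        for i in range(n):
            resid[i] -= step * X[i][best_k]
    return alpha


X = [[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]]
y = [3.0, 1.0, -1.0, -3.0]                     # y = 2 * x_1 + 1 * x_2 exactly
print(forward_stagewise(X, y, eps=0.01, n_steps=50))    # early stop: second coefficient still 0
print(forward_stagewise(X, y, eps=0.01, n_steps=1000))  # close to the LS solution (2, 1)
```

Stopping after a few steps leaves some coefficients exactly at 0, while many steps bring the coefficients close to least squares; this is the behaviour compared with the lasso path below.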

In comparison with lasso:
- initialization ($\breve\alpha_k = 0$, $k = 1, \dots, K$) $\longleftrightarrow$ $\lambda = \infty$;
- for small values of $M$:
  - some $\breve\alpha_k$ are not updated $\longleftrightarrow$ coefficients "forced" to be 0;
  - $\breve\alpha_k^{[0]} \le \breve\alpha_k^{[M]} \le \breve\alpha_k^{[\infty]}$ $\longleftrightarrow$ shrinkage;
  - $M$ is inversely related to $\lambda$;
- for $M$ large enough ($M = \infty$ in boosting) and $K < N$, $\breve\alpha_k^{[M]} = \hat\alpha_k^{LS}$ $\longleftrightarrow$ $\lambda = 0$.

If
- all the base learners $T_k$ are mutually uncorrelated,

then
- for $\varepsilon \to 0$ and $M \to \infty$ such that $\varepsilon M \to t$, Algorithm 16.1 gives the lasso solutions with $t = \sum_k |\alpha_k|$.

In general, component-wise boosting and lasso do not provide the same solution:
- in practice, they are often similar in terms of prediction;
- for $\varepsilon$ ($\nu$) $\to 0$, boosting (and forward stagewise in general) tends to the path of the least angle regression algorithm;
- lasso can also be seen as a special case of least angle regression.

Consider 1000 Gaussian distributed variables:
- strongly correlated ($\rho = 0.95$) in blocks of 20;
- uncorrelated blocks;
- one variable with an effect on the outcome in each block;
- effects generated from a standard Gaussian.

Moreover:
- added Gaussian noise;
- noise-to-signal ratio = 0.72.
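The link between Algorithm 16.1 and the lasso can be checked numerically in the simplest uncorrelated case, orthonormal base learners. There the residual sum of squares is a constant plus $\sum_k (\alpha_k - \hat\alpha_k^{LS})^2$, and the lasso solution with bound $\sum_k |\alpha_k| \le t$ is the soft-thresholded least-squares estimate. The sketch below assumes orthonormal learners, works directly on the coefficients instead of the data, and uses hypothetical function names.

```python
import math


def stagewise_orthonormal(alpha_ls, eps, n_steps):
    """Forward stagewise when the base learners are orthonormal (assumption).

    With orthonormal T_k, the inner product of the residuals with T_k equals
    alpha_ls[k] - alpha[k], so each step can work directly on these gaps.
    """
    alpha = [0.0] * len(alpha_ls)
    for _ in range(n_steps):
        gaps = [a_ls - a for a_ls, a in zip(alpha_ls, alpha)]
        k = max(range(len(gaps)), key=lambda j: abs(gaps[j]))  # step 2(a)
        alpha[k] += eps if gaps[k] > 0 else -eps               # step 2(b)
    return alpha


def lasso_bound_orthonormal(alpha_ls, t, n_iter=200):
    """Lasso with bound sum_k |alpha_k| <= t for orthonormal learners:
    soft-thresholding of alpha_ls at a level gamma found by bisection."""
    def l1_norm_after(gamma):
        return sum(max(abs(a) - gamma, 0.0) for a in alpha_ls)

    lo, hi = 0.0, max(abs(a) for a in alpha_ls)
    for _ in range(n_iter):
        mid = 0.5 * (lo + hi)
        if l1_norm_after(mid) > t:
            lo = mid          # threshold too small: L1 norm still above t
        else:
            hi = mid
    gamma = 0.5 * (lo + hi)
    return [math.copysign(max(abs(a) - gamma, 0.0), a) for a in alpha_ls]


alpha_ls = [3.0, -2.0, 0.5]
eps, t = 0.001, 2.0
print(stagewise_orthonormal(alpha_ls, eps, round(t / eps)))  # eps * M = t
print(lasso_bound_orthonormal(alpha_ls, t))
```

With $\varepsilon M = t = 2$ both calls return approximately $(1.5, -0.5, 0)$: the stagewise path first moves the largest coefficient, then alternates between the two largest ones, which reproduces soft-thresholding at level 1.5.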

Ensemble Learning: the "bet on sparsity" principle

We consider:
- $L_1$-type penalty (shrinkage, variable selection);
- $L_2$-type penalty (shrinkage, computationally easy);
- boosting's stagewise forward strategy, which minimizes something close to an $L_1$-penalized loss function;
- step-by-step minimization.

Can we characterize situations where one is preferable to the other?

Consider the following framework:
- 50 observations;
- 300 independent Gaussian variables.

Three scenarios:
- all 300 variables are relevant;
- only 10 out of 300 variables are relevant;
- 30 out of 300 variables are relevant.

Outcome:
- regression (added standard Gaussian noise);
- classification (from an inverse-logit transformation of the linear predictor).
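The simulation framework above can be sketched as follows. The slide does not say how the non-zero coefficients are generated; drawing them from a standard Gaussian is an assumption here, as are the seed handling and the function names `simulate_sparsity_scenario` and `inv_logit`.

```python
import math
import random


def inv_logit(z):
    """Numerically stable inverse logit 1 / (1 + exp(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def simulate_sparsity_scenario(n_relevant, n=50, p=300, seed=0):
    """One data set: n observations of p independent standard Gaussian
    variables, of which only the first n_relevant have a non-zero coefficient."""
    rng = random.Random(seed)
    X = [[rng.gauss(0.0, 1.0) for _ in range(p)] for _ in range(n)]
    # assumption: non-zero coefficients drawn from a standard Gaussian
    beta = [rng.gauss(0.0, 1.0) if j < n_relevant else 0.0 for j in range(p)]
    eta = [sum(x * b for x, b in zip(row, beta)) for row in X]      # linear predictor
    y_reg = [e + rng.gauss(0.0, 1.0) for e in eta]                  # regression outcome
    y_cls = [1 if rng.random() < inv_logit(e) else 0 for e in eta]  # classification outcome
    return X, beta, y_reg, y_cls


for n_relevant in (300, 10, 30):   # the three scenarios
    X, beta, y_reg, y_cls = simulate_sparsity_scenario(n_relevant, seed=n_relevant)
    print(n_relevant, sum(1 for b in beta if b != 0.0), sum(y_cls))
```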

This means that:
- lasso performs better than ridge in sparse contexts;
- ridge gives better results if there are several relevant variables with small effects;
- anyway, in the dense case, the model does not explain much,
  - there are not enough data to correctly estimate many coefficients;

$\Downarrow$

"bet on sparsity" = "use a procedure that does well in sparse problems, since no procedure does well in dense problems"
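Why the two penalties behave differently is easiest to see with an orthonormal design ($X^TX = I$), where both estimators have closed forms: ridge, minimising $\|y - X\beta\|^2 + \lambda\|\beta\|_2^2$, rescales the least-squares estimate to $\hat\beta^{LS}/(1+\lambda)$, while lasso, minimising $\frac{1}{2}\|y - X\beta\|^2 + \lambda\|\beta\|_1$, soft-thresholds it to $\mathrm{sign}(\hat\beta^{LS})(|\hat\beta^{LS}| - \lambda)_+$. The sketch assumes this orthonormal setting; the function names and the numbers in the example are illustrative only.

```python
import math


def ridge_orthonormal(beta_ls, lam):
    """argmin_b ||y - X b||^2 + lam * ||b||_2^2 when X^T X = I."""
    return [b / (1.0 + lam) for b in beta_ls]


def lasso_orthonormal(beta_ls, lam):
    """argmin_b 0.5 * ||y - X b||^2 + lam * ||b||_1 when X^T X = I (soft-thresholding)."""
    out = []
    for b in beta_ls:
        shrunk = max(abs(b) - lam, 0.0)
        out.append(math.copysign(shrunk, b) if shrunk > 0.0 else 0.0)
    return out


beta_ls = [4.0, 0.3, -0.2, 0.1]   # one large and three small least-squares estimates
print(ridge_orthonormal(beta_ls, 1.0))
print(lasso_orthonormal(beta_ls, 0.5))
```

Lasso sets the small estimates exactly to zero and shifts the large one by $\lambda$, which suits a sparse truth; ridge keeps every variable and shrinks all of them proportionally, which suits many small effects.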

The degree of sparseness depends on:
- the unknown mechanism generating the data;
  - it depends on the number of relevant variables;
- the size of the training set;
  - larger sizes allow estimating denser models;
- the noise-to-signal ratio (NSR);
  - smaller NSR $\to$ denser models (same as before);
- the size of the dictionary;
  - more base learners, potentially sparser models.

High-Dimensional Problems: when $p$ is much larger than $N$

The case $p \gg N$ (number of variables much larger than the number of observations):
- very important in current applications,
  - e.g., in a common genetic study, $p \approx 23000$, $N \approx 100$;
- concerns about high variance and overfitting;
- highly regularized approaches are common:
  - lasso;
  - ridge;
  - boosting;
  - elastic-net;
  - ...

Consider the following example:
- $N = 100$;
- $p$ variables from standard Gaussians with pairwise correlation $\rho = 0.2$,
  (i) $p = 20$; (ii) $p = 100$; (iii) $p = 1000$;
- response from $Y = \sum_{j=1}^p X_j \beta_j + \sigma\varepsilon$;
- signal-to-noise ratio $\mathrm{Var}[E(Y \mid X)]/\sigma^2 = 2$;
- true $\beta$ from a standard Gaussian.

As a consequence, averaging over 100 replications:
- $p_0$, the number of significant $\beta$ (i.e., $\beta$ such that $|\hat\beta/\widehat{\mathrm{se}}| > 2$):
  (i) $p_0 = 9$; (ii) $p_0 = 33$; (iii) $p_0 = 331$.

Consider 3 values of the penalty $\lambda$:
- $\lambda = 0.001$, which corresponds to (i) d.o.f. $= 20$; (ii) d.o.f. $= 99$; (iii) d.o.f. $= 99$;
- $\lambda = 100$, which corresponds to (i) d.o.f. $= 9$; (ii) d.o.f. $= 35$; (iii) d.o.f. $= 87$;
- $\lambda = 1000$, which corresponds to (i) d.o.f. $= 2$; (ii) d.o.f. $= 7$; (iii) d.o.f. $= 43$.
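One way to generate data for the example above is sketched below. Assumptions: "$\rho = 0.2$" is read as a common pairwise correlation between all variables, obtained through a shared Gaussian factor, $X_j = \sqrt{\rho}\,Z + \sqrt{1-\rho}\,W_j$; then $\mathrm{Var}[E(Y \mid X)] = \beta^T\Sigma\beta = (1-\rho)\sum_j\beta_j^2 + \rho\left(\sum_j\beta_j\right)^2$, and $\sigma$ is chosen so that this variance divided by $\sigma^2$ equals 2. The function names are hypothetical.

```python
import math
import random


def signal_variance(beta, rho):
    """beta^T Sigma beta for Sigma with unit diagonal and constant off-diagonal rho."""
    return (1.0 - rho) * sum(b * b for b in beta) + rho * sum(beta) ** 2


def simulate_high_dim(p, n=100, rho=0.2, snr=2.0, seed=0):
    """Equicorrelated standard Gaussian predictors, Gaussian true beta and
    noise level sigma calibrated so that Var[E(Y|X)] / sigma^2 = snr."""
    rng = random.Random(seed)
    beta = [rng.gauss(0.0, 1.0) for _ in range(p)]
    sigma = math.sqrt(signal_variance(beta, rho) / snr)
    X, y = [], []
    for _ in range(n):
        z = rng.gauss(0.0, 1.0)   # shared factor: induces correlation rho between columns
        row = [math.sqrt(rho) * z + math.sqrt(1.0 - rho) * rng.gauss(0.0, 1.0)
               for _ in range(p)]
        X.append(row)
        y.append(sum(x * b for x, b in zip(row, beta)) + sigma * rng.gauss(0.0, 1.0))
    return X, y, beta, sigma


for p in (20, 100, 1000):   # the three cases (i), (ii), (iii)
    X, y, beta, sigma = simulate_high_dim(p, seed=p)
    print(p, round(sigma, 3))
```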

Remarks:
- with $p = 20$, ridge regression can find the relevant variables;
  - the covariance matrix can be estimated;
- moderate shrinkage works better in the middle case, in which we can find some non-zero effects;
- with $p = 1000$, there is no hope of finding the relevant variables, and it is better to shrink everything down;
  - there is no possibility to estimate the covariance matrix.

High-Dimensional Problems: computational short-cuts when $p \gg N$

Consider the singular-value decomposition of $X$,
$$X = UDV^T = RV^T,$$
where
- $V$ is a $p \times N$ matrix with orthonormal columns;
- $U$ is an $N \times N$ orthogonal matrix;
- $D$ is a diagonal matrix with elements $d_1 \ge d_2 \ge \dots \ge d_N \ge 0$;
- $R = UD$ is an $N \times N$ matrix with rows $r_i^T$.

Theorem (Hastie et al., 2009, page 660): Let $f^*(r_i) = \theta_0 + r_i^T\theta$ and consider the optimization problems:
$$(\hat\beta_0, \hat\beta) = \arg\min_{\beta_0,\, \beta \in \mathbb{R}^p} \sum_{i=1}^N L(y_i, \beta_0 + x_i^T\beta) + \lambda\beta^T\beta;$$
$$(\hat\theta_0, \hat\theta) = \arg\min_{\theta_0,\, \theta \in \mathbb{R}^N} \sum_{i=1}^N L(y_i, \theta_0 + r_i^T\theta) + \lambda\theta^T\theta.$$
Then $\hat\beta_0 = \hat\theta_0$ and $\hat\beta = V\hat\theta$.
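A small pure-Python check of the theorem for squared-error loss without intercept (i.e., ridge regression on centred data, an assumption of this sketch). Instead of the SVD, which would need a numerical library, it builds $X = RV^T$ from a Gram-Schmidt orthonormalisation of the rows of $X$; the argument behind the theorem only uses that $V$ has orthonormal columns spanning the rows of $X$ (any component of $\beta$ orthogonal to them leaves the fit unchanged and only increases the penalty), so this swapped-in factorisation should give the same $\hat\beta$. All function names are hypothetical.

```python
import random


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def ridge(X, y, lam):
    """Ridge without intercept: solve (X^T X + lam I) b = X^T y."""
    p = len(X[0])
    A = [[sum(row[j] * row[k] for row in X) + (lam if j == k else 0.0)
          for k in range(p)] for j in range(p)]
    rhs = [sum(row[j] * yi for row, yi in zip(X, y)) for j in range(p)]
    return solve(A, rhs)


def row_factorization(X):
    """Gram-Schmidt on the rows x_i of X (assumed linearly independent).

    Returns V as a list of N orthonormal p-vectors (the columns of V) and
    R = X V, so that X = R V^T.
    """
    V = []
    for row in X:
        v = list(row)
        for q in V:
            proj = sum(a * b for a, b in zip(v, q))
            v = [a - proj * b for a, b in zip(v, q)]
        norm = sum(a * a for a in v) ** 0.5
        V.append([a / norm for a in v])
    R = [[sum(a * b for a, b in zip(row, q)) for q in V] for row in X]
    return V, R


rng = random.Random(1)
N, p, lam = 5, 12, 1.5
X = [[rng.gauss(0.0, 1.0) for _ in range(p)] for _ in range(N)]
y = [rng.gauss(0.0, 1.0) for _ in range(N)]

beta_direct = ridge(X, y, lam)                 # p x p linear system
V, R = row_factorization(X)
theta = ridge(R, y, lam)                       # N x N linear system
beta_short = [sum(theta[j] * V[j][k] for j in range(N)) for k in range(p)]
print(max(abs(a - b) for a, b in zip(beta_direct, beta_short)))  # ~0 up to rounding
```

The fit on $R$ solves an $N \times N$ system instead of a $p \times p$ one, which is the computational point of the short-cut when $p \gg N$.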
