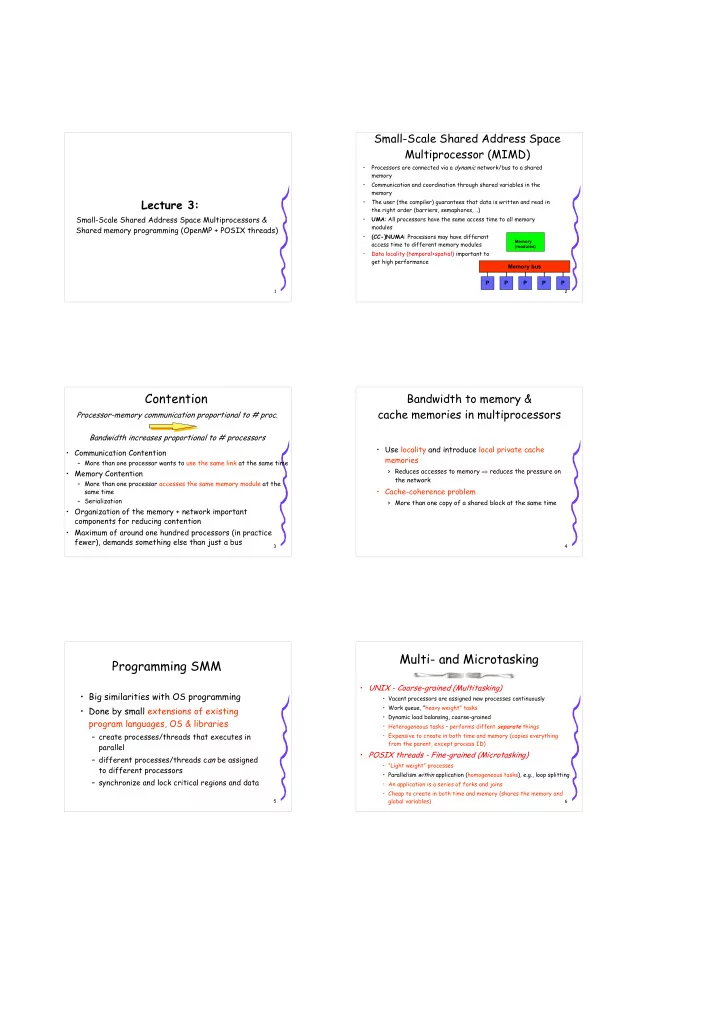

Small-Scale Shared Address Space Multiprocessor (MIMD) • Processors are connected via a dynamic network/bus to a shared memory • Communication and coordination through shared variables in the memory Lecture 3: • The user (the compiler) guarantees that data is written and read in the right order (barriers, semaphores, ..) Small-Scale Shared Address Space Multiprocessors & • UMA : All processors have the same access time to all memory modules Shared memory programming (OpenMP + POSIX threads) • (CC-)NUMA : Processors may have different Memory access time to different memory modules (modules) • Data locality (temporal+spatial) important to get high performance Memory bus P P P P P 1 2 Contention Bandwidth to memory & cache memories in multiprocessors Processor-memory communication proportional to # proc. Bandwidth increases proportional to # processors • Use locality and introduce local private cache • Communication Contention memories – More than one processor wants to use the same link at the same time > Reduces accesses to memory ⇒ reduces the pressure on • Memory Contention the network – More than one processor accesses the same memory module at the • Cache-coherence problem same time – Serialization > More than one copy of a shared block at the same time • Organization of the memory + network important components for reducing contention • Maximum of around one hundred processors (in practice fewer), demands something else than just a bus 3 4 Multi- and Microtasking Programming SMM • UNIX - Coarse-grained (Multitasking) • Big similarities with OS programming • Vacant processors are assigned new processes continuously • Work queue, ”heavy weight” tasks • Done by small extensions of existing • Dynamic load balansing, coarse-grained program languages, OS & libraries • Heterogeneous tasks – performs diffent separate things – create processes/threads that executes in • Expensive to create in both time and memory (copies everything from the parent, except process ID) parallel • POSIX threads - Fine-grained (Microtasking) – different processes/threads c an be assigned • ”Light weight” processes to different processors • Parallelism within application (homogeneous tasks), e.g., loop splitting – synchronize and lock critical regions and data • An application is a series of forks and joins • Cheap to create in both time and memory (shares the memory and global variables) 5 6

Introducing multiple threads UNIX Process & shared memory ● A thread is a sequens of instructions that • A UNIX-process has ● UNIX-process + shared executes within a program three segments: data segment ● A normal UNIX-process contains one single thread – text, executable code ● A fork() copies everything except the shared data – data • A process may contain more than one thread – stack, activation post + • Different parts of the same code may be executed at dynamical data the same time code code • Processes are • The threads form one process and share the address independent and have private private space, files, signal handling etc. For example: no shared addresses data data – When a thread opens a file, it is immediately code • fork() - accessable to all threads shared creates an data – When a thread writes to a global variable, it is data exact copy of the readable by all threads process (pid an • The threads have their own IP (instruction pointers) private private stack exception) and stacks stack stack 7 8 Coordinating parallel processes Execution of threads • Lock Master thread – Mechanism to maintain a policy for how shared Create worker threads with phtread_create() data may be read/written The worker threads starts • Determinism – The access order is the same for each execution The worker threads do their work • Nondeterminism – The access order is random The worker threads terminates • Indeterminism (not good...) – The result of nondeterminism which causes that Join the worker threads with phtread_join() different results can be received with different Master thread 9 10 executions. Safe, Live, and Fair Critical section, Road crossing Forced to wait for ever on • Safe Lock Yield Right unlimited number of cars Safe but – deterministic results (can lead to unfairness) unfair • Unfair Lock – a job may wait for ever while others get repeated Yield right + first in first out (FIFO) accesses d e a Safe, fair but not live All arrives at exactly the d l o c • Fair Lock k same time and everybody is forced to wait on each – all may get access in roughly the order they arrived + other ”neighbour to the right have priority” (may result in Yield right + FIFO + Priority deadlocks=”all waits for their right neighbour”) 1 2 Safe, fair and live • Live Lock 4 3 – prevents deadlocks 11 12

Example, Spin lock Unsafe Example, Spin lock Safe while C do; % spin while C is true Flag[me] := TRUE; % set my flag C := TRUE; % Lock (C),only while Flag[other] do; % Spin one access CR; % Critical sect. Flag[me] := False; % Clear lock CR; % Critical section C := FALSE; % unLock(C) • Safe – only one process may access CR; ● Unsafe • Not live – both may access critical section ● Race – risk for deadlock if all processes executes the – The processes competes for the CR first statement at the same time 13 14 Locks for simultaneous reading Example: Atomic test and set, • Queue Lock Safe and Live struct q_lock{ int head; • Atomic (uninterruptable operation) test int tasks[NPROCS]; } lock; and set lock void add_to_q(lock, myid) char *lock while (head_of_q(lock) != myid); CR; *lock = UNLOCKED void remove_from_q(lock, myid); • Safe, Live & Fair Atomic_test_and_set_lock(lock, LOCKED) – The queue assures that nobody has to wait % TRUE if lock already is LOCKED % FALSE if lock changes from UNLOCKED to LOCKED forever to access the CR • Drawback while Atomic_test_and_set_lock(lock, LOCK ); CR; – Spinning Atomic_test_and_set_lock(LOCK, UNLOCK ) – How to implement the queue? % Unlock 15 16 Multiple Readers Lock • Demands Shared memory programming - tools WriteQ ReadQ Access – Several readers may access the CR at Room the same time Waiting-room – When a writer arrives, no more readers are allowed to enter the Access Room •OpenMP – When readers inside the CR are finished the writer has exclusive right to the CR •POSIX threads • Waiting-room analogy – safe , only one Writer at the time is allowed into the Access Room – fair , when there is a Writer in the waiting-room, Readers in the waiting-room must let the Writer go first, except when there already is a Writer in the Access Room – fair , when there is a Reader in the waiting-room a Writer in the waiting-room must let the Reader go first, except when there already is a Reader in the Access Room – live , no deadlocks. Everyone inside the waiting-room are guaranteed access to the Access Room 17 18

OpenMP OpenMP • A portable fork-join parallel model for • Two kinds of parallelism: architectures with shared memory – Coarse-grained (task parallelism) • Portable • Split the program into segments (threads) that can be executed in parallel – Fortran 77 and C/C++ bindings • Implicit join at the end of the segment, or explicit – Many implementations, all work in the same way synchronization points (like barriers) (in theory at least) • E.g.: let two threads call different subroutines in parallel • Fork-join model – Fine-grained (loop parallelism) – Execution starts with one thread • Execute independent iterations of DO-loops in parallel • Several choices of splitting – Parallel regions forks new threads at entry • Data environments for both kinds: – The threads joins at thge end of the region – Shared data • Shared memory – Private data – (Almost all) memory accessable by all threads 19 20 Design of OpenMP “A flexible standard, easily implemented across different platforms” • Control structures – Minimalistic, for simplicity – PARALLEL, DO (for), SECTIONS, SINGLE, MASTER • Data environments – New access possibilities for forked threads – SHARED, PRIVATE, REDUCTION 22 Control structures I Design av OpenMP II • PARALLEL / END PARALLEL PARALLEL • Synchronization – Fork and join – Simple implicit synchronization at the end of control structures S1 S1 S1 – Number of threads does not change – Explicit synchronization for more complex patterns: within the region? BARRIER, CRITICAL, ATOMIC, ORDERED, FLUSH – SPMD-execution within the region S2 – Lock subroutines for fine-grained control • SINGLE / END SINGLE • Runtime environment and library – (Short) sequential section within a – Manages preferences for forking and scheduling S3 S3 S3 parallel region – E.g.: OMP_GET_THREAD_NUM(), OMP_SET_NUM_THREADS( intexpr) • MASTER / END MASTER • OpenMP may (i principal) be added to any computer language END – SINGLE on master processor (often 0) – In reality, Fortran och C (C++) C$OMP PARALLEL • OpenMP is mainly a collection of directive to the compiler #pragma omp parallel CALL S1() – In Fortran: structured comments interpreted by the compiler { C$OMP SINGLE S1(); CALL S2() C$OMP directive [ clause [,], clause [,],..] #pragma omp single C$OMP END SINGLE – In C: pragmas sending information to the compiler: { S2(); } CALL S3() #pragma omp directive [ clause [,], clause [,],..] S3(); C$OMP END PARALLEL 23 24 }

Recommend

More recommend