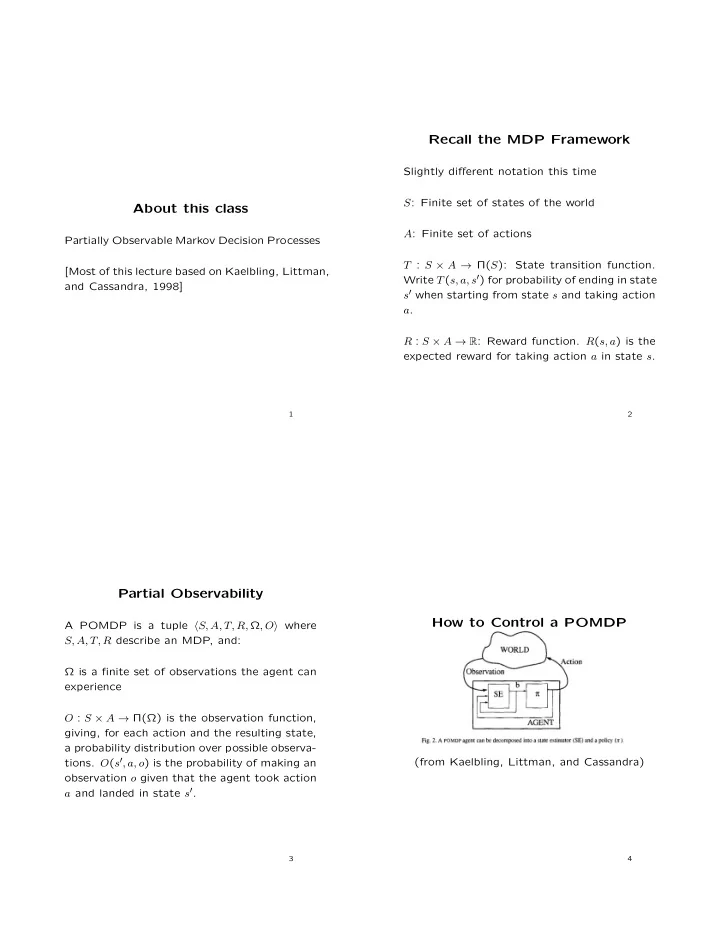

About this class

Partially Observable Markov Decision Processes

[Most of this lecture based on Kaelbling, Littman, and Cassandra, 1998]

Recall the MDP Framework

Slightly different notation this time:

$S$: finite set of states of the world.

$A$: finite set of actions.

$T : S \times A \to \Pi(S)$: state transition function, where $\Pi(S)$ denotes the set of probability distributions over $S$. Write $T(s, a, s')$ for the probability of ending in state $s'$ when starting from state $s$ and taking action $a$.

$R : S \times A \to \mathbb{R}$: reward function. $R(s, a)$ is the expected reward for taking action $a$ in state $s$.

Partial Observability

A POMDP is a tuple $\langle S, A, T, R, \Omega, O \rangle$ where $S, A, T, R$ describe an MDP, and:

$\Omega$ is a finite set of observations the agent can experience.

$O : S \times A \to \Pi(\Omega)$ is the observation function, giving, for each action and resulting state, a probability distribution over possible observations. $O(s', a, o)$ is the probability of making observation $o$ given that the agent took action $a$ and landed in state $s'$.

How to Control a POMDP

(Figure from Kaelbling, Littman, and Cassandra)
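To make the tuple concrete, here is a minimal Python sketch, assuming finite sets of string labels and representing $T$, $R$ and $O$ as plain functions. The class name `POMDP`, its `check` method, and the two-state "sense" model are hypothetical illustrations, not part of the lecture.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class POMDP:
    """A finite POMDP <S, A, T, R, Omega, O>: the four MDP pieces plus Omega and O."""
    states: Sequence[str]                      # S
    actions: Sequence[str]                     # A
    T: Callable[[str, str, str], float]        # T(s, a, s') = Pr(s' | s, a)
    R: Callable[[str, str], float]             # R(s, a) = expected immediate reward
    observations: Sequence[str]                # Omega
    O: Callable[[str, str, str], float]        # O(s', a, o) = Pr(o | took a, landed in s')

    def check(self, tol: float = 1e-9) -> None:
        """Raise ValueError unless every T(s, a, .) and O(s', a, .) sums to 1."""
        for a in self.actions:
            for s in self.states:
                total = sum(self.T(s, a, s2) for s2 in self.states)
                if abs(total - 1.0) > tol:
                    raise ValueError(f"T({s}, {a}, .) sums to {total}")
                # Here s plays the role of the landing state s' for O.
                total = sum(self.O(s, a, o) for o in self.observations)
                if abs(total - 1.0) > tol:
                    raise ValueError(f"O({s}, {a}, .) sums to {total}")


# Hypothetical two-state model: a static hidden state and one noisy "sense" action.
toy = POMDP(
    states=["A", "B"],
    actions=["sense"],
    T=lambda s, a, s2: 1.0 if s2 == s else 0.0,   # the world never changes
    R=lambda s, a: -1.0,                          # sensing has a small cost
    observations=["see-A", "see-B"],
    O=lambda s2, a, o: 0.85 if o == "see-" + s2 else 0.15,
)
toy.check()  # passes: every row is a probability distribution
```

Storing $T$ and $O$ as functions keeps the sketch close to the notation $T(s, a, s')$ and $O(s', a, o)$; for larger problems one would more likely tabulate them as arrays.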

State Estimation

The agent keeps an internal belief state that summarizes its previous experience. The state estimator SE updates this belief state based on the last action, the current observation, and the previous belief state.

What should the belief state be? The most probable state of the world? That could lead to big problems. Suppose I'm wrong? Suppose I'm uncertain and can gain value by taking an informative action?

Instead, we will use probability distributions over the true state of the world.

Example 1 (from Kaelbling, Littman, and Cassandra)

State 3 is a goal state, and the task is episodic. There are two actions, East and West, which succeed with probability 0.9; when they fail, the agent moves in the opposite direction. If no movement is possible, the agent stays in the same location.

Suppose the agent starts off equally likely to be in any of the three non-goal states, then takes action East twice and does not observe the goal state. How does its belief state evolve (initially, after the first East, after the second East)?

$$
\begin{aligned}
b_0 &= [0.333,\ 0.333,\ 0.000,\ 0.333] \\
b_1 &= [0.100,\ 0.450,\ 0.000,\ 0.450] \\
b_2 &= [0.100,\ 0.164,\ 0.000,\ 0.736]
\end{aligned}
$$

There will always be some probability mass on each of the non-goal states, since actions have some chance of failing.

Example 2 (from Littman, 2009)

Suppose that in either of the two Start states you can look up and make an observation that will be either Green or Red. This gives you the information you need to succeed, but if there is a small penalty for actions or some discounting, you would not necessarily look up if you acted on the most probable state. For example, if your initial belief state puts probability 1/4 on being in (rewardLeft, start) and 3/4 on being in (rewardRight, start), an agent that assumes it is in the most probable state, (rewardRight, start), sees no reason to pay for the observation.

This is an interesting connection, again, to the value of information and to exploration-exploitation.
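The belief evolution in Example 1 can be checked numerically with the Bayesian state-estimation update $b'(s') \propto O(s', a, o) \sum_{s} T(s, a, s')\, b(s)$. This sketch assumes 0-based state indices (so the goal, "state 3", is index 2) and that the agent's only observation is whether or not it is in the goal; the names `transition`, `observation` and `belief_update` are hypothetical.

```python
# Example 1 as a POMDP. Assumptions (not spelled out on the slide): states are
# 0-indexed, so the slide's "state 3" is index 2, and the only observation is
# whether or not the agent is in the goal state.
N_STATES = 4
GOAL_STATE = 2
EAST, WEST = "East", "West"
NOTHING, GOAL = "nothing", "goal"


def transition(s, a, s_next, p_success=0.9):
    """T(s, a, s'): move the intended way w.p. 0.9, the opposite way otherwise.

    A move that would leave the corridor keeps the agent where it is.
    """
    step = 1 if a == EAST else -1
    intended = min(max(s + step, 0), N_STATES - 1)
    opposite = min(max(s - step, 0), N_STATES - 1)
    prob = 0.0
    if s_next == intended:
        prob += p_success
    if s_next == opposite:
        prob += 1.0 - p_success
    return prob


def observation(s_next, a, o):
    """O(s', a, o): 'goal' is observed exactly when the agent lands in the goal."""
    return 1.0 if (o == GOAL) == (s_next == GOAL_STATE) else 0.0


def belief_update(b, a, o):
    """SE(b, a, o): return (b', Pr(o | a, b)), with b'(s') proportional to
    O(s', a, o) * sum_s T(s, a, s') b(s)."""
    unnormalized = [
        observation(s2, a, o) * sum(transition(s, a, s2) * b[s] for s in range(N_STATES))
        for s2 in range(N_STATES)
    ]
    pr_o = sum(unnormalized)  # the normalizing factor Pr(o | a, b)
    if pr_o == 0.0:
        raise ValueError("observation o is impossible after action a from belief b")
    return [x / pr_o for x in unnormalized], pr_o


# Start uniform over the three non-goal states, then move East twice,
# never observing the goal.
b = [1 / 3, 1 / 3, 0.0, 1 / 3]
beliefs = [b]
for _ in range(2):
    b, pr_o = belief_update(b, EAST, NOTHING)
    beliefs.append(b)

for belief in beliefs:
    print([round(x, 3) for x in belief])
# [0.333, 0.333, 0.0, 0.333]
# [0.1, 0.45, 0.0, 0.45]
# [0.1, 0.164, 0.0, 0.736]
```

The returned normalizer is $\Pr(o \mid a, b)$; for the first East step it equals 2/3, i.e. there is a 1/3 chance the agent would have seen the goal instead.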

Belief State Updates

Let $b(s)$ be the probability assigned to world state $s$ by belief state $b$. Then $\sum_{s \in S} b(s) = 1$. Given $b$, $a$, $o$, compute $b'$:

$$
\begin{aligned}
b'(s') &= \Pr(s' \mid o, a, b) \\
&= \frac{\Pr(o \mid s', a, b)\,\Pr(s' \mid a, b)}{\Pr(o \mid a, b)} \\
&= \frac{\Pr(o \mid s', a) \sum_{s \in S} \Pr(s' \mid a, b, s)\,\Pr(s \mid a, b)}{\Pr(o \mid a, b)} \\
&= \frac{O(s', a, o) \sum_{s \in S} T(s, a, s')\, b(s)}{\Pr(o \mid a, b)}
\end{aligned}
$$

The denominator is a normalizing factor, $\Pr(o \mid a, b) = \sum_{s' \in S} O(s', a, o) \sum_{s \in S} T(s, a, s')\, b(s)$, so this is all easy to compute.

The "Belief MDP"

State space: $B$, the set of belief states.

Action space: $A$, the same as in the original MDP.

Transition model:

$$\tau(b, a, b') = \Pr(b' \mid a, b) = \sum_{o \in \Omega} \Pr(b' \mid a, b, o)\,\Pr(o \mid a, b),$$

where $\Pr(b' \mid b, a, o)$ is 1 if $\mathrm{SE}(b, a, o) = b'$ and 0 otherwise.

Reward function: $\rho(b, a) = \sum_{s \in S} b(s)\, R(s, a)$.

Isn't this delusional? Am I getting rewarded just for believing I'm in a good state? It only works because my updates are based on a correct observation and transition model of the world, so the belief state represents the true probabilities of being in each world state.

The bad news: the belief MDP has a continuous state space (uncountably many belief states), and continuous-space MDPs are in general very hard to solve.

Policy Trees / Contingent Plans

Think about finite-horizon policies. We can't just have a mapping from states to actions in this case, because we don't know what state we're going to be in. Instead, we formulate contingent plans, or policy trees, that tell the agent what to do in case of each particular sequence of observations from a given start (world) state.

Let $a(p)$ be the action specified at the top of policy tree $p$, and $o_i(p)$ be the policy subtree induced from $p$ when observing $o_i$.

Suppose $p$ is a one-step policy tree. Then $V_p(s) = R(s, a(p))$.

Now, how do we go from the value functions constructed from policy trees of depth $t-1$ to value functions constructed from policy trees of depth $t$?
$$
\begin{aligned}
V_p(s) &= R(s, a(p)) + \gamma\,[\text{expected value of the future}] \\
&= R(s, a(p)) + \gamma \sum_{s' \in S} \Pr(s' \mid s, a(p)) \sum_{o_i \in \Omega} \Pr(o_i \mid s', a(p))\, V_{o_i(p)}(s') \\
&= R(s, a(p)) + \gamma \sum_{s' \in S} T(s, a(p), s') \sum_{o_i \in \Omega} O(s', a(p), o_i)\, V_{o_i(p)}(s')
\end{aligned}
$$

Since we won't actually know $s$, we need:

$$V_p(b) = \sum_{s \in S} b(s)\, V_p(s)$$
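The recursion for $V_p(s)$ translates directly into a recursive function over policy trees. This is a minimal sketch on a hypothetical two-state model (hidden state "A" or "B", a noisy "sense" action and two "go" actions; all numbers are made up), with a hypothetical `PolicyTree` class whose `children` map each observation $o_i$ to the subtree $o_i(p)$.

```python
from dataclasses import dataclass, field

# Hypothetical two-state model, for illustration only: "sense" costs 1 and
# reports the state correctly w.p. 0.85; "go-A"/"go-B" pay +10 if they match
# the state and -10 otherwise, then reset the state uniformly at random.
STATES = ["A", "B"]
OBSERVATIONS = ["see-A", "see-B"]
GAMMA = 0.95


def T(s, a, s2):
    if a == "sense":
        return 1.0 if s2 == s else 0.0  # sensing does not change the state
    return 0.5


def O(s2, a, o):
    if a == "sense":
        return 0.85 if o == "see-" + s2 else 0.15
    return 0.5  # observations carry no information after a "go" action


def R(s, a):
    if a == "sense":
        return -1.0
    return 10.0 if a == "go-" + s else -10.0


@dataclass
class PolicyTree:
    action: str                                   # a(p)
    children: dict = field(default_factory=dict)  # o_i -> o_i(p); empty for one step


def value(p, s):
    """V_p(s) = R(s, a(p)) + gamma * sum_s' T(s, a(p), s') sum_o O(s', a(p), o) V_o(p)(s')."""
    a = p.action
    v = R(s, a)
    if p.children:  # a one-step tree has no future term
        v += GAMMA * sum(
            T(s, a, s2) * sum(O(s2, a, o) * value(p.children[o], s2) for o in OBSERVATIONS)
            for s2 in STATES
        )
    return v


def value_at_belief(p, b):
    """V_p(b) = sum_s b(s) V_p(s)."""
    return sum(b[s] * value(p, s) for s in STATES)


# Two-step tree: sense, then go wherever the observation points.
sense_then_go = PolicyTree("sense", {"see-A": PolicyTree("go-A"), "see-B": PolicyTree("go-B")})
uniform = {"A": 0.5, "B": 0.5}
print(round(value_at_belief(sense_then_go, uniform), 4))       # 5.65
print(round(value_at_belief(PolicyTree("go-A"), uniform), 4))  # 0.0
```

Under a uniform belief, the tree that senses first is worth about 5.65, while committing to go-A immediately is worth 0: a small instance of the value of information.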

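Let $\alpha_p = \langle V_p(s_1), \ldots, V_p(s_n) \rangle$. Then $V_p(b) = b \cdot \alpha_p$.

The value of the optimal $t$-step policy starting from belief state $b$ is then given by

$$V_t(b) = \max_{p \in P} \; b \cdot \alpha_p,$$

where $P$ is the (finite) set of all $t$-step policy trees; the optimal policy executes a tree that attains this maximum.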
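Because each $V_p$ is linear in $b$, $V_t(b)$ can be computed by building the $\alpha$-vector of every $t$-step policy tree and taking the best dot product. The sketch below does this by brute-force enumeration on a hypothetical two-state model with made-up numbers; `alpha_vectors` and `optimal_value` are illustrative names, not from the lecture.

```python
from itertools import product

# Hypothetical two-state model (made-up numbers): "sense" costs 1 and is
# correct w.p. 0.85; "go-A"/"go-B" pay +10 / -10 and reset the state uniformly.
STATES = ["A", "B"]
ACTIONS = ["sense", "go-A", "go-B"]
OBSERVATIONS = ["see-A", "see-B"]
GAMMA = 0.95


def T(s, a, s2):
    if a == "sense":
        return 1.0 if s2 == s else 0.0
    return 0.5


def O(s2, a, o):
    if a == "sense":
        return 0.85 if o == "see-" + s2 else 0.15
    return 0.5


def R(s, a):
    if a == "sense":
        return -1.0
    return 10.0 if a == "go-" + s else -10.0


def alpha_vectors(t):
    """Enumerate (a(p), alpha_p) for every t-step policy tree p, with no pruning."""
    if t == 1:
        return [(a, [R(s, a) for s in STATES]) for a in ACTIONS]
    sub_alphas = [alpha for _, alpha in alpha_vectors(t - 1)]
    result = []
    for a in ACTIONS:
        # A t-step tree is a root action plus one (t-1)-step subtree per observation.
        for subtrees in product(sub_alphas, repeat=len(OBSERVATIONS)):
            alpha = [
                R(s, a) + GAMMA * sum(
                    T(s, a, s2) * sum(O(s2, a, o) * subtrees[k][j]
                                      for k, o in enumerate(OBSERVATIONS))
                    for j, s2 in enumerate(STATES)
                )
                for s in STATES
            ]
            result.append((a, alpha))
    return result


def optimal_value(b, t):
    """V_t(b) = max_p b . alpha_p, returned with the root action of a maximizing tree."""
    return max(
        (sum(bi * ai for bi, ai in zip(b, alpha)), a) for a, alpha in alpha_vectors(t)
    )


print(len(alpha_vectors(2)))        # 27 = 3 root actions x 3^2 subtree choices
v, a = optimal_value([0.5, 0.5], 2)
print(round(v, 4), a)               # 5.65 sense
```

Exhaustive enumeration is only viable for tiny problems: the number of $t$-step trees is $|A|^{(|\Omega|^t - 1)/(|\Omega| - 1)}$, so practical algorithms prune $\alpha$-vectors that are never maximal for any belief.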
