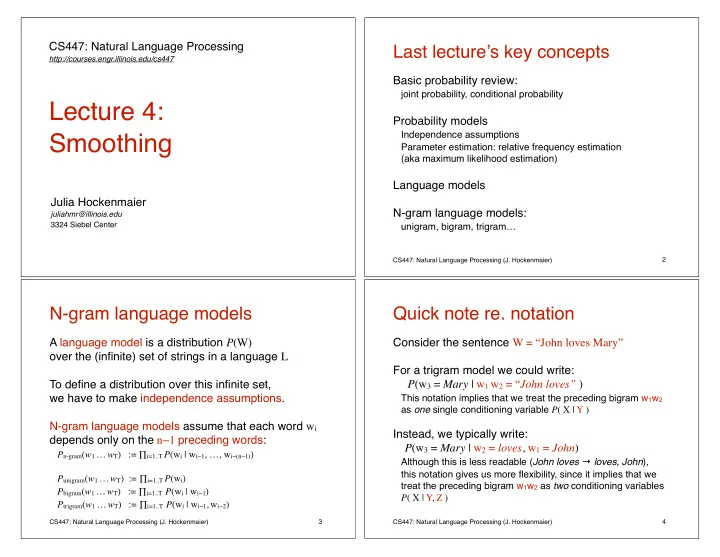

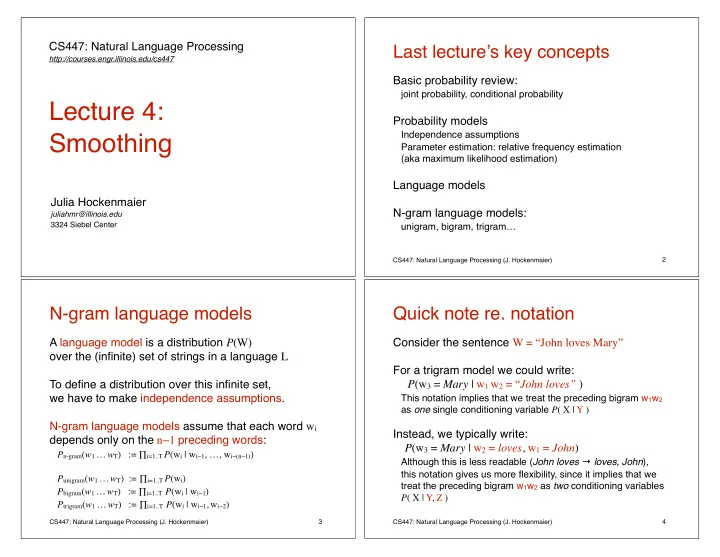

CS447: Natural Language Processing
http://courses.engr.illinois.edu/cs447

Lecture 4: Smoothing

Julia Hockenmaier
juliahmr@illinois.edu
3324 Siebel Center

Last lecture's key concepts

- Basic probability review: joint probability, conditional probability
- Probability models
  - Independence assumptions
  - Parameter estimation: relative frequency estimation (aka maximum likelihood estimation)
- Language models
  - N-gram language models: unigram, bigram, trigram…

N-gram language models

A language model is a distribution $P(W)$ over the (infinite) set of strings in a language $L$.

To define a distribution over this infinite set, we have to make independence assumptions.

N-gram language models assume that each word $w_i$ depends only on the $n-1$ preceding words:

$$P_{\text{n-gram}}(w_1 \ldots w_T) := \prod_{i=1}^{T} P(w_i \mid w_{i-1}, \ldots, w_{i-(n-1)})$$

$$P_{\text{unigram}}(w_1 \ldots w_T) := \prod_{i=1}^{T} P(w_i)$$

$$P_{\text{bigram}}(w_1 \ldots w_T) := \prod_{i=1}^{T} P(w_i \mid w_{i-1})$$

$$P_{\text{trigram}}(w_1 \ldots w_T) := \prod_{i=1}^{T} P(w_i \mid w_{i-1}, w_{i-2})$$

Quick note re. notation

Consider the sentence $W$ = "John loves Mary".

For a trigram model we could write:

$$P(w_3 = \text{Mary} \mid w_1 w_2 = \text{“John loves”})$$

This notation implies that we treat the preceding bigram $w_1 w_2$ as one single conditioning variable, $P(X \mid Y)$.

Instead, we typically write:

$$P(w_3 = \text{Mary} \mid w_2 = \text{loves}, w_1 = \text{John})$$

Although this is less readable (John loves → loves, John), this notation gives us more flexibility, since it implies that we treat the preceding bigram $w_1 w_2$ as two conditioning variables, $P(X \mid Y, Z)$.
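As a rough illustration of the n-gram factorization above, the following sketch lists the factors $P(w_i \mid w_{i-1}, \ldots, w_{i-(n-1)})$ for a sentence. The function name `ngram_factors` is hypothetical, and padding the start of the sentence with `<s>` is an assumption of this sketch. Each context is listed nearest word first, mirroring the "loves, John" notation.

```python
def ngram_factors(words, n, pad="<s>"):
    """Return one (word, context) pair per factor of the n-gram product.

    The context tuple lists the nearest preceding word first.
    Padding the sentence start with "<s>" is an assumption of this sketch.
    """
    padded = [pad] * (n - 1) + list(words)
    factors = []
    for i in range(n - 1, len(padded)):
        # The n-1 words before position i, reversed so the nearest comes first.
        context = tuple(reversed(padded[i - (n - 1):i]))
        factors.append((padded[i], context))
    return factors


for word, context in ngram_factors("John loves Mary".split(), 3):
    print(f"P({word} | {', '.join(context)})")
```

With `n=1` every context is empty, which corresponds to the unigram model.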

Parameter estimation (training)

Parameters: the actual probabilities (numbers)

$$P(w_i = \text{‘the’} \mid w_{i-1} = \text{‘on’}) = 0.0123$$

We need (a large amount of) text as training data to estimate the parameters of a language model.

The most basic estimation technique: relative frequency estimation (= counts)

$$P(w_i = \text{‘the’} \mid w_{i-1} = \text{‘on’}) = \frac{C(\text{‘on the’})}{C(\text{‘on’})}$$

This assigns all probability mass to events in the training corpus.

Also called Maximum Likelihood Estimation (MLE)

Testing: unseen events will occur

Recall the Shakespeare example:

- Only 30,000 word types occurred. Any word that does not occur in the training data has zero probability!
- Only 0.04% of all possible bigrams occurred. Any bigram that does not occur in the training data has zero probability!

Zipf's law: the long tail

How many words occur once, twice, 100 times, 1000 times?

How many words occur N times?

- A few words are very frequent
- Most words are very rare

The r-th most common word $w_r$ has $P(w_r) \propto 1/r$.

[Figure: word frequency (log scale) for English words sorted by frequency (log scale): $w_1$ = the, $w_2$ = to, …, $w_{5346}$ = computer, ...]

In natural language:
- A small number of events (e.g. words) occur with high frequency
- A large number of events occur with very low frequency

So….

… we can't actually evaluate our MLE models on unseen test data (or system output)…

… because both are likely to contain words/n-grams that these models assign zero probability to.

We need language models that assign some probability mass to unseen words and n-grams.
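The relative frequency estimate above can be sketched in a few lines. This is a minimal illustration on a made-up toy corpus, not the course's implementation; `bigram_mle` is a hypothetical name. One assumption to note: the denominator counts a word as a context only where another word follows it, so the estimates for each context sum to one.

```python
from collections import Counter


def bigram_mle(tokens):
    """Return prob(word, prev), estimated as C(prev word) / C(prev)."""
    bigram_counts = Counter(zip(tokens, tokens[1:]))
    # Assumption: count a token as a context only if a next word follows it.
    context_counts = Counter(tokens[:-1])

    def prob(word, prev):
        if context_counts[prev] == 0:
            # The MLE is undefined for an unseen context; this sketch returns 0.0.
            return 0.0
        return bigram_counts[(prev, word)] / context_counts[prev]

    return prob


# Toy corpus, invented for illustration only.
corpus = "the cat sat on the mat the dog sat on the rug".split()
p = bigram_mle(corpus)
print(p("the", "on"))  # 'on the' occurs every time 'on' occurs
print(p("dog", "on"))  # 'on dog' never occurs, so the estimate is zero
```

The second call shows the problem the slides describe: a perfectly plausible bigram gets zero probability simply because it was not in the training data.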

Today's lecture

How can we design language models* that can deal with previously unseen events?

*actually, probabilistic models in general

[Figure: MLE model: P(seen) = 1.0. Smoothed model: P(seen) < 1.0, P(unseen) > 0.0.]

Dealing with unseen events

Relative frequency estimation assigns all probability mass to events in the training corpus.

But we need to reserve some probability mass for events that don't occur in the training data.

Unseen events = new words, new bigrams

Important questions:
- What possible events are there?
- How much probability mass should they get?

What unseen events may occur?

Simple distributions: $P(X = x)$ (e.g. unigram models)

Possibility: The outcome $x$ has not occurred during training (i.e. is unknown):
- We need to reserve mass in $P(X)$ for $x$

Questions:
- What outcomes $x$ are possible?
- How much mass should they get?

What unseen events may occur?

Simple conditional distributions: $P(X = x \mid Y = y)$ (e.g. bigram models)

Case 1: The outcome $x$ has been seen, but not in the context of $Y = y$:
- We need to reserve mass in $P(X \mid Y = y)$ for $X = x$

Case 2: The conditioning variable $y$ has not been seen:
- We have no $P(X \mid Y = y)$ distribution.
- We need to drop the conditioning variable $Y = y$ and use $P(X)$ instead.
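To make the distinction between these kinds of unseen events concrete, here is a small sketch that labels a bigram event $(x \mid y)$ against a training corpus. The function name, the label strings, and the toy corpus are all hypothetical. The order of the checks (unknown outcome first, then unseen context) is a choice made for this sketch, since an event can fall under more than one description.

```python
def classify_bigram_event(x, y, tokens):
    """Label the event X = x given Y = y relative to the training tokens."""
    vocab = set(tokens)
    # A word counts as a seen context only if some word follows it in training.
    contexts = set(tokens[:-1])
    bigrams = set(zip(tokens, tokens[1:]))

    if x not in vocab:
        return "unknown outcome"      # x never occurred in training at all
    if y not in contexts:
        return "case 2"               # no P(X | Y = y) distribution exists
    if (y, x) not in bigrams:
        return "case 1"               # x seen, but never in the context of y
    return "seen"


# Toy corpus, invented for illustration only.
train = "the cat sat on the mat".split()
print(classify_bigram_event("sat", "cat", train))
print(classify_bigram_event("mat", "cat", train))
print(classify_bigram_event("sat", "dog", train))
print(classify_bigram_event("dog", "the", train))
```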

What unseen events may occur?

Complex conditional distributions (with multiple conditioning variables): $P(X = x \mid Y = y, Z = z)$ (e.g. trigram models)

Case 1: The outcome $X = x$ was seen, but not in the context of $(Y = y, Z = z)$:
- We need to reserve mass in $P(X \mid Y = y, Z = z)$

Case 2: The joint conditioning event $(Y = y, Z = z)$ hasn't been seen:
- We have no $P(X \mid Y = y, Z = z)$ distribution.
- But we can drop $z$ and use $P(X \mid Y = y)$ instead.

Examples

Training data: The wolf is an endangered species
Test data: The wallaby is endangered

Unigram: P(the) × P(wallaby) × P(is) × P(endangered)
Bigram: P(the | <s>) × P(wallaby | the) × P(is | wallaby) × P(endangered | is)
Trigram: P(the | <s>) × P(wallaby | the, <s>) × P(is | wallaby, the) × P(endangered | is, wallaby)

- Case 1: P(wallaby), P(wallaby | the), P(wallaby | the, <s>): What is the probability of an unknown word (in any context)?
- Case 2: P(endangered | is): What is the probability of a known word in a known context, if that word hasn't been seen in that context?
- Case 3: P(is | wallaby), P(is | wallaby, the), P(endangered | is, wallaby): What is the probability of a known word in an unseen context?

Smoothing: Reserving mass in $P(X)$ for unseen events

Dealing with unknown words: The simple solution

Training:
- Assume a fixed vocabulary (e.g. all words that occur at least twice (or n times) in the corpus)
- Replace all other words by a token <UNK>
- Estimate the model on this corpus.

Testing:
- Replace all unknown words by <UNK>
- Run the model.

This requires a large training corpus to work well.
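The `<UNK>` recipe above can be sketched as follows. This is a minimal illustration under stated assumptions: the helper names `build_vocab` and `replace_unknown` are hypothetical, the threshold of two occurrences is just the example value from the slide, and the training text is a made-up extension of the wolf example.

```python
from collections import Counter

UNK = "<UNK>"


def build_vocab(tokens, min_count=2):
    """Fixed vocabulary: all words that occur at least min_count times."""
    counts = Counter(tokens)
    return {w for w, c in counts.items() if c >= min_count}


def replace_unknown(tokens, vocab):
    """Replace every out-of-vocabulary word by the <UNK> token."""
    return [w if w in vocab else UNK for w in tokens]


# Training: fix the vocabulary, then map rare words to <UNK>
# before estimating the model on the resulting corpus.
train = "the wolf is an endangered species the wolf is rare".split()
vocab = build_vocab(train, min_count=2)
train_unk = replace_unknown(train, vocab)

# Testing: apply the same replacement with the same vocabulary.
test = "the wallaby is endangered".split()
print(replace_unknown(test, vocab))
```

Because rare training words are themselves mapped to `<UNK>`, the model sees `<UNK>` during estimation and can assign it non-zero probability at test time.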

Dealing with unknown events

Use a different estimation technique:
- Add-1 (Laplace) Smoothing
- Good-Turing Discounting

Idea: Replace the MLE estimate $P(w) = \frac{C(w)}{N}$

Combine a complex model with a simpler model:
- Linear Interpolation
- Modified Kneser-Ney smoothing

Idea: use bigram probabilities of $w_i$, $P(w_i \mid w_{i-1})$, to calculate trigram probabilities of $w_i$, $P(w_i \mid w_{i-n} \ldots w_{i-1})$

Add-1 (Laplace) smoothing

Assume every (seen or unseen) event occurred once more than it did in the training data.

Example: unigram probabilities

Estimated from a corpus with $N$ tokens and a vocabulary (number of word types) of size $V$.

$$P_{\text{MLE}}(w_i) = \frac{C(w_i)}{\sum_j C(w_j)} = \frac{C(w_i)}{N}$$

$$P_{\text{Add One}}(w_i) = \frac{C(w_i) + 1}{\sum_j \left( C(w_j) + 1 \right)} = \frac{C(w_i) + 1}{N + V}$$

Bigram counts

[Tables: original and smoothed bigram counts]

Bigram probabilities

[Tables: original and smoothed bigram probabilities]

Problem: Add-one moves too much probability mass from seen to unseen events!
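The add-one unigram estimate above, $(C(w_i) + 1) / (N + V)$, can be sketched as follows. The function name `add_one_unigram` and the three-word vocabulary are hypothetical, chosen only to keep the arithmetic easy to follow. The sketch assumes that every training token belongs to the given vocabulary.

```python
from collections import Counter


def add_one_unigram(tokens, vocab):
    """Add-one (Laplace) unigram estimates: (C(w) + 1) / (N + V).

    Assumes every token in `tokens` is a member of `vocab`.
    """
    counts = Counter(tokens)
    n = len(tokens)   # N: number of tokens
    v = len(vocab)    # V: number of word types
    return {w: (counts[w] + 1) / (n + v) for w in vocab}


# Toy data, invented for illustration: 'c' is in the vocabulary but unseen.
tokens = "a a a b".split()
vocab = {"a", "b", "c"}
smoothed = add_one_unigram(tokens, vocab)
for w in sorted(vocab):
    print(w, smoothed[w])
```

Here the MLE for `a` would be 3/4, while the add-one estimate is 4/7, and the unseen word `c` receives 1/7. Even on this tiny example a sizeable share of the mass moves away from the seen events, which is the problem noted above.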

