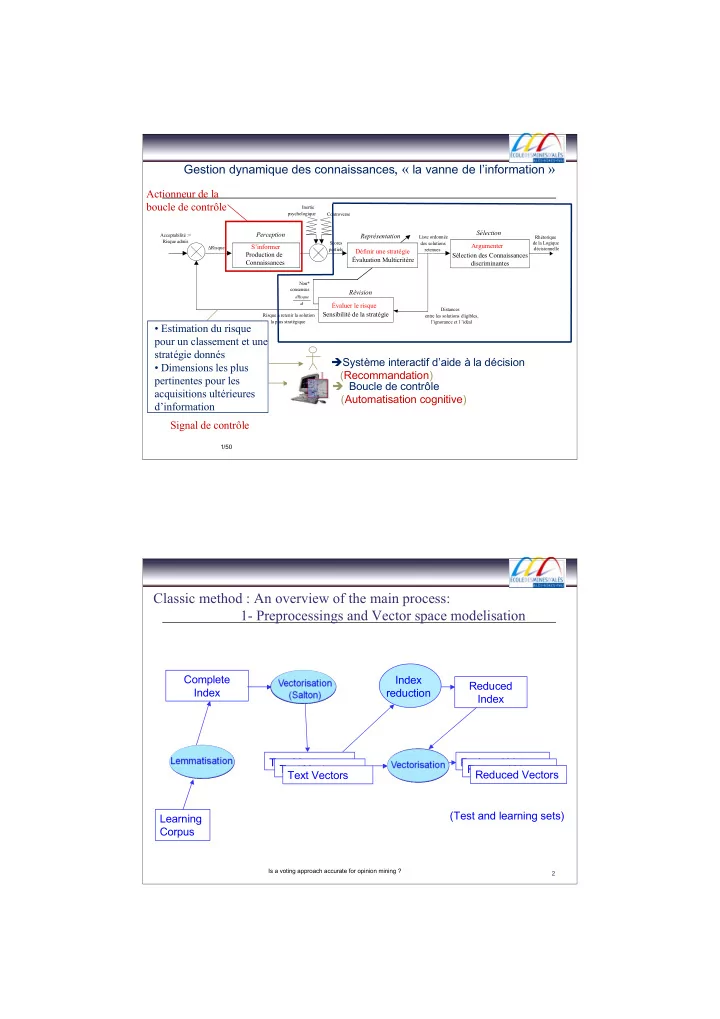

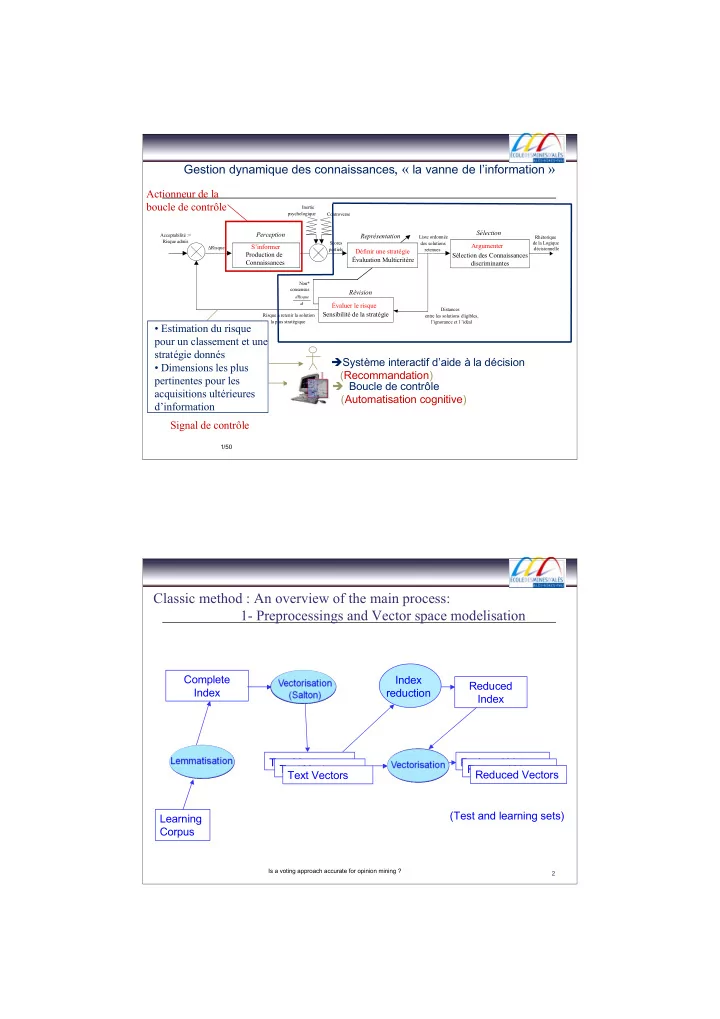

Diagram: Dynamic knowledge management, "the information valve", the actuator of the control loop of an interactive decision-support system (recommendation, cognitive automation). Labels include psychological inertia, controversy, selection, perception, acceptability := representation, ordered list, rhetoric, accepted risk, partial scores of the retained solutions, decision logic, gathering information, arguing, ∆ risk, defining a strategy, production and selection of knowledge, multicriteria evaluation, discriminant knowledge, non-consensus, revision (dRisk/dt), risk evaluation, strategy sensitivity, distances between ignorance and the ideal, and the risk of retaining the most strategic solution among the eligible ones. The control signal carries an estimate of the risk for a given ranking and strategy, and the most relevant dimensions for subsequent information acquisition.

Classic method: an overview of the main process, step 1: preprocessing and vector space modeling. Diagram: learning corpus (test and learning sets), complete index, index reduction, reduced index, text vectors, reduced vectors.

Is a voting approach accurate for opinion mining?

Classic method: an overview of the main process, step 2: modeling and classification. Diagram: the reduced vectors of the training corpus produce a classification model, which assigns a class to each reduced vector of the test corpus.

Automated extraction of CAs for multicriteria evaluation
Classic method: two classification steps. The two main phases are:
- extraction of value judgments and their assignment to an evaluation criterion;
- assignment of a score to each value judgment.

Diagram: extraction of CAs, intention evaluation of CAs, mapping, score assignment.

Web opinion mining: How to extract opinions from blogs?
Ali Harb, Michel Plantié, Gérard Dray, Mathieu Roche, François Trousset, Pascal Poncelet (LGI2P/EMA – LIRMM), Nîmes, France

Outline
- Introduction
- State of the art
- "AMOD" method
- Results on the movie domain
- Test on another domain
- Conclusion and future work

Introduction
Opinion detection on the Web: tools for expressing opinions are easier and easier to use, and we always have an opinion on everything! Analyzing expressed opinions answers questions such as: What about my public image? I want to buy a new camera! It is raining... What about seeing the Indiana Jones movie?

Introduction: the importance of the blog phenomenon
- More than 100 million blogs
- 120,000 blogs created every day
- 35% of Web users rely on opinions posted on blogs
- 44% of Web users have abandoned a purchase after seeing a negative opinion on a blog
- 91% think the Web has "great or medium importance" in forming their own opinion of a company's image

Sources: Médiamétrie, EIAA, Forrester, Technorati (August 2007), OpinionWay 2006.

Introduction: an example of a blog

Aggregation tools for opinions and journals

Classification vs. opinion classification
- Classification: classify documents by theme (sport, cinema, literature, …) by comparing words (bag-of-words approach). Example: Goal, Football, Transfer, Blues => SPORT class.
- Opinion classification: classify documents by their overall sentiment (positive vs. negative). This is harder than traditional classification: how do we capture a particular opinion?

State of the art: unsupervised opinion classification, Turney algorithm (2002)
Input: opinion documents
Output: classified documents (positive vs. negative)
1. Morphosyntactic analysis to identify phrases
2. Estimation of the Semantic Orientation (SO) of the extracted phrases
3. Assignment of the document to a class (positive vs. negative)
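The slide lists Turney's three steps without the scoring formula. In Turney's paper, step 2 estimates SO with pointwise mutual information computed from search-engine hit counts, using the NEAR operator and the reference words "excellent" and "poor". The function is a minimal sketch of that formula, assuming the caller already has the four hit counts; the counts in the usage line are invented, and the `smoothing` constant is an assumption added to avoid division by zero.

```python
import math


def semantic_orientation(near_excellent: float, near_poor: float,
                         hits_excellent: float, hits_poor: float,
                         smoothing: float = 0.01) -> float:
    """SO-PMI of one extracted phrase from search-engine hit counts:

    SO = log2( hits(phrase NEAR "excellent") * hits("poor")
               / (hits(phrase NEAR "poor") * hits("excellent")) )

    `smoothing` is added to both NEAR counts so that a phrase never seen
    near one of the reference words does not cause a division by zero.
    """
    numerator = (near_excellent + smoothing) * hits_poor
    denominator = (near_poor + smoothing) * hits_excellent
    return math.log2(numerator / denominator)


# Hypothetical counts: the phrase co-occurs about 4x more often with "excellent".
so = semantic_orientation(near_excellent=40, near_poor=10,
                          hits_excellent=1_000, hits_poor=1_000)
print(f"SO = {so:.3f}")  # a positive SO means the phrase leans positive
```

The ratio form avoids estimating absolute probabilities: the total number of indexed pages cancels out between the two PMI terms.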

State of the art: class assignment
The average SO of the document's phrases is computed: > 0 means positive, < 0 means negative.
Problems:
- Negative opinions are very often expressed more softly than positive ones.
- Adverbs may invert polarity.

State of the art: difficulties
Do we use the same adjectives in different domains? "The chair is comfortable" vs. "The movie is comfortable"?
The same adjective may have different meanings in different domains or contexts:
- The picture quality of this camera is high (positive)
- The ceilings of the building are high (neutral)
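The class-assignment rule on the state-of-the-art slide (average SO > 0 is positive, < 0 is negative) can be sketched as a small function. The slide does not say what happens when the average is exactly 0; returning "neutral" in that case is an assumption of this sketch, and the SO values in the usage lines are made up.

```python
from statistics import mean


def assign_class(phrase_sos: list[float]) -> str:
    """Class of a document from the semantic orientations (SO) of its phrases.

    Average SO > 0 -> "positive", < 0 -> "negative". Returning "neutral"
    for an average of exactly 0 is an assumption, not from the slide.
    """
    average = mean(phrase_sos)  # raises StatisticsError on an empty list
    if average > 0:
        return "positive"
    if average < 0:
        return "negative"
    return "neutral"


print(assign_class([1.5, -0.5, 0.2]))  # mean is about 0.4 -> positive
print(assign_class([-1.0, 0.5]))       # mean is -0.25 -> negative
```

Because a plain average treats every phrase alike, a softly worded negative review (small negative SOs) can easily be outweighed by a few strongly positive phrases, which is the first problem the slide points out.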

Automatic Mining of Opinion Dictionaries (AMOD) method

Input: PMots = {good, nice, excellent, positive, fortunate, correct, superior} (positive seed words), NMots = {bad, nasty, poor, negative, unfortunate, wrong, inferior} (negative seed words), and a domain
Output: new adjectives specific to that domain
1. Query a search engine
2. Search for significant adjectives
3. Eliminate "noisy" adjectives
4. Run the algorithm again to find new significant adjectives
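The four AMOD steps form a loop. The sketch shows only that control flow: `learn_pass` is a hypothetical callable standing in for steps 1 to 3, and the assumption that adjectives learned in one pass act as seeds for the next (step 4) is ours, in the spirit of the reinforcement experiment reported in the results. `toy_pass` is a made-up stand-in so the loop runs without a search engine.

```python
from typing import Callable, FrozenSet, Set, Tuple

# Hypothetical interface: one AMOD pass (steps 1-3) returning the positive
# and negative adjectives it considers significant and not noisy.
LearnPass = Callable[[FrozenSet[str], FrozenSet[str]], Tuple[Set[str], Set[str]]]


def amod(pos_seeds: Set[str], neg_seeds: Set[str],
         learn_pass: LearnPass, max_passes: int = 2) -> Tuple[Set[str], Set[str]]:
    pos, neg = set(pos_seeds), set(neg_seeds)
    for _ in range(max_passes):
        found_pos, found_neg = learn_pass(frozenset(pos), frozenset(neg))
        added_pos, added_neg = found_pos - pos, found_neg - neg
        if not added_pos and not added_neg:
            break  # nothing new was learned: stop early
        # Step 4 (assumed): learned adjectives become seeds for the next pass.
        pos |= added_pos
        neg |= added_neg
    return pos, neg


def toy_pass(pos: FrozenSet[str], neg: FrozenSet[str]) -> Tuple[Set[str], Set[str]]:
    # Made-up stand-in for steps 1-3.
    return ({"great"} if "good" in pos else set(),
            {"boring"} if "bad" in neg else set())


print(amod({"good"}, {"bad"}, toy_pass))
```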

AMOD: query a search engine
Example of a Google query for the word good: "+opinion +review +cinema +good -bad -nasty -poor -negative -unfortunate -wrong -inferior"

AMOD: query a search engine, results
300 documents are retrieved for each of the 7 positive seed words (e.g. good, nice) and for each of the 7 negative seed words (e.g. poor, bad): 7 × 300 + 7 × 300 = 4,200 documents.
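The query on the slide requires the context words, the domain and one seed word, and excludes every seed word of the opposite polarity. The sketch only builds the query strings with the slide's "+"/"-" syntax; sending them to a search engine is left out, and the `build_query` helper and its default arguments are illustrative, not part of the original method description.

```python
POSITIVE_SEEDS = ["good", "nice", "excellent", "positive",
                  "fortunate", "correct", "superior"]
NEGATIVE_SEEDS = ["bad", "nasty", "poor", "negative",
                  "unfortunate", "wrong", "inferior"]
DOCS_PER_SEED = 300  # per the slide: 300 documents retrieved per seed word


def build_query(seed: str, opposite_seeds: list[str],
                domain: str = "cinema",
                context: tuple[str, ...] = ("opinion", "review")) -> str:
    """Require the context words, the domain and the seed (+term) and
    exclude every seed of the opposite polarity (-term)."""
    required = [*context, domain, seed]
    return " ".join([f"+{t}" for t in required] +
                    [f"-{t}" for t in opposite_seeds])


queries = ([build_query(s, NEGATIVE_SEEDS) for s in POSITIVE_SEEDS] +
           [build_query(s, POSITIVE_SEEDS) for s in NEGATIVE_SEEDS])
print(queries[0])
print(len(queries) * DOCS_PER_SEED)  # 14 queries x 300 = 4200 documents
```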

AMOD: search for significant adjectives
Association rules are used, with:
- Item: an adjective
- Transaction: a sentence, restricted to a window of size WS (the slide shows windows WS1 and WS2 on the example below)

Example: "The movie is amazing, good acting, a lot of great action and the popcorn was delicious"

AMOD: eliminate "noisy" adjectives
Example rules:

| Positive | Negative |
|---|---|
| excellent, good → funny | bad, wrong → boring |
| nice, good → great | bad, wrong → commercial |
| nice → encouraging | poor → current |
| good → different | bad → different |

Common adjectives, learned for both polarities (here "different"), are suppressed.
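The rule-mining and common-adjective steps can be sketched as follows, assuming each transaction is already the set of adjectives found in one sentence window (POS tagging and windowing are not shown). The sketch enumerates every antecedent exhaustively, which is fine for small windows; a real miner would use an algorithm such as Apriori. The windows and the 25% support threshold are toy values (the slides report best results with a 1% support), and only support is computed since the slide mentions no confidence threshold.

```python
from collections import Counter
from itertools import combinations


def mine_rules(transactions, seeds, min_support):
    """Rules X -> y with X a non-empty set of seed adjectives and y a
    non-seed adjective, kept when support(X ∪ {y}) >= min_support.

    Each transaction is the set of adjectives in one sentence window."""
    counts = Counter()
    for adjectives in transactions:
        present_seeds = sorted(adjectives & seeds)
        candidates = adjectives - seeds
        for k in range(1, len(present_seeds) + 1):
            for antecedent in combinations(present_seeds, k):
                for y in candidates:
                    counts[(antecedent, y)] += 1
    n = len(transactions)
    return {rule: c / n for rule, c in counts.items() if c / n >= min_support}


def drop_common(pos_learned, neg_learned):
    """Remove adjectives learned for both polarities (e.g. 'different')."""
    common = pos_learned & neg_learned
    return pos_learned - common, neg_learned - common


# Toy windows, loosely modeled on the slide's example rules.
pos_windows = [{"good", "funny"}, {"excellent", "good", "funny"},
               {"nice", "different"}, {"good", "different"}]
neg_windows = [{"bad", "boring"}, {"bad", "different"}]

pos_rules = mine_rules(pos_windows, {"good", "nice", "excellent"}, 0.25)
neg_rules = mine_rules(neg_windows, {"bad", "wrong"}, 0.25)
pos_adj = {y for _, y in pos_rules}
neg_adj = {y for _, y in neg_rules}
print(drop_common(pos_adj, neg_adj))
```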

AMOD: eliminate "noisy" adjectives
How can useless adjectives be eliminated? With search-engine hit counts and one of the following measures:

- Mutual information:

$$PMI(w_1, w_2) = \log_2 \frac{p(w_1 \,\&\, w_2)}{p(w_1)\, p(w_2)}$$

- Cubic mutual information, which favors frequent co-occurrences:

$$IM3(w_1, w_2) = \log_2 \frac{nb(w_1 \,\&\, w_2)^3}{nb(w_1)\, nb(w_2)}$$

- AcroDefIM3, which adds domain information to IM3 (C represents the domain context):

$$AcroDef_{IM3}(w_1, w_2) = \log_2 \frac{hit((w_1 \,\&\, w_2) \text{ and } C)^3}{hit(w_1 \text{ and } C)\, hit(w_2 \text{ and } C)}$$

AMOD: eliminate "noisy" adjectives
The AcroDefIM3 measure is used to get rid of noisy adjectives:

| Positive | Negative |
|---|---|
| excellent, good: funny (20.49) | bad, wrong: boring (8.33) |
| nice, good: great (12.50) | bad, wrong: commercial (3.054) |
| nice: encouraging (0.001) | poor: current (0.0002) |
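The three measures translate directly into code. In this sketch the `HitCount` interface and the `FAKE_HITS` table are hypothetical stand-ins for a search engine, and only pairs (w1, w2) are handled: the slide's rules with two-word antecedents (e.g. excellent, good: funny) would need the antecedent words combined into the query. A joint count of 0 makes `math.log2` raise a domain error, so a real implementation would need a policy for adjectives never seen together; the slide does not state the cutoff used to reject an adjective.

```python
import math
from typing import Callable, FrozenSet

# Hypothetical hit-count function: number of pages containing all the terms.
HitCount = Callable[[FrozenSet[str]], int]


def pmi(p_joint: float, p1: float, p2: float) -> float:
    """PMI(w1, w2) = log2( p(w1 & w2) / (p(w1) * p(w2)) )"""
    return math.log2(p_joint / (p1 * p2))


def im3(n_joint: int, n1: int, n2: int) -> float:
    """IM3(w1, w2) = log2( nb(w1 & w2)^3 / (nb(w1) * nb(w2)) )"""
    return math.log2(n_joint ** 3 / (n1 * n2))


def acrodef_im3(hit: HitCount, w1: str, w2: str, context: FrozenSet[str]) -> float:
    """IM3 computed on hit counts restricted to the domain context C."""
    return im3(hit(frozenset({w1, w2}) | context),
               hit(frozenset({w1}) | context),
               hit(frozenset({w2}) | context))


# Made-up counts for the context C = {"cinema"}.
FAKE_HITS = {
    frozenset({"good", "funny", "cinema"}): 8,
    frozenset({"good", "cinema"}): 4,
    frozenset({"funny", "cinema"}): 2,
}
print(acrodef_im3(FAKE_HITS.__getitem__, "good", "funny", frozenset({"cinema"})))  # 6.0
```

Cubing the joint count is what makes IM3 favor frequent co-occurrences: doubling every count adds 1 to the IM3 score (a factor of 8 in the numerator against a factor of 4 in the denominator), whereas PMI would stay unchanged.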

State of the art: class assignment
- The movie is bad (negative)
- The movie is not bad (rather positive)
- The movie is not bad, there are a lot of funny moments

AMOD: class assignment
Use of adverbs that invert polarity:
1. The movie isn’t good
2. The movie isn’t amazing at all
3. The movie isn’t very good
4. The movie isn’t too good
5. The movie isn’t so good
6. The movie isn’t good enough
7. The movie is neither amazing nor funny

Sentences 1, 2, 7: polarity inversion; sentences 3, 4, 5: +30%; sentence 6: -30%.
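The slide states which outcome applies to each example sentence but not how the +30% and -30% adjustments are applied to a score, so this sketch only decides which rule fires. The negation words and the intensifier list (very, too, so) are taken from the examples; the tokenizer and the rule order are assumptions of the sketch.

```python
import re

NEGATIONS = {"not", "n't"}
INTENSIFIERS = {"very", "too", "so"}  # taken from examples 3, 4, 5


def tokenize(sentence: str) -> list[str]:
    # Normalize curly apostrophes and split "isn't" into "is" + "n't".
    s = sentence.lower().replace("\u2019", "'").replace("n't", " n't")
    return re.findall(r"[a-z']+", s)


def polarity_rule(sentence: str) -> str:
    """Which of the slide's rules applies to the sentence:
    'invert', '+30%', '-30%' or 'keep' (no negation found)."""
    tokens = tokenize(sentence)
    if "neither" in tokens and "nor" in tokens:
        return "invert"                     # case 7
    if not NEGATIONS & set(tokens):
        return "keep"
    if "enough" in tokens:
        return "-30%"                       # case 6
    if any(a in NEGATIONS and b in INTENSIFIERS
           for a, b in zip(tokens, tokens[1:])):
        return "+30%"                       # cases 3, 4, 5
    return "invert"                         # cases 1, 2


EXAMPLES = [
    "The movie isn't good",
    "The movie isn't amazing at all",
    "The movie isn't very good",
    "The movie isn't too good",
    "The movie isn't so good",
    "The movie isn't good enough",
    "The movie is neither amazing nor funny",
]
for i, sentence in enumerate(EXAMPLES, start=1):
    print(i, polarity_rule(sentence))
```

Checking "enough" before the intensifier test keeps a sentence such as "isn't good enough" out of the +30% group even if it also contained "so" or "very".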

Results on the movie domain

Experiments on the movie domain
- Learning phase: blogsearch.google.fr
- Test: Movie Review Data (positive and negative reviews from the Internet Movie Database)
- The two data sets are very different (blogs vs. journalists)

Classification with the seed lists only (PL, NL: number of words in the positive and negative lists):

| Test documents | Result | PL | NL |
|---|---|---|---|
| Positives | 66.9% | 7 | 7 |
| Negatives | 30.49% | 7 | 7 |

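Classification with learned adjectives
WS-S: window size and support value; best results with WS = 1 and support = 1%. PL, NL: sizes of the positive and negative lists (seeds + learned adjectives).

| Test documents | WS-S | Result | PL | NL |
|---|---|---|---|---|
| Positives | 1-1% | 67.2% | 7+15 | 7+20 |
| Negatives | 1-1% | 39.2% | 7+15 | 7+20 |

Learned adjectives, AcroDef, reinforcement
Learned adjectives and AcroDefIM3:

| Test documents | WS-S | Result | PL | NL |
|---|---|---|---|---|
| Positives | 1-1% | 75.9% | 7+11 | 7+11 |
| Negatives | 1-1% | 46.7% | 7+11 | 7+11 |

Reinforcement (a learned word becomes a seed word):

| Test documents | WS-S | Result | PL | NL |
|---|---|---|---|---|
| Positives | 1-1% | 82.6% | 7+11 | 7+11 |
| Negatives | 1-1% | 52.4% | 7+11 | 7+11 |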

Influence of the learning set size
Relation between corpus size and number of learned adjectives (plot: number of learned adjectives vs. size of the learning set for each seed word; annotation: "From 250 documents").

Comparison with a classic method
- Precision: the proportion of relevant documents among all documents found (relevant or not)
- Recall: the proportion of relevant documents found among all relevant documents in the knowledge base or corpus
- F-score: $F = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$

| Method | F-score (positives) | F-score (negatives) |
|---|---|---|
| Classic | 60.5% | 60.9% |
| AMOD | 71.73% | 62.2% |
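The evaluation measures can be computed from three counts. Without the factor 2, the F-score could never exceed 50% (for precision and recall at most 1), which is incompatible with the 71.73% reported for AMOD. The counts in the usage line are invented.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """tp: relevant documents found; fp: documents found that are not relevant;
    fn: relevant documents that were not found."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return precision, recall, f1


# Hypothetical counts for one class.
p, r, f = precision_recall_f1(tp=6, fp=2, fn=4)
print(f"P={p:.2f} R={r:.2f} F={f:.2f}")  # P=0.75 R=0.60 F=0.67
```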
