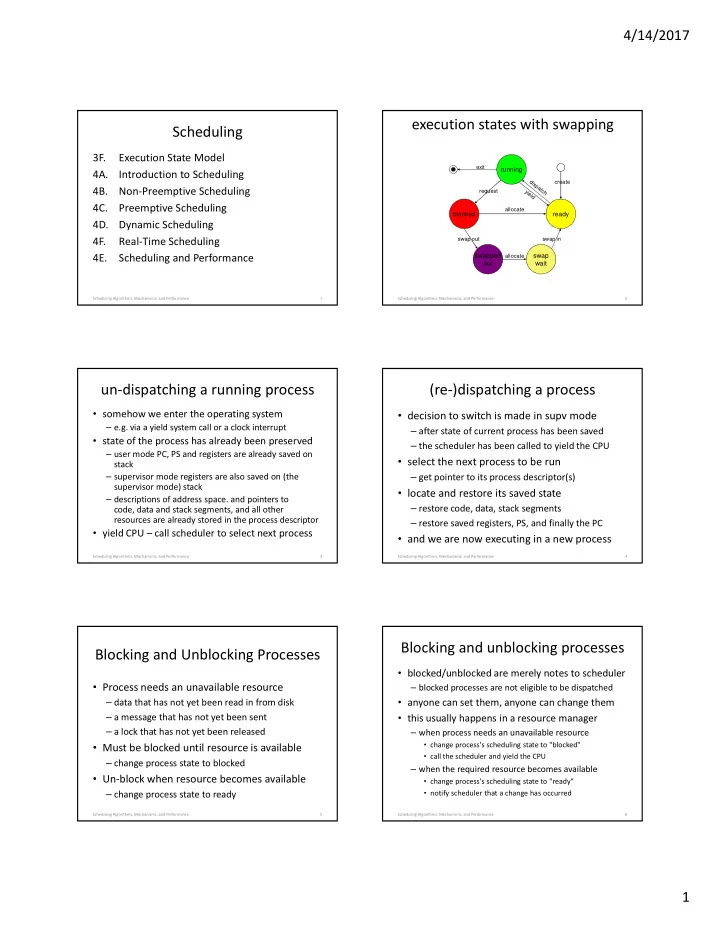

4/14/2017

Scheduling Algorithms, Mechanisms, and Performance

Scheduling
3F. Execution State Model
4A. Introduction to Scheduling
4B. Non-Preemptive Scheduling
4C. Preemptive Scheduling
4D. Dynamic Scheduling
4E. Scheduling and Performance
4F. Real-Time Scheduling

[Figure: execution states with swapping. States: running, ready, blocked, swapped out, swap wait. Transitions: create, exit, request, allocate, swap out, swap in.]

Un-dispatching a running process
• somehow we enter the operating system
  – e.g. via a yield system call or a clock interrupt
• state of the process has already been preserved
  – user-mode PC, PS and registers are already saved on the stack
  – supervisor-mode registers are also saved on the (supervisor-mode) stack
  – descriptions of the address space, and pointers to code, data and stack segments and all other resources, are already stored in the process descriptor
• yield the CPU
  – call the scheduler to select the next process

(Re-)dispatching a process
• decision to switch is made in supervisor mode
  – after the state of the current process has been saved
  – the scheduler has been called to yield the CPU
• select the next process to be run
  – get a pointer to its process descriptor(s)
• locate and restore its saved state
  – restore code, data and stack segments
  – restore saved registers, PS, and finally the PC
• and we are now executing in a new process

Blocking and unblocking processes
• blocked/unblocked are merely notes to the scheduler
  – blocked processes are not eligible to be dispatched
  – anyone can set them, anyone can change them
• this usually happens in a resource manager
  – when a process needs an unavailable resource
    • change the process's scheduling state to "blocked"
    • call the scheduler and yield the CPU
  – when the required resource becomes available
    • change the process's scheduling state to "ready"
    • notify the scheduler that a change has occurred

Blocking and Unblocking Processes
• Process needs an unavailable resource
  – data that has not yet been read in from disk
  – a message that has not yet been sent
  – a lock that has not yet been released
• Must be blocked until the resource is available
  – change process state to blocked
• Un-block when the resource becomes available
  – change process state to ready
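The two dispatching slides can be illustrated with a toy simulation. This is a minimal sketch, not a real kernel: `ProcessDescriptor`, `CPU` and `Scheduler` are hypothetical names, the CPU's registers are just a Python dict, and for simplicity the registers are copied into the descriptor instead of onto the user-mode and supervisor-mode stacks as the slides describe. The PC is restored last, because loading it is what resumes the process.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class ProcessDescriptor:
    """Hypothetical process descriptor holding a process's saved CPU state."""
    pid: int
    saved_regs: dict = field(default_factory=dict)  # PC, PS, general registers
    state: str = "ready"


class CPU:
    """Toy CPU: its registers are just a dict."""
    def __init__(self):
        self.regs = {"PC": 0, "PS": 0, "R0": 0}


class Scheduler:
    def __init__(self, cpu):
        self.cpu = cpu
        self.ready = deque()   # ready queue of descriptors
        self.current = None    # descriptor of the running process

    def undispatch(self):
        """Preserve the running process's state and return it to the ready queue."""
        proc = self.current
        if proc is None:
            return
        proc.saved_regs = dict(self.cpu.regs)   # copy the registers, do not alias them
        proc.state = "ready"
        self.ready.append(proc)
        self.current = None

    def dispatch(self):
        """Select the next ready process and restore its saved state."""
        proc = self.ready.popleft()
        for name, value in proc.saved_regs.items():
            if name != "PC":
                self.cpu.regs[name] = value
        # Restore the PC last: loading it is what resumes the process.
        self.cpu.regs["PC"] = proc.saved_regs.get("PC", 0)
        proc.state = "running"
        self.current = proc
        return proc


if __name__ == "__main__":
    cpu = CPU()
    sched = Scheduler(cpu)
    a = ProcessDescriptor(1, {"PC": 100, "PS": 0, "R0": 7})
    b = ProcessDescriptor(2, {"PC": 200, "PS": 0, "R0": 9})
    sched.ready.extend([a, b])
    sched.dispatch()        # A is running
    cpu.regs["PC"] = 104    # A executes a few instructions
    sched.undispatch()      # A yields (or a clock interrupt fires)
    sched.dispatch()        # B is running
    print(cpu.regs["PC"], a.saved_regs["PC"])  # 200 104
```

Because A's registers were saved in its descriptor, the next `dispatch()` of A would resume it at PC 104.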

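The blocking and unblocking slides describe a resource manager that does nothing more than update scheduling states and the ready queue. A minimal sketch, assuming the resource is a single lock; `Process`, `Scheduler` and `LockManager` are hypothetical names, and the caller of `acquire` is assumed to yield the CPU whenever it returns `False`.

```python
from collections import deque


class Process:
    def __init__(self, name):
        self.name = name
        self.state = "ready"   # "ready", "running" or "blocked"


class Scheduler:
    """Only processes on the ready queue are eligible to be dispatched."""
    def __init__(self):
        self.ready = deque()

    def make_ready(self, proc):
        proc.state = "ready"
        self.ready.append(proc)


class LockManager:
    """Hypothetical resource manager for one lock."""
    def __init__(self, scheduler):
        self.sched = scheduler
        self.holder = None
        self.waiters = deque()

    def acquire(self, proc):
        """Grant the lock, or block proc; on False the caller must yield the CPU."""
        if self.holder is None:
            self.holder = proc
            return True
        proc.state = "blocked"          # a note to the scheduler: do not dispatch
        self.waiters.append(proc)
        return False

    def release(self, proc):
        """Pass the lock to the oldest waiter and make that waiter ready."""
        if self.holder is not proc:
            raise RuntimeError("release by a process that does not hold the lock")
        if self.waiters:
            nxt = self.waiters.popleft()
            self.holder = nxt               # resource granted
            self.sched.make_ready(nxt)      # state -> ready, scheduler notified
        else:
            self.holder = None


if __name__ == "__main__":
    sched = Scheduler()
    lock = LockManager(sched)
    p, q = Process("p"), Process("q")
    lock.acquire(p)                    # granted
    lock.acquire(q)                    # q is blocked
    print(q.state, len(sched.ready))   # blocked 0
    lock.release(p)                    # q gets the lock and becomes ready
    print(q.state, lock.holder.name)   # ready q
```

Handing the lock directly to the first waiter, rather than letting every waiter race for it, is one design choice among several; it keeps the sketch short and avoids waking processes that would only block again.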
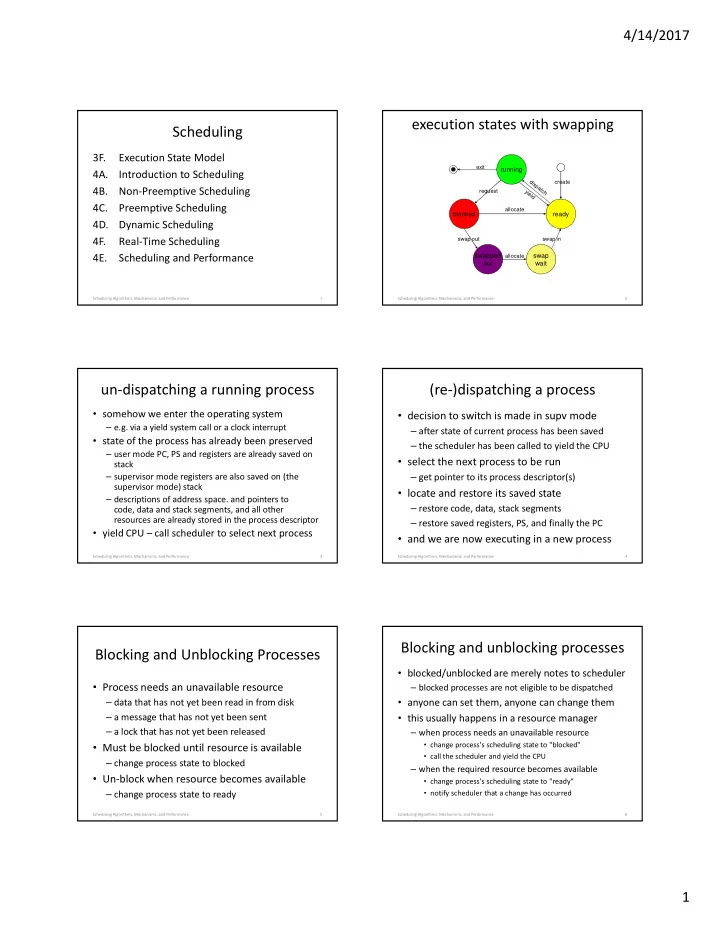

Primary and Secondary Storage
• primary = main (executable) memory
  – primary storage is expensive and very limited
  – only processes in primary storage can be run
• secondary = non-executable (e.g. disk)
  – blocked processes can be moved to secondary storage
  – swap out code, data, stack and non-resident context
  – make room in primary for other "ready" processes
• returning to primary memory
  – process is copied back when it becomes unblocked

Why we swap
• Make the best use of limited memory
  – a process can only execute if it is in memory
  – max # of processes limited by memory size
  – if it isn't READY, it doesn't need to be in memory
• Improve CPU utilization
  – when there are no READY processes, the CPU is idle
  – idle CPU time is wasted, reducing throughput
  – we need READY processes in memory
• Swapping takes time and consumes I/O
  – so we want to do it as little as possible

Swapping Out
• Process' state is in main memory
  – code and data segments
  – non-resident process descriptor
• Copy them out to secondary storage
  – if we are lucky, some may still be there
• Update resident process descriptor
  – process is no longer in memory
  – pointer to location on the secondary storage device
• Freed memory is available for other processes

Swapping Back In
• Re-allocate memory to contain the process
  – code and data segments, non-resident process descriptor
• Read that data back from secondary storage
• Change process state back to Ready
• What about the state of the computations?
  – saved registers are on the stack
  – user-mode stack is in the saved data segments
  – supervisor-mode stack is in the non-resident descriptor
• This involves a lot of time and I/O

What is CPU Scheduling?
• Choosing which ready process to run next
• Goals:
  – keeping the CPU productively occupied
  – meeting the user's performance expectations
[Figure: scheduling flow with a ready queue, dispatcher, context switcher and CPU; labels: new process, yield (or preemption), resource request, resource manager, resource granted.]

Goals and Metrics
• goals should be quantitative and measurable
  – if something is important, it must be measurable
  – if we want "goodness" we must be able to quantify it
  – you cannot optimize what you do not measure
• metrics: the way and units in which we measure
  – choose a characteristic to be measured
    • it must correlate well with goodness/badness of service
    • it must be a characteristic we can measure or compute
  – find a unit to quantify that characteristic
  – define a process for measuring the characteristic
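The Swapping Out and Swapping Back In slides can be sketched as a toy swapper. Assumptions: primary and secondary storage are modeled as dictionaries, a process image is a dict with a `size` in abstract memory units (plus anything else it carries, such as saved registers), and `ResidentDescriptor`, `Swapper`, `load`, `swap_out` and `swap_in` are hypothetical names. Because the saved registers and stacks travel inside the image, restoring the state of the computation needs no extra step. Unlike the slide's "if we are lucky" case, this sketch always copies the whole image out.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class ResidentDescriptor:
    """Hypothetical resident part of a process descriptor (always stays in memory)."""
    pid: int
    state: str = "ready"                 # "ready", "running", "blocked"
    in_memory: bool = True
    swap_slot: Optional[int] = None      # location of the image on secondary storage


class Swapper:
    """Toy swapper: memory and disk are dicts, sizes are abstract units."""

    def __init__(self, memory_size: int):
        self.free = memory_size
        self.memory: Dict[int, dict] = {}   # pid -> image in primary storage
        self.disk: Dict[int, dict] = {}     # swap slot -> image on secondary storage
        self._next_slot = 0

    def load(self, desc: ResidentDescriptor, image: dict) -> None:
        """Allocate primary storage for an image (code, data, stack, non-resident descriptor)."""
        if image["size"] > self.free:
            raise MemoryError("not enough primary storage")
        self.free -= image["size"]
        self.memory[desc.pid] = image

    def swap_out(self, desc: ResidentDescriptor) -> None:
        if desc.state == "running":
            raise ValueError("cannot swap out the running process")
        image = self.memory.pop(desc.pid)
        slot = self._next_slot
        self._next_slot += 1
        self.disk[slot] = image             # copy out to secondary storage
        desc.in_memory = False              # update the resident descriptor
        desc.swap_slot = slot
        self.free += image["size"]          # freed memory is available to others

    def swap_in(self, desc: ResidentDescriptor) -> None:
        image = self.disk[desc.swap_slot]
        self.load(desc, image)              # re-allocate memory, read the image back
        del self.disk[desc.swap_slot]
        desc.in_memory = True
        desc.swap_slot = None
        desc.state = "ready"                # saved registers came back with the image


if __name__ == "__main__":
    sw = Swapper(memory_size=100)
    a = ResidentDescriptor(pid=1, state="blocked")
    sw.load(a, {"size": 60, "regs": {"PC": 42}})
    sw.swap_out(a)        # a is blocked, so it need not occupy memory
    print(sw.free, a.in_memory, a.swap_slot)        # 100 False 0
    sw.swap_in(a)         # a became unblocked: bring it back
    print(sw.free, a.state, sw.memory[1]["regs"])   # 40 ready {'PC': 42}
```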

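Following the Goals and Metrics recipe (pick a characteristic, a unit, and a measurement process), here is a minimal sketch that measures time spent on the ready queue, in milliseconds, by timestamping each process when it is enqueued and again when it is dispatched. `MeasuredReadyQueue` and `FakeClock` are hypothetical names; the clock is injectable so the example can be deterministic, and by default it uses `time.monotonic()` scaled to milliseconds.

```python
import time
from collections import deque
from statistics import mean


class MeasuredReadyQueue:
    """FIFO ready queue instrumented to measure time spent waiting on it.

    characteristic: time on the ready queue; unit: milliseconds;
    measurement: a timestamp at enqueue and another at dispatch.
    """

    def __init__(self, clock=None):
        # clock() must return the current time in milliseconds
        self.clock = clock if clock is not None else (lambda: time.monotonic() * 1000.0)
        self.queue = deque()
        self.waits_ms = []   # one sample per dispatch

    def enqueue(self, proc):
        self.queue.append((proc, self.clock()))

    def dispatch(self):
        proc, enqueued_at = self.queue.popleft()
        self.waits_ms.append(self.clock() - enqueued_at)
        return proc

    def mean_wait_ms(self):
        return mean(self.waits_ms) if self.waits_ms else 0.0


class FakeClock:
    """Deterministic clock for the example; advance `now` by hand."""
    def __init__(self):
        self.now = 0

    def __call__(self):
        return self.now


if __name__ == "__main__":
    clock = FakeClock()
    rq = MeasuredReadyQueue(clock)
    rq.enqueue("A")
    rq.enqueue("B")
    clock.now = 5
    rq.dispatch()            # A waited 5 ms
    clock.now = 15
    rq.dispatch()            # B waited 15 ms
    print(rq.waits_ms, rq.mean_wait_ms())
```

Measuring only ready-queue time deliberately excludes delays that are not the scheduler's fault, such as time blocked waiting for a resource.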
CPU Scheduling: Proposed Metrics
• candidate metric: time to completion (seconds)
  – different processes require different run times
• candidate metric: throughput (procs/second)
  – same problem: processes differ, so a count of completed processes is not a consistent unit
• candidate metric: response time (milliseconds)
  – some delays are not the scheduler's fault
    • time to complete a service request, wait for a resource
• candidate metric: fairness (standard deviation)
  – per user or per process? are all equally important?

Rectified Scheduling Metrics
• mean time to completion (seconds)
  – for a particular job mix (benchmark)
• throughput (operations per second)
  – for a particular activity or job mix (benchmark)
• mean response time (milliseconds)
  – time spent on the ready queue
• overall "goodness"
  – requires a customer-specific weighting function
  – often stated in Service Level Agreements

Different Kinds of Systems have Different Scheduling Goals
• Time sharing
  – Fast response time to interactive programs
  – Each user gets an equal share of the CPU
  – Execution favors higher priority processes
• Batch
  – Maximize total system throughput
  – Delays of individual processes are unimportant
• Real-time
  – Critical operations must happen on time
  – Non-critical operations may not happen at all

Non-Preemptive Scheduling
• scheduled process runs until it yields the CPU
  – may yield specifically to another process
  – may merely yield to the "next" process
• works well for simple systems
  – small numbers of processes
  – with natural producer-consumer relationships
• depends on each process to voluntarily yield
  – a piggy process can starve others
  – a buggy process can lock up the entire system

Non-Preemptive: First-In-First-Out
• Algorithm:
  – run first process in queue until it blocks or yields
• Advantages:
  – very simple to implement
  – seems intuitively fair
  – all processes will eventually be served
• Problems:
  – highly variable response time (delays)
  – a long task can force many others to wait (convoy)

Example: First In First Out
[Figure: two timelines of jobs A, B and C on a 0 to 120 time axis]
• short jobs first (A and B take 10 each, C takes 100): $T_{av} = (10 + 20 + 120)/3 = 50$
• the 100-unit job first: $T_{av} = (100 + 110 + 120)/3 = 110$
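The FIFO example can be reproduced with a few lines of arithmetic. This sketch assumes, as the numbers on the slide imply, that A and B each need 10 time units and C needs 100, that all three are on the queue at time 0, and that none of them blocks; `fifo_completion_times` and `average_completion_time` are hypothetical helper names.

```python
def fifo_completion_times(jobs):
    """Non-preemptive FIFO: each job runs to completion in queue order.

    jobs: list of (name, run_time); all jobs are assumed to arrive at t=0
    and never block, so each finishes after everything ahead of it.
    """
    t = 0
    completions = []
    for name, run_time in jobs:
        t += run_time                  # job holds the CPU until it is done
        completions.append((name, t))
    return completions


def average_completion_time(jobs):
    times = [t for _, t in fifo_completion_times(jobs)]
    return sum(times) / len(times)


if __name__ == "__main__":
    short_first = [("A", 10), ("B", 10), ("C", 100)]
    long_first = [("C", 100), ("A", 10), ("B", 10)]
    print(average_completion_time(short_first))  # 50.0
    print(average_completion_time(long_first))   # 110.0
```

The only difference between the two runs is queue order: with the long job first, both short jobs wait behind it, which is the convoy effect.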

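The Non-Preemptive Scheduling slide says the scheduler only regains control when a process yields. Python generators give a compact model of that: in this sketch each hypothetical "process" is a generator that yields the length of the CPU burst it just ran, and `run_cooperative` (also hypothetical) dispatches them round-robin, recording the longest time each one waited on the ready queue. It is a simulation in abstract time units, not how a kernel implements yielding.

```python
from collections import deque


def process(bursts):
    """Hypothetical cooperative process: each value is one CPU burst
    (in abstract time units) that ends with a voluntary yield."""
    for burst in bursts:
        yield burst


def run_cooperative(procs):
    """Non-preemptive round-robin over generator 'processes'.

    The scheduler regains control only when a process yields. Returns, for
    each process, the longest time it waited on the ready queue before a burst.
    """
    ready = deque(procs.items())
    clock = 0
    last_end = {name: 0 for name in procs}   # when each process last gave up the CPU
    max_wait = {name: 0 for name in procs}
    while ready:
        name, proc = ready.popleft()
        try:
            burst = next(proc)               # runs until it voluntarily yields
        except StopIteration:
            continue                         # process has exited; drop it
        max_wait[name] = max(max_wait[name], clock - last_end[name])
        clock += burst
        last_end[name] = clock
        ready.append((name, proc))           # back to the tail of the ready queue
    return max_wait


if __name__ == "__main__":
    with_piggy = {"polite": process([1] * 5), "piggy": process([50])}
    well_behaved = {"polite": process([1] * 5), "piggy": process([10] * 5)}
    print(run_cooperative(with_piggy))    # {'polite': 50, 'piggy': 1}
    print(run_cooperative(well_behaved))  # {'polite': 10, 'piggy': 1}
```

With a piggy process that runs 50 units before yielding, the polite process waits up to 50 units; splitting the same work into five 10-unit bursts cuts that to 10. A generator that looped forever without yielding would never return control to `run_cooperative` at all, which is the "buggy process can lock up the entire system" case.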