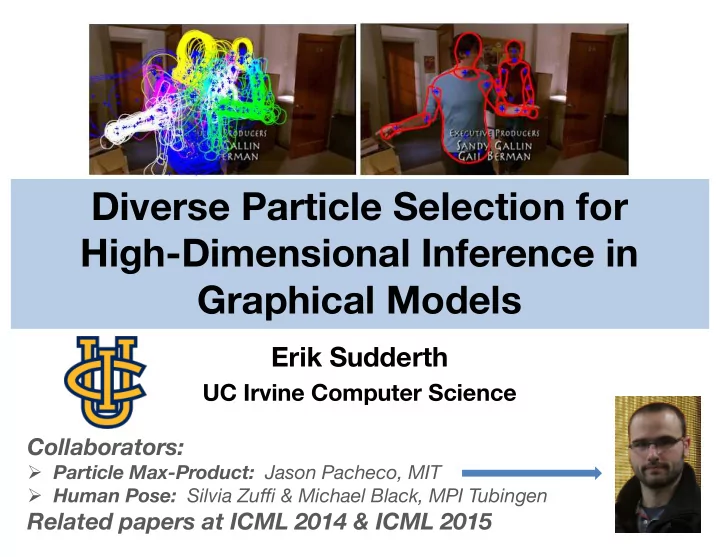

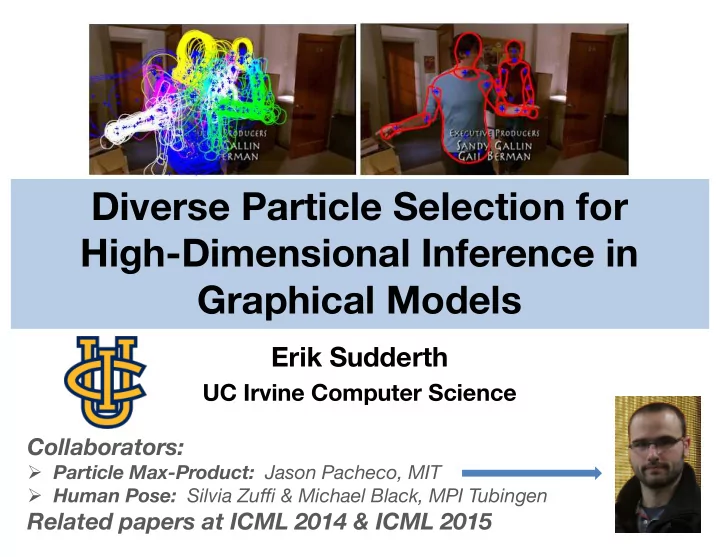

Diverse Particle Selection for High-Dimensional Inference in Graphical Models

Erik Sudderth, UC Irvine Computer Science

Collaborators:
- Particle Max-Product: Jason Pacheco, MIT
- Human Pose: Silvia Zuffi & Michael Black, MPI Tübingen

Related papers at ICML 2014 & ICML 2015

High-Dimensional Inference

[Figure: a probability model relating the data to the unknowns, and the resulting estimate.]

- Discrete unknowns: efficient inference based on combinatorial optimization.
- Continuous unknowns: unless we make unrealistic model approximations, there are no efficient general solutions, and standard gradient-based optimization is ineffective.

Continuous Inference Problems
- Human pose estimation & tracking
- Protein structure & side-chain prediction
- Robot motion & vehicle path planning

Maximum a Posteriori (MAP)

[Figure: a multimodal posterior over the unknowns given the data, with the MAP estimate and a local optimum marked.]

The MAP estimate $\hat{x} = \arg\max_x p(x \mid y)$ is the value of the unknowns $x$ that maximizes the posterior given the data $y$. Because the posterior is often intractable and multimodal, exact MAP inference is difficult. Local optima can be useful when models are inaccurate or data are noisy.
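As a minimal illustration of why multimodality complicates MAP inference, the sketch below uses a hypothetical one-dimensional posterior: an unnormalized mixture of two Gaussians whose weights, means, and function names are assumptions for illustration, not taken from the talk. Plain gradient ascent on $\log p$ converges to whichever mode's basin of attraction contains its starting point, while exhaustive grid search, feasible only in very low dimension, finds the global MAP estimate.

```python
import math

# Hypothetical 1-D posterior: unnormalized mixture of two unit-variance Gaussians,
# a taller mode near x = -2 and a shorter one near x = 3.
WEIGHTS = (0.7, 0.3)
MEANS = (-2.0, 3.0)
SIGMA = 1.0


def density(x):
    """Unnormalized posterior density p(x | y) for fixed (implicit) data y."""
    return sum(w * math.exp(-0.5 * ((x - m) / SIGMA) ** 2)
               for w, m in zip(WEIGHTS, MEANS))


def grad_log_density(x):
    """d/dx log p(x | y) = p'(x) / p(x)."""
    dp = sum(w * math.exp(-0.5 * ((x - m) / SIGMA) ** 2) * (m - x) / SIGMA ** 2
             for w, m in zip(WEIGHTS, MEANS))
    return dp / density(x)


def gradient_ascent(x0, step=0.1, iters=2000):
    """Plain gradient ascent on log p: converges to the mode whose basin contains x0."""
    x = x0
    for _ in range(iters):
        x += step * grad_log_density(x)
    return x


def grid_map(lo=-10.0, hi=10.0, n=20001):
    """Global MAP by exhaustive grid search; only feasible in very low dimension."""
    xs = [lo + (hi - lo) * i / (n - 1) for i in range(n)]
    return max(xs, key=density)


for x0 in (-5.0, 5.0):
    print(f"start {x0:+.1f} -> local optimum {gradient_ascent(x0):+.3f}")
print(f"grid-search MAP estimate: {grid_map():+.3f}")
```

Starting at -5, the ascent reaches the taller mode near -2, which is the global MAP estimate; starting at +5, it stops at the local optimum near 3. Neither run reveals that the other mode exists.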

Goal

Develop maximum a posteriori (MAP) inference algorithms for continuous probability models that:
- apply to any pairwise graphical model, even if the model is complex (highly non-Gaussian);
- are black-box (no gradients required);
- reliably infer multiple local optima.

Pairwise Graphical Models

- Nodes are continuous random variables, $x_s \in \mathbb{R}^d$.
- Potentials encode statistical relationships.
- Edges indicate direct, pairwise energetic interactions.

[Figure: example pairwise graph over nodes $x_1, \dots, x_9$.]
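For reference, the joint density of a pairwise graphical model is conventionally written as a product of node and edge potentials. Here $V$ and $E$ (our notation, not the slide's) denote the node and edge sets, $\psi_{st}$ is the pairwise potential on edge $(s, t)$, and $\psi_s$ is the node potential that also appears in the max-product equations:

$$
p(x) \propto \prod_{s \in V} \psi_s(x_s) \prod_{(s,t) \in E} \psi_{st}(x_s, x_t), \qquad x_s \in \mathbb{R}^d
$$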

Message Passing on Trees

Global MAP inference decomposes into local computations via the graph structure…
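A three-node chain $x_1 - x_2 - x_3$ shows this decomposition concretely, assuming the pairwise factorization with nonnegative potentials $\psi_s$ and $\psi_{st}$. Because the maximum distributes over products of nonnegative factors, the innermost maximization depends only on $x_2$ and can be computed locally as a message $m_{32}(x_2)$ from node 3 to node 2:

$$
\max_{x_1, x_2, x_3} \psi_1(x_1)\,\psi_2(x_2)\,\psi_3(x_3)\,\psi_{12}(x_1, x_2)\,\psi_{23}(x_2, x_3)
= \max_{x_1} \psi_1(x_1) \max_{x_2} \psi_2(x_2)\,\psi_{12}(x_1, x_2)
\underbrace{\max_{x_3} \psi_3(x_3)\,\psi_{23}(x_2, x_3)}_{m_{32}(x_2)}
$$

On a tree with a finite state space, each such message is computed once per edge direction, so the cost grows linearly with the number of edges rather than exponentially with the number of nodes.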

Max-Product Belief Propagation

Finding max-marginals via message passing:

$$
q_s(x_s) = \max_{x_{t \neq s}} p(x_s, x_{t \neq s}) \propto \psi_s(x_s) \prod_{t \in \Gamma(s)} m_{ts}(x_s)
$$

where $\Gamma(s)$ is the set of neighbors of node $s$ and $m_{ts}$ is the message from node $t$ to node $s$.

Why max-marginals?
- They directly encode the global MAP estimate.
- Other modes are important: models are approximate and data are uncertain.

Max-product dynamic programming finds exact max-marginals on tree-structured graphs.
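The message update itself is not spelled out above; the standard max-product update is $m_{ts}(x_s) = \max_{x_t} \psi_{st}(x_s, x_t)\,\psi_t(x_t) \prod_{u \in \Gamma(t) \setminus s} m_{ut}(x_t)$. The sketch below implements it on a small tree with a finite number of states $K$ per node, a setting where the maximization over $x_t$ is exact. It is not the particle max-product algorithm, and all function and variable names are hypothetical. A brute-force enumeration checks that the messages reproduce the exact max-marginals.

```python
import itertools
import math
import random


def max_product(nodes, edges, node_pot, edge_pot, K):
    """Unnormalized max-marginals on a tree via max-product message passing.

    nodes: node ids; edges: (s, t) pairs forming a tree.
    node_pot[s][k] = psi_s(k); edge_pot[(s, t)][j][k] = psi_st(x_s=j, x_t=k).
    """
    nbrs = {s: [] for s in nodes}
    for s, t in edges:
        nbrs[s].append(t)
        nbrs[t].append(s)

    def pair(s, t, xs, xt):
        # psi_st(x_s, x_t), whichever orientation the edge was stored in.
        if (s, t) in edge_pot:
            return edge_pot[(s, t)][xs][xt]
        return edge_pot[(t, s)][xt][xs]

    msgs = {}  # memo: each directed message is computed exactly once

    def message(t, s):
        # m_ts(x_s) = max_{x_t} psi_st(x_s, x_t) psi_t(x_t) prod_{u in nbrs(t), u != s} m_ut(x_t)
        if (t, s) not in msgs:
            incoming = [message(u, t) for u in nbrs[t] if u != s]
            msgs[(t, s)] = [
                max(pair(s, t, xs, xt) * node_pot[t][xt]
                    * math.prod(m[xt] for m in incoming)
                    for xt in range(K))
                for xs in range(K)
            ]
        return msgs[(t, s)]

    # q_s(x_s) = psi_s(x_s) prod_{t in nbrs(s)} m_ts(x_s)
    return {s: [node_pot[s][k] * math.prod(message(t, s)[k] for t in nbrs[s])
                for k in range(K)]
            for s in nodes}


def brute_force_max_marginals(nodes, edges, node_pot, edge_pot, K):
    """Reference answer: enumerate all K**n joint states (exponential cost)."""
    q = {s: [0.0] * K for s in nodes}
    for assign in itertools.product(range(K), repeat=len(nodes)):
        x = dict(zip(nodes, assign))
        p = math.prod(node_pot[s][x[s]] for s in nodes)
        p *= math.prod(edge_pot[(s, t)][x[s]][x[t]] for s, t in edges)
        for s in nodes:
            q[s][x[s]] = max(q[s][x[s]], p)
    return q


# Hypothetical example: a 4-node star-shaped tree, K = 3 states, random potentials.
rng = random.Random(0)
K = 3
NODES = [0, 1, 2, 3]
EDGES = [(0, 1), (1, 2), (1, 3)]
NODE_POT = {s: [rng.uniform(0.1, 1.0) for _ in range(K)] for s in NODES}
EDGE_POT = {e: [[rng.uniform(0.1, 1.0) for _ in range(K)] for _ in range(K)] for e in EDGES}

q_bp = max_product(NODES, EDGES, NODE_POT, EDGE_POT, K)
q_bf = brute_force_max_marginals(NODES, EDGES, NODE_POT, EDGE_POT, K)
for s in NODES:
    print(s, [round(v, 6) for v in q_bp[s]], [round(v, 6) for v in q_bf[s]])
```

Every node's max-marginal peaks at the same value, the global maximum of the unnormalized joint, which is why max-marginals directly encode the MAP estimate. With continuous states the maximization over $x_t$ can no longer be enumerated, which is one motivation for particle-based approximations.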

Articulated Pose Estimation [Zuffi et al., CVPR 2012]

Deformable Structures (DS): continuous state for part shape, location, orientation, and scale.

[Figure: DS model panels labeled "PCA shape", "Compatibility", and "Complicated non-Gaussian likelihood".]
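To make "continuous state for part shape, location, orientation, and scale" concrete, the sketch below maps one part's state to 2-D contour points: PCA coefficients deform a mean contour, and a similarity transform then applies scale, rotation, and translation. This is a hypothetical illustration under those assumptions (the function name, argument layout, and toy diamond shape are ours), not the DS implementation of Zuffi et al.

```python
import math


def part_contour(mean, basis, coeffs, location, theta, scale):
    """Map a hypothetical part state to contour points.

    mean:   list of (x, y) points on the mean contour.
    basis:  principal components; basis[j][i] = (dx, dy) offset of point i.
    coeffs: PCA shape coefficients, one per component.
    location, theta, scale: translation (x, y), rotation in radians, scale factor.
    """
    c, s = math.cos(theta), math.sin(theta)
    points = []
    for i, (mx, my) in enumerate(mean):
        # Shape: deform the mean contour along the principal components.
        px = mx + sum(a * comp[i][0] for a, comp in zip(coeffs, basis))
        py = my + sum(a * comp[i][1] for a, comp in zip(coeffs, basis))
        # Pose: scale, rotate by theta, then translate to location.
        points.append((location[0] + scale * (c * px - s * py),
                       location[1] + scale * (s * px + c * py)))
    return points


# Toy part: a diamond contour with one component that stretches it along x.
MEAN = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0)]
BASIS = [[(1.0, 0.0), (0.0, 0.0), (-1.0, 0.0), (0.0, 0.0)]]

contour = part_contour(MEAN, BASIS, coeffs=[0.5], location=(10.0, 20.0),
                       theta=math.pi / 2, scale=2.0)
print([(round(x, 3), round(y, 3)) for x, y in contour])
```

With a quarter-turn rotation, the diamond that the shape coefficient stretched along x ends up elongated along y and centered at (10, 20). Every quantity in the state is continuous, which is what makes inference over such parts hard for discrete methods.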
