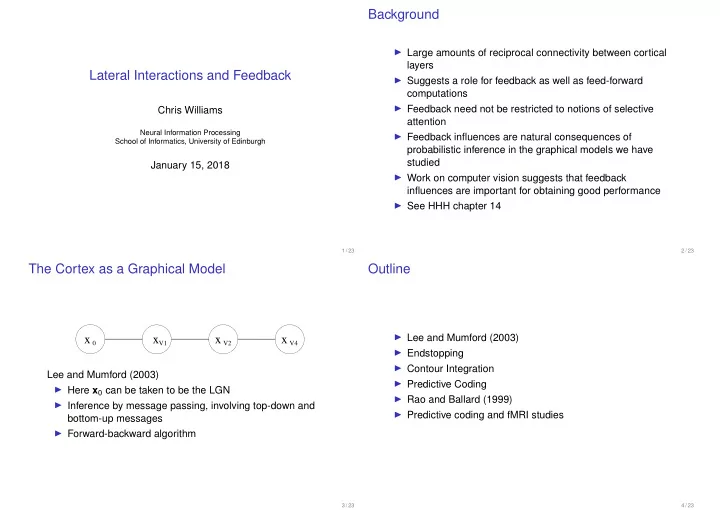

Lateral Interactions and Feedback
Chris Williams
Neural Information Processing
School of Informatics, University of Edinburgh
January 15, 2018

Background
◮ Large amounts of reciprocal connectivity between cortical layers
◮ Suggests a role for feedback as well as feed-forward computations
◮ Feedback need not be restricted to notions of selective attention
◮ Feedback influences are natural consequences of probabilistic inference in the graphical models we have studied
◮ Work on computer vision suggests that feedback influences are important for obtaining good performance
◮ See HHH (Hyvärinen, Hurri and Hoyer, 2009), chapter 14

Outline
◮ Lee and Mumford (2003)
◮ Endstopping
◮ Contour Integration
◮ Predictive Coding
◮ Rao and Ballard (1999)
◮ Predictive coding and fMRI studies

The Cortex as a Graphical Model
◮ Lee and Mumford (2003): [Figure: chain of variables $x_0$, $x_{V1}$, $x_{V2}$, $x_{V4}$]
◮ Here $x_0$ can be taken to be the LGN
◮ Inference by message passing, involving top-down and bottom-up messages
◮ Forward-backward algorithm
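Message passing on the Lee and Mumford chain can be illustrated with the forward-backward (sum-product) algorithm. This is a minimal sketch, not Lee and Mumford's model: it assumes each area ($x_{V4}$, $x_{V2}$, $x_{V1}$, $x_0$) is a single discrete variable with hypothetical conditional probability tables, and orders the nodes top-down so that the forward pass carries top-down messages and the backward pass carries bottom-up evidence from the LGN. The function names `forward_backward` and `brute_force` are illustrative.

```python
import itertools

import numpy as np


def forward_backward(p_top, trans, lik):
    """Posterior marginals on a chain z[0] -> z[1] -> ... -> z[T-1] (sum-product).

    p_top: (K,) prior over z[0], here the highest area (e.g. V4).
    trans: list of T-1 (K, K) arrays, trans[t][j, k] = p(z[t+1] = k | z[t] = j).
    lik:   (T, K) local evidence for each node; a row of ones means "unobserved".
    """
    T, K = lik.shape
    alpha = np.zeros((T, K))  # forward (here: top-down) messages times local evidence
    beta = np.ones((T, K))    # backward (here: bottom-up) messages
    alpha[0] = p_top * lik[0]
    for t in range(1, T):
        alpha[t] = lik[t] * (alpha[t - 1] @ trans[t - 1])
    for t in range(T - 2, -1, -1):
        beta[t] = trans[t] @ (lik[t + 1] * beta[t + 1])
    post = alpha * beta
    return post / post.sum(axis=1, keepdims=True)


def brute_force(p_top, trans, lik):
    """Same marginals by enumerating every joint configuration (checks the messages)."""
    T, K = lik.shape
    post = np.zeros((T, K))
    for z in itertools.product(range(K), repeat=T):
        p = p_top[z[0]] * lik[0, z[0]]
        for t in range(1, T):
            p *= trans[t - 1][z[t - 1], z[t]] * lik[t, z[t]]
        for t in range(T):
            post[t, z[t]] += p
    return post / post.sum(axis=1, keepdims=True)


# Nodes in top-down order: V4, V2, V1, LGN (x_0); only the LGN is observed.
p_top = np.array([0.5, 0.5])
trans = [np.array([[0.9, 0.1], [0.2, 0.8]])] * 3
lik = np.ones((4, 2))
lik[3] = [0.3, 0.7]
print(np.allclose(forward_backward(p_top, trans, lik), brute_force(p_top, trans, lik)))  # True
```

Each area's posterior combines a top-down message (prior from higher areas) with a bottom-up message (evidence from below), which is the sense in which feedback falls out of inference.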

Lee and Mumford (2003)
◮ The time course of responses of some V1 neurons suggests a feedforward response initially, which later becomes sensitive to context (e.g. illusory contours in the Kanizsa square)
◮ Feedback is particularly important when the input scene is ambiguous and one may need to entertain multiple competing hypotheses (as for the Kanizsa square)
◮ Concept of a “non-classical” receptive field [Lee and Mumford, 2003]

Example: Endstopping
◮ See e.g. HHH §14.2
◮ Cell output is reduced when the optimal stimulus is made longer (reported in the 1960s by Hubel and Wiesel)
◮ Can arise from competitive interactions, e.g. in the sparse coding model
◮ In the example below, for the long bar $v_2 = 0$, as it is “explained away” by the activation of $v_1$ and $v_3$
Figure credit: Hyvärinen, Hurri and Hoyer (2009)

Example: Contour Integration
◮ HHH §14.1
◮ Consider a model with complex cells and contour cells (the ICA model from HHH §12.1)
◮ $x_k = \sum_i a_{ik} s_i + n_k$
◮ The $s$ units have sparse priors
◮ $n_k \sim \mathcal{N}(0, \sigma^2)$
◮ Given input $c$, we obtain samples from $p(s \mid c)$ or the MAP estimate $\hat{s} = \arg\max_s p(s \mid c)$
◮ This gives rise to predictions $\hat{c} = A\hat{s}$, which differ from $c$ (due to the possibility of noise $n$)
◮ This is particularly interesting for non-linear feedback (arising from a non-Gaussian prior on $s$)
◮ Note: one can think of integrating out $s$; this induces lateral connectivity between the $c$'s
◮ The network learns about contour regularities
Figure credit: Hyvärinen, Hurri and Hoyer (2009)
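The explaining-away and noise-reduction effects in the two examples above can be reproduced in a deliberately tiny sketch. It assumes a hypothetical 1-D retina of 6 pixels with three bar-shaped features, and swaps in a binary (Bernoulli) sparse prior for the continuous sparse priors used by HHH, so that the MAP estimate $\hat{s}$ can be found by exhaustive enumeration. The feature layout and the function `map_sparse_binary` are illustrative only.

```python
import itertools

import numpy as np

# Hypothetical toy "retina" of 6 pixels with three bar-shaped features (columns of A):
# a1 covers pixels 0-2, a2 covers pixels 1-4 (the unit v2 whose output we watch),
# a3 covers pixels 3-5.
A = np.array([
    [1, 1, 1, 0, 0, 0],
    [0, 1, 1, 1, 1, 0],
    [0, 0, 0, 1, 1, 1],
], dtype=float).T


def map_sparse_binary(x, A, sigma2=0.1, rho=0.1):
    """MAP estimate of s in x = A s + n, n ~ N(0, sigma2 I), s_i ~ Bernoulli(rho).

    Enumerates all 2^m binary codes, so it is only usable for tiny m.
    """
    best, best_cost = None, np.inf
    for bits in itertools.product([0.0, 1.0], repeat=A.shape[1]):
        s = np.array(bits)
        r = x - A @ s
        # Negative log posterior up to a constant:
        # squared reconstruction error + a fixed cost per active unit (sparseness).
        cost = r @ r / (2 * sigma2) + s.sum() * np.log((1 - rho) / rho)
        if cost < best_cost:
            best, best_cost = s, cost
    return best


short_bar = A[:, 1].copy()   # exactly the optimal stimulus of v2 (index 1)
long_bar = np.ones(6)
print(map_sparse_binary(short_bar, A))   # [0. 1. 0.]  v2 responds
print(map_sparse_binary(long_bar, A))    # [1. 0. 1.]  v2 explained away by v1, v3

# Feedback prediction c_hat = A s_hat for a noisy long bar: the noise is removed.
noisy = long_bar + np.array([0.1, -0.1, 0.0, 0.2, -0.2, 0.0])
print(A @ map_sparse_binary(noisy, A))   # [1. 1. 1. 1. 1. 1.]
```

The per-unit cost $\log((1-\rho)/\rho)$ is what makes the units compete: covering the long bar with $v_1$ and $v_3$ reconstructs it exactly, so adding $v_2$ only costs prior probability.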

◮ Contour integration results: (a) patches, (b) feedforward activations $c$, (c) $\hat{c}$ (after feedback)
◮ The top patch contains aligned Gabors; the bottom patch does not have this alignment
◮ Noise reduction has retained the activations of the co-linear stimuli but suppressed activity that does not fit the learned contour model well
Figure credit: Hyvärinen, Hurri and Hoyer (2009)

Predictive Coding
◮ Predictive coding (in a general sense) is the idea that representing the environment requires the brain to actively predict what the sensory input will be, rather than just passively registering it
◮ To an electrical engineer, predictive coding means something like $p(x_1, x_2, \ldots, x_n) = p(x_1)\, p(x_2 \mid x_1) \cdots p(x_n \mid x_1, \ldots, x_{n-1})$, i.e. each symbol is coded given its predecessors, so only the part not predicted by them needs to be transmitted
◮ What predictive coding is taken to mean in some neuroscience contexts is (roughly) that if there is a top-down prediction $\hat{c}_k$, then the lower level need only send the prediction error $c_k - \hat{c}_k$
◮ Question: can you carry out valid inferences passing only these messages?
◮ See HHH §14.3
◮ HHH say (p. 317): “the essential difference is in the interpretation of how the abstract quantities are computed and coded in the cortex. In the predictive modelling framework, it is assumed that the prediction errors [$c_k - \hat{c}_k$] are actually the activities (firing rates) of the neurons on the lower level. This is a strong departure from the framework used in this book, where the $c_k$ are considered as the activities of the neurons. Which one of these interpretations is closer to the neural reality is an open question which has inspired some experimental work ...”

Rao and Ballard (1999)
◮ Basically a hierarchical factor analysis model, or tree-structured Kalman filter
◮ $u_1$, $u_2$ and $u_3$ are nearby image patches
◮ At each level there is a predictive estimator (PE) module
◮ R & B need a spatial architecture to get interesting effects, as the model is linear/Gaussian (cf. contour integration)
◮ Top-down prediction $v^{td}_i = F_i w$, where $w$ is the state at the next level up
◮ Error signal $v_i - v^{td}_i$ (difference between the top-down prediction and the actual response) is propagated upwards
◮ $E_i = \frac{1}{\sigma^2}(u_i - G_i v_i)^T(u_i - G_i v_i) + \frac{1}{\sigma_{td}^2}(v_i - v^{td}_i)^T(v_i - v^{td}_i)$ (if $v^{td}_i = 0$ this is simply FA for each patch)
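One way to probe the question raised on the predictive coding slide: for the quadratic $E_i$ above, gradient descent on $v_i$ needs only two error signals, the bottom-up residual $u_i - G_i v_i$ and the top-down error $v_i - v^{td}_i$. The sketch below is not Rao and Ballard's implementation; it assumes a single patch, hypothetical random $G_i$, $u_i$ and $v^{td}_i$, and plain gradient descent, and checks the result against the closed-form minimiser.

```python
import numpy as np


def infer_v_from_errors(u, G, v_td, sigma2=1.0, sigma2_td=1.0, n_steps=5000):
    """Minimise E_i(v) by gradient descent, using only the two error signals."""
    # Step size chosen from the curvature of the quadratic E_i (for stability only).
    H = G.T @ G / sigma2 + np.eye(G.shape[1]) / sigma2_td
    lr = 1.0 / (2.0 * np.linalg.eigvalsh(H).max())
    v = np.zeros(G.shape[1])
    for _ in range(n_steps):
        err_bottom_up = u - G @ v   # residual of the image patch u_i
        err_top_down = v - v_td     # v_i - v_i^td, the signal sent upwards
        grad = -2.0 * G.T @ err_bottom_up / sigma2 + 2.0 * err_top_down / sigma2_td
        v = v - lr * grad
    return v


def infer_v_exact(u, G, v_td, sigma2=1.0, sigma2_td=1.0):
    """Closed-form minimiser of the same quadratic energy."""
    H = G.T @ G / sigma2 + np.eye(G.shape[1]) / sigma2_td
    b = G.T @ u / sigma2 + v_td / sigma2_td
    return np.linalg.solve(H, b)


rng = np.random.default_rng(0)
G = rng.normal(size=(8, 3))       # hypothetical generative weights G_i
u = rng.normal(size=8)            # hypothetical image patch u_i
v_td = rng.normal(size=3)         # hypothetical top-down prediction F_i w
print(np.allclose(infer_v_from_errors(u, G, v_td), infer_v_exact(u, G, v_td)))  # True
```

At least for this linear/Gaussian energy, passing only prediction errors reaches the exact minimiser; with $v^{td}_i = 0$ the closed form is the factor-analysis posterior mean with prior variance $\sigma_{td}^2$.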

[Figure: Rao and Ballard's hierarchical network of predictive estimator (PE) modules, with image patches $u_1$, $u_2$, $u_3$ at level 0 and PE modules at levels 1 and 2]

End-stopped responses
[Figure: short bar and long bar stimuli]
◮ As long edges are more prevalent in natural scenes, the top-down prediction will favour them
◮ For a long bar, the top-down predictions are correct, so the errors are close to zero
◮ For a short bar, the top-down predictions are incorrect, so there are more error signals in level 1
◮ Thus the response $v_i - v^{td}_i$ of a model neuron is stronger for short bars and decays for longer bars: endstopping (see Fig 3c in R & B)
◮ Similar tuning to experimental data from Bolz and Gilbert (1986) (see Fig 5a in R & B)
◮ The observed endstopping depends on feedback connections (see Fig 5a in R & B)
◮ Other experiments look at the predictability of grating patterns over a 3 × 3 set of patches, and observe similar effects (see Fig 6 in R & B)
◮ Extra-classical RF effects can be seen as an emergent property of the network
Figure credit: Rao and Ballard (1999)
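The endstopping argument can be caricatured with three patches (left, centre, right). This sketch is a hypothetical reduction, not Rao and Ballard's network: it assumes hand-chosen "natural scene" frequencies in which long bars are common, fits a single least-squares weight predicting the centre patch from its surround, and reports the prediction error $v - v^{td}$ for the centre unit. The helper names are illustrative.

```python
import numpy as np


def make_training_set(n_long=90, n_short=10, n_blank=100):
    """Hypothetical 'natural scene' statistics over (left, centre, right) patches.

    Long bars covering all three patches are common; centre-only short bars are rare.
    """
    long_bars = np.tile([1.0, 1.0, 1.0], (n_long, 1))
    short_bars = np.tile([0.0, 1.0, 0.0], (n_short, 1))
    blanks = np.zeros((n_blank, 3))
    return np.vstack([long_bars, short_bars, blanks])


def fit_top_down_weight(data):
    """Least-squares weight predicting the centre patch from the mean of its neighbours."""
    surround = data[:, [0, 2]].mean(axis=1)
    centre = data[:, 1]
    return surround @ centre / (surround @ surround)


def error_response(stimulus, w):
    """Prediction-error response v - v_td of the unit looking at the centre patch."""
    v_td = w * stimulus[[0, 2]].mean()
    return stimulus[1] - v_td


w = fit_top_down_weight(make_training_set())
for name, bar in [("short", [0.0, 1.0, 0.0]), ("medium", [1.0, 1.0, 0.0]), ("long", [1.0, 1.0, 1.0])]:
    print(name, error_response(np.array(bar), w))
# short 1.0
# medium 0.5
# long 0.0
```

The error response falls as the bar extends into the surround, because the learned top-down prediction increasingly accounts for the centre activity.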

[Figure: panels 3C and 5A from Rao and Ballard (1999)]
Figure credit: Rao and Ballard (1999)

Predictive Coding or Sharpening?
◮ Contour integration à la HHH §14.1 can be called sharpening: increase activity in those aspects of the input that are consistent with the predicted activity, and reduce all other activity. This can lead to an overall reduction in activity
◮ Contrast this with the predictive coding viewpoint; the difference depends on whether unpredicted activity is noise or signal
◮ See discussion in Murray, Schrater and Kersten (2004)
Figure credit: Murray, Schrater and Kersten (2004)

Predictive coding and fMRI studies
◮ Experiments in Murray, Schrater and Kersten (2004)
◮ Stimuli are lines arranged in 3D, 2D or random configurations
◮ Measure activity in LOC (lateral occipital complex), which has been shown to code for 3D percepts, and in V1
Figure credit: Murray, Schrater and Kersten (2004)

Interpreting fMRI studies on predictive coding
◮ fMRI is a very blunt instrument, as every voxel reflects an average of more than 100,000 neurons
◮ Reduced fMRI activity is consistent with sharpening as well as with predictive coding
◮ “Predictive coding appears to be at odds with single-neuron recordings indicating that neurons along the ventral pathway respond with vigorous activity ever more complex objects ...” (Koch and Poggio, 1999)
◮ “... what about functional imaging data revealing that particular cortical areas respond to specific image classes.. ? ” (Koch and Poggio, 1999)

◮ Egner et al (2010): “... each stage of the visual cortical hierarchy is thought to harbor two computationally distinct classes of processing unit: representational units that encode the conditional probability of a stimulus (“expectation”) [..]; and error units that encode the mismatch between predictions and bottom up evidence (“surprise”), and forward this prediction error to the next higher level ...”
◮ Need more clarity on how the brain is meant to be implementing belief propagation ...

References
◮ Egner, T., Monti, J. M. and Summerfield, C. Expectation and Surprise Determine Neural Population Responses in the Ventral Visual Stream. J. Neurosci. 30(49) 16601-16608 (2010)
◮ Koch, C. and Poggio, T. Predicting the Visual World: Silence is Golden. Nature Neurosci. 2(1) 9-10 (1999)
◮ Lee, T.-S. and Mumford, D. Hierarchical Bayesian inference in the visual cortex. J. Opt. Soc. America 20(7) 1434-1448 (2003)
◮ Murray, S. O., Schrater, P. and Kersten, D. Perceptual grouping and the interactions between visual cortical areas. Neural Networks 17 695-705 (2004)
◮ Rao, R. P. N. and Ballard, D. H. Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nature Neurosci. 2(1) 79-87 (1999)
