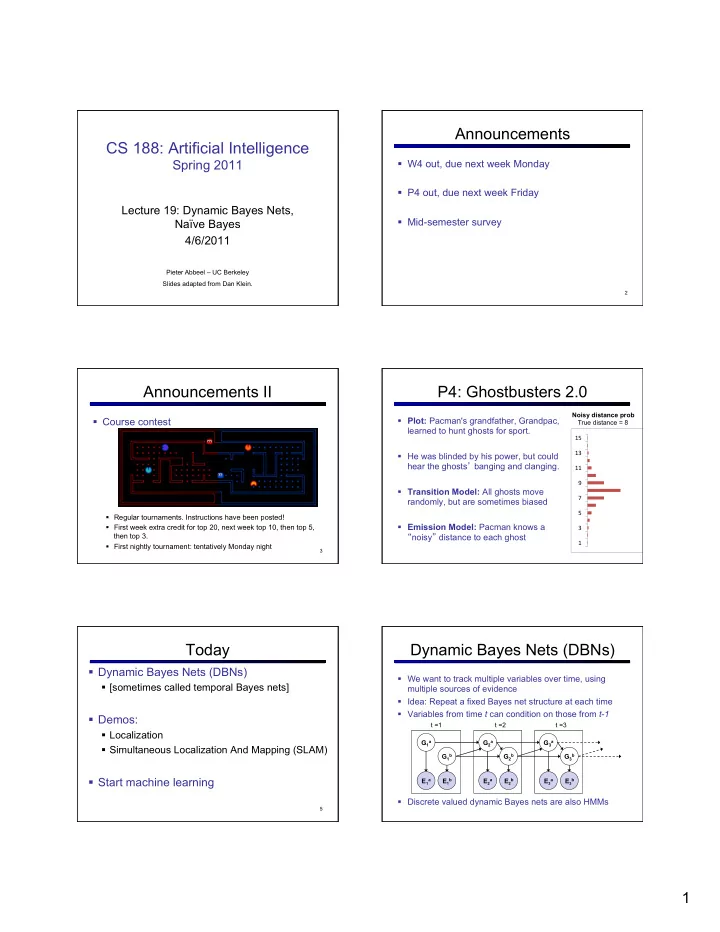

CS 188: Artificial Intelligence
Spring 2011
Lecture 19: Dynamic Bayes Nets, Naïve Bayes
4/6/2011
Pieter Abbeel – UC Berkeley
Slides adapted from Dan Klein.

Announcements
§ W4 out, due next week Monday
§ P4 out, due next week Friday
§ Mid-semester survey

Announcements II
§ Course contest
  § Regular tournaments. Instructions have been posted!
  § First week extra credit for top 20, next week top 10, then top 5, then top 3.
  § First nightly tournament: tentatively Monday night

P4: Ghostbusters 2.0
§ Plot: Pacman's grandfather, Grandpac, learned to hunt ghosts for sport.
§ He was blinded by his power, but could hear the ghosts’ banging and clanging.
§ Transition Model: All ghosts move randomly, but are sometimes biased
§ Emission Model: Pacman knows a “noisy” distance to each ghost
[Figure: noisy distance probability, true distance = 8]

Today
§ Dynamic Bayes Nets (DBNs)
  § [sometimes called temporal Bayes nets]
§ Demos:
  § Localization
  § Simultaneous Localization And Mapping (SLAM)
§ Start machine learning

Dynamic Bayes Nets (DBNs)
§ We want to track multiple variables over time, using multiple sources of evidence
§ Idea: Repeat a fixed Bayes net structure at each time
§ Variables from time t can condition on those from t-1
[Figure: DBN unrolled for t = 1, 2, 3, with ghost variables G_t^a, G_t^b and evidence variables E_t^a, E_t^b]
§ Discrete valued dynamic Bayes nets are also HMMs
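Because a discrete valued DBN is also an HMM over the joint state (for example the tuple (G^a, G^b)), one exact belief update can be sketched as a single elapse-time step followed by an observe step. This is a minimal sketch rather than course code: the names `forward_step`, `transition`, and `emission` are hypothetical, and the two-state model in the usage lines is made up for illustration.

```python
def forward_step(belief, transition, emission, evidence):
    """One exact belief update for a discrete DBN treated as an HMM.

    belief:     dict mapping joint state -> probability at time t
    transition: function state -> dict of next_state -> P(next_state | state)
    emission:   function (evidence, state) -> P(evidence | state)
    All three are assumptions of this sketch, not part of the slides.
    """
    # Elapse time: B'(x') = sum over x of P(x' | x) * B(x)
    predicted = {}
    for state, prob in belief.items():
        for next_state, t_prob in transition(state).items():
            predicted[next_state] = predicted.get(next_state, 0.0) + prob * t_prob

    # Observe: B(x') is proportional to P(e | x') * B'(x')
    weighted = {s: p * emission(evidence, s) for s, p in predicted.items()}
    total = sum(weighted.values())
    if total == 0.0:
        raise ValueError("evidence has zero probability under the model")
    return {s: w / total for s, w in weighted.items()}


# Toy two-state model (hypothetical numbers): the state tends to persist,
# and the sensor reports the true state 75% of the time.
def toy_transition(state):
    return {state: 0.75, 1 - state: 0.25}


def toy_emission(evidence, state):
    return 0.75 if evidence == state else 0.25


belief = forward_step({0: 0.5, 1: 0.5}, toy_transition, toy_emission, evidence=0)
# belief is now {0: 0.75, 1: 0.25}
```

Only the belief for the current time step is stored, so the cost per step depends on the size of the joint state space rather than on the number of time steps.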

Exact Inference in DBNs
§ Variable elimination applies to dynamic Bayes nets
§ Procedure: “unroll” the network for T time steps, then eliminate variables until P(X_T | e_1:T) is computed
[Figure: DBN unrolled for t = 1, 2, 3, with ghost variables G_t^a, G_t^b and evidence variables E_t^a, E_t^b]
§ Online belief updates: Eliminate all variables from the previous time step; store factors for current time only

DBN Particle Filters
§ A particle is a complete sample for a time step
§ Initialize: Generate prior samples for the t=1 Bayes net
  § Example particle: G_1^a = (3,3) G_1^b = (5,3)
§ Elapse time: Sample a successor for each particle
  § Example successor: G_2^a = (2,3) G_2^b = (6,3)
§ Observe: Weight each entire sample by the likelihood of the evidence conditioned on the sample
  § Likelihood: P(E_1^a | G_1^a) * P(E_1^b | G_1^b)
§ Resample: Select prior samples (tuples of values) in proportion to their likelihood
[Demo]

Trick I to Improve Particle Filtering Performance: Low Variance Resampling
§ Advantages:
  § More systematic coverage of space of samples
  § If all samples have same importance weight, no samples are lost
  § Lower computational complexity

Trick II to Improve Particle Filtering Performance: Regularization
§ If no or little noise in transition model, all particles will start to coincide
§ → Regularization: introduce additional (artificial) noise into the transition model

SLAM
§ SLAM = Simultaneous Localization And Mapping
  § We do not know the map or our location
  § Our belief state is over maps and positions!
  § Main techniques: Kalman filtering (Gaussian HMMs) and particle methods
§ [DEMOS] DP-SLAM, Ron Parr
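The elapse / observe / resample cycle for a two-ghost DBN, with low variance (systematic) resampling in place of independent draws, can be sketched as follows. This is an illustrative sketch, not the P4 code: the random-walk `MOVES`, the noise model in `emission_prob`, and all function names are assumptions introduced here.

```python
import random

# Assumed transition model: each ghost stays put or moves one step.
MOVES = [(0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)]


def manhattan(a, b):
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def emission_prob(noisy, true):
    """Assumed noise model: P(noisy | true) = 2^-|noisy - true| / 3,
    which sums to 1 over all integer readings."""
    return 2.0 ** (-abs(noisy - true)) / 3.0


def elapse_time(particle, rng):
    """Sample a successor position for every ghost in the particle."""
    successor = []
    for (x, y) in particle:
        dx, dy = rng.choice(MOVES)
        successor.append((x + dx, y + dy))
    return tuple(successor)


def likelihood(particle, evidence, pacman):
    """Weight of the entire sample: product of P(E^i | G^i) over the ghosts."""
    weight = 1.0
    for ghost, noisy in zip(particle, evidence):
        weight *= emission_prob(noisy, manhattan(pacman, ghost))
    return weight


def low_variance_resample(particles, weights, rng):
    """Systematic resampling: one random offset, then n evenly spaced pointers."""
    n = len(particles)
    total = sum(weights)
    if total <= 0.0:
        raise ValueError("all particle weights are zero")
    step = total / n
    pointer = rng.uniform(0.0, step)
    resampled = []
    index = 0
    cumulative = weights[0]
    for _ in range(n):
        # Advance to the particle whose weight interval contains the pointer.
        while pointer >= cumulative and index < n - 1:
            index += 1
            cumulative += weights[index]
        resampled.append(particles[index])
        pointer += step
    return resampled


def particle_filter_step(particles, evidence, pacman, rng):
    """Elapse time, weight by the evidence, then resample."""
    moved = [elapse_time(p, rng) for p in particles]
    weights = [likelihood(p, evidence, pacman) for p in moved]
    return low_variance_resample(moved, weights, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    # Start from the slide's example particle, copied 100 times.
    particles = [((3, 3), (5, 3))] * 100
    particles = particle_filter_step(particles, evidence=(4, 6), pacman=(1, 1), rng=rng)
```

The resampler makes a single pass over the weights, and when every weight is equal the evenly spaced pointers select each particle exactly once, which matches the advantages listed for Trick I.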

Robot Localization
§ In robot localization:
  § We know the map, but not the robot’s position
  § Observations may be vectors of range finder readings
  § State space and readings are typically continuous (works basically like a very fine grid) and so we cannot store B(X)
  § Particle filtering is a main technique
§ [Demos] Global-floor

SLAM
§ SLAM = Simultaneous Localization And Mapping
  § We do not know the map or our location
  § State consists of position AND map!
  § Main techniques: Kalman filtering (Gaussian HMMs) and particle methods

Particle Filter Example
[Figure: 3 particles, each with its own map (map of particle 1, map of particle 2, map of particle 3)]

SLAM
§ DEMOS
  § fastslam.avi, visionSlam_heliOffice.wmv

Further readings
§ We are done with Part II Probabilistic Reasoning
§ To learn more (beyond scope of 188):
  § Koller and Friedman, Probabilistic Graphical Models (CS281A)
  § Thrun, Burgard and Fox, Probabilistic Robotics (CS287)

Part III: Machine Learning
§ Up until now: how to reason in a model and how to make optimal decisions
§ Machine learning: how to acquire a model on the basis of data / experience
  § Learning parameters (e.g. probabilities)
  § Learning structure (e.g. BN graphs)
  § Learning hidden concepts (e.g. clustering)

Machine Learning Today
§ An ML Example: Parameter Estimation
  § Maximum likelihood
  § Smoothing
§ Applications
§ Main concepts
§ Naïve Bayes

Parameter Estimation
[Figure: jelly bean samples r g g r g g r g g r r g g g g]
§ Estimating the distribution of a random variable
§ Elicitation: ask a human (why is this hard?)
§ Empirically: use training data (learning!)
  § E.g.: for each outcome x, look at the empirical rate of that value:
    P_ML(x) = count(x) / total samples
    Sample: r g g, so P_ML(r) = 1/3
  § This is the estimate that maximizes the likelihood of the data
§ Issue: overfitting. E.g., what if only observed 1 jelly bean?

Estimation: Smoothing
§ Relative frequencies are the maximum likelihood estimates
§ In Bayesian statistics, we think of the parameters as just another random variable, with its own distribution

Estimation: Laplace Smoothing
Sample: H H T
§ Laplace’s estimate:
  § Pretend you saw every outcome once more than you actually did
    P_LAP(x) = (c(x) + 1) / (N + |X|)
    where c(x) is the count of x, N the number of samples, and |X| the number of outcomes
  § Can derive this as a MAP estimate with Dirichlet priors (see cs281a)

Estimation: Laplace Smoothing
Sample: H H T
§ Laplace’s estimate (extended):
  § Pretend you saw every outcome k extra times
    P_LAP,k(x) = (c(x) + k) / (N + k|X|)
  § What’s Laplace with k = 0?
  § k is the strength of the prior
§ Laplace for conditionals:
  § Smooth each condition independently:
    P_LAP,k(x | y) = (c(x, y) + k) / (c(y) + k|X|)

Example: Spam Filter
§ Input: email
§ Output: spam/ham
§ Setup:
  § Get a large collection of example emails, each labeled “spam” or “ham”
  § Note: someone has to hand label all this data!
  § Want to learn to predict labels of new, future emails
§ Features: The attributes used to make the ham / spam decision
  § Words: FREE!
  § Text Patterns: $dd, CAPS
  § Non-text: SenderInContacts
  § …

Example emails:
Dear Sir. First, I must solicit your confidence in this transaction, this is by virture of its nature as being utterly confidencial and top secret. …
TO BE REMOVED FROM FUTURE MAILINGS, SIMPLY REPLY TO THIS MESSAGE AND PUT "REMOVE" IN THE SUBJECT.
99 MILLION EMAIL ADDRESSES FOR ONLY $99
Ok, Iknow this is blatantly OT but I'm beginning to go insane. Had an old Dell Dimension XPS sitting in the corner and decided to put it to use, I know it was working pre being stuck in the corner, but when I plugged it in, hit the power nothing happened.
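The maximum likelihood and Laplace estimates can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions: the function names `laplace_estimate` and `laplace_conditional` are hypothetical, k is assumed to be a non-negative integer, and the tiny word/label data set at the end is made up.

```python
from collections import Counter
from fractions import Fraction


def laplace_estimate(samples, outcomes, k=1):
    """Estimate P(x) as (c(x) + k) / (N + k|X|).

    With k = 0 this is the maximum likelihood (relative frequency) estimate,
    which is undefined when there are no samples.
    """
    counts = Counter(samples)
    denominator = len(samples) + k * len(outcomes)
    return {x: Fraction(counts[x] + k, denominator) for x in outcomes}


def laplace_conditional(pairs, x_outcomes, k=1):
    """Estimate P(x | y) by smoothing each condition y independently.

    pairs is a list of (x, y) observations; only labels y that appear
    in the data get a distribution in this sketch.
    """
    by_label = {}
    for x, y in pairs:
        by_label.setdefault(y, []).append(x)
    return {y: laplace_estimate(xs, x_outcomes, k) for y, xs in by_label.items()}


coin = ["H", "H", "T"]
ml = laplace_estimate(coin, ["H", "T"], k=0)        # H: 2/3, T: 1/3
lap = laplace_estimate(coin, ["H", "T"], k=1)       # H: 3/5, T: 2/5
strong = laplace_estimate(coin, ["H", "T"], k=100)  # H: 102/203, T: 101/203

# Made-up (word, label) observations for the conditional case.
observations = [("free", "spam"), ("free", "spam"), ("hello", "ham")]
cond = laplace_conditional(observations, ["free", "hello"], k=1)
# cond["spam"]["free"] is 3/4 and cond["ham"]["free"] is 1/3
```

Larger k pulls the estimate toward uniform, which is one way to read "k is the strength of the prior".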
