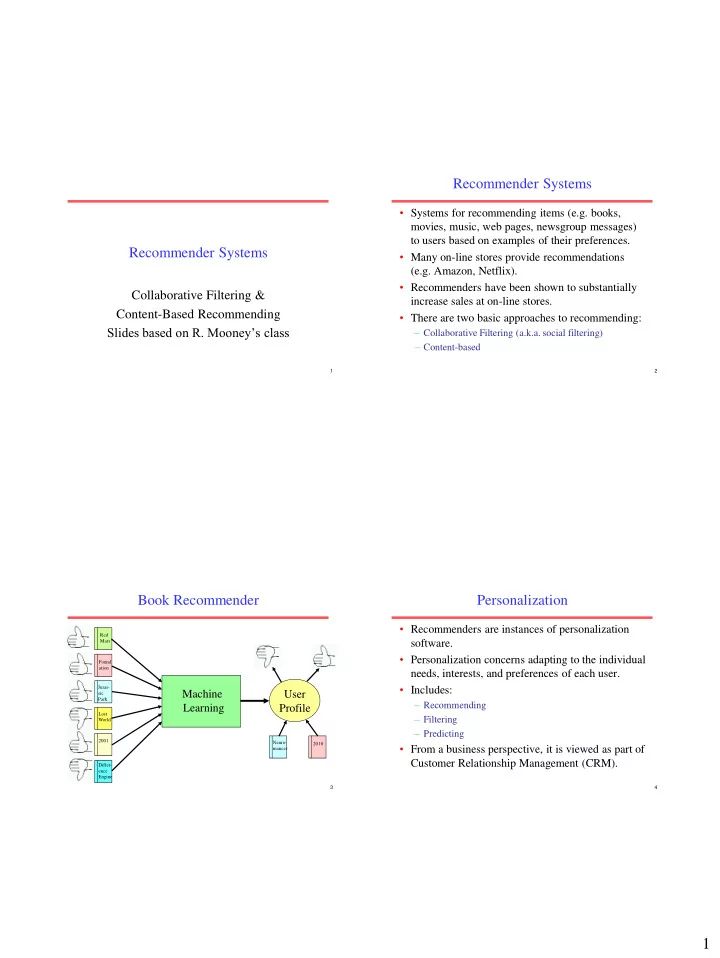

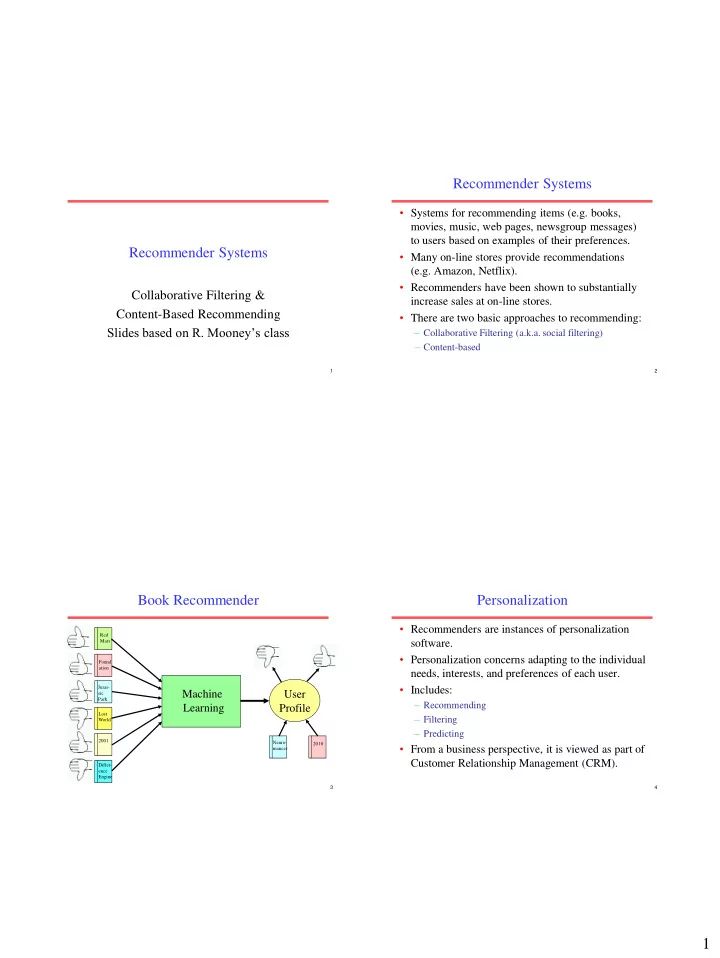

Recommender Systems: Collaborative Filtering & Content-Based Recommending

Slides based on R. Mooney's class

Recommender Systems
• Systems for recommending items (e.g. books, movies, music, web pages, newsgroup messages) to users based on examples of their preferences.
• Many on-line stores provide recommendations (e.g. Amazon, Netflix).
• Recommenders have been shown to substantially increase sales at on-line stores.
• There are two basic approaches to recommending:
– Collaborative Filtering (a.k.a. social filtering)
– Content-based

Book Recommender
(Figure: book titles Red Mars, Foundation, Jurassic Park, Lost World, 2001, 2010, Neuromancer, and Difference Engine, with components labeled Machine Learning and User Profile.)

Personalization
• Recommenders are instances of personalization software.
• Personalization concerns adapting to the individual needs, interests, and preferences of each user.
• Includes:
– Recommending
– Filtering
– Predicting
• From a business perspective, it is viewed as part of Customer Relationship Management (CRM).

Collaborative Filtering
• Maintain a database of many users' ratings of a variety of items.
• For a given user, find other similar users whose ratings strongly correlate with the current user.
• Recommend items rated highly by these similar users, but not rated by the current user.
• Almost all existing commercial recommenders use this approach (e.g. Amazon).

Collaborative Filtering
(Figure: the active user's ratings are matched by correlation against the ratings in the user database, and recommendations are extracted from the matching users. Labels: User Database, Correlation Match, Active User, Extract Recommendations, User rating, Item recommendation.)

Collaborative Filtering Method
1. Weight all users with respect to similarity with the active user.
2. Select a subset of the users (neighbors) to use as predictors.
3. Normalize ratings and compute a prediction from a weighted combination of the selected neighbors' ratings.
4. Present items with highest predicted ratings as recommendations.

Find users with similar ratings/interests
(Figure: the active user's rating vector $r_a$ is compared with each rating vector $r_u$ in the user database. Which users have similar ratings?)
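As a minimal sketch of the ratings database behind the method above, the Python below stores ratings as a nested dictionary and picks out co-rated items and recommendation candidates. The toy data and the helper names `co_rated` and `candidate_items` are assumptions made for illustration; they do not come from the slides.

```python
# Hypothetical toy ratings database: user -> {item: rating}.
ratings = {
    "active": {"A": 9, "B": 3, "Z": 5},
    "u1": {"A": 9, "B": 3, "C": 9, "Z": 5},
    "u2": {"A": 5, "B": 3, "C": 1, "Z": 10},
}


def co_rated(db, a, u):
    """Items rated by both user a and user u."""
    return sorted(set(db[a]) & set(db[u]))


def candidate_items(db, active, neighbors):
    """Items rated by at least one neighbor but not by the active user."""
    seen = set(db[active])
    candidates = set()
    for u in neighbors:
        candidates |= set(db[u]) - seen
    return sorted(candidates)
```

Only the candidates returned here need a predicted rating in step 3, since items the active user has already rated are never recommended.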

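Similarity Weighting
• Typically use the Pearson correlation coefficient between ratings for the active user, $a$, and another user, $u$:

$$c_{a,u} = \frac{\mathrm{covar}(r_a, r_u)}{\sigma_{r_a}\,\sigma_{r_u}}$$

$r_a$ and $r_u$ are the ratings vectors for the $m$ items rated by both $a$ and $u$.
$r_{i,j}$ is user $i$'s rating for item $j$.

Covariance and Standard Deviation
• Covariance:

$$\mathrm{covar}(r_a, r_u) = \frac{\sum_{i=1}^{m} (r_{a,i} - \bar{r}_a)(r_{u,i} - \bar{r}_u)}{m}, \qquad \bar{r}_x = \frac{\sum_{i=1}^{m} r_{x,i}}{m}$$

• Standard Deviation:

$$\sigma_{r_x} = \sqrt{\frac{\sum_{i=1}^{m} (r_{x,i} - \bar{r}_x)^2}{m}}$$

Neighbor Selection
• For a given active user, $a$, select correlated users to serve as source of predictions.
– Standard approach is to use the most similar $n$ users, $u$, based on similarity weights, $w_{a,u}$.
– Alternate approach is to include all users whose similarity weight is above a given threshold.
(Figure: users with $\mathrm{Sim}(r_a, r_u) > t$ around the active user $a$.)

Relationship between Covariance and Cosine Similarity
• Covariance:

$$\mathrm{covar}(r_a, r_u) = \frac{\sum_{i=1}^{m} (r_{a,i} - \bar{r}_a)(r_{u,i} - \bar{r}_u)}{m}$$

• Cosine similarity:

$$\cos(r_a, r_u) = \frac{\sum_{i=1}^{m} r_{a,i}\, r_{u,i}}{\sqrt{\sum_{i=1}^{m} r_{a,i}^2}\,\sqrt{\sum_{i=1}^{m} r_{u,i}^2}}$$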
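The Pearson weighting and the two neighbor selection strategies above can be sketched in Python as follows. This is an illustrative sketch, not code from the slides: the function names, the dictionary layout (user to item to rating), and the choice to return 0.0 when the correlation is undefined are all assumptions.

```python
import math


def pearson(ra, ru):
    """Pearson correlation c_{a,u} over the m items rated by both users.

    ra, ru: dicts mapping item -> rating. Returns 0.0 when the correlation
    is undefined (fewer than two co-rated items or zero variance); that
    fallback is an assumption of this sketch.
    """
    common = sorted(set(ra) & set(ru))
    m = len(common)
    if m < 2:
        return 0.0
    mean_a = sum(ra[i] for i in common) / m
    mean_u = sum(ru[i] for i in common) / m
    covar = sum((ra[i] - mean_a) * (ru[i] - mean_u) for i in common) / m
    sd_a = math.sqrt(sum((ra[i] - mean_a) ** 2 for i in common) / m)
    sd_u = math.sqrt(sum((ru[i] - mean_u) ** 2 for i in common) / m)
    if sd_a == 0 or sd_u == 0:
        return 0.0
    return covar / (sd_a * sd_u)


def select_neighbors(db, active, n=None, threshold=None):
    """Return (user, similarity) pairs, most similar first.

    With n set, keep the n most similar users; with threshold set, keep
    only users whose similarity exceeds it. Both may be combined.
    """
    sims = {u: pearson(db[active], db[u]) for u in db if u != active}
    ranked = sorted(sims.items(), key=lambda kv: kv[1], reverse=True)
    if threshold is not None:
        ranked = [(u, w) for u, w in ranked if w > threshold]
    if n is not None:
        ranked = ranked[:n]
    return ranked
```

Because the means and deviations are computed only over co-rated items, two users with a single item in common get no usable correlation, which is one motivation for weighting correlations by the number of co-rated items.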

Significance Weighting
• Important not to trust correlations based on very few co-rated items.
• Include significance weights, $s_{a,u}$, based on number of co-rated items, $m$.

$$w_{a,u} = s_{a,u}\, c_{a,u}, \qquad s_{a,u} = \begin{cases} 1 & \text{if } m > 50 \\ \dfrac{m}{50} & \text{if } m \le 50 \end{cases}$$

Rating Prediction (Version 0)
• Predict a rating, $p_{a,i}$, for each item $i$, for active user, $a$, by using the $n$ selected neighbor users, $u \in \{1, 2, \ldots, n\}$.
• Weight users' ratings contribution by their similarity to the active user.

$$p_{a,i} = \frac{\sum_{u=1}^{n} w_{a,u}\, r_{u,i}}{\sum_{u=1}^{n} w_{a,u}}$$

Rating Prediction (Version 1)
• Predict a rating, $p_{a,i}$, for each item $i$, for active user, $a$, by using the $n$ selected neighbor users, $u \in \{1, 2, \ldots, n\}$.
• To account for users' different rating levels, base predictions on differences from a user's average rating.
• Weight users' ratings contribution by their similarity to the active user.

$$p_{a,i} = \bar{r}_a + \frac{\sum_{u=1}^{n} w_{a,u}\,(r_{u,i} - \bar{r}_u)}{\sum_{u=1}^{n} w_{a,u}}$$

Problems with Collaborative Filtering
• Cold Start: There needs to be enough other users already in the system to find a match.
• Sparsity: If there are many items to be recommended, even if there are many users, the user/ratings matrix is sparse, and it is hard to find users that have rated the same items.
• First Rater: Cannot recommend an item that has not been previously rated.
– New items
– Esoteric items
• Popularity Bias: Cannot recommend items to someone with unique tastes.
– Tends to recommend popular items.
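Returning to significance weighting and the Version 1 prediction formula, a minimal Python sketch might look like the following. The function names, the `(user, weight)` neighbor list, and the choice to average each user's ratings over all of that user's rated items are assumptions of this sketch; the slides do not specify them. Neighbors that have not rated the item are skipped, which is also an assumption.

```python
def significance(m, cutoff=50):
    """s_{a,u}: 1 when more than `cutoff` items are co-rated, else m / cutoff."""
    return 1.0 if m > cutoff else m / cutoff


def predict(db, active, item, neighbors):
    """Version 1 prediction p_{a,i} from mean-offset neighbor ratings.

    db: dict user -> {item: rating}.
    neighbors: list of (user, weight) pairs, where weight plays the role
    of w_{a,u} (for example, significance times Pearson correlation).
    Returns None when no neighbor rated the item or the weights sum to zero.
    """
    def mean(user):
        return sum(db[user].values()) / len(db[user])

    num = 0.0
    den = 0.0
    for u, w in neighbors:
        if item in db[u]:
            num += w * (db[u][item] - mean(u))
            den += w
    if den == 0:
        return None
    return mean(active) + num / den
```

For example, with an active user whose mean rating is 6 and two neighbors weighted 1.0 and 0.5 whose ratings for the item sit 2 above and 2 below their own means, the prediction is 6 + (2 - 1) / 1.5. The denominator follows the formula as written, so mixing positive and negative weights can make it small; some implementations sum absolute weights instead.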

Recommendation vs Web Ranking
(Figure: inputs labeled User rating, Content, Text Content, User click data, and Link popularity; outputs labeled Item recommendation and Web page ranking.)

Content-Based Recommending
• Recommendations are based on information on the content of items rather than on other users' opinions.
• Uses a machine learning algorithm to induce a profile of the user's preferences from examples based on a featural description of content.
• Applications:
– News article recommendation

Advantages of Content-Based Approach
• No need for data on other users.
– No cold-start or sparsity problems.
• Able to recommend to users with unique tastes.
• Able to recommend new and unpopular items
– No first-rater problem.
• Can provide explanations of recommended items by listing content-features that caused an item to be recommended.

Disadvantages of Content-Based Method
• Requires content that can be encoded as meaningful features.
• Users' tastes must be represented as a learnable function of these content features.
• Unable to exploit quality judgments of other users.
– Unless these are somehow included in the content features.

LIBRA: Learning Intelligent Book Recommending Agent
• Content-based recommender for books using information about titles extracted from Amazon.
• Uses information extraction from the web to organize text into fields:
– Author
– Title
– Editorial Reviews
– Customer Comments
– Subject terms
– Related authors
– Related titles

LIBRA System
(Figure: Amazon Pages pass through Information Extraction into the LIBRA Database; Rated Examples go to the Machine Learning Learner, which produces a User Profile; a Predictor uses the profile to output a ranked list of Recommendations.)

Sample Extracted Amazon Book Information

    Title: <The Age of Spiritual Machines: When Computers Exceed Human Intelligence>
    Author: <Ray Kurzweil>
    Price: <11.96>
    Publication Date: <January 2000>
    ISBN: <0140282025>
    Related Titles: <Title: <Robot: Mere Machine or Transcendent Mind> Author: <Hans Moravec> > …
    Reviews: <Author: <Amazon.com Reviews> Text: <How much do we humans…> > …
    Comments: <Stars: <4> Author: <Stephen A. Haines> Text: <Kurzweil has …> > …
    Related Authors: <Hans P. Moravec> <K. Eric Drexler> …
    Subjects: <Science/Mathematics> <Computers> <Artificial Intelligence> …

Libra Content Information
• Libra uses this extracted information to form "bags of words" for the following slots:
– Author
– Title
– Description (reviews and comments)
– Subjects
– Related Titles
– Related Authors
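To illustrate how extracted fields could become per-slot bags of words, here is a minimal Python sketch. The abbreviated `book` record, the slot keys, and the simple lowercase tokenizer are assumptions made for this example; the slides do not describe LIBRA's actual tokenization.

```python
import re
from collections import Counter

# Hypothetical, abbreviated record in the shape of the sample above.
book = {
    "author": "Ray Kurzweil",
    "title": "The Age of Spiritual Machines",
    "subjects": "Science/Mathematics Computers Artificial Intelligence",
    "related_authors": "Hans P. Moravec K. Eric Drexler",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def slot_bags(record):
    """Build one bag of words (token -> count) for each slot."""
    return {slot: Counter(tokenize(text)) for slot, text in record.items()}
```

Keeping a separate `Counter` per slot means the same word can later be treated as a different feature depending on the slot it appears in.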

Libra Overview
• User rates selected titles on a 1 to 10 scale.
• Use a Bayesian algorithm to learn
– Rating 6 – 10: Positive
– Rating 1 – 5: Negative
• The learned classifier is used to rank all other books as recommendations.
• User can also provide explicit positive/negative keywords, which are used as priors to bias the role of these features in categorization.

Bayesian Categorization in LIBRA
• Model is generalized to generate a vector of bags of words (one bag for each slot).
– Instances of the same word in different slots are treated as separate features:
• "Crichton" in author vs. "Crichton" in description
• Training examples are treated as weighted positive or negative examples when estimating conditional probability parameters:
– An example with rating $1 \le r \le 10$ is given:
positive probability: $(r - 1)/9$
negative probability: $(10 - r)/9$

Implementation & Weighting
• Stopwords removed from all bags.
• A book's title and author are added to its own related title and related author slots.
• All probabilities are smoothed using Laplace estimation to account for small sample size.
• Feature strength of word $w_k$ appearing in a slot $s_j$:

$$\mathrm{strength}(w_k, s_j) = \log \frac{P(w_k \mid \text{positive}, s_j)}{P(w_k \mid \text{negative}, s_j)}$$

Experimental Method
• 10-fold cross-validation to generate learning curves.
• Measured several metrics on independent test data:
– Precision at top 3: % of the top 3 that are positive
– Rating of top 3: Average rating assigned to top 3
– Rank Correlation: Spearman's $r_s$ between the system's and the user's complete rankings.
• Test ablation of related author and related title slots (LIBRA-NR).
– Test influence of information generated by Amazon's collaborative approach.
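The weighted training examples, Laplace smoothing, and feature strength described above can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not LIBRA's implementation: the function names, the data layout (slot to list of tokens), and the add-one smoothing over each slot's vocabulary are choices made for this example.

```python
import math
from collections import defaultdict


def rating_weights(r):
    """Positive and negative weights for a rating r with 1 <= r <= 10."""
    return (r - 1) / 9, (10 - r) / 9


def train(examples):
    """Accumulate weighted token counts per class and slot.

    examples: list of (bags, rating), where bags maps slot -> list of tokens.
    Returns (counts, vocab): counts[label][slot][token] is a weighted count
    and vocab[slot] is the set of tokens seen in that slot.
    """
    counts = {
        "pos": defaultdict(lambda: defaultdict(float)),
        "neg": defaultdict(lambda: defaultdict(float)),
    }
    vocab = defaultdict(set)
    for bags, r in examples:
        w_pos, w_neg = rating_weights(r)
        for slot, tokens in bags.items():
            for tok in tokens:
                counts["pos"][slot][tok] += w_pos
                counts["neg"][slot][tok] += w_neg
                vocab[slot].add(tok)
    return counts, vocab


def prob(counts, vocab, label, slot, word):
    """Laplace-smoothed estimate of P(word | label, slot)."""
    slot_counts = counts[label][slot]
    total = sum(slot_counts.values())
    size = len(vocab[slot]) or 1  # avoid dividing by zero for unseen slots
    return (slot_counts.get(word, 0.0) + 1.0) / (total + size)


def strength(counts, vocab, slot, word):
    """Log ratio of positive to negative probability for word in slot."""
    return math.log(
        prob(counts, vocab, "pos", slot, word)
        / prob(counts, vocab, "neg", slot, word)
    )
```

Because counts are keyed by slot, a word such as "crichton" can have a positive strength in the author slot and a negative strength in the description slot for the same user.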
