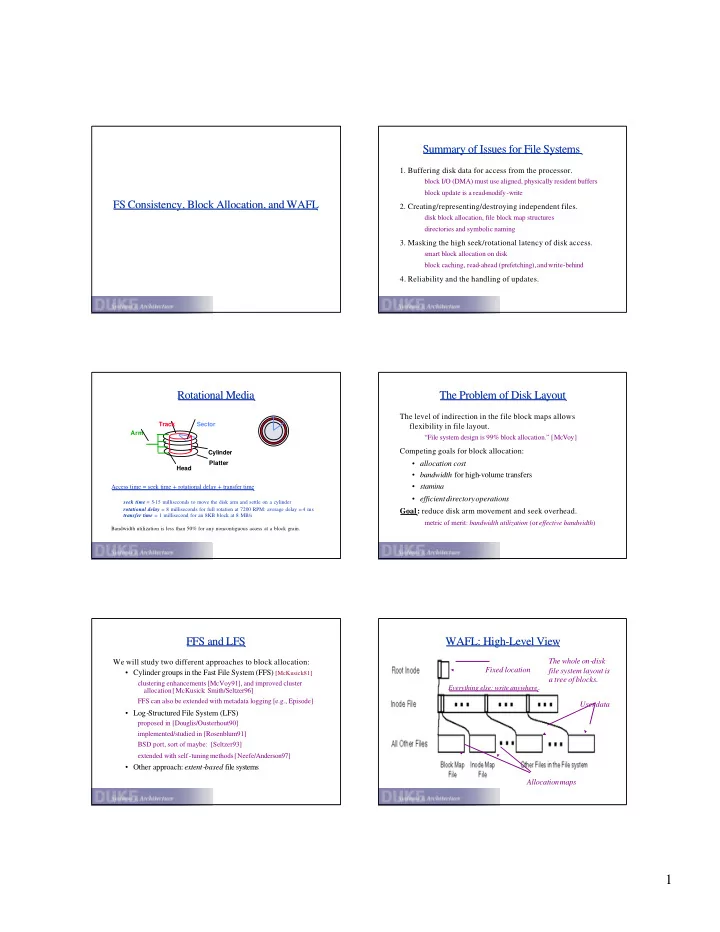

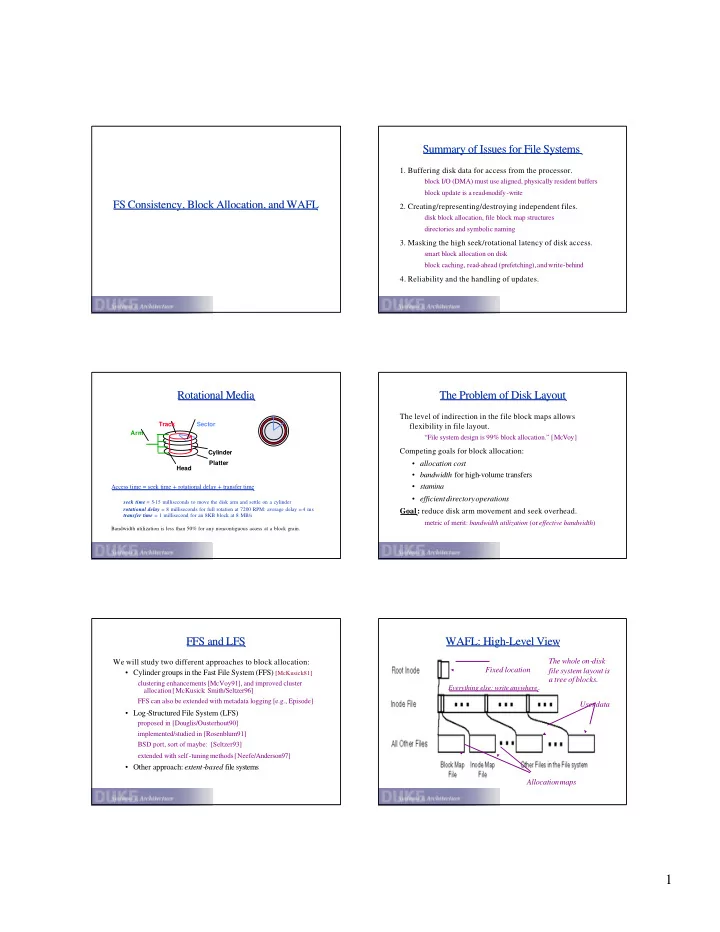

Summary of Issues for File Systems
1. Buffering disk data for access from the processor.
  • block I/O (DMA) must use aligned, physically resident buffers
  • block update is a read-modify-write
2. Creating/representing/destroying independent files.
  • disk block allocation, file block map structures
  • directories and symbolic naming
3. Masking the high seek/rotational latency of disk access.
  • smart block allocation on disk
  • block caching, read-ahead (prefetching), and write-behind
4. Reliability and the handling of updates.

FS Consistency, Block Allocation, and WAFL

Rotational Media
[Figure: disk geometry; labels: Track, Sector, Arm, Cylinder, Platter, Head]
Access time = seek time + rotational delay + transfer time
• seek time = 5-15 milliseconds to move the disk arm and settle on a cylinder
• rotational delay = about 8 milliseconds for a full rotation at 7200 RPM: average delay = about 4 ms
• transfer time = 1 millisecond for an 8KB block at 8 MB/s
Bandwidth utilization is less than 50% for any noncontiguous access at a block grain. (With these numbers, even a zero-length seek still costs about 4 ms of rotational delay per 1 ms of transfer, so utilization is at most about 20%.)

The Problem of Disk Layout
The level of indirection in the file block maps allows flexibility in file layout.
"File system design is 99% block allocation." [McVoy]
Competing goals for block allocation:
• allocation cost
• bandwidth for high-volume transfers
• stamina
• efficient directory operations
Goal: reduce disk arm movement and seek overhead.
Metric of merit: bandwidth utilization (or effective bandwidth).

FFS and LFS
We will study two different approaches to block allocation:
• Cylinder groups in the Fast File System (FFS) [McKusick81]
  clustering enhancements [McVoy91], and improved cluster allocation [McKusick: Smith/Seltzer96]
  FFS can also be extended with metadata logging [e.g., Episode]
• Log-Structured File System (LFS)
  proposed in [Douglis/Ousterhout90]
  implemented/studied in [Rosenblum91]
  BSD port, sort of maybe: [Seltzer93]
  extended with self-tuning methods [Neefe/Anderson97]
• Other approach: extent-based file systems

WAFL: High-Level View
The whole on-disk file system layout is a tree of blocks. The root is at a fixed location; everything else: write anywhere.
[Figure: WAFL block tree; labels: Fixed location, User data, Allocation maps]
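To make the Rotational Media numbers concrete, the following Python sketch evaluates access time = seek time + rotational delay + transfer time and the resulting bandwidth utilization. The function names are hypothetical, and the sketch assumes 1 MB = 1000 KB so that an 8KB block at 8 MB/s takes exactly 1 ms, matching the slide.

```python
def avg_rotational_delay_ms(rpm: float) -> float:
    """Average wait for the target sector: half of one full rotation."""
    full_rotation_ms = 60_000.0 / rpm
    return full_rotation_ms / 2


def transfer_time_ms(block_kb: float, bandwidth_mb_s: float) -> float:
    """Time to move one block under the head (assumes 1 MB = 1000 KB)."""
    return block_kb / bandwidth_mb_s


def access_time_ms(seek_ms, rpm, block_kb, bandwidth_mb_s):
    """Access time = seek time + rotational delay + transfer time."""
    return (seek_ms
            + avg_rotational_delay_ms(rpm)
            + transfer_time_ms(block_kb, bandwidth_mb_s))


def bandwidth_utilization(seek_ms, rpm, block_kb, bandwidth_mb_s):
    """Fraction of the access time spent actually transferring data."""
    return (transfer_time_ms(block_kb, bandwidth_mb_s)
            / access_time_ms(seek_ms, rpm, block_kb, bandwidth_mb_s))


if __name__ == "__main__":
    for seek in (0, 5, 15):
        u = bandwidth_utilization(seek, rpm=7200, block_kb=8, bandwidth_mb_s=8)
        print(f"seek={seek:2d} ms  utilization={u:.1%}")
```

With a 7200 RPM disk and 8KB blocks this gives roughly 19% utilization with no seek, about 10% with a 5 ms seek, and about 5% with a 15 ms seek, which is why block allocation concentrates on keeping related blocks contiguous.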

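Item 1 of the Summary slide notes that a block update is a read-modify-write: the device transfers only whole, aligned blocks, so changing a few bytes means reading the enclosing block, patching it in memory, and writing the whole block back. A minimal Python sketch follows; `BlockDevice`, `write_bytes`, and the tiny 8-byte block size are hypothetical choices for illustration.

```python
BLOCK_SIZE = 8  # hypothetical, tiny block size so the example stays readable


class BlockDevice:
    """Toy device that can only transfer whole, aligned blocks."""

    def __init__(self, nblocks: int):
        self._blocks = [bytes(BLOCK_SIZE) for _ in range(nblocks)]
        self.reads = 0
        self.writes = 0

    def read_block(self, n: int) -> bytes:
        self.reads += 1
        return self._blocks[n]

    def write_block(self, n: int, data: bytes) -> None:
        assert len(data) == BLOCK_SIZE, "only whole blocks can be written"
        self.writes += 1
        self._blocks[n] = bytes(data)


def write_bytes(dev: BlockDevice, offset: int, data: bytes) -> None:
    """Update an arbitrary byte range by read-modify-write of each block it touches."""
    pos = 0
    while pos < len(data):
        blk, within = divmod(offset + pos, BLOCK_SIZE)
        chunk = min(BLOCK_SIZE - within, len(data) - pos)
        buf = bytearray(dev.read_block(blk))                # read
        buf[within:within + chunk] = data[pos:pos + chunk]  # modify
        dev.write_block(blk, bytes(buf))                    # write
        pos += chunk
```

A write that covers an entire block could skip the read; this sketch always reads so the pattern stays visible. A 4-byte write at offset 6, for example, straddles two blocks and costs two block reads and two block writes.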
WAFL: A Closer Look

Snapshots
"WAFL's primary distinguishing characteristic is Snapshots, which are readonly copies of the entire file system."
This was really the origin of the idea of a point-in-time copy for the file server market. What is this idea good for?

Snapshots
The snapshot mechanism is used for user-accessible snapshots and for transient consistency points.
How is this like a fork?

Shadowing
Shadowing is the basic technique for doing an atomic force; it is reminiscent of copy-on-write.
1. Starting point: modify purple/grey blocks.
2. Write new blocks to disk: prepare new block map.
3. Overwrite block map (atomic commit) and free old blocks.
Frequent problem: nonsequential disk writes damage clustered allocation on disk. How does WAFL deal with this?

WAFL Consistency Points
"WAFL uses Snapshots internally so that it can restart quickly even after an unclean system shutdown."
"A consistency point is a completely self-consistent image of the entire file system. When WAFL restarts, it simply reverts to the most recent consistency point."
• Buffer dirty data in memory (delayed writes) and write new consistency points as an atomic batch (force).
• A consistency point transitions the FS from one self-consistent state to another.
• Combine with NFS operation log in NVRAM.
What if NVRAM fails?

The Problem of Metadata Updates
Metadata updates are a second source of FFS seek overhead.
• Metadata writes are poorly localized. E.g., extending a file requires writes to the inode, direct and indirect blocks, cylinder group bit maps and summaries, and the file block itself.
Metadata writes can be delayed, but this incurs a higher risk of file system corruption in a crash.
• If you lose your metadata, you are dead in the water.
• FFS schedules metadata block writes carefully to limit the kinds of inconsistencies that can occur.
Some metadata updates must be synchronous on controllers that don't respect the order of writes.
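The Shadowing steps (write new blocks elsewhere, prepare a new block map, then commit by overwriting one pointer) can be sketched on a two-level tree. This is a simplified model, not WAFL's on-disk format: `ShadowTree` is hypothetical, a Python dict stands in for the disk, and `self.root` stands in for the fixed-location root block. Keeping an old root alive turns the same mechanism into a snapshot, with old and new trees sharing every unmodified block.

```python
class ShadowTree:
    """Two-level tree: a root block holding pointers to data blocks.

    Blocks are never overwritten in place; only self.root (standing in for
    the fixed-location root block) changes, and that single update commits.
    """

    def __init__(self, leaves):
        self.disk = {}        # block number -> contents
        self.next_blk = 1
        self.snapshots = []   # roots of retained read-only snapshots
        ptrs = tuple(self._alloc(d) for d in leaves)
        self.root = self._alloc(ptrs)

    def _alloc(self, contents):
        blk = self.next_blk
        self.next_blk += 1
        self.disk[blk] = contents
        return blk

    def _held(self, blk):
        """True if some retained snapshot still references blk."""
        return any(blk == r or blk in self.disk[r] for r in self.snapshots)

    def read(self, i, root=None):
        root = self.root if root is None else root
        return self.disk[self.disk[root][i]]

    def snapshot(self):
        self.snapshots.append(self.root)
        return self.root

    def write(self, i, data):
        old_root = self.root
        old_ptrs = self.disk[old_root]
        new_leaf = self._alloc(data)                       # write new data block elsewhere
        new_ptrs = old_ptrs[:i] + (new_leaf,) + old_ptrs[i + 1:]
        new_root = self._alloc(new_ptrs)                   # prepare new block map
        self.root = new_root                               # atomic commit: one pointer update
        for blk in (old_ptrs[i], old_root):                # free old blocks no snapshot needs
            if not self._held(blk):
                del self.disk[blk]
```

Until `self.root` changes, a crash leaves the old tree fully intact; after it changes, the new tree is complete. The snapshot simply pins an old root so its blocks are never freed.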

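The WAFL Consistency Points slide combines two mechanisms: delayed writes flushed as an atomic batch, and an operation log in NVRAM covering the requests since the last consistency point. The Python sketch below models both under simplifying assumptions: a dict of file names to contents stands in for the whole file system image, and `WaflLikeServer` and its methods are hypothetical. Within this model, losing NVRAM means recovery falls back to the last consistency point and the operations logged after it are lost.

```python
class WaflLikeServer:
    """Hypothetical model: on-disk state changes only at consistency points."""

    def __init__(self):
        self.on_disk = {}     # last committed consistency point (name -> data)
        self.dirty = {}       # delayed writes buffered in memory
        self.nvram_log = []   # operations since the last consistency point

    def write(self, name, data):
        self.nvram_log.append((name, data))  # log the operation in NVRAM first
        self.dirty[name] = data              # then buffer it in memory

    def read(self, name):
        return self.dirty.get(name, self.on_disk.get(name))

    def consistency_point(self):
        new_image = dict(self.on_disk)
        new_image.update(self.dirty)         # push all delayed writes as one batch
        self.on_disk = new_image             # atomic switch to the new image
        self.dirty.clear()
        self.nvram_log.clear()               # logged ops are now durable on disk

    def crash_and_recover(self, nvram_survived=True):
        self.dirty.clear()                   # memory contents are lost
        log = self.nvram_log if nvram_survived else []
        self.nvram_log = []
        for name, data in log:               # replay ops since the last CP
            self.write(name, data)
```

Because the disk image only ever moves from one consistency point to the next, recovery never needs to repair on-disk structures; it only replays the logged requests.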
FFS Failure Recovery
FFS uses a two-pronged approach to handling failures:
1. Carefully order metadata updates to ensure that no dangling references can exist on disk after a failure.
  • Never recycle a resource (block or inode) before zeroing all pointers to it (truncate, unlink, rmdir).
  • Never point to a structure before it has been initialized. E.g., sync the inode on creat before filling the directory entry, and sync a new block before writing the block map.
2. Run a file system scavenger (fsck) to fix other problems.
  • Free blocks and inodes that are not referenced.
  • Fsck will never encounter a dangling reference or double allocation.

Alternative: Logging and Journaling
Logging can be used to localize synchronous metadata writes and reduce the work that must be done on recovery.
Universally used in database systems.
Used for metadata writes in journaling file systems (e.g., Episode).
Key idea: group each set of related updates into a single log record that can be written to disk atomically ("all-or-nothing").
• Log records are written to the log file or log disk sequentially. No seeks, and preserves temporal ordering.
• Each log record is trailed by a marker (e.g., checksum) that says "this log record is complete".
• To recover, scan the log and reapply updates.

Metadata Logging
Here's one approach to building a fast file system:
1. Start with FFS with clustering.
2. Make all metadata writes asynchronous.
But that approach cannot survive a failure, so:
3. Add a supplementary log for modified metadata.
4. When metadata changes, write new versions immediately to the log, in addition to the asynchronous writes to "home".
5. If the system crashes, recover by scanning the log. Much faster than scavenging (fsck) for large volumes.
6. If the system does not crash, then discard the log.

The Nub of WAFL
WAFL's consistency points allow it to buffer writes and push them out in a batch.
• Deferred, clustered allocation
• Batch writes
• Localize writes
Indirection through the metadata "tree" allows it to write data wherever convenient: the tree can point anywhere.
• Maximize the benefits from batching writes in consistency points.
• Also allow multiple copies of a given piece of metadata, for snapshots.

SnapMirror
Is it research?
What makes it interesting/elegant?
What are the tech trends that motivate SnapMirror, and WAFL before it?
Why is disaster recovery so important now?

Mirroring
Structural issue: build mirroring support at:
• Application level
• FS level
• Block storage level (e.g., RAID unit)
Who has the information?
• What has changed?
• What has been deallocated?
How does WAFL make mirroring easier?
If a mirror fails, what is lost?
Can both mirrors operate at the same time?
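The second prong on the FFS Failure Recovery slide, the fsck scavenger, amounts to a reachability scan: walk the directory tree from the root, then free any inode or block that is marked allocated but never reached. The sketch assumes a hypothetical in-memory model (`inodes` maps an inode number to its block list and, for directories, its entries); real fsck reads these structures from disk and performs many more checks.

```python
def fsck(inodes, root_inum, alloc_inodes, alloc_blocks):
    """Scavenge leaked inodes and blocks after a crash (hypothetical data model).

    inodes: inum -> {"blocks": [block numbers], "entries": {name: inum}}
            ("entries" is present only for directories)
    alloc_inodes / alloc_blocks: sets marked allocated in the bitmaps;
            leaked entries are removed from them in place.
    Returns the (inodes, blocks) that were freed.
    """
    seen_inodes, seen_blocks = set(), set()
    stack = [root_inum]
    while stack:
        inum = stack.pop()
        if inum in seen_inodes:
            continue
        seen_inodes.add(inum)
        ino = inodes[inum]
        seen_blocks.update(ino["blocks"])
        stack.extend(ino.get("entries", {}).values())
    # Careful ordering means every reachable object is marked allocated.
    assert seen_inodes <= alloc_inodes and seen_blocks <= alloc_blocks
    leaked_inodes = alloc_inodes - seen_inodes
    leaked_blocks = alloc_blocks - seen_blocks
    alloc_inodes -= leaked_inodes
    alloc_blocks -= leaked_blocks
    return leaked_inodes, leaked_blocks
```

For example, an inode that was synced on creat but whose directory entry never reached disk is unreachable from the root, so the scan reports it as leaked and frees it; the ordering rules guarantee that leaks are the only damage it has to repair.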

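The scheme on the Alternative: Logging and Journaling slide can be sketched as follows: each record groups related updates and is trailed by a checksum, and recovery scans the log in order, reapplying complete records and stopping at the first torn one. The record format (JSON body, `|`, decimal CRC32, newline) and the names `encode_record` and `recover` are assumptions made for this sketch; `json` and `zlib.crc32` are standard Python library functions.

```python
import json
import zlib


def encode_record(updates):
    """One log record: all related updates, trailed by a CRC32 'complete' marker."""
    body = json.dumps(updates, sort_keys=True).encode()
    return body + b"|" + str(zlib.crc32(body)).encode() + b"\n"


def recover(log_bytes, home):
    """Scan the log in order and reapply every complete record to 'home' copies."""
    applied = 0
    for line in log_bytes.split(b"\n"):
        body, sep, crc = line.rpartition(b"|")
        if not sep or not crc.isdigit() or zlib.crc32(body) != int(crc):
            break                      # torn or missing trailer: the log ends here
        home.update(json.loads(body))  # redo the updates in this record
        applied += 1
    return applied
```

Reapplying a record is a plain overwrite of the logged values, so running recovery twice gives the same result; that idempotence matters because the system can crash again during recovery.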
What Has Changed?
Given a snapshot X, WAFL can ask: is block B allocated in snapshot X?
Given a snapshot X and a later snapshot Y, WAFL can ask: what blocks of Y should be sent to the mirror?
Allocation bit of block B in X and in Y:
• 0 in X, 0 in Y: unused
• 0 in X, 1 in Y: added
• 1 in X, 0 in Y: deleted
• 1 in X, 1 in Y: unchanged

Details
SnapMirror names disk blocks: why? What are the implications?
What if a mirror fails? What is lost? How to keep the mirror self-consistent?
How does the no-overwrite policy of WAFL help in SnapMirror?
What are the strengths/weaknesses of implementing this functionality above or below the file system?
Does this conclusion depend on other details of WAFL?
What can we conclude from the experiments?

FFS Cylinder Groups
FFS defines cylinder groups as the unit of disk locality, and it factors locality into allocation choices.
• typical: thousands of cylinders, dozens of groups
• Strategy: place "related" data blocks in the same cylinder group whenever possible.
  seek latency grows with seek distance
• Smear large files across groups: place a run of contiguous blocks in each group.
• Reserve inode blocks in each cylinder group.
  This allows inodes to be allocated close to their directory entries and close to their data blocks (for small files).

FFS Allocation Policies
1. Allocate file inodes close to their containing directories.
  • For mkdir, select a cylinder group with a more-than-average number of free inodes.
  • For creat, place the inode in the same group as the parent.
2. Concentrate related file data blocks in cylinder groups.
  Most files are read and written sequentially.
  • Place initial blocks of a file in the same group as its inode.
  How should we handle directory blocks?
  • Place adjacent logical blocks in the same cylinder group.
  Logical block n+1 goes in the same group as block n.
  Switch to a different group for each indirect block.
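The FFS Allocation Policies slide can be sketched as a few small decisions. `CylinderGroup`, the helper names, and the block numbering are hypothetical; the round-robin choice in `group_for_indirect` is a simplification standing in for "switch to a different group", not the exact rule FFS uses.

```python
from dataclasses import dataclass, field


@dataclass
class CylinderGroup:
    free_inodes: int
    free_blocks: set = field(default_factory=set)


def choose_group_for_mkdir(groups):
    """mkdir: pick a group with a more-than-average number of free inodes."""
    avg = sum(g.free_inodes for g in groups) / len(groups)
    for i, g in enumerate(groups):
        if g.free_inodes > avg:
            return i
    # No group is above average only when all are equal: take the first.
    return max(range(len(groups)), key=lambda i: groups[i].free_inodes)


def choose_group_for_creat(parent_group):
    """creat: place the new inode in the same group as its parent directory."""
    return parent_group


def next_data_block(group, prev_block=None):
    """Keep logical block n+1 in block n's group, contiguous when possible."""
    if prev_block is not None and prev_block + 1 in group.free_blocks:
        blk = prev_block + 1
    else:
        blk = min(group.free_blocks)  # ValueError if the group has no free blocks
    group.free_blocks.remove(blk)
    return blk


def group_for_indirect(current_group, ngroups):
    """Simplification: move to the next group when a new indirect block starts."""
    return (current_group + 1) % ngroups
```

Spreading new directories toward emptier groups leaves room for the files later created inside them, while creat and the data-block rule pull each file's inode and blocks toward its directory.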
Disk Hardware (4)
[Figure: RAID levels 3 through 5; backup and parity drives are shaded.]

What to Know
We did not cover the LFS material in class, though it was in the Tanenbaum reading. I just want you to know what LFS is and how it compares to WAFL.
• LFS is log-structured: all writes are to the end of the log.
• WAFL can write anywhere.
• Both use no overwrite and indirect access to metadata.
• LFS requires a cleaner to find log segments with few allocated blocks and rewrite those blocks at the end of the log so it can free the segment.
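The cleaner described on the What to Know slide can be sketched as: pick segments with few live blocks, copy those blocks to the end of the log, and mark the segments free. The data model (a dict from segment id to its list of live blocks, a list for the log tail) and the 50% utilization threshold are assumptions for illustration, not taken from LFS itself.

```python
def clean(segments, log, seg_size, threshold=0.5):
    """Hypothetical LFS-style cleaner: compact sparsely used segments.

    segments: {segment id: list of live block ids in that segment}
    log: list of block ids appended at the end of the log
    Returns the ids of segments freed for reuse.
    """
    freed = []
    # Visit the emptiest segments first: they cost the least to copy.
    for seg_id, live in sorted(segments.items(), key=lambda kv: len(kv[1])):
        if len(live) / seg_size >= threshold:
            break                  # remaining segments are too full to be worth it
        log.extend(live)           # rewrite live blocks at the end of the log
        segments[seg_id] = []      # nothing live remains in this segment
        freed.append(seg_id)
    return freed
```

The copying is the price of always writing at the end of the log; WAFL, which can write anywhere, instead allocates from whatever blocks are free.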

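The What Has Changed? slide reduces SnapMirror's question to a per-block comparison of two allocation bitmaps. In this Python sketch, bitmaps are lists of 0/1 indexed by block number, and `classify` and `blocks_to_send` are hypothetical helpers. Only added blocks need to be shipped: because WAFL never overwrites a block in place, a block allocated in both X and Y still holds the same contents, and deleted blocks can be freed on the mirror.

```python
def classify(x_bits, y_bits):
    """Label each block by its allocation bit in snapshot X and later snapshot Y."""
    names = {(0, 0): "unused", (0, 1): "added", (1, 0): "deleted", (1, 1): "unchanged"}
    return [names[(x, y)] for x, y in zip(x_bits, y_bits)]


def blocks_to_send(x_bits, y_bits):
    """Blocks allocated in Y but not in X: new data the mirror does not have yet."""
    return [b for b, (x, y) in enumerate(zip(x_bits, y_bits)) if y and not x]
```

Because the comparison needs only the two bitmaps, the file system can answer "what has changed?" without walking any files.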