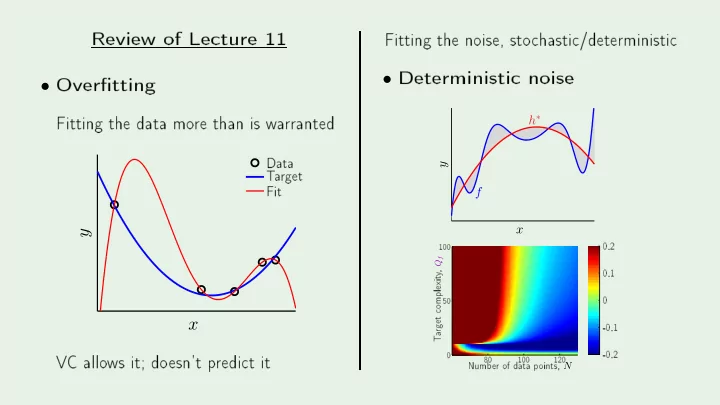

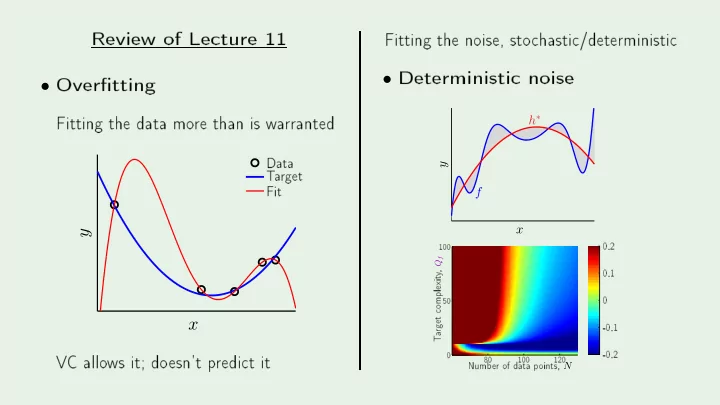

Review of Lecture 11
• Overfitting: fitting the data more than is warranted
[Figure: Data, Target, and Fit, plotted as $y$ vs. $x$.]
• VC allows it; doesn't predict it
• Fitting the noise, stochastic/deterministic
• Deterministic noise
[Figure: target $f$ and best hypothesis $h^*$ vs. $x$; a map over the number of data points $N$ and the target complexity $Q_f$.]

Learning From Data
Yaser S. Abu-Mostafa
California Institute of Technology
Lecture 12: Regularization
Sponsored by Caltech's Provost Office, E&AS Division, and IST
Thursday, May 10, 2012

Outline
• Regularization - informal
• Regularization - formal
• Weight decay
• Choosing a regularizer

Creator: Yaser Abu-Mostafa - LFD Lecture 12

Two approaches to regularization
Mathematical: Ill-posed problems in function approximation
Heuristic: Handicapping the minimization of $E_{\text{in}}$

A familiar example
[Figure: two panels, "without regularization" and "with regularization", each plotting $y$ vs. $x$.]

and the winner is . . .
[Figure: two panels, "without regularization" and "with regularization", each showing the average hypothesis $\bar{g}(x)$ against the target $\sin(\pi x)$, $y$ vs. $x$.]
without regularization: bias = 0.21, var = 1.69
with regularization: bias = 0.23, var = 0.33
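The comparison above can be reproduced in spirit with a short Monte Carlo simulation. The sketch below assumes the familiar setup these numbers come from: target $\sin(\pi x)$ on $[-1, 1]$, datasets of $N = 2$ noiseless points, and lines $h(x) = w_0 + w_1 x$. The regularized fit uses the weight-decay solution $(\mathrm{Z}^{\mathsf T}\mathrm{Z} + \lambda\mathrm{I})^{-1}\mathrm{Z}^{\mathsf T}\mathbf{y}$ derived later in this lecture. The value $\lambda = 0.1$, the trial count, and the helper names are assumptions, so the printed estimates should not be expected to match the slide's figures exactly.

```python
import numpy as np

def fit_line(x, y, lam):
    # Weight-decay fit of h(x) = w0 + w1*x: w = (Z^T Z + lam*I)^{-1} Z^T y.
    # lam = 0 gives the unregularized line through the two points.
    Z = np.column_stack([np.ones_like(x), x])
    return np.linalg.solve(Z.T @ Z + lam * np.eye(2), Z.T @ y)

def bias_variance(lam, trials=10000, seed=0):
    rng = np.random.default_rng(seed)
    xs = np.linspace(-1.0, 1.0, 101)        # grid standing in for E_x over [-1, 1]
    f = np.sin(np.pi * xs)                  # target sin(pi x)
    preds = np.empty((trials, xs.size))
    for t in range(trials):
        x = rng.uniform(-1.0, 1.0, size=2)  # one dataset D with N = 2 noiseless points
        w = fit_line(x, np.sin(np.pi * x), lam)
        preds[t] = w[0] + w[1] * xs         # g^(D)(x) on the grid
    g_bar = preds.mean(axis=0)              # average hypothesis g_bar(x)
    bias = float(np.mean((g_bar - f) ** 2))
    var = float(np.mean((preds - g_bar) ** 2))
    return bias, var

# lam = 0.1 is an assumed value for the regularized case.
results = {lam: bias_variance(lam) for lam in (0.0, 0.1)}
for lam, (b, v) in results.items():
    print(f"lam = {lam}: bias ~ {b:.2f}, var ~ {v:.2f}")
```

Expect the regularized variance to come out below the unregularized one, with only a small change in bias; the exact values depend on the assumed $\lambda$.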

The polynomial model
$\mathcal{H}_Q$: polynomials of order $Q$; linear regression in $\mathcal{Z}$ space

$$\mathbf{z} = \begin{bmatrix} 1 \\ L_1(x) \\ \vdots \\ L_Q(x) \end{bmatrix}, \qquad \mathcal{H}_Q = \left\{ \sum_{q=0}^{Q} w_q L_q(x) \right\}$$

Legendre polynomials:
$$L_1 = x, \quad L_2 = \tfrac{1}{2}(3x^2 - 1), \quad L_3 = \tfrac{1}{2}(5x^3 - 3x), \quad L_4 = \tfrac{1}{8}(35x^4 - 30x^2 + 3), \quad L_5 = \tfrac{1}{8}(63x^5 - \cdots)$$
[Figure: plots of $L_1$ through $L_5$.]
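One way to build the feature vector $\mathbf{z}$ is Bonnet's recurrence, $(q+1)L_{q+1}(x) = (2q+1)\,x\,L_q(x) - q\,L_{q-1}(x)$, starting from $L_0 = 1$ and $L_1 = x$. The NumPy sketch below is an illustration; the function name `legendre_features` is hypothetical. NumPy also ships `numpy.polynomial.legendre.legvander`, which returns the same matrix with columns $L_0(x), \dots, L_Q(x)$.

```python
import numpy as np

def legendre_features(x, Q):
    """Return the N x (Q+1) matrix whose row n is z_n = (L_0(x_n), ..., L_Q(x_n)).

    Uses Bonnet's recurrence (q+1) L_{q+1} = (2q+1) x L_q - q L_{q-1},
    starting from L_0(x) = 1 and L_1(x) = x.
    """
    x = np.atleast_1d(np.asarray(x, dtype=float)).ravel()
    Z = np.empty((x.size, Q + 1))
    Z[:, 0] = 1.0
    if Q >= 1:
        Z[:, 1] = x
    for q in range(1, Q):
        Z[:, q + 1] = ((2 * q + 1) * x * Z[:, q] - q * Z[:, q - 1]) / (q + 1)
    return Z

Z = legendre_features(np.linspace(-1.0, 1.0, 5), 5)
print(Z.round(4))
```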

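Unconstrained solution
Given $(x_1, y_1), \dots, (x_N, y_N) \longrightarrow (\mathbf{z}_1, y_1), \dots, (\mathbf{z}_N, y_N)$

$$\text{Minimize } E_{\text{in}}(\mathbf{w}) = \frac{1}{N}\sum_{n=1}^{N}\left(\mathbf{w}^{\mathsf T}\mathbf{z}_n - y_n\right)^2$$
$$\text{Minimize } \frac{1}{N}(\mathrm{Z}\mathbf{w} - \mathbf{y})^{\mathsf T}(\mathrm{Z}\mathbf{w} - \mathbf{y})$$
$$\mathbf{w}_{\text{lin}} = (\mathrm{Z}^{\mathsf T}\mathrm{Z})^{-1}\mathrm{Z}^{\mathsf T}\mathbf{y}$$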
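A minimal NumPy sketch of the unconstrained solution, assuming hypothetical data (15 noisy samples of $\sin(\pi x)$) and 5th-order Legendre features from `numpy.polynomial.legendre.legvander`. It solves the normal equations $\mathrm{Z}^{\mathsf T}\mathrm{Z}\,\mathbf{w} = \mathrm{Z}^{\mathsf T}\mathbf{y}$ with a linear solve instead of forming the inverse explicitly, which is the usual numerical practice; the helper names are illustrative.

```python
import numpy as np

def w_lin(Z, y):
    """Unregularized solution: minimizes E_in(w) = (1/N) (Zw - y)^T (Zw - y).

    Solves Z^T Z w = Z^T y; assumes Z has full column rank.
    """
    return np.linalg.solve(Z.T @ Z, Z.T @ y)

def E_in(w, Z, y):
    r = Z @ w - y
    return float(r @ r) / len(y)

# Hypothetical data: 15 noisy samples of sin(pi x), features L_0..L_5.
rng = np.random.default_rng(1)
x = rng.uniform(-1.0, 1.0, 15)
y = np.sin(np.pi * x) + 0.1 * rng.standard_normal(15)
Z = np.polynomial.legendre.legvander(x, 5)

w = w_lin(Z, y)
print("w_lin =", w.round(3))
print("E_in  =", E_in(w, Z, y))
```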

Constraining the weights
Hard constraint: $\mathcal{H}_2$ is a constrained version of $\mathcal{H}_{10}$ with $w_q = 0$ for $q > 2$
Softer version: $\sum_{q=0}^{Q} w_q^2 \le C$ ("soft-order" constraint)

$$\text{Minimize } \frac{1}{N}(\mathrm{Z}\mathbf{w} - \mathbf{y})^{\mathsf T}(\mathrm{Z}\mathbf{w} - \mathbf{y}) \quad \text{subject to: } \mathbf{w}^{\mathsf T}\mathbf{w} \le C$$

Solution: $\mathbf{w}_{\text{reg}}$ instead of $\mathbf{w}_{\text{lin}}$

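Solving for $\mathbf{w}_{\text{reg}}$

$$\text{Minimize } E_{\text{in}}(\mathbf{w}) = \frac{1}{N}(\mathrm{Z}\mathbf{w} - \mathbf{y})^{\mathsf T}(\mathrm{Z}\mathbf{w} - \mathbf{y}) \quad \text{subject to: } \mathbf{w}^{\mathsf T}\mathbf{w} \le C$$

[Figure: the constraint boundary $\mathbf{w}^{\mathsf T}\mathbf{w} = C$, contours of constant $E_{\text{in}}$ around $\mathbf{w}_{\text{lin}}$, and, at $\mathbf{w}_{\text{reg}}$, the normal to the boundary and $\nabla E_{\text{in}}$.]

When $\mathbf{w}_{\text{lin}}$ lies outside the constraint, the solution sits on the boundary, where $-\nabla E_{\text{in}}$ points straight out along the normal $\mathbf{w}_{\text{reg}}$: any component along the boundary would let us lower $E_{\text{in}}$ while staying feasible. Writing the proportionality constant as $2\lambda/N$:

$$\nabla E_{\text{in}}(\mathbf{w}_{\text{reg}}) \propto -\mathbf{w}_{\text{reg}}, \qquad \nabla E_{\text{in}}(\mathbf{w}_{\text{reg}}) = -2\frac{\lambda}{N}\mathbf{w}_{\text{reg}}$$
$$\nabla E_{\text{in}}(\mathbf{w}_{\text{reg}}) + 2\frac{\lambda}{N}\mathbf{w}_{\text{reg}} = \mathbf{0}$$

This is exactly the condition for a minimum of
$$E_{\text{in}}(\mathbf{w}) + \frac{\lambda}{N}\mathbf{w}^{\mathsf T}\mathbf{w}$$
whose gradient is $\nabla E_{\text{in}}(\mathbf{w}) + 2\frac{\lambda}{N}\mathbf{w}$. A larger budget corresponds to a smaller penalty: $C \uparrow \;\; \lambda \downarrow$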
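The correspondence $C \uparrow\; \lambda \downarrow$ can be checked numerically. The sketch below assumes the penalized solution $(\mathrm{Z}^{\mathsf T}\mathrm{Z} + \lambda\mathrm{I})^{-1}\mathrm{Z}^{\mathsf T}\mathbf{y}$ (the minimizer of $E_{\text{in}}(\mathbf{w}) + \frac{\lambda}{N}\mathbf{w}^{\mathsf T}\mathbf{w}$) and, for a given budget $C$, bisects on $\lambda$ until $\mathbf{w}_{\text{reg}}^{\mathsf T}\mathbf{w}_{\text{reg}} = C$. Bisection works because $\mathbf{w}_{\text{reg}}^{\mathsf T}\mathbf{w}_{\text{reg}}$ decreases as $\lambda$ grows. The data, the budgets (fractions of $\mathbf{w}_{\text{lin}}^{\mathsf T}\mathbf{w}_{\text{lin}}$, so that the constraint is active), and the function names are hypothetical.

```python
import numpy as np

def w_reg(Z, y, lam):
    # Minimizer of E_in(w) + (lam/N) w^T w: (Z^T Z + lam I)^{-1} Z^T y.
    return np.linalg.solve(Z.T @ Z + lam * np.eye(Z.shape[1]), Z.T @ y)

def norm2(Z, y, lam):
    w = w_reg(Z, y, lam)
    return float(w @ w)

def lam_for_budget(Z, y, C, rtol=1e-12):
    """Return lam >= 0 such that w_reg(lam)^T w_reg(lam) = C (requires C > 0).

    If w_lin already satisfies w^T w <= C, the constraint is inactive and lam = 0.
    """
    if norm2(Z, y, 0.0) <= C:
        return 0.0
    lo, hi = 0.0, 1.0
    while norm2(Z, y, hi) > C:   # grow the bracket until the budget is met
        lo, hi = hi, 2.0 * hi
    while hi - lo > rtol * hi:   # bisection on the decreasing function norm2(lam)
        mid = 0.5 * (lo + hi)
        if norm2(Z, y, mid) > C:
            lo = mid
        else:
            hi = mid
    return hi

# Hypothetical data: 20 noisy samples of sin(pi x), features L_0..L_5.
rng = np.random.default_rng(2)
x = rng.uniform(-1.0, 1.0, 20)
y = np.sin(np.pi * x) + 0.2 * rng.standard_normal(20)
Z = np.polynomial.legendre.legvander(x, 5)

n_lin = norm2(Z, y, 0.0)                       # w_lin^T w_lin
budgets = [0.5 * n_lin, 0.25 * n_lin, 0.1 * n_lin]
lams = [lam_for_budget(Z, y, C) for C in budgets]
for C, lam in zip(budgets, lams):
    print(f"C = {C:.4f}  ->  lambda = {lam:.4f}")
```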

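Augmented error

$$\text{Minimizing } E_{\text{aug}}(\mathbf{w}) = E_{\text{in}}(\mathbf{w}) + \frac{\lambda}{N}\mathbf{w}^{\mathsf T}\mathbf{w} = \frac{1}{N}(\mathrm{Z}\mathbf{w} - \mathbf{y})^{\mathsf T}(\mathrm{Z}\mathbf{w} - \mathbf{y}) + \frac{\lambda}{N}\mathbf{w}^{\mathsf T}\mathbf{w}$$
unconditionally solves
$$\text{Minimizing } E_{\text{in}}(\mathbf{w}) = \frac{1}{N}(\mathrm{Z}\mathbf{w} - \mathbf{y})^{\mathsf T}(\mathrm{Z}\mathbf{w} - \mathbf{y}) \quad \text{subject to: } \mathbf{w}^{\mathsf T}\mathbf{w} \le C \qquad \longleftarrow \text{VC formulation}$$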

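The solution

$$\text{Minimize } E_{\text{aug}}(\mathbf{w}) = E_{\text{in}}(\mathbf{w}) + \frac{\lambda}{N}\mathbf{w}^{\mathsf T}\mathbf{w} = \frac{1}{N}\left((\mathrm{Z}\mathbf{w} - \mathbf{y})^{\mathsf T}(\mathrm{Z}\mathbf{w} - \mathbf{y}) + \lambda\,\mathbf{w}^{\mathsf T}\mathbf{w}\right)$$
$$\nabla E_{\text{aug}}(\mathbf{w}) = \mathbf{0} \;\Longrightarrow\; \mathrm{Z}^{\mathsf T}(\mathrm{Z}\mathbf{w} - \mathbf{y}) + \lambda\mathbf{w} = \mathbf{0}$$
$$\mathbf{w}_{\text{reg}} = (\mathrm{Z}^{\mathsf T}\mathrm{Z} + \lambda\mathrm{I})^{-1}\mathrm{Z}^{\mathsf T}\mathbf{y} \qquad \text{(with regularization)}$$
as opposed to
$$\mathbf{w}_{\text{lin}} = (\mathrm{Z}^{\mathsf T}\mathrm{Z})^{-1}\mathrm{Z}^{\mathsf T}\mathbf{y} \qquad \text{(without regularization)}$$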
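A sketch of two equivalent ways to compute $\mathbf{w}_{\text{reg}}$ in NumPy, on hypothetical data. The first solves $(\mathrm{Z}^{\mathsf T}\mathrm{Z} + \lambda\mathrm{I})\mathbf{w} = \mathrm{Z}^{\mathsf T}\mathbf{y}$ directly. The second uses the identity $\|\mathrm{Z}\mathbf{w} - \mathbf{y}\|^2 + \lambda\|\mathbf{w}\|^2 = \left\| \begin{bmatrix}\mathrm{Z} \\ \sqrt{\lambda}\,\mathrm{I}\end{bmatrix}\mathbf{w} - \begin{bmatrix}\mathbf{y} \\ \mathbf{0}\end{bmatrix} \right\|^2$ and calls ordinary least squares (`numpy.linalg.lstsq`) on the stacked system, which avoids forming $\mathrm{Z}^{\mathsf T}\mathrm{Z}$. Function names and data are illustrative assumptions.

```python
import numpy as np

def w_reg_normal(Z, y, lam):
    """w_reg = (Z^T Z + lam I)^{-1} Z^T y, computed with a linear solve."""
    d = Z.shape[1]
    return np.linalg.solve(Z.T @ Z + lam * np.eye(d), Z.T @ y)

def w_reg_stacked(Z, y, lam):
    """Same minimizer via ordinary least squares on [Z; sqrt(lam) I] w ~ [y; 0]."""
    d = Z.shape[1]
    Z_aug = np.vstack([Z, np.sqrt(lam) * np.eye(d)])
    y_aug = np.concatenate([y, np.zeros(d)])
    return np.linalg.lstsq(Z_aug, y_aug, rcond=None)[0]

# Hypothetical data: 12 noisy samples, features L_0..L_6, lam = 0.5.
rng = np.random.default_rng(5)
x = rng.uniform(-1.0, 1.0, 12)
y = np.sin(np.pi * x) + 0.2 * rng.standard_normal(12)
Z = np.polynomial.legendre.legvander(x, 6)
lam = 0.5

w = w_reg_normal(Z, y, lam)
print(w.round(4))
print(np.allclose(w, w_reg_stacked(Z, y, lam)))
```

Setting `lam = 0.0` recovers $\mathbf{w}_{\text{lin}}$. The stacked form is often preferred when $\mathrm{Z}$ is ill-conditioned (for example at high $Q$), since forming $\mathrm{Z}^{\mathsf T}\mathrm{Z}$ squares the condition number.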

The result
Minimizing $E_{\text{in}}(\mathbf{w}) + \frac{\lambda}{N}\mathbf{w}^{\mathsf T}\mathbf{w}$ for different $\lambda$'s:
[Figure: four panels, $\lambda = 0$, $\lambda = 0.0001$, $\lambda = 0.01$, $\lambda = 1$, each showing Data, Target, and Fit ($y$ vs. $x$); moving from left to right goes from overfitting to underfitting.]
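To see the trend numerically, the sketch below fits 10th-order Legendre features to 15 noisy samples of a hypothetical target for the four $\lambda$ values in the figure, and reports $E_{\text{in}}$ and $\mathbf{w}^{\mathsf T}\mathbf{w}$. The data and noise level are assumptions; only the direction of the trend (rising $E_{\text{in}}$, shrinking weights as $\lambda$ grows) is expected to carry over to the lecture's experiment.

```python
import numpy as np

# Hypothetical experiment: 15 noisy samples of sin(pi x), 10th-order Legendre model.
rng = np.random.default_rng(0)
N, Q = 15, 10
x = rng.uniform(-1.0, 1.0, N)
y = np.sin(np.pi * x) + 0.3 * rng.standard_normal(N)
Z = np.polynomial.legendre.legvander(x, Q)        # columns L_0(x), ..., L_Q(x)

lams = [0.0, 0.0001, 0.01, 1.0]
E_in_vals, norms = [], []
for lam in lams:
    # w_reg via least squares on the stacked system [Z; sqrt(lam) I] w ~ [y; 0]
    Z_aug = np.vstack([Z, np.sqrt(lam) * np.eye(Q + 1)])
    y_aug = np.concatenate([y, np.zeros(Q + 1)])
    w = np.linalg.lstsq(Z_aug, y_aug, rcond=None)[0]
    E_in_vals.append(float(np.mean((Z @ w - y) ** 2)))
    norms.append(float(w @ w))
    print(f"lambda = {lam:<7}  E_in = {E_in_vals[-1]:.5f}  w^T w = {norms[-1]:.2f}")
```

Small $E_{\text{in}}$ with large weights at $\lambda = 0$ is the overfitting end; at the largest $\lambda$ the weights are heavily shrunk, which is where underfitting sets in for the lecture's example.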

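Weight 'decay'
Minimizing $E_{\text{in}}(\mathbf{w}) + \frac{\lambda}{N}\mathbf{w}^{\mathsf T}\mathbf{w}$ is called weight decay. Why?
Gradient descent:
$$\mathbf{w}(t+1) = \mathbf{w}(t) - \eta\,\nabla E_{\text{in}}(\mathbf{w}(t)) - 2\eta\frac{\lambda}{N}\mathbf{w}(t) = \mathbf{w}(t)\left(1 - 2\eta\frac{\lambda}{N}\right) - \eta\,\nabla E_{\text{in}}(\mathbf{w}(t))$$
Each step first shrinks ("decays") the current weights by the factor $1 - 2\eta\lambda/N$, then takes the usual gradient step on $E_{\text{in}}$.
Applies in neural networks:
$$\mathbf{w}^{\mathsf T}\mathbf{w} = \sum_{l=1}^{L}\sum_{i=0}^{d^{(l-1)}}\sum_{j=1}^{d^{(l)}}\left(w_{ij}^{(l)}\right)^2$$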
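The decay form of the update can be checked against the closed-form solution. This sketch runs gradient descent with the update above on hypothetical data (20 evenly spaced points of $\sin(\pi x)$, 3rd-order Legendre features, $\lambda = 1$), using a fixed step size $\eta = 1/L$, where $L$ is the largest eigenvalue of the Hessian $\frac{2}{N}(\mathrm{Z}^{\mathsf T}\mathrm{Z} + \lambda\mathrm{I})$ of the augmented error. Since the objective is a convex quadratic, the iterates should converge to $(\mathrm{Z}^{\mathsf T}\mathrm{Z} + \lambda\mathrm{I})^{-1}\mathrm{Z}^{\mathsf T}\mathbf{y}$. The step-size choice and names are assumptions for illustration.

```python
import numpy as np

def grad_E_in(w, Z, y):
    # Gradient of E_in(w) = (1/N) ||Z w - y||^2
    return (2.0 / len(y)) * Z.T @ (Z @ w - y)

def weight_decay_gd(Z, y, lam, eta, steps):
    N = len(y)
    w = np.zeros(Z.shape[1])
    for _ in range(steps):
        # Shrink ("decay") the weights, then take the ordinary gradient step on E_in.
        w = w * (1.0 - 2.0 * eta * lam / N) - eta * grad_E_in(w, Z, y)
    return w

# Hypothetical data: 20 evenly spaced samples of sin(pi x), features L_0..L_3.
x = np.linspace(-1.0, 1.0, 20)
y = np.sin(np.pi * x)
Z = np.polynomial.legendre.legvander(x, 3)
lam, N, d = 1.0, len(y), Z.shape[1]

# Step size 1/L, with L the largest eigenvalue of the Hessian (2/N)(Z^T Z + lam I).
L = np.linalg.eigvalsh((2.0 / N) * (Z.T @ Z + lam * np.eye(d))).max()
w_gd = weight_decay_gd(Z, y, lam, eta=1.0 / L, steps=5000)
w_closed = np.linalg.solve(Z.T @ Z + lam * np.eye(d), Z.T @ y)
print("max |w_gd - w_closed| =", np.max(np.abs(w_gd - w_closed)))
```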

Variations of weight decay
Emphasis of certain weights: $\sum_{q=0}^{Q}\gamma_q w_q^2$
Examples:
• $\gamma_q = 2^q \;\Longrightarrow\;$ low-order fit (high-order weights are penalized more)
• $\gamma_q = 2^{-q} \;\Longrightarrow\;$ high-order fit (low-order weights are penalized more)
• Neural networks: different layers get different $\gamma$'s
Tikhonov regularizer: $\mathbf{w}^{\mathsf T}\Gamma^{\mathsf T}\Gamma\mathbf{w}$
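For the weighted penalty, setting the gradient of $\frac{1}{N}\left[(\mathrm{Z}\mathbf{w} - \mathbf{y})^{\mathsf T}(\mathrm{Z}\mathbf{w} - \mathbf{y}) + \lambda\,\mathbf{w}^{\mathsf T}\Gamma^{\mathsf T}\Gamma\mathbf{w}\right]$ to zero gives $(\mathrm{Z}^{\mathsf T}\mathrm{Z} + \lambda\Gamma^{\mathsf T}\Gamma)\mathbf{w} = \mathrm{Z}^{\mathsf T}\mathbf{y}$, and $\sum_q \gamma_q w_q^2$ is the special case $\Gamma = \mathrm{diag}(\sqrt{\gamma_q})$. The NumPy sketch below assumes this formulation with hypothetical data, $Q = 8$, and $\lambda = 0.1$, and compares $\gamma_q = 2^q$ with $\gamma_q = 2^{-q}$; the function names are illustrative.

```python
import numpy as np

def w_tikhonov(Z, y, lam, Gamma):
    """Minimizer of (1/N)[(Zw - y)^T (Zw - y) + lam * w^T Gamma^T Gamma w].

    Setting the gradient to zero gives (Z^T Z + lam * Gamma^T Gamma) w = Z^T y.
    """
    return np.linalg.solve(Z.T @ Z + lam * (Gamma.T @ Gamma), Z.T @ y)

def gamma_matrix(Q, base):
    """Gamma = diag(sqrt(gamma_q)) with gamma_q = base**q, so that
    w^T Gamma^T Gamma w = sum_q gamma_q w_q^2."""
    return np.diag(np.sqrt(np.power(float(base), np.arange(Q + 1))))

# Hypothetical data: 20 noisy samples of sin(pi x), features L_0..L_8.
rng = np.random.default_rng(3)
x = rng.uniform(-1.0, 1.0, 20)
y = np.sin(np.pi * x) + 0.2 * rng.standard_normal(20)
Q, lam = 8, 0.1
Z = np.polynomial.legendre.legvander(x, Q)

w_low = w_tikhonov(Z, y, lam, gamma_matrix(Q, 2.0))    # gamma_q = 2^q: favors low-order fit
w_high = w_tikhonov(Z, y, lam, gamma_matrix(Q, 0.5))   # gamma_q = 2^-q: favors high-order fit
print("gamma_q = 2^q :", w_low.round(3))
print("gamma_q = 2^-q:", w_high.round(3))
```

With $\Gamma = \mathrm{I}$ this reduces to plain weight decay; a non-diagonal $\Gamma$ (for example a finite-difference operator) gives other Tikhonov penalties under the same formula.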

Even weight growth!
We 'constrain' the weights to be large - bad!
[Figure: expected $E_{\text{out}}$ vs. regularization parameter $\lambda$, for weight decay and for weight growth.]
Practical rule:
stochastic noise is 'high-frequency'
deterministic noise is also non-smooth
$\Longrightarrow$ constrain learning towards smoother hypotheses

General form of augmented error
Calling the regularizer $\Omega = \Omega(h)$, we minimize
$$E_{\text{aug}}(h) = E_{\text{in}}(h) + \frac{\lambda}{N}\Omega(h)$$
Rings a bell?
$$E_{\text{out}}(h) \le E_{\text{in}}(h) + \Omega(\mathcal{H})$$
$E_{\text{aug}}$ is better than $E_{\text{in}}$ as a proxy for $E_{\text{out}}$

The perfect regularizer $\Omega$
Constraint in the 'direction' of the target function (going in circles)
Guiding principle: direction of smoother or "simpler"
Chose a bad $\Omega$? We still have $\lambda$!
Regularization is a necessary evil

Neural-network regularizers
Weight decay: from linear to logical
[Figure: tanh, which is nearly linear for small inputs and approaches a $\pm 1$ hard threshold for large ones.]
Weight elimination: fewer weights $\Longrightarrow$ smaller VC dimension
Soft weight elimination:
$$\Omega(\mathbf{w}) = \sum_{i,j,l}\frac{\left(w_{ij}^{(l)}\right)^2}{\beta^2 + \left(w_{ij}^{(l)}\right)^2}$$
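A sketch of the soft weight elimination penalty and its gradient, as they might be added to a backpropagation gradient. It assumes the weights are stored as a list of per-layer NumPy arrays $W^{(l)}$ (a hypothetical layout) and uses $\frac{d}{dw}\frac{w^2}{\beta^2 + w^2} = \frac{2w\beta^2}{(\beta^2 + w^2)^2}$. For $|w| \ll \beta$ each term behaves like $w^2/\beta^2$ (weight-decay-like); for $|w| \gg \beta$ it saturates near 1, so large weights are barely penalized further.

```python
import numpy as np

def soft_weight_elimination(weights, beta):
    """Omega(w) = sum over i, j, l of (w_ij^(l))^2 / (beta^2 + (w_ij^(l))^2).

    `weights` is a list of per-layer arrays W^(l) (hypothetical layout).
    """
    return sum(float(np.sum(W**2 / (beta**2 + W**2))) for W in weights)

def soft_weight_elimination_grad(weights, beta):
    """Per-layer gradient of Omega: d/dw [w^2 / (beta^2 + w^2)] = 2 w beta^2 / (beta^2 + w^2)^2."""
    return [2.0 * W * beta**2 / (beta**2 + W**2) ** 2 for W in weights]

# Hypothetical 2-layer network weights and beta.
rng = np.random.default_rng(4)
weights = [rng.standard_normal((3, 4)), rng.standard_normal((5, 1))]
beta = 0.5
print("Omega =", soft_weight_elimination(weights, beta))
grads = soft_weight_elimination_grad(weights, beta)
```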

Early stopping as a regularizer
Regularization through the optimizer!
When to stop? validation
[Figure: error vs. epochs (0 to 10000), showing $E_{\text{in}}$ and $E_{\text{out}}$; the early stopping point is marked.]
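A minimal sketch of early stopping with plain gradient descent (no penalty term), assuming a hypothetical split into 15 training points and 50 validation points, 10th-order Legendre features, and a fixed step size. It keeps the weights from the epoch with the lowest validation error; the data, epoch count, and names are assumptions.

```python
import numpy as np

def early_stopping_gd(Z_tr, y_tr, Z_val, y_val, eta, epochs):
    """Gradient descent on E_in alone; returns the weights from the epoch with
    the lowest validation error, that epoch number, and the validation history."""
    w = np.zeros(Z_tr.shape[1])
    best_w, best_err, best_epoch = w.copy(), np.inf, 0
    history = []
    for epoch in range(1, epochs + 1):
        grad = (2.0 / len(y_tr)) * Z_tr.T @ (Z_tr @ w - y_tr)   # gradient of E_in
        w = w - eta * grad
        val_err = float(np.mean((Z_val @ w - y_val) ** 2))
        history.append(val_err)
        if val_err < best_err:
            best_w, best_err, best_epoch = w.copy(), val_err, epoch
    return best_w, best_epoch, history

# Hypothetical data: 15 training and 50 validation points from a noisy sin(pi x).
rng = np.random.default_rng(6)
Q = 10
x_tr, x_val = rng.uniform(-1.0, 1.0, 15), rng.uniform(-1.0, 1.0, 50)
y_tr = np.sin(np.pi * x_tr) + 0.3 * rng.standard_normal(15)
y_val = np.sin(np.pi * x_val) + 0.3 * rng.standard_normal(50)
Z_tr = np.polynomial.legendre.legvander(x_tr, Q)
Z_val = np.polynomial.legendre.legvander(x_val, Q)

# Step size 1/L, with L the largest eigenvalue of the Hessian (2/N) Z^T Z of E_in.
eta = 1.0 / np.linalg.eigvalsh((2.0 / len(y_tr)) * Z_tr.T @ Z_tr).max()
w_best, best_epoch, history = early_stopping_gd(Z_tr, y_tr, Z_val, y_val, eta, epochs=5000)
print(f"stopped at epoch {best_epoch}, validation error {history[best_epoch - 1]:.4f}")
```

Returning the best epoch rather than the last one is what lets the optimizer itself act as the regularizer.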

The optimal $\lambda$
[Figure: two panels of expected $E_{\text{out}}$ vs. regularization parameter $\lambda$. Left, stochastic noise: curves for $\sigma^2 = 0$, $0.25$, $0.5$. Right, deterministic noise: curves for $Q_f = 15$, $30$, $100$.]
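In practice the optimal $\lambda$ is not known in advance. One common approach, sketched here as an assumption rather than as the lecture's procedure, is to hold out a validation set, compute $\mathbf{w}_{\text{reg}}$ for each $\lambda$ on a grid, and keep the $\lambda$ with the smallest validation error. The grid, data, and names are hypothetical.

```python
import numpy as np

def w_reg(Z, y, lam):
    # Minimizer of E_in(w) + (lam/N) w^T w: (Z^T Z + lam I)^{-1} Z^T y.
    return np.linalg.solve(Z.T @ Z + lam * np.eye(Z.shape[1]), Z.T @ y)

def choose_lambda(Z_tr, y_tr, Z_val, y_val, lams):
    """Return the lambda in `lams` with the smallest validation error, plus all errors."""
    errs = [float(np.mean((Z_val @ w_reg(Z_tr, y_tr, lam) - y_val) ** 2)) for lam in lams]
    return lams[int(np.argmin(errs))], errs

# Hypothetical data: 20 training and 50 validation points, 10th-order Legendre model.
rng = np.random.default_rng(7)
Q = 10
x_tr, x_val = rng.uniform(-1.0, 1.0, 20), rng.uniform(-1.0, 1.0, 50)
y_tr = np.sin(np.pi * x_tr) + 0.3 * rng.standard_normal(20)
y_val = np.sin(np.pi * x_val) + 0.3 * rng.standard_normal(50)
Z_tr = np.polynomial.legendre.legvander(x_tr, Q)
Z_val = np.polynomial.legendre.legvander(x_val, Q)

lams = [0.0, 0.01, 0.05, 0.1, 0.25, 0.5, 1.0, 2.0]
best_lam, errs = choose_lambda(Z_tr, y_tr, Z_val, y_val, lams)
print("best lambda:", best_lam)
```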
