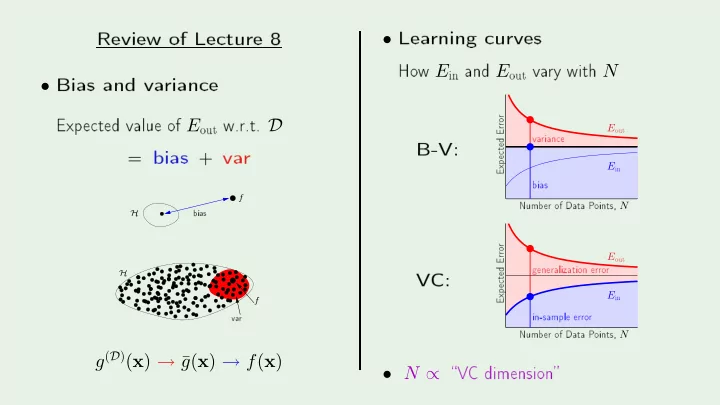

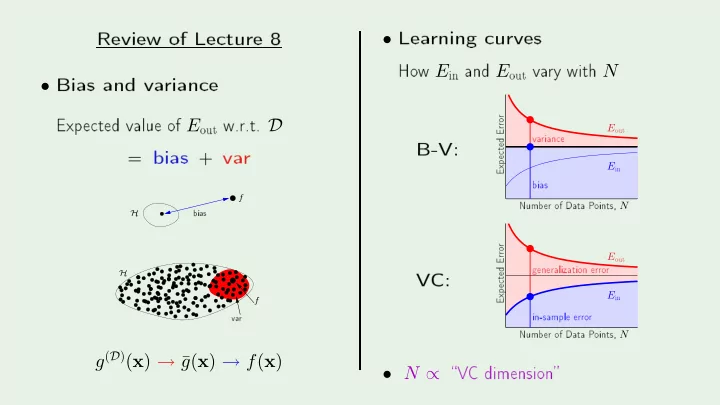

Review of Lecture 8

- Bias and variance: the expected value of $E_{\text{out}}$ with respect to $\mathcal{D}$ equals bias + var, with $g^{(\mathcal{D})}(\mathbf{x}) \rightarrow \bar{g}(\mathbf{x}) \rightarrow f(\mathbf{x})$.
- Learning curves: how $E_{\text{in}}$ and $E_{\text{out}}$ vary with $N$.

[Figure: learning curves, expected error vs. number of data points $N$. B-V panel: $E_{\text{out}}$ split into bias and variance. VC panel: $E_{\text{out}}$ split into in-sample error $E_{\text{in}}$ and generalization error.]

- $N \propto$ "VC dimension"

Learning From Data. Yaser S. Abu-Mostafa, California Institute of Technology. Lecture 9: The Linear Model II. Sponsored by Caltech's Provost Office, E&AS Division, and IST. Tuesday, May 1, 2012.

Where we are

- Linear classification ✓
- Linear regression ✓
- Logistic regression ?
- Nonlinear transforms ≈ ✓

Creator: Yaser Abu-Mostafa - LFD Lecture 9

Nonlinear transforms

$$\mathbf{x} = (x_0, x_1, \cdots, x_d) \xrightarrow{\;\Phi\;} \mathbf{z} = (z_0, z_1, \cdots, z_{\tilde{d}})$$

Each $z_i = \phi_i(\mathbf{x})$, so $\mathbf{z} = \Phi(\mathbf{x})$.

Example: $\mathbf{z} = (1, x_1, x_2, x_1 x_2, x_1^2, x_2^2)$

Final hypothesis $g(\mathbf{x})$ in $\mathcal{X}$ space: $\operatorname{sign}\big(\tilde{\mathbf{w}}^{\mathsf{T}}\Phi(\mathbf{x})\big)$ or $\tilde{\mathbf{w}}^{\mathsf{T}}\Phi(\mathbf{x})$
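A minimal sketch of the example transform and the resulting hypothesis in $\mathcal{X}$ space, assuming two raw inputs ($d = 2$) with $x_0 = 1$. The function names `phi` and `g` and the weight vector `w_tilde` are hypothetical, chosen only to show that a linear model in $\mathcal{Z}$ can give a nonlinear boundary in $\mathcal{X}$.

```python
def phi(x):
    """Example transform: x = (x0, x1, x2) with x0 = 1 -> z = (1, x1, x2, x1*x2, x1**2, x2**2)."""
    _, x1, x2 = x
    return (1.0, x1, x2, x1 * x2, x1 ** 2, x2 ** 2)


def dot(a, b):
    """Inner product of two equal-length sequences."""
    return sum(ai * bi for ai, bi in zip(a, b))


def g(x, w_tilde):
    """Final hypothesis in X space: sign(w_tilde^T Phi(x)), taking sign(0) = +1 in this sketch."""
    return 1 if dot(w_tilde, phi(x)) >= 0 else -1


# Hypothetical weights keeping only the x1*x2 term: the sign of x1*x2 decides the class.
w_tilde = (0.0, 0.0, 0.0, 1.0, 0.0, 0.0)

print(phi((1.0, 2.0, 3.0)))          # (1.0, 2.0, 3.0, 6.0, 4.0, 9.0)
print(g((1.0, 2.0, 3.0), w_tilde))   # 1
print(g((1.0, -2.0, 3.0), w_tilde))  # -1
```

The weights live in $\mathcal{Z}$ (6 components here), while the classifier is applied to points in $\mathcal{X}$.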

The price we pay

$$\mathbf{x} = (x_0, x_1, \cdots, x_d) \xrightarrow{\;\Phi\;} \mathbf{z} = (z_0, z_1, \cdots, z_{\tilde{d}})$$

- In $\mathcal{X}$: weights $\mathbf{w}$, with $d_{\text{VC}} = d + 1$
- In $\mathcal{Z}$: weights $\tilde{\mathbf{w}}$, with $d_{\text{VC}} \le \tilde{d} + 1$
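To see how fast the bound $\tilde{d} + 1$ can grow, here is a sketch assuming the full polynomial transform of degree $Q$ (all monomials up to total degree $Q$, constant included). That transform has $\binom{d+Q}{Q}$ components; this standard count is brought in for illustration and is not part of the slide. The helper name `num_z_components` is hypothetical.

```python
from math import comb


def num_z_components(d, Q):
    """Components of z (constant included) for the full degree-Q polynomial transform
    of d raw inputs: the number of monomials of total degree <= Q, C(d + Q, Q)."""
    return comb(d + Q, Q)


for d, Q in [(2, 1), (2, 2), (2, 3), (10, 3)]:
    n = num_z_components(d, Q)
    # n = d_tilde + 1, so the VC dimension of the linear model in Z is at most n.
    print(f"d={d}, Q={Q}: d_tilde + 1 = {n}")
```

With $d = 2, Q = 1$ this gives 3 $(= d + 1)$; with $d = 2, Q = 2$ it gives 6, the length of the example $\mathbf{z}$ on the previous slide; with $d = 10, Q = 3$ it already gives 286.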

Two non-separable cases

[Figure: two data sets in the plane, neither linearly separable.]

First case

[Figure: the first data set.]

- Use a linear model in $\mathcal{X}$; accept $E_{\text{in}} > 0$
- or insist on $E_{\text{in}} = 0$; go to high-dimensional $\mathcal{Z}$

Second case

[Figure: the second data set.]

- Why not: $\mathbf{z} = (1, x_1, x_2, x_1 x_2, x_1^2, x_2^2)$
- or better yet: $\mathbf{z} = (1, x_1^2, x_2^2)$
- or even: $\mathbf{z} = (1, x_1^2 + x_2^2)$
- $\mathbf{z} = (x_1^2 + x_2^2 - 0.6)$
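A small sketch of the "or even" transform $\mathbf{z} = (1, x_1^2 + x_2^2)$: a linear classifier in this two-dimensional $\mathcal{Z}$ is a circle in $\mathcal{X}$. The weights `w_tilde = (-0.6, 1.0)` are hypothetical and borrow the 0.6 from the last line of the slide; picking such a threshold by eye is exactly the kind of choice the lesson that follows cautions against.

```python
def phi_circle(x1, x2):
    """The 'or even' transform: z = (1, x1**2 + x2**2)."""
    return (1.0, x1 ** 2 + x2 ** 2)


def h(z, w_tilde):
    """Linear classifier in Z space: sign(w_tilde^T z), with sign(0) taken as +1."""
    s = w_tilde[0] * z[0] + w_tilde[1] * z[1]
    return 1 if s >= 0 else -1


# Hypothetical weights: s = x1**2 + x2**2 - 0.6, a circle of radius sqrt(0.6) in X space.
w_tilde = (-0.6, 1.0)
points = [(0.0, 0.0), (0.5, 0.5), (1.0, 0.0), (-0.9, 0.9)]
print([h(phi_circle(*p), w_tilde) for p in points])  # [-1, -1, 1, 1]
```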

Lesson learned

Looking at the data before choosing the model can be hazardous to your $E_{\text{out}}$.

Data snooping

Logistic regression - Outline

- The model
- Error measure
- Learning algorithm

A third linear model

$$s = \sum_{i=0}^{d} w_i x_i$$

- linear classification: $h(\mathbf{x}) = \operatorname{sign}(s)$
- linear regression: $h(\mathbf{x}) = s$
- logistic regression: $h(\mathbf{x}) = \theta(s)$

[Figure: for each model, the inputs $x_0, x_1, \ldots, x_d$ combine into the signal $s$, which produces $h(\mathbf{x})$.]
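The three models share the same signal $s$ and differ only in what they do with it. A sketch with hypothetical weights and a hypothetical input (all function names are illustrative):

```python
import math


def signal(w, x):
    """s = sum_{i=0}^{d} w_i x_i, where x[0] = 1 plays the role of x_0."""
    return sum(wi * xi for wi, xi in zip(w, x))


def theta(s):
    """Logistic function, written in a form that avoids overflow in math.exp."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def h_classification(w, x):
    return 1 if signal(w, x) >= 0 else -1  # sign(s), taking sign(0) = +1 here


def h_regression(w, x):
    return signal(w, x)  # s itself


def h_logistic(w, x):
    return theta(signal(w, x))  # theta(s), a value between 0 and 1


w = (0.5, -1.0, 2.0)  # hypothetical weights
x = (1.0, 1.0, 1.0)   # x_0 = 1, then two inputs
print(h_classification(w, x), h_regression(w, x), h_logistic(w, x))  # 1 1.5 0.8175...
```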

The logistic function $\theta$

The formula:

$$\theta(s) = \frac{e^{s}}{1 + e^{s}}$$

[Figure: $\theta(s)$ for $s$ from $-4$ to $4$, rising from 0 through 0.5 toward 1.]

- soft threshold: uncertainty
- sigmoid: flattened out 's'
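A sketch of $\theta$ in Python. Evaluating $e^s/(1+e^s)$ literally fails for large $s$ (`math.exp` raises `OverflowError` a little above $s = 709$), so this version, an implementation choice not taken from the slide, uses the equivalent form $1/(1+e^{-s})$ when $s \ge 0$.

```python
import math


def theta(s):
    """theta(s) = e^s / (1 + e^s), evaluated so that math.exp never overflows."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))  # same value: divide top and bottom by e^s
    e = math.exp(s)  # s < 0 here, so e^s < 1
    return e / (1.0 + e)


for s in (-4, -2, 0, 2, 4):
    print(s, round(theta(s), 4))

print(theta(0))                   # 0.5: the midpoint of the soft threshold
print(theta(1000), theta(-1000))  # 1.0 0.0: saturates instead of raising OverflowError
```

The symmetry $\theta(-s) = 1 - \theta(s)$, used later for the likelihood, can be checked numerically with this function.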

Probability interpretation

$h(\mathbf{x}) = \theta(s)$ is interpreted as a probability.

Example. Prediction of heart attacks

- Input $\mathbf{x}$: cholesterol level, age, weight, etc.
- $\theta(s)$: probability of a heart attack
- The signal $s = \mathbf{w}^{\mathsf{T}}\mathbf{x}$: "risk score"

Genuine probability

Data $(\mathbf{x}, y)$ with binary $y$, generated by a noisy target:

$$P(y \mid \mathbf{x}) = \begin{cases} f(\mathbf{x}) & \text{for } y = +1; \\ 1 - f(\mathbf{x}) & \text{for } y = -1. \end{cases}$$

The target $f : \mathbb{R}^d \to [0, 1]$ is the probability.

Learn $g(\mathbf{x}) = \theta(\mathbf{w}^{\mathsf{T}}\mathbf{x}) \approx f(\mathbf{x})$
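A sketch of how such data arise: we never see $f(\mathbf{x})$ itself, only labels drawn from it. The target `f` below is a hypothetical choice, $f(\mathbf{x}) = \theta(2x_1 - 1)$, and the seed and sample size are arbitrary.

```python
import math
import random


def theta(s):
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def f(x):
    """Hypothetical target: P(y = +1 | x) = theta(2*x1 - 1) for x = (1, x1)."""
    return theta(2.0 * x[1] - 1.0)


def sample_y(x, rng):
    """Draw y = +1 with probability f(x) and y = -1 with probability 1 - f(x)."""
    return 1 if rng.random() < f(x) else -1


rng = random.Random(0)  # fixed seed so the run is repeatable
x = (1.0, 1.0)          # f(x) = theta(1), about 0.731
ys = [sample_y(x, rng) for _ in range(20000)]
print(f(x), ys.count(1) / len(ys))  # the empirical fraction of +1 should be close to f(x)
```

Each label is binary; the probability $f(\mathbf{x})$ shows up only in the frequencies, which is why logistic regression learns it indirectly.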

Error measure

For each $(\mathbf{x}, y)$, $y$ is generated by probability $f(\mathbf{x})$.

Plausible error measure based on likelihood: if $h = f$, how likely are we to get $y$ from $\mathbf{x}$?

$$P(y \mid \mathbf{x}) = \begin{cases} h(\mathbf{x}) & \text{for } y = +1; \\ 1 - h(\mathbf{x}) & \text{for } y = -1. \end{cases}$$

Formula for likelihood

$$P(y \mid \mathbf{x}) = \begin{cases} h(\mathbf{x}) & \text{for } y = +1; \\ 1 - h(\mathbf{x}) & \text{for } y = -1. \end{cases}$$

Substitute $h(\mathbf{x}) = \theta(\mathbf{w}^{\mathsf{T}}\mathbf{x})$, noting $\theta(-s) = 1 - \theta(s)$:

$$P(y \mid \mathbf{x}) = \theta(y\,\mathbf{w}^{\mathsf{T}}\mathbf{x})$$

Likelihood of $\mathcal{D} = (\mathbf{x}_1, y_1), \ldots, (\mathbf{x}_N, y_N)$ is

$$\prod_{n=1}^{N} P(y_n \mid \mathbf{x}_n) = \prod_{n=1}^{N} \theta(y_n\,\mathbf{w}^{\mathsf{T}}\mathbf{x}_n)$$

[Figure: the logistic function $\theta(s)$.]
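A direct transcription of the likelihood, with hypothetical weights and three hypothetical points. It also shows why the single formula $\theta(y\,\mathbf{w}^{\mathsf{T}}\mathbf{x})$ covers both cases: with $y = -1$ it returns $\theta(-s) = 1 - \theta(s)$.

```python
import math


def theta(s):
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def point_likelihood(w, x, y):
    """P(y | x) = theta(y * w^T x) for y in {+1, -1}."""
    return theta(y * dot(w, x))


def likelihood(w, data):
    """Likelihood of D = [(x_1, y_1), ..., (x_N, y_N)]: the product of theta(y_n w^T x_n)."""
    p = 1.0
    for x, y in data:
        p *= point_likelihood(w, x, y)
    return p


w = (0.0, 1.0)  # hypothetical weights
data = [((1.0, 2.0), 1), ((1.0, -1.0), -1), ((1.0, 0.5), -1)]  # toy points, x_0 = 1
print(likelihood(w, data))
```

For large $N$ the product of many numbers below 1 can underflow to `0.0` in floating point, one practical reason to work with its logarithm.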

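Maximizing the likelihood

Since $\ln$ is increasing and the factor $-\frac{1}{N}$ turns a maximum into a minimum, maximizing the likelihood is the same as minimizing

$$-\frac{1}{N}\ln\left(\prod_{n=1}^{N}\theta(y_n\,\mathbf{w}^{\mathsf{T}}\mathbf{x}_n)\right) = \frac{1}{N}\sum_{n=1}^{N}\ln\left(\frac{1}{\theta(y_n\,\mathbf{w}^{\mathsf{T}}\mathbf{x}_n)}\right), \qquad \theta(s) = \frac{1}{1 + e^{-s}}$$

which gives

$$E_{\text{in}}(\mathbf{w}) = \frac{1}{N}\sum_{n=1}^{N}\underbrace{\ln\left(1 + e^{-y_n\mathbf{w}^{\mathsf{T}}\mathbf{x}_n}\right)}_{e(h(\mathbf{x}_n),\,y_n)}$$

the "cross-entropy" error.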
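A sketch that computes $E_{\text{in}}$ in the cross-entropy form and checks it against $-\frac{1}{N}\ln(\text{likelihood})$ on hypothetical toy data. The helper `softplus` (a name assumed here) evaluates $\ln(1 + e^m)$ in a rearranged form so that `math.exp` never overflows.

```python
import math


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def softplus(m):
    """ln(1 + e^m), rearranged as m + ln(1 + e^-m) for m > 0 so exp never overflows."""
    if m > 0:
        return m + math.log1p(math.exp(-m))
    return math.log1p(math.exp(m))


def cross_entropy_error(w, data):
    """E_in(w) = (1/N) sum_n ln(1 + exp(-y_n w^T x_n))."""
    return sum(softplus(-y * dot(w, x)) for x, y in data) / len(data)


def neg_log_likelihood(w, data):
    """-(1/N) ln prod_n theta(y_n w^T x_n), computed directly for comparison."""
    prod = 1.0
    for x, y in data:
        prod *= 1.0 / (1.0 + math.exp(-y * dot(w, x)))
    return -math.log(prod) / len(data)


w = (0.0, 1.0)  # hypothetical weights
data = [((1.0, 2.0), 1), ((1.0, -1.0), -1), ((1.0, 0.5), -1)]
print(cross_entropy_error(w, data), neg_log_likelihood(w, data))  # equal up to rounding
print(cross_entropy_error((0.0, 0.0), data), math.log(2))        # at w = 0, E_in = ln 2
```

At $\mathbf{w} = \mathbf{0}$ every point has $\theta(0) = 0.5$, so $E_{\text{in}} = \ln 2$ regardless of the data.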


How to minimize $E_{\text{in}}$

For logistic regression,

$$E_{\text{in}}(\mathbf{w}) = \frac{1}{N}\sum_{n=1}^{N}\ln\left(1 + e^{-y_n\mathbf{w}^{\mathsf{T}}\mathbf{x}_n}\right) \qquad \longleftarrow \text{iterative solution}$$

Compare to linear regression:

$$E_{\text{in}}(\mathbf{w}) = \frac{1}{N}\sum_{n=1}^{N}\left(\mathbf{w}^{\mathsf{T}}\mathbf{x}_n - y_n\right)^2 \qquad \longleftarrow \text{closed-form solution}$$
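For contrast, a sketch of the closed-form side in the simplest setting we could assume: one input plus a bias, $h(x) = w_0 + w_1 x$. Setting the gradient of the squared error to zero gives the $2 \times 2$ normal equations, solved here by Cramer's rule (the general case uses the pseudo-inverse). Setting the cross-entropy gradient to zero gives no such formula, which is why it is minimized iteratively. The function name `linear_regression_1d` is hypothetical.

```python
def linear_regression_1d(xs, ys):
    """Closed-form least squares for h(x) = w0 + w1*x.

    Normal equations: [[N, sum x], [sum x, sum x^2]] [w0, w1] = [sum y, sum x*y],
    solved with Cramer's rule.
    """
    N = len(xs)
    sx = sum(xs)
    sxx = sum(x * x for x in xs)
    sy = sum(ys)
    sxy = sum(x * y for x, y in zip(xs, ys))
    det = N * sxx - sx * sx  # nonzero as long as the xs are not all equal
    w0 = (sxx * sy - sx * sxy) / det
    w1 = (N * sxy - sx * sy) / det
    return w0, w1


print(linear_regression_1d([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0]))  # (1.0, 2.0)
```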

Iterative method: gradient descent

General method for nonlinear optimization

Start at $\mathbf{w}(0)$; take a step along the steepest slope.

Fixed step size: $\mathbf{w}(1) = \mathbf{w}(0) + \eta\,\hat{\mathbf{v}}$

What is the direction $\hat{\mathbf{v}}$?

[Figure: in-sample error $E_{\text{in}}$ as a function of the weights $\mathbf{w}$.]

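Formula for the direction $\hat{\mathbf{v}}$

$$\Delta E_{\text{in}} = E_{\text{in}}(\mathbf{w}(0) + \eta\,\hat{\mathbf{v}}) - E_{\text{in}}(\mathbf{w}(0)) = \eta\,\nabla E_{\text{in}}(\mathbf{w}(0))^{\mathsf{T}}\hat{\mathbf{v}} + O(\eta^2)$$

Since $\hat{\mathbf{v}}$ is a unit vector, the Cauchy-Schwarz inequality gives $\eta\,\nabla E_{\text{in}}(\mathbf{w}(0))^{\mathsf{T}}\hat{\mathbf{v}} \ge -\eta\,\|\nabla E_{\text{in}}(\mathbf{w}(0))\|$, so to first order in $\eta$ the largest decrease comes from

$$\hat{\mathbf{v}} = -\frac{\nabla E_{\text{in}}(\mathbf{w}(0))}{\|\nabla E_{\text{in}}(\mathbf{w}(0))\|}$$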
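A numerical check of the argument, on a hypothetical two-dimensional error surface $E(\mathbf{w}) = (w_1 - 1)^2 + 3(w_2 + 2)^2$ chosen only for illustration. It compares $\nabla E^{\mathsf{T}}\hat{\mathbf{v}}$ with the first-order term over 360 unit directions, then takes one small step.

```python
import math


def E(w):
    """Hypothetical error surface, for illustration only: (w1 - 1)^2 + 3 (w2 + 2)^2."""
    return (w[0] - 1.0) ** 2 + 3.0 * (w[1] + 2.0) ** 2


def grad_E(w):
    return (2.0 * (w[0] - 1.0), 6.0 * (w[1] + 2.0))


def steepest_descent_direction(g):
    """v_hat = -g / ||g||, the unit vector opposite the gradient."""
    norm = math.sqrt(sum(gi * gi for gi in g))
    return tuple(-gi / norm for gi in g)


w0 = (0.0, 0.0)
g = grad_E(w0)
v_hat = steepest_descent_direction(g)
norm_g = math.sqrt(sum(gi * gi for gi in g))

# First-order term grad^T u over 360 unit directions u: none goes below -||grad||.
directions = [(math.cos(2 * math.pi * k / 360), math.sin(2 * math.pi * k / 360)) for k in range(360)]
worst = min(g[0] * u[0] + g[1] * u[1] for u in directions)
print(g[0] * v_hat[0] + g[1] * v_hat[1], -norm_g, worst)

# A small step along v_hat lowers E by about eta * ||grad|| (up to an O(eta^2) term).
eta = 1e-3
w1 = tuple(wi + eta * vi for wi, vi in zip(w0, v_hat))
print(E(w1) - E(w0), -eta * norm_g)
```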

Fixed-size step?

How $\eta$ affects the algorithm:

[Figure: three panels of in-sample error $E_{\text{in}}$ versus weights $\mathbf{w}$: $\eta$ too small; $\eta$ too large; variable $\eta$, just right.]

$\eta$ should increase with the slope.
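A one-dimensional sketch of the three panels, assuming the hypothetical error $E(w) = w^2$ with its minimum at $w = 0$. The first function always moves a fixed distance $\eta$ against the slope; the second makes the step proportional to the slope, which plays the role of "variable $\eta$". Both function names are hypothetical.

```python
def grad(w):
    return 2.0 * w  # E(w) = w**2, minimum at w = 0 (hypothetical 1-D surface)


def fixed_size_steps(w, eta, steps):
    """Move a fixed distance eta against the slope: w <- w - eta * sign(grad)."""
    path = [w]
    for _ in range(steps):
        g = grad(w)
        if g == 0:
            break
        w = w - eta * (1 if g > 0 else -1)
        path.append(w)
    return path


def slope_scaled_steps(w, eta, steps):
    """Step length grows with the slope: w <- w - eta * grad(w)."""
    path = [w]
    for _ in range(steps):
        w = w - eta * grad(w)
        path.append(w)
    return path


print(fixed_size_steps(1.0, 0.01, 10)[-1])  # about 0.9: still far from 0 (too small)
print(fixed_size_steps(1.0, 0.8, 6))        # jumps back and forth around 0 (too large)
print(slope_scaled_steps(1.0, 0.25, 6))     # [1.0, 0.5, 0.25, 0.125, 0.0625, 0.03125, 0.015625]
```

With the slope-scaled rule the steps shrink automatically as the slope flattens near the minimum.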

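Easy implementation

Instead of

$$\Delta\mathbf{w} = \eta\,\hat{\mathbf{v}} = -\eta\,\frac{\nabla E_{\text{in}}(\mathbf{w}(0))}{\|\nabla E_{\text{in}}(\mathbf{w}(0))\|}$$

have

$$\Delta\mathbf{w} = -\eta\,\nabla E_{\text{in}}(\mathbf{w}(0))$$

Fixed learning rate $\eta$. The step length is now $\eta\,\|\nabla E_{\text{in}}(\mathbf{w}(0))\|$, so it grows with the slope, as the previous slide recommends.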
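A sketch comparing the two update rules on two hypothetical gradients, one steep and one shallow. The normalized rule always moves a distance $\eta$; the fixed-learning-rate rule moves $\eta\,\|\nabla E_{\text{in}}\|$. Function names are illustrative.

```python
import math


def normalized_step(g, eta):
    """Delta w = eta * v_hat = -eta * g / ||g||: the step length is always eta."""
    norm = math.sqrt(sum(gi * gi for gi in g))
    return tuple(-eta * gi / norm for gi in g)


def learning_rate_step(g, eta):
    """Delta w = -eta * g: the step length is eta * ||g||, larger where the slope is steeper."""
    return tuple(-eta * gi for gi in g)


steep, shallow = (3.0, 4.0), (0.3, 0.4)  # hypothetical gradients with norms 5 and 0.5
for g in (steep, shallow):
    print(normalized_step(g, 0.1), learning_rate_step(g, 0.1))
```

Both rules point in the same direction; only the length differs (0.1 in both cases for the first rule, 0.5 and 0.05 for the second).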

Logistic regression algorithm

1. Initialize the weights at $t = 0$ to $\mathbf{w}(0)$
2. for $t = 0, 1, 2, \ldots$ do
3. Compute the gradient $\nabla E_{\text{in}} = -\frac{1}{N}\sum_{n=1}^{N}\frac{y_n\mathbf{x}_n}{1 + e^{y_n\mathbf{w}^{\mathsf{T}}(t)\mathbf{x}_n}}$
4. Update the weights: $\mathbf{w}(t+1) = \mathbf{w}(t) - \eta\,\nabla E_{\text{in}}$
5. Iterate to the next step until it is time to stop
6. Return the final weights $\mathbf{w}$
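A plain-Python sketch of the six steps. Several details are assumptions of this sketch and not from the slide: $\mathbf{w}(0) = \mathbf{0}$, $\eta = 0.1$, and "time to stop" taken to mean $\|\nabla E_{\text{in}}\| <$ `tol` or `max_iters` iterations. The toy data set is hypothetical.

```python
import math


def theta(s):
    """Logistic function theta(s) = 1 / (1 + e^{-s}), evaluated without overflow."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def softplus(m):
    """ln(1 + e^m), rearranged for m > 0 so that math.exp never overflows."""
    if m > 0:
        return m + math.log1p(math.exp(-m))
    return math.log1p(math.exp(m))


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def cross_entropy_error(w, data):
    """E_in(w) = (1/N) sum_n ln(1 + exp(-y_n w^T x_n))."""
    return sum(softplus(-y * dot(w, x)) for x, y in data) / len(data)


def gradient(w, data):
    """grad E_in(w) = -(1/N) sum_n y_n x_n / (1 + exp(y_n w^T x_n))."""
    N = len(data)
    g = [0.0] * len(w)
    for x, y in data:
        coef = theta(-y * dot(w, x))  # equals 1 / (1 + exp(y_n w^T x_n))
        for i, xi in enumerate(x):
            g[i] -= y * xi * coef / N
    return g


def logistic_regression(data, eta=0.1, max_iters=10_000, tol=1e-6):
    """Gradient descent with a fixed learning rate eta.

    Assumed for this sketch: w(0) = 0, and "time to stop" means
    ||grad E_in|| < tol or max_iters iterations, whichever comes first.
    """
    w = [0.0] * len(data[0][0])                      # step 1: w(0)
    for _ in range(max_iters):                       # step 2: t = 0, 1, 2, ...
        g = gradient(w, data)                        # step 3
        if math.sqrt(dot(g, g)) < tol:               # step 5: stopping rule
            break
        w = [wi - eta * gi for wi, gi in zip(w, g)]  # step 4: w(t+1) = w(t) - eta * grad
    return w                                         # step 6


# Hypothetical toy data (x_0 = 1, one input). The +1 at x = -0.5 and the -1 at x = 0.5
# make it non-separable, so the cross-entropy has a finite minimizer here.
data = [((1.0, -2.0), -1), ((1.0, -1.0), -1), ((1.0, -0.5), 1),
        ((1.0, 0.5), -1), ((1.0, 1.0), 1), ((1.0, 2.0), 1)]
w = logistic_regression(data)
print(w, cross_entropy_error(w, data), cross_entropy_error([0.0, 0.0], data))
```

On linearly separable data the cross-entropy has no finite minimizer, so with this sketch the weights keep growing until the stopping rule fires.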

Summary of Linear Models

| Credit Analysis | Model | Error measure | Algorithm |
|---|---|---|---|
| Approve or Deny | Perceptron | Classification Error | PLA, Pocket, ... |
| Amount of Credit | Linear Regression | Squared Error | Pseudo-inverse |
| Probability of Default | Logistic Regression | Cross-entropy Error | Gradient descent |
