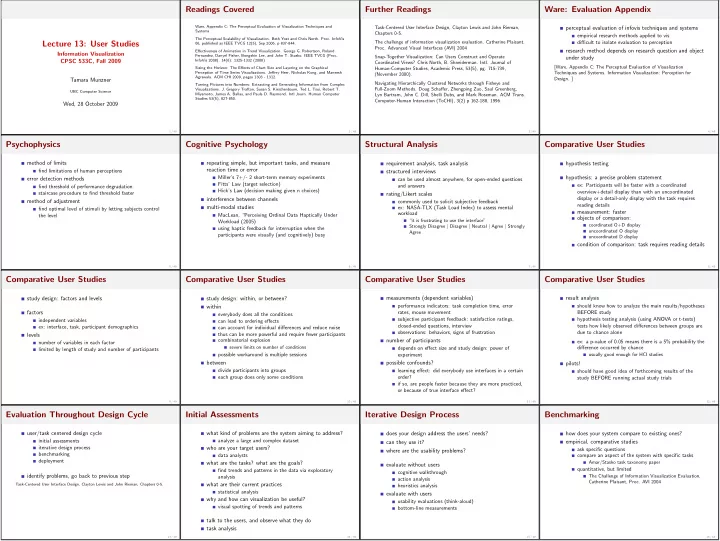

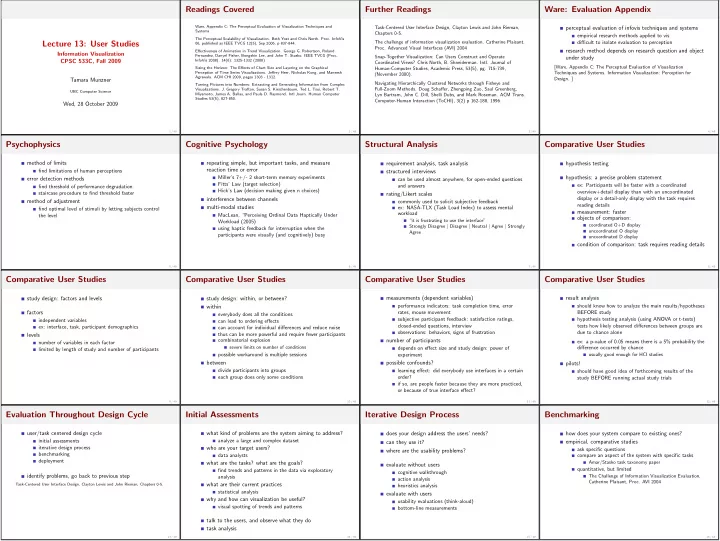

Lecture 13: User Studies
Information Visualization, CPSC 533C, Fall 2009
Tamara Munzner, UBC Computer Science
Wed, 28 October 2009

Readings Covered

- Ware, Appendix C: The Perceptual Evaluation of Visualization Techniques and Systems.
- The Challenge of Information Visualization Evaluation. Catherine Plaisant. Proc. Advanced Visual Interfaces (AVI) 2004.
- Snap-Together Visualization: Can Users Construct and Operate Coordinated Views? Chris North, B. Shneiderman. Intl. Journal of Human-Computer Studies, Academic Press, 53(5), pg. 715-739 (November 2000).
- Navigating Hierarchically Clustered Networks through Fisheye and Full-Zoom Methods. Doug Schaffer, Zhengping Zuo, Saul Greenberg, Lyn Bartram, John C. Dill, Shelli Dubs, and Mark Roseman. ACM Trans. Computer-Human Interaction (ToCHI), 3(2) p 162-188, 1996.

Further Readings

- Task-Centered User Interface Design, Clayton Lewis and John Rieman, Chapters 0-5.
- The Perceptual Scalability of Visualization. Beth Yost and Chris North. Proc. InfoVis 06, published as IEEE TVCG 12(5), Sep 2006, p 837-844.
- Effectiveness of Animation in Trend Visualization. George G. Robertson, Roland Fernandez, Danyel Fisher, Bongshin Lee, and John T. Stasko. IEEE TVCG (Proc. InfoVis 2008). 14(6): 1325-1332 (2008).
- Sizing the Horizon: The Effects of Chart Size and Layering on the Graphical Perception of Time Series Visualizations. Jeffrey Heer, Nicholas Kong, and Maneesh Agrawala. ACM CHI 2009, pages 1303-1312.
- Turning Pictures into Numbers: Extracting and Generating Information from Complex Visualizations. J. Gregory Trafton, Susan S. Kirschenbaum, Ted L. Tsui, Robert T. Miyamoto, James A. Ballas, and Paula D. Raymond. Intl Journ. Human Computer Studies 53(5), 827-850.

Ware: Evaluation Appendix

- perceptual evaluation of infovis techniques and systems
  - empirical research methods applied to vis
  - difficult to isolate evaluation to perception
  - research method depends on research question and object under study

[Ware, Appendix C: The Perceptual Evaluation of Visualization Techniques and Systems. Information Visualization: Perception for Design.]

Psychophysics

- method of limits
  - find limitations of human perception
- error detection methods
  - find threshold of performance degradation
  - staircase procedure to find threshold faster
- method of adjustment
  - find optimal level of stimuli by letting subjects control the level

Cognitive Psychology

- repeating simple, but important tasks, and measuring reaction time or error
  - Miller's 7 ± 2 short-term memory experiments
  - Fitts' Law (target selection)
  - Hick's Law (decision making given n choices)
- interference between channels
- multi-modal studies
  - MacLean, "Perceiving Ordinal Data Haptically Under Workload" (2005)
  - using haptic feedback for interruption when the participants were visually (and cognitively) busy

Structural Analysis

- requirement analysis, task analysis
- structured interviews
  - can be used almost anywhere, for open-ended questions and answers
- rating/Likert scales
  - commonly used to solicit subjective feedback
  - ex: NASA-TLX (Task Load Index) to assess mental workload
  - ex: "it is frustrating to use the interface": Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree

Comparative User Studies

- hypothesis testing
  - hypothesis: a precise problem statement
  - ex: Participants will be faster with a coordinated overview+detail display than with an uncoordinated display or a detail-only display when the task requires reading details
- measurement: faster
- objects of comparison:
  - coordinated O+D display
  - uncoordinated O display
  - uncoordinated D display
- condition of comparison: task requires reading details

Comparative User Studies: measurements (dependent variables)

- performance indicators: task completion time, error rates, mouse movement
- subjective participant feedback: satisfaction ratings, closed-ended questions, interview
- observations: behaviors, signs of frustration
- number of participants
  - depends on effect size and study design: power of experiment
- possible confounds?
  - learning effect: did everybody use interfaces in a certain order?
  - if so, are people faster because they are more practiced, or because of a true interface effect?

Comparative User Studies: result analysis

- should know how to analyze the main results/hypotheses BEFORE the study
- hypothesis testing analysis (using ANOVA or t-tests) tests how likely observed differences between groups are due to chance alone
  - ex: a p-value of 0.05 means that, if there were no true difference, a difference at least this large would occur by chance 5% of the time
  - a threshold of 0.05 is usually good enough for HCI studies
- pilots!
  - should have a good idea of forthcoming results of the study BEFORE running actual study trials

Comparative User Studies: study design, factors and levels

- factors
  - independent variables
  - ex: interface, task, participant demographics
- levels
  - number of values each factor takes
  - limited by length of study and number of participants

Comparative User Studies: study design, within or between?

- within
  - everybody does all the conditions
  - can lead to ordering effects
  - can account for individual differences and reduce noise
  - thus can be more powerful and require fewer participants
  - combinatorial explosion
    - severe limits on number of conditions
    - possible workaround is multiple sessions
- between
  - divide participants into groups
  - each group does only some conditions

Evaluation Throughout Design Cycle

- user/task centered design cycle
  - initial assessments
  - iterative design process
  - benchmarking
  - deployment
- identify problems, go back to previous step

Task-Centered User Interface Design, Clayton Lewis and John Rieman, Chapters 0-5.

Initial Assessments

- what kind of problems is the system aiming to address?
  - analyze a large and complex dataset
- who are your target users?
  - data analysts
- what are the tasks? what are the goals?
  - find trends and patterns in the data via exploratory analysis
- what are their current practices?
  - statistical analysis
- why and how can visualization be useful?
  - visual spotting of trends and patterns
- talk to the users, and observe what they do
- task analysis

Iterative Design Process

- does your design address the users' needs?
- can they use it?
- where are the usability problems?
- evaluate without users
  - cognitive walkthrough
  - action analysis
  - heuristic analysis
- evaluate with users
  - usability evaluations (think-aloud)
  - bottom-line measurements

Benchmarking

- how does your system compare to existing ones?
  - empirical, comparative studies
- ask specific questions
- compare an aspect of the system with specific tasks
  - Amar/Stasko task taxonomy paper
- quantitative, but limited
  - The Challenge of Information Visualization Evaluation, Catherine Plaisant, Proc. AVI 2004
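The result analysis slide describes hypothesis tests as asking how likely an observed difference between groups is under chance alone. The slides name ANOVA and t-tests; the sketch below swaps in an exact permutation test instead, only because it makes that "chance alone" idea directly visible with the standard library. The function name and the completion times are hypothetical, not data from any study.

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(group_a, group_b):
    """Two-sided exact permutation test on the difference of group means.

    Returns the fraction of all ways to split the pooled measurements into
    groups of the original sizes whose mean difference is at least as large
    as the one actually observed.
    """
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    observed = abs(mean(group_a) - mean(group_b))
    indices = range(len(pooled))

    extreme = 0
    total = 0
    for chosen in combinations(indices, n_a):
        chosen_set = set(chosen)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in indices if i not in chosen_set]
        # Small tolerance so floating-point rounding does not hide ties.
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            extreme += 1
        total += 1
    return extreme / total


# Hypothetical task completion times in seconds (between-subjects design).
coordinated = [41.2, 38.5, 44.0, 39.9, 42.3]
detail_only = [52.1, 47.8, 55.4, 49.0, 50.6]
p = permutation_p_value(coordinated, detail_only)
print(f"p = {p:.4f}")
```

Enumerating every split is only feasible for small groups; for larger studies a random sample of splits, or the t-test and ANOVA named on the slide, would be the usual choice.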
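The factors-and-levels slide notes that the number of levels is limited by study length and participant count. A minimal sketch of why: the number of conditions is the product of the levels of every factor. The factor names, level values, trial counts, and timings below are illustrative assumptions, not figures from the lecture.

```python
from itertools import product


def enumerate_conditions(factors):
    """Return every combination of levels, one dict per condition."""
    names = list(factors)
    level_lists = [factors[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*level_lists)]


def session_minutes(factors, trials_per_condition, minutes_per_trial):
    """Rough session length if one participant does every condition."""
    n_conditions = len(enumerate_conditions(factors))
    return n_conditions * trials_per_condition * minutes_per_trial


# Hypothetical study: 3 interfaces x 2 tasks x 2 dataset sizes.
factors = {
    "interface": ["coordinated O+D", "uncoordinated O", "uncoordinated D"],
    "task": ["read details", "find trend"],
    "dataset_size": ["small", "large"],
}
conditions = enumerate_conditions(factors)
print(len(conditions), "conditions")
print(session_minutes(factors, trials_per_condition=5, minutes_per_trial=1.5), "minutes")
```

Adding one more two-level factor doubles both numbers, which is the combinatorial explosion the within-subjects slide warns about.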
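The within-subjects slide lists ordering effects as a risk, and the confounds slide asks whether everybody used the interfaces in the same order. One common mitigation, not named in the slides, is counterbalancing presentation order. The sketch below uses a simple cyclic Latin square, which puts each condition in each position equally often but does not balance which condition precedes which. Function names are hypothetical.

```python
def latin_square_orders(conditions):
    """Cyclic Latin square: row r is the condition list rotated by r."""
    n = len(conditions)
    return [[conditions[(row + col) % n] for col in range(n)] for row in range(n)]


def order_for_participant(conditions, participant_index):
    """Assign orders round-robin so each row is used equally often."""
    orders = latin_square_orders(conditions)
    return orders[participant_index % len(orders)]


# The three displays compared in the example hypothesis.
displays = ["coordinated O+D", "uncoordinated O", "uncoordinated D"]
for participant in range(6):
    print(participant, order_for_participant(displays, participant))
```

Recruiting participants in multiples of the number of conditions keeps every order equally represented.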
