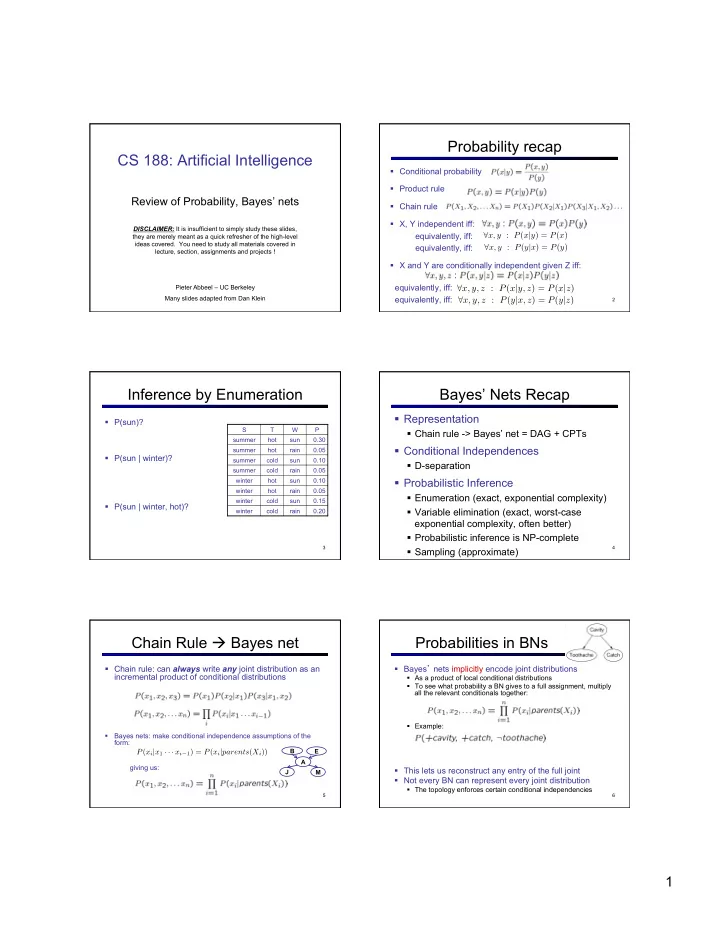

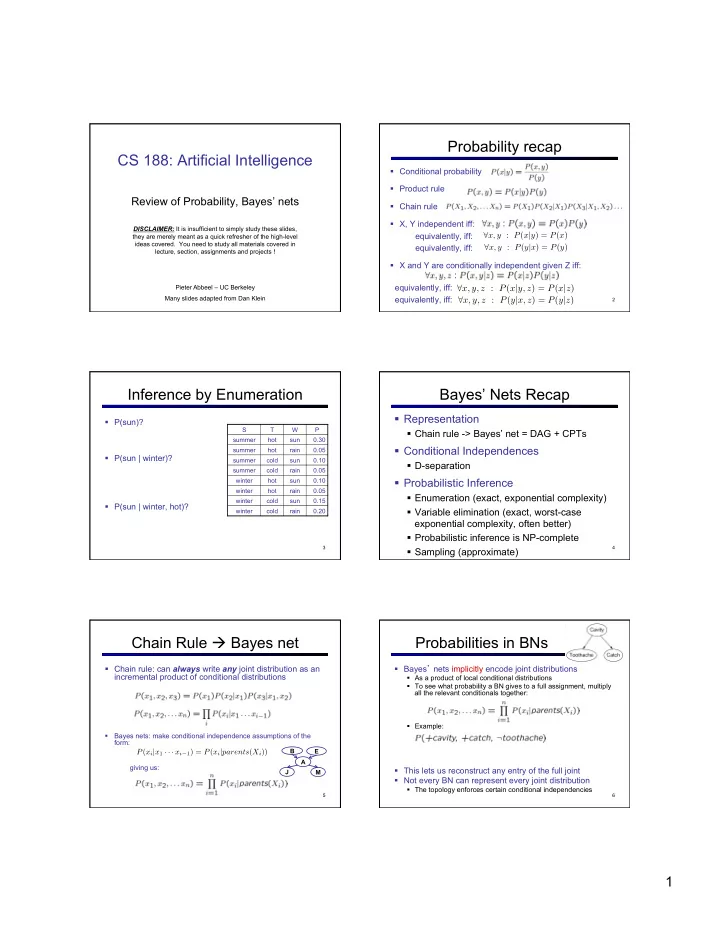

CS 188: Artificial Intelligence
Review of Probability, Bayes' Nets

DISCLAIMER: It is insufficient to simply study these slides; they are merely meant as a quick refresher of the high-level ideas covered. You need to study all materials covered in lecture, section, assignments and projects!

Pieter Abbeel – UC Berkeley
Many slides adapted from Dan Klein

Probability recap

§ Conditional probability: $P(x \mid y) = \frac{P(x, y)}{P(y)}$
§ Product rule: $P(x, y) = P(x \mid y)\, P(y)$
§ Chain rule: $P(x_1, x_2, \ldots, x_n) = \prod_{i=1}^{n} P(x_i \mid x_1, \ldots, x_{i-1})$
§ X, Y independent iff: $\forall x, y: P(x, y) = P(x)\, P(y)$
  equivalently, iff: $\forall x, y: P(x \mid y) = P(x)$
  equivalently, iff: $\forall x, y: P(y \mid x) = P(y)$
§ X and Y are conditionally independent given Z iff: $\forall x, y, z: P(x, y \mid z) = P(x \mid z)\, P(y \mid z)$
  equivalently, iff: $\forall x, y, z: P(x \mid y, z) = P(x \mid z)$
  equivalently, iff: $\forall x, y, z: P(y \mid x, z) = P(y \mid z)$

Inference by Enumeration

§ P(sun)?
§ P(sun | winter)?
§ P(sun | winter, hot)?

| S | T | W | P |
|---|---|---|---|
| summer | hot | sun | 0.30 |
| summer | hot | rain | 0.05 |
| summer | cold | sun | 0.10 |
| summer | cold | rain | 0.05 |
| winter | hot | sun | 0.10 |
| winter | hot | rain | 0.05 |
| winter | cold | sun | 0.15 |
| winter | cold | rain | 0.20 |

Bayes' Nets Recap

§ Representation
  § Chain rule → Bayes' net = DAG + CPTs
§ Conditional Independences
  § D-separation
§ Probabilistic Inference
  § Enumeration (exact, exponential complexity)
  § Variable elimination (exact, worst-case exponential complexity, often better)
  § Probabilistic inference is NP-complete
  § Sampling (approximate)

Chain Rule → Bayes Net

§ Chain rule: can always write any joint distribution as an incremental product of conditional distributions:
  $P(x_1, \ldots, x_n) = \prod_{i=1}^{n} P(x_i \mid x_1, \ldots, x_{i-1})$
§ Bayes nets: make conditional independence assumptions of the form:
  $P(x_i \mid x_1, \ldots, x_{i-1}) = P(x_i \mid \text{parents}(X_i))$
  giving us:
  $P(x_1, \ldots, x_n) = \prod_{i=1}^{n} P(x_i \mid \text{parents}(X_i))$
§ Example: (figure: the alarm network over B, E, A, J, M)

Probabilities in BNs

§ Bayes' nets implicitly encode joint distributions
  § As a product of local conditional distributions
  § To see what probability a BN gives to a full assignment, multiply all the relevant conditionals together:
    $P(x_1, \ldots, x_n) = \prod_{i=1}^{n} P(x_i \mid \text{parents}(X_i))$
§ This lets us reconstruct any entry of the full joint
§ Not every BN can represent every joint distribution
  § The topology enforces certain conditional independencies
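The three weather queries can be answered mechanically from the joint table. Below is a minimal Python sketch of inference by enumeration. It assumes the joint is stored as a dict keyed by (S, T, W) tuples; the names `JOINT`, `VARS` and `prob` are illustrative choices, not part of the slides. Each query sums the rows consistent with query and evidence and divides by the mass of the evidence, which is the conditional-probability definition from the recap.

```python
# Joint distribution P(S, T, W) copied from the table above.
JOINT = {
    ("summer", "hot", "sun"): 0.30,
    ("summer", "hot", "rain"): 0.05,
    ("summer", "cold", "sun"): 0.10,
    ("summer", "cold", "rain"): 0.05,
    ("winter", "hot", "sun"): 0.10,
    ("winter", "hot", "rain"): 0.05,
    ("winter", "cold", "sun"): 0.15,
    ("winter", "cold", "rain"): 0.20,
}
VARS = ("S", "T", "W")


def prob(query, evidence=None):
    """P(query | evidence) by enumeration; both arguments map variable -> value."""
    evidence = evidence or {}

    def consistent(row, assignment):
        return all(row[VARS.index(var)] == val for var, val in assignment.items())

    # Denominator: total mass of the rows that agree with the evidence.
    p_evidence = sum(p for row, p in JOINT.items() if consistent(row, evidence))
    # Numerator: mass of the rows that agree with both the query and the evidence.
    p_both = sum(p for row, p in JOINT.items()
                 if consistent(row, {**evidence, **query}))
    return p_both / p_evidence


for q, e in [({"W": "sun"}, {}),
             ({"W": "sun"}, {"S": "winter"}),
             ({"W": "sun"}, {"S": "winter", "T": "hot"})]:
    print(q, e, round(prob(q, e), 4))
```

Rounded, the three queries come out as 0.65, 0.5 and 0.6667. Because P(sun | winter) = 0.5 differs from P(sun) = 0.65, W and S are not independent under this joint, which is exactly the test in the recap's independence definition.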

Example: Alarm Network

(Figure: Burglary (B) and Earthquake (E) are the parents of Alarm (A); A is the parent of John calls (J) and Mary calls (M).)

| B | P(B) |
|---|---|
| +b | 0.001 |
| ¬b | 0.999 |

| E | P(E) |
|---|---|
| +e | 0.002 |
| ¬e | 0.998 |

| B | E | A | $P(A \mid B, E)$ |
|---|---|---|---|
| +b | +e | +a | 0.95 |
| +b | +e | ¬a | 0.05 |
| +b | ¬e | +a | 0.94 |
| +b | ¬e | ¬a | 0.06 |
| ¬b | +e | +a | 0.29 |
| ¬b | +e | ¬a | 0.71 |
| ¬b | ¬e | +a | 0.001 |
| ¬b | ¬e | ¬a | 0.999 |

| A | J | $P(J \mid A)$ |
|---|---|---|
| +a | +j | 0.9 |
| +a | ¬j | 0.1 |
| ¬a | +j | 0.05 |
| ¬a | ¬j | 0.95 |

| A | M | $P(M \mid A)$ |
|---|---|---|
| +a | +m | 0.7 |
| +a | ¬m | 0.3 |
| ¬a | +m | 0.01 |
| ¬a | ¬m | 0.99 |

Size of a Bayes' Net

§ How big is a joint distribution over N Boolean variables?
  $2^N$
§ Size of representation if we use the chain rule:
  $2^N$
§ How big is an N-node net if nodes have up to k parents?
  $O(N \cdot 2^{k+1})$
§ Both give you the power to calculate $P(X_1, X_2, \ldots, X_N)$
§ BNs:
  § Huge space savings!
  § Easier to elicit local CPTs
  § Faster to answer queries

Bayes Nets: Assumptions

§ Assumptions made by specifying the graph:
  $P(x_i \mid x_1, \ldots, x_{i-1}) = P(x_i \mid \text{parents}(X_i))$
§ Given a Bayes net graph, additional conditional independences can be read off directly from the graph
§ Question: Are two nodes guaranteed to be independent given certain evidence?
  § If no, can prove with a counterexample
    § I.e., pick a set of CPTs, and show that the independence assumption is violated by the resulting distribution
  § If yes, can prove with
    § Algebra (tedious)
    § D-separation (analyzes graph)

D-Separation

§ Question: Are X and Y conditionally independent given evidence variables {Z}?
  § Yes, if X and Y are "separated" by Z
  § Consider all (undirected) paths from X to Y
  § No active paths = independence!
§ A path is active if each triple is active:
  § Causal chain A → B → C where B is unobserved (either direction)
  § Common cause A ← B → C where B is unobserved
  § Common effect (aka v-structure) A → B ← C where B or one of its descendants is observed
§ All it takes to block a path is a single inactive segment

(Figure: Active Triples vs. Inactive Triples.)

D-Separation

§ Given query $X_i \perp\!\!\!\perp X_j \mid \{X_{k_1}, \ldots, X_{k_n}\}$
§ Shade all evidence nodes
§ For all (undirected!) paths between $X_i$ and $X_j$
  § Check whether path is active
    § If active return $X_i \not\perp\!\!\!\perp X_j \mid \{X_{k_1}, \ldots, X_{k_n}\}$
§ (If reaching this point all paths have been checked and shown inactive)
§ Return $X_i \perp\!\!\!\perp X_j \mid \{X_{k_1}, \ldots, X_{k_n}\}$

D-Separation Example

(Figure: independence queries on an example network with nodes L, R, B, D, T and T′.)
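The d-separation procedure above can be sketched in Python as follows. This is a minimal illustration that follows the slide literally: it enumerates every undirected simple path and tests each consecutive triple. The edge-list format and the name `d_separated` are assumptions made for this example. Enumerating all paths is exponential in general; practical implementations usually use a linear-time reachability search (for example, "Bayes ball") instead.

```python
def d_separated(edges, x, y, evidence):
    """Return True if x and y are d-separated given `evidence` in the DAG `edges`.

    Follows the slide procedure literally: enumerate every undirected simple
    path from x to y and report dependence as soon as one path is active.
    """
    evidence = set(evidence)
    children, parents = {}, {}
    for u, v in edges:  # each edge is a (parent, child) pair
        children.setdefault(u, set()).add(v)
        parents.setdefault(v, set()).add(u)
    nodes = set(children) | set(parents)
    neighbors = {n: children.get(n, set()) | parents.get(n, set()) for n in nodes}

    def descendants(n):
        seen, stack = set(), [n]
        while stack:
            for c in children.get(stack.pop(), set()):
                if c not in seen:
                    seen.add(c)
                    stack.append(c)
        return seen

    def triple_active(a, b, c):
        if b in children.get(a, set()) and b in children.get(c, set()):
            # Common effect a -> b <- c: active iff b or a descendant of b is observed.
            return b in evidence or bool(descendants(b) & evidence)
        # Causal chain (either direction) or common cause: active iff b is unobserved.
        return b not in evidence

    def paths(path):
        # Depth-first enumeration of undirected simple paths ending at y.
        if path[-1] == y:
            yield path
            return
        for n in neighbors[path[-1]]:
            if n not in path:
                yield from paths(path + [n])

    for path in paths([x]):
        if all(triple_active(*path[i:i + 3]) for i in range(len(path) - 2)):
            return False  # a single active path breaks the independence guarantee
    return True  # every path contains at least one inactive triple


ALARM = [("B", "A"), ("E", "A"), ("A", "J"), ("A", "M")]
print(d_separated(ALARM, "B", "E", set()))  # True
print(d_separated(ALARM, "B", "E", {"J"}))  # False
print(d_separated(ALARM, "J", "M", {"A"}))  # True
```

On the alarm network, B and E are d-separated with no evidence because the v-structure at A is inactive. Observing J, a descendant of A, activates that v-structure. Observing A blocks the common-cause path between J and M.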

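To make the "Probabilities in BNs" and "Size of a Bayes' Net" points concrete, here is a small Python sketch that rebuilds full-joint entries of the alarm network from its CPTs. The encoding, which stores each CPT as P(child = true | parents), and the helper names `bern` and `joint` are illustrative assumptions. The sketch checks that the 32 reconstructed entries sum to 1. It also compares the $2^5 = 32$ entries of the full joint with the CPT rows the network stores.

```python
from itertools import product

# Alarm-network CPTs from the tables above, stored as P(child = true | parents).
P_B = 0.001                                   # P(+b)
P_E = 0.002                                   # P(+e)
P_A = {(True, True): 0.95, (True, False): 0.94,
       (False, True): 0.29, (False, False): 0.001}  # P(+a | b, e)
P_J = {True: 0.9, False: 0.05}                # P(+j | a)
P_M = {True: 0.7, False: 0.01}                # P(+m | a)


def bern(p_true: float, value: bool) -> float:
    """Probability of a Boolean outcome, given the probability that it is true."""
    return p_true if value else 1.0 - p_true


def joint(b: bool, e: bool, a: bool, j: bool, m: bool) -> float:
    """P(b, e, a, j, m): one relevant CPT entry per node, multiplied together."""
    return (bern(P_B, b) * bern(P_E, e) * bern(P_A[(b, e)], a)
            * bern(P_J[a], j) * bern(P_M[a], m))


# Every one of the 32 full-joint entries is reconstructed on demand from the CPTs.
total = sum(joint(*x) for x in product([True, False], repeat=5))

# Table sizes: full joint over N = 5 Boolean variables vs. CPT rows stored.
N = 5
num_parents = {"B": 0, "E": 0, "A": 2, "J": 1, "M": 1}
cpt_rows = sum(2 ** (k + 1) for k in num_parents.values())

print(joint(True, False, True, True, True))  # P(+b, ¬e, +a, +j, +m)
print(2 ** N, cpt_rows)  # 32 20
```

The single entry printed is $0.001 \cdot 0.998 \cdot 0.94 \cdot 0.9 \cdot 0.7 \approx 5.91 \times 10^{-4}$. The network stores 20 table rows instead of 32, and the gap widens quickly as N grows with k fixed.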
All Conditional Independences

§ Given a Bayes net structure, can run d-separation to build a complete list of conditional independences that are necessarily true of the form
  $X_i \perp\!\!\!\perp X_j \mid \{X_{k_1}, \ldots, X_{k_n}\}$
§ This list determines the set of probability distributions that can be represented by Bayes' nets with this graph structure

Topology Limits Distributions

§ Given some graph topology G, only certain joint distributions can be encoded
§ The graph structure guarantees certain (conditional) independences
§ (There might be more independence)
§ Adding arcs increases the set of distributions, but has several costs
§ Full conditioning can encode any distribution

(Figure: three-node graphs over X, Y, Z and the independences each guarantees. With no arcs: $\{X \perp\!\!\!\perp Y, X \perp\!\!\!\perp Z, Y \perp\!\!\!\perp Z, X \perp\!\!\!\perp Z \mid Y, X \perp\!\!\!\perp Y \mid Z, Y \perp\!\!\!\perp Z \mid X\}$. With the chains and the common cause through Y: $\{X \perp\!\!\!\perp Z \mid Y\}$. Fully connected: $\{\}$.)

Inference by Enumeration

§ Given unlimited time, inference in BNs is easy
§ Recipe:
  § State the marginal probabilities you need
  § Figure out ALL the atomic probabilities you need
  § Calculate and combine them
§ Example: the alarm network (B, E, A, J, M)

Example: Enumeration

§ In this simple method, we only need the BN to synthesize the joint entries

Variable Elimination

§ Why is inference by enumeration so slow?
  § You join up the whole joint distribution before you sum out the hidden variables
  § You end up repeating a lot of work!
§ Idea: interleave joining and marginalizing!
  § Called "Variable Elimination"
  § Still NP-hard, but usually much faster than inference by enumeration

Variable Elimination Outline

§ Track objects called factors
§ Initial factors are local CPTs (one per node), here for the chain R → T → L:

| R | P(R) |
|---|---|
| +r | 0.1 |
| -r | 0.9 |

| R | T | $P(T \mid R)$ |
|---|---|---|
| +r | +t | 0.8 |
| +r | -t | 0.2 |
| -r | +t | 0.1 |
| -r | -t | 0.9 |

| T | L | $P(L \mid T)$ |
|---|---|---|
| +t | +l | 0.3 |
| +t | -l | 0.7 |
| -t | +l | 0.1 |
| -t | -l | 0.9 |

§ Any known values are selected
  § E.g. if we know $L = +l$, the initial factors are:

| R | P(R) |
|---|---|
| +r | 0.1 |
| -r | 0.9 |

| R | T | $P(T \mid R)$ |
|---|---|---|
| +r | +t | 0.8 |
| +r | -t | 0.2 |
| -r | +t | 0.1 |
| -r | -t | 0.9 |

| T | L | $P(+l \mid T)$ |
|---|---|---|
| +t | +l | 0.3 |
| -t | +l | 0.1 |

§ VE: Alternately join factors and eliminate variables
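The inference-by-enumeration recipe can be sketched on the alarm network. For illustration, the query here is P(B | +j, +m); that choice, and the helper names, are assumptions of this example. The code synthesizes every atomic entry consistent with the evidence from the CPTs, sums out the hidden variables E and A, and normalizes. The nested sum over all hidden assignments repeats shared sub-products, and that repeated work is what variable elimination avoids.

```python
from itertools import product

# Alarm-network CPTs (from the tables earlier), stored as P(child = true | parents).
P_B, P_E = 0.001, 0.002
P_A = {(True, True): 0.95, (True, False): 0.94,
       (False, True): 0.29, (False, False): 0.001}
P_J = {True: 0.9, False: 0.05}
P_M = {True: 0.7, False: 0.01}


def bern(p_true, value):
    """Probability of a Boolean outcome, given the probability that it is true."""
    return p_true if value else 1.0 - p_true


def joint(b, e, a, j, m):
    """One full-joint entry, synthesized as a product of CPT entries."""
    return (bern(P_B, b) * bern(P_E, e) * bern(P_A[(b, e)], a)
            * bern(P_J[a], j) * bern(P_M[a], m))


def posterior_burglary(j=True, m=True):
    """P(B | j, m) by enumeration: sum out hidden E and A, then normalize."""
    unnormalized = {}
    for b in (True, False):
        # Add up every atomic event consistent with this value of B and the evidence.
        unnormalized[b] = sum(joint(b, e, a, j, m)
                              for e, a in product((True, False), repeat=2))
    z = sum(unnormalized.values())  # equals P(j, m)
    return {b: p / z for b, p in unnormalized.items()}


print(round(posterior_burglary()[True], 3))  # 0.284
```

Even with both neighbors calling, a burglary remains less likely than not (about 0.284), because the prior P(+b) = 0.001 is so small.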

Variable Elimination Example

Join R: $P(R) \times P(T \mid R) \rightarrow P(R, T)$

| R | T | P(R, T) |
|---|---|---|
| +r | +t | 0.08 |
| +r | -t | 0.02 |
| -r | +t | 0.09 |
| -r | -t | 0.81 |

Sum out R: $P(R, T) \rightarrow P(T)$

| T | P(T) |
|---|---|
| +t | 0.17 |
| -t | 0.83 |

Join T: $P(T) \times P(L \mid T) \rightarrow P(T, L)$

| T | L | P(T, L) |
|---|---|---|
| +t | +l | 0.051 |
| +t | -l | 0.119 |
| -t | +l | 0.083 |
| -t | -l | 0.747 |

Sum out T: $P(T, L) \rightarrow P(L)$

| L | P(L) |
|---|---|
| +l | 0.134 |
| -l | 0.866 |

\* VE is variable elimination

Example

(Figures: a worked variable elimination example in four steps: choose E, choose A, finish with B, normalize.)

General Variable Elimination

§ Query: $P(Q \mid E_1 = e_1, \ldots, E_k = e_k)$
§ Start with initial factors:
  § Local CPTs (but instantiated by evidence)
§ While there are still hidden variables (not Q or evidence):
  § Pick a hidden variable H
  § Join all factors mentioning H
  § Eliminate (sum out) H
§ Join all remaining factors and normalize

Another (bit more abstractly worked out) Variable Elimination Example

(Figure: worked example.)

Computational complexity critically depends on the largest factor generated in this process. Size of factor = number of entries in the table. In the example above (assuming binary variables), all factors generated are of size 2, as each has only one variable (Z, Z, and X3, respectively).
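The factor operations in the Variable Elimination Example can be sketched in Python as below. This assumes a factor is stored as a (variables, table) pair. `join`, `sum_out`, `select` and `normalize` are hypothetical helper names for this sketch, not a library API. Running the slide's order (join R, sum out R, join T, sum out T) reproduces P(T) = (0.17, 0.83) and P(L) = (0.134, 0.866). The last lines follow the General Variable Elimination recipe for P(T | +l): select the evidence, eliminate the hidden variable R, join the remaining factors, and normalize.

```python
# A factor is a pair (variables, table); table maps value tuples to numbers.

def join(f, g):
    """Pointwise product of two factors (the 'Join' step)."""
    fv, ft = f
    gv, gt = g
    variables = fv + tuple(v for v in gv if v not in fv)
    table = {}
    for fa, fp in ft.items():
        for ga, gp in gt.items():
            assignment = dict(zip(fv, fa))
            # Only combine rows that agree on the variables the factors share.
            if any(assignment.get(v, x) != x for v, x in zip(gv, ga)):
                continue
            assignment.update(zip(gv, ga))
            table[tuple(assignment[v] for v in variables)] = fp * gp
    return variables, table


def sum_out(f, var):
    """Marginalize `var` out of factor f (the 'Sum out' step)."""
    fv, ft = f
    i = fv.index(var)
    table = {}
    for a, p in ft.items():
        key = a[:i] + a[i + 1:]
        table[key] = table.get(key, 0.0) + p
    return fv[:i] + fv[i + 1:], table


def select(f, var, value):
    """Keep only the rows consistent with the observed evidence var = value."""
    fv, ft = f
    i = fv.index(var)
    return fv, {a: p for a, p in ft.items() if a[i] == value}


def normalize(f):
    """Rescale a factor's entries so that they sum to 1."""
    fv, ft = f
    z = sum(ft.values())
    return fv, {a: p / z for a, p in ft.items()}


# Initial factors: the local CPTs of the chain R -> T -> L.
P_R = (("R",), {("+r",): 0.1, ("-r",): 0.9})
P_T_R = (("R", "T"), {("+r", "+t"): 0.8, ("+r", "-t"): 0.2,
                      ("-r", "+t"): 0.1, ("-r", "-t"): 0.9})
P_L_T = (("T", "L"), {("+t", "+l"): 0.3, ("+t", "-l"): 0.7,
                      ("-t", "+l"): 0.1, ("-t", "-l"): 0.9})

# Query P(L): join R, sum out R, join T, sum out T.
p_T = sum_out(join(P_R, P_T_R), "R")   # approx. +t: 0.17, -t: 0.83
p_L = sum_out(join(p_T, P_L_T), "T")   # approx. +l: 0.134, -l: 0.866

# Query P(T | +l): select evidence, eliminate R, join remaining factors, normalize.
p_T_given_l = normalize(join(p_T, select(P_L_T, "L", "+l")))
print(p_L)
print(p_T_given_l)
```

No factor here has more than two variables. That is why this elimination order stays cheap, in line with the closing remark that the cost is governed by the largest factor generated.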
