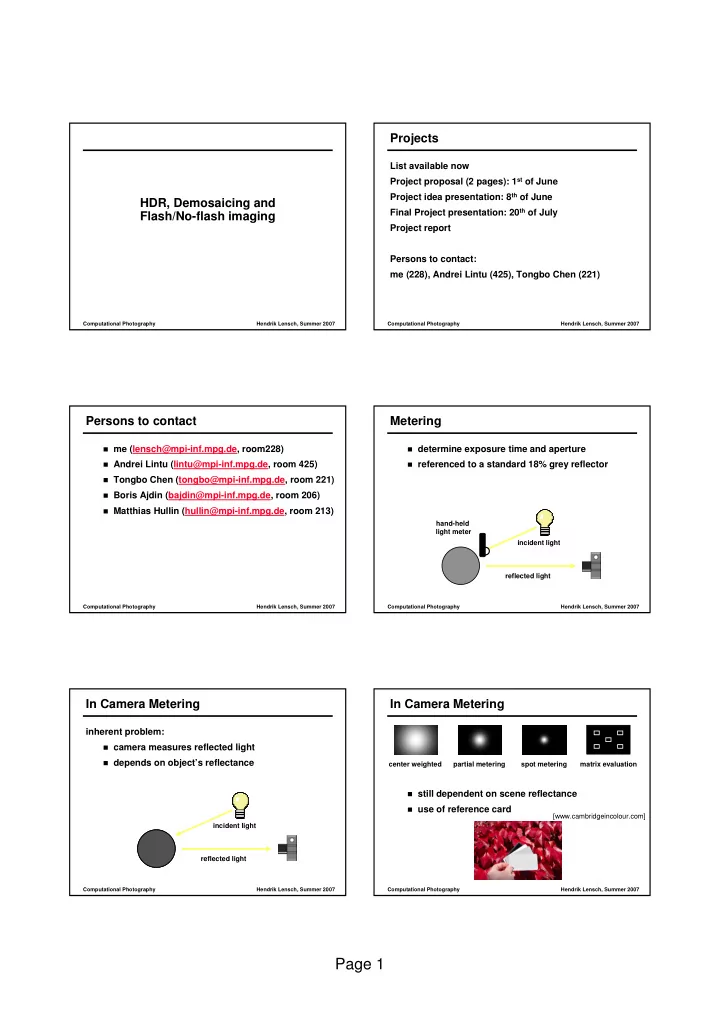

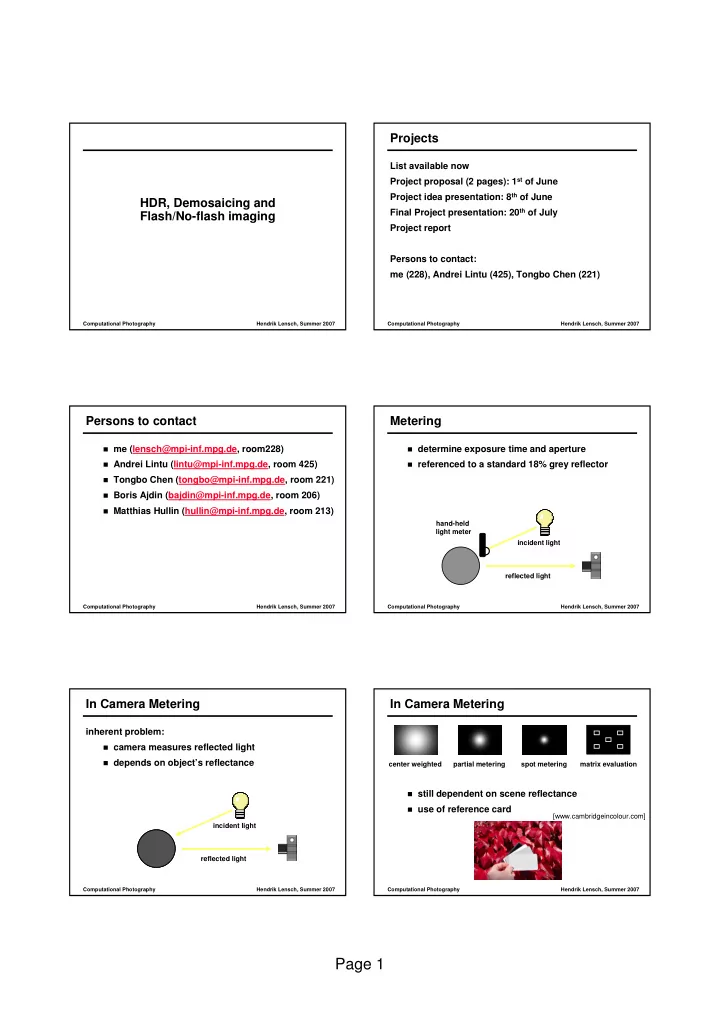

Projects

- List available now
- Project proposal (2 pages): 1st of June
- Project idea presentation: 8th of June
- Final project presentation: 20th of July
- Project report
- Persons to contact: me (228), Andrei Lintu (425), Tongbo Chen (221)

HDR, Demosaicing and Flash/No-flash imaging

Computational Photography Hendrik Lensch, Summer 2007

Persons to contact

- me (lensch@mpi-inf.mpg.de, room 228)
- Andrei Lintu (lintu@mpi-inf.mpg.de, room 425)
- Tongbo Chen (tongbo@mpi-inf.mpg.de, room 221)
- Boris Ajdin (bajdin@mpi-inf.mpg.de, room 206)
- Matthias Hullin (hullin@mpi-inf.mpg.de, room 213)

Metering

- determine exposure time and aperture
- referenced to a standard 18% grey reflector
- hand-held light meter [figure labels: incident light, reflected light]

In Camera Metering

- center weighted
- partial metering
- spot metering
- matrix evaluation
- still dependent on scene reflectance

[www.cambridgeincolour.com]

In Camera Metering

- inherent problem: camera measures reflected light, which depends on the object's reflectance
- use of reference card
- [figure labels: incident light, reflected light]

Exposure Bracketing

- capture additional over- and underexposed images
- how much variation?
- how to combine?

Dynamic Range in Real World Images

- natural scenes: 18 stops (2^18)
- human: 17 stops (after adaptation 30 stops ~ 1:1,000,000,000)
- camera: 10-16 stops

[Stumpfel et al. 00]

Dynamic Range of Cameras

example: photographic camera with standard CCD sensor

- dynamic range of sensor: 1:1000
- exposure variation (handheld camera/non-static scene): 1/60 s to 1/6000 s exposure time, 1:100
- varying aperture: f/2.0 to f/22.0, ~1:100
- exposure bias/varying "sensitivity": 1:10
- total (sequential): 1:100,000,000
- simultaneous dynamic range still only 1:1000
- similar situation for analog cameras

High Dynamic Range (HDR) Imaging

basic idea of multi-exposure techniques:

- combine multiple images with different exposure settings
- makes use of available sequential dynamic range
- other techniques available (e.g. HDR video)
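The factors on the "Dynamic Range of Cameras" slide multiply to the quoted sequential total of 1:100,000,000, which is about 26.6 stops. The following minimal sketch redoes that arithmetic; the function name `ratio_to_stops` and the dictionary layout are illustrative choices, not part of the lecture.

```python
import math


def ratio_to_stops(ratio: float) -> float:
    """Convert a contrast ratio 1:ratio into photographic stops (factors of two)."""
    return math.log2(ratio)


# Contrast factors as listed on the slide.
factors = {
    "sensor": 1_000,
    "exposure time": 100,
    "aperture": 100,
    "exposure bias / sensitivity": 10,
}

# The sequential dynamic range is the product of all factors.
total = math.prod(factors.values())

for name, ratio in factors.items():
    print(f"{name}: 1:{ratio} ~ {ratio_to_stops(ratio):.1f} stops")
print(f"sequential total: 1:{total:,} ~ {ratio_to_stops(total):.1f} stops")
```

Because stops are logarithmic, the per-factor stops simply add up to the total, while the simultaneous range stays at the sensor's roughly 10 stops.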

OECF Test Chart

- absolute calibration

High Dynamic Range Imaging

- limited dynamic range of cameras is a problem
  - shadows are underexposed
  - bright areas are overexposed
  - sampling density is not sufficient
- some modern CMOS imagers have a higher, and often sufficient, dynamic range compared to most CCD imagers

High Dynamic Range (HDR) Imaging

general idea of High Dynamic Range (HDR) imaging:

- combine multiple images with different exposure times
  - pick for each pixel a well exposed image
  - response curve needs to be known
  - don't change the aperture, because that changes the depth of field

High Dynamic Range Imaging

- analog film with several emulsions of different sensitivity levels by Wyckoff in the 1960s
  - dynamic range of about 10^8
- commonly used method for digital photography by Debevec and Malik (1997)
  - selects a small number of pixels from the images
  - performs an optimization of the response curve with a smoothness constraint
- newer method by Robertson et al. (1999)
  - optimization over all pixels in all images

HDR Imaging [Robertson et al. 99]

Principle of this approach:

- calculate an HDR image using the response curve
- find a better response curve using the HDR image

(to be iterated until convergence)

HDR Imaging [Robertson et al. 99]

input:

- series of $i$ images with exposure times $t_i$ and pixel values $y_{ij}$, where $y_{ij} = f(t_i x_j)$
- a weighting function $w_{ij} = w_{ij}(y_{ij})$ (bell shaped curve)
- a camera response curve $I(y_{ij})$
  - initial assumption: linear response

task:

- find irradiance (luminance) $x_j$
- recover response curve $I(y_{ij})$

$$f^{-1}(y_{ij}) = t_i x_j = I_{y_{ij}}$$

HDR Imaging [Robertson et al. 99]

input:

- series of $i$ images with exposure times $t_i$ and pixel values $y_{ij}$

⇒ calculate HDR values $x_j$ from images using

$$x_j = \frac{\sum_i w_{ij}\, t_i\, I_{y_{ij}}}{\sum_i w_{ij}\, t_i^2}$$

HDR Imaging [Robertson et al. 99]

optimizing the response curve $I(y_{ij})$ resp. $I(m)$:

- minimization of objective function $O$

$$O = \sum_{i,j} w_{ij} \left( I_{y_{ij}} - t_i x_j \right)^2$$

- using Gauss-Seidel relaxation yields

$$I_m = \frac{1}{\mathrm{Card}(E_m)} \sum_{(i,j) \in E_m} t_i x_j, \qquad E_m = \{ (i,j) : y_{ij} = m \}$$

- normalization of $I$ so that $I_{128} = 1.0$

HDR Imaging [Robertson et al. 99]

both steps

- calculation of an HDR image using $I$
- optimization of $I$ using the HDR image

are now iterated until convergence

- criterion: decrease of $O$ below some threshold
- usually about 5 iterations

HDR Imaging [Robertson et al. 99]

[figure: series of input images at 1/2000 s, 1/500 s, 1/125 s, 1/30 s and 1/8 s; plot axis: $\log(I(y_{ij}))$]

Example: Capturing Environment Maps

[figure: series of input images at 1/2000 s, 1/500 s, 1/125 s, 1/30 s and 1/8 s]
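The two alternating steps of the Robertson et al. scheme (compute HDR values $x_j$ from the current response, then re-estimate the response $I_m$ from those values) can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the synthetic gamma-2.2 test data, and the rule that unobserved pixel levels keep their previous response value are all choices made for this sketch.

```python
import math

LEVELS = 256  # 8-bit pixel values


def weight(y: int) -> float:
    """Bell-shaped weight: exp(-4 (y - 127.5)^2 / 127.5^2)."""
    return math.exp(-4.0 * (y - 127.5) ** 2 / 127.5 ** 2)


def estimate_irradiance(images, times, I):
    """x_j = sum_i w_ij t_i I[y_ij] / sum_i w_ij t_i^2."""
    x = []
    for j in range(len(images[0])):
        num = sum(weight(img[j]) * t * I[img[j]] for img, t in zip(images, times))
        den = sum(weight(img[j]) * t * t for img, t in zip(images, times))
        x.append(num / den)
    return x


def update_response(images, times, x, I):
    """I_m = mean of t_i x_j over E_m = {(i, j): y_ij = m}, then scale so I_128 = 1."""
    sums = [0.0] * LEVELS
    counts = [0] * LEVELS
    for img, t in zip(images, times):
        for j, y in enumerate(img):
            sums[y] += t * x[j]
            counts[y] += 1
    # Assumption of this sketch: levels that never occur keep their old value.
    new_I = [sums[m] / counts[m] if counts[m] else I[m] for m in range(LEVELS)]
    scale = new_I[128]
    return [v / scale for v in new_I]


def objective(images, times, x, I):
    """O = sum_{i,j} w_ij (I[y_ij] - t_i x_j)^2."""
    return sum(weight(y) * (I[y] - t * x[j]) ** 2
               for img, t in zip(images, times) for j, y in enumerate(img))


def robertson(images, times, iterations=5):
    I = [m / 128.0 for m in range(LEVELS)]  # linear initial response, I_128 = 1
    history = []
    for _ in range(iterations):
        x = estimate_irradiance(images, times, I)
        history.append(objective(images, times, x, I))  # O for the current I
        I = update_response(images, times, x, I)
    x = estimate_irradiance(images, times, I)  # HDR values for the final response
    return x, I, history


# Synthetic demo (assumed data): a gamma-2.2 camera observing 200 irradiance values.
times = [0.125, 0.25, 0.5, 1.0]
x_true = [0.05 + 0.95 * j / 199 for j in range(200)]
images = [[round(255 * (t * x) ** (1 / 2.2)) for x in x_true] for t in times]
x_est, I_est, history = robertson(images, times)
print("objective per iteration:", [f"{o:.3g}" for o in history])
```

Each image is a flat list of integer pixel values, one list per exposure. A fixed iteration count stands in for the slides' convergence criterion (decrease of $O$ below a threshold); swapping the loop for a threshold test on `history` is straightforward.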

Example: Capturing Environment Maps

[figure: series of input images]

Weighting Function [Robertson et al. 99]

choice of weighting function $w(y_{ij})$ for response recovery

- for 8 bit images

$$w_{ij} = \exp\left( -4\, \frac{(y_{ij} - 127.5)^2}{127.5^2} \right)$$

- possible correction at both ends (over/underexposure)
- motivated by general noise model

Weighting function [Robertson et al. 03]

choice of weighting function $w(y_{ij})$ for HDR reconstruction

- introduce certainty function $c$ as derivative of the response curve with logarithmic exposure axis
- approximation of response function by cubic spline to compute derivative

$$w_{ij} = w(y_{ij}) = c(I_{y_{ij}})$$

Algorithm of Robertson et al.

- consider response curve gradient
- what would be the best curve based on noise?

[Robertson et al. 2003]

$$w_{ij} = w(y_{ij}) = c(I_{y_{ij}})$$

Algorithm of Robertson et al.

discussion

- method very easy
- doesn't make assumptions about response curve shape
- converges fast
- takes all available input data into account
- can be extended to >8 bit color depth
- 16bit should be followed by smoothing

Input Images for Response Recovery

my favorite:

- grey card, out of focus, smooth illumination gradient

advantages

- uniform histogram of values
- no color processing or sharpening interfering with the result
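The bell-shaped weighting function for response recovery is simple to evaluate directly. The sketch below implements the 8-bit formula from the slide. Two things are assumptions added for illustration: the `max_value` parameter, which generalizes the constant 127.5 to other bit depths, and the `zero_ends` flag, which is one possible form of the "correction at both ends" (the slides do not specify which correction is meant).

```python
import math


def robertson_weight(y: float, max_value: int = 255, zero_ends: bool = False) -> float:
    """Bell-shaped weight centred on mid-grey.

    For 8-bit data (max_value = 255) this is exp(-4 (y - 127.5)^2 / 127.5^2).
    """
    # Assumed end correction: ignore fully under- or overexposed pixels.
    if zero_ends and (y <= 0 or y >= max_value):
        return 0.0
    mid = max_value / 2.0
    return math.exp(-4.0 * (y - mid) ** 2 / mid ** 2)


# Print the weight at a few 8-bit pixel values.
for y in (0, 64, 128, 192, 255):
    print(y, round(robertson_weight(y), 4))
```

Mid-grey pixels get a weight close to 1, while values at either end of the range fall to exp(-4), so badly exposed samples contribute little to the estimate.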

Input Images for HDR Generation

how many images are necessary to get good results?

- depends on scene dynamic range and on quality requirements
- most often a difference of two stops (factor of 4) between exposures is sufficient

[Grossberg & Nayar 2003]

HDR-Video

- LDR [Bennett & McMillan 2005]
- HDR image formats [OpenEXR, HDR JPEG]
- HDR MPEG Encoding [Mantiuk et al. 2004]
- HDR + motion compensation [Kang et al. 2003]

White Balance

- capture the spectral characteristics of the light source to assure correct color reproduction
- [figure labels: sun, tungsten, daylight, incandescent, fluorescent, flash]

White Balance

- built-in function
- derive scale from white point
- [figure labels: red, green, blue, infrared, ultraviolet; axis: wavelength]
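"Derive scale from white point" can be read as computing one gain per color channel so that a measured white (or grey) reference becomes neutral. The sketch below shows that reading. It assumes linear RGB values and keeps the green channel fixed, which is a common convention but is not stated on the slides; the function names are illustrative.

```python
def white_balance_gains(white_rgb):
    """Per-channel gains that map the measured white point to neutral grey.

    Assumption: green is the reference channel and keeps a gain of 1.
    """
    r, g, b = white_rgb
    return (g / r, 1.0, g / b)


def apply_white_balance(pixel, gains):
    """Scale each channel of a linear RGB pixel by its gain."""
    return tuple(c * k for c, k in zip(pixel, gains))


# Example: a white card photographed under warm (tungsten-like) light looks reddish.
measured_white = (0.8, 0.6, 0.4)
gains = white_balance_gains(measured_white)
balanced = apply_white_balance(measured_white, gains)
print("gains:", gains)
print("balanced white:", balanced)
```

After scaling, the reference patch has equal red, green and blue values, and the same gains are applied to every pixel of the image.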
