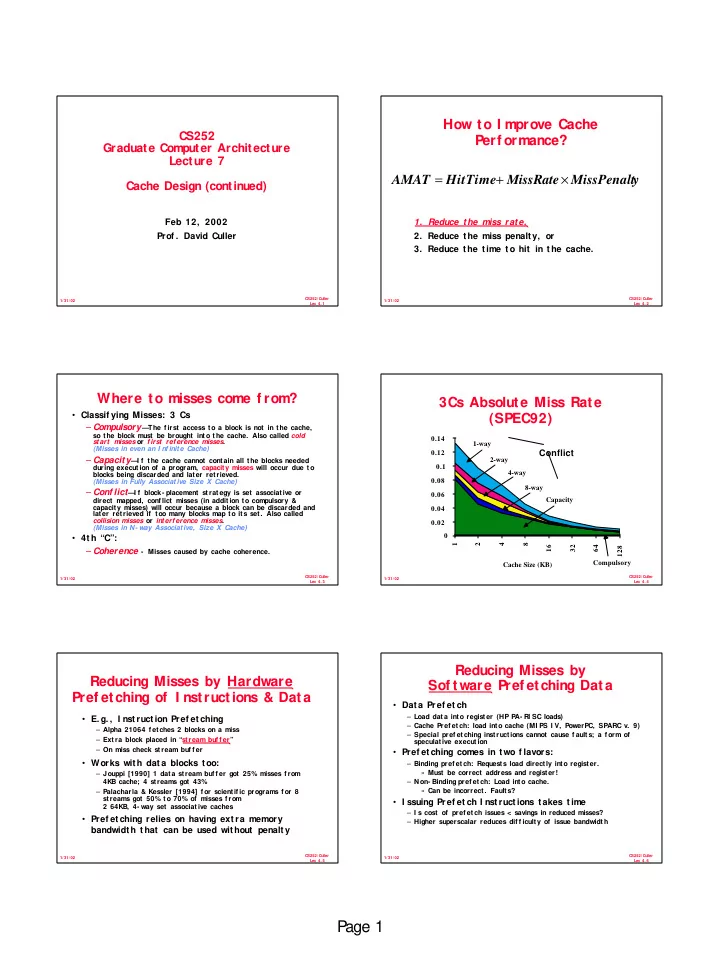

How to I mprove Cache CS252 Perf ormance? Graduate Computer Architecture Lecture 7 = + × AMAT HitTime MissRate MissPenalt y Cache Design (continued) Feb 12, 2002 1. Reduce the miss rate, Prof . David Culler 2. Reduce t he miss penalt y, or 3. Reduce the time to hit in the cache. CS252/ Culler CS252/ Culler 1/ 31/ 02 1/ 31/ 02 Lec 4. 1 Lec 4. 2 Where to misses come f rom? 3Cs Absolute Miss Rate • Classif ying Misses: 3 Cs (SPEC92) – Compulsory —The f irst access to a block is not in the cache, so the block must be brought into the cache. Also called cold 0.14 start misses or f irst ref erence misses . 1-way (Misses in even an I nf inite Cache) Conflict 0.12 – Capacit y —I f the cache cannot contain all the blocks needed 2-way during execution of a program, capacity misses will occur due t o 0.1 4-way blocks being discarded and later retrieved. 0.08 (Misses in Fully Associative Size X Cache) 8-way – Conf lict —I f block- placement strategy is set associative or 0.06 Capacity direct mapped, conf lict misses (in addition to compulsory & capacity misses) will occur because a block can be discarded and 0.04 later retrieved if too many blocks map to its set. Also called collision misses or interf erence misses . 0.02 (Misses in N- way Associative, Size X Cache) 0 • 4t h “C”: 1 2 4 8 16 32 64 128 – Coherence - Misses caused by cache coherence. Compulsory Cache Size (KB) CS252/ Culler CS252/ Culler 1/ 31/ 02 1/ 31/ 02 Lec 4. 3 Lec 4. 4 Reducing Misses by Reducing Misses by Hardware Sof t ware Pref etching Dat a Pref etching of I nstructions & Data • Data Pref et ch – Load data into register (HP PA- RI SC loads) • E. g. , I nst ruct ion Pref et ching – Cache Pref etch: load into cache (MI PS I V, PowerPC, SPARC v. 9) – Alpha 21064 f etches 2 blocks on a miss – Special pref etching instructions cannot cause f aults; a f orm of – Extra block placed in “stream buf f er” speculat ive execut ion – On miss check stream buf f er • Pref et ching comes in t wo f lavors: • Works wit h dat a blocks t oo: – Binding pref etch: Requests load directly into register. » Must be correct address and register! – Jouppi [1990] 1 data stream buf f er got 25% misses f rom 4KB cache; 4 streams got 43% – Non- Binding pref etch: Load into cache. » Can be incorrect. Faults? – Palacharla & Kessler [1994] f or scientif ic programs f or 8 streams got 50% to 70% of misses f rom • I ssuing Pref et ch I nst ruct ions t akes t ime 2 64KB, 4- way set associative caches – I s cost of pref etch issues < savings in reduced misses? • Pref et ching relies on having ext ra memory – Higher superscalar reduces dif f iculty of issue bandwidth bandwidt h t hat can be used wit hout penalt y CS252/ Culler CS252/ Culler 1/ 31/ 02 1/ 31/ 02 Lec 4. 5 Lec 4. 6 P age 1

Reducing Misses by Compiler Merging Arrays Example Optimizations • McFarling [1989] reduced caches misses by 75% /* Before: 2 sequential arrays */ on 8KB direct mapped cache, 4 byte blocks in sof tware int val[SIZE]; • I nstructions int key[SIZE]; – Reorder procedures in memory so as to reduce conf lict misses – Prof iling t o look at conf lict s(using t ools t hey developed) /* After: 1 array of stuctures */ • Dat a struct merge { int val; – Merging Arrays : improve spat ial localit y by single array of compound element s vs. 2 arrays int key; – Loop I nterchange : change nest ing of loops t o access dat a in order st ored in }; memory struct merge merged_array[SIZE]; – Loop Fusion : Combine 2 independent loops that have same looping and some variables overlap – Blocking : I mprove temporal locality by accessing “blocks” of data repeat edly Reducing conf lict s bet ween val & key; vs. going down whole columns or rows improve spatial locality CS252/ Culler CS252/ Culler 1/ 31/ 02 1/ 31/ 02 Lec 4. 7 Lec 4. 8 Loop I nterchange Example Loop Fusion Example /* Before */ for (i = 0; i < N; i = i+1) /* Before */ for (j = 0; j < N; j = j+1) for (k = 0; k < 100; k = k+1) a[i][j] = 1/b[i][j] * c[i][j]; for (j = 0; j < 100; j = j+1) for (i = 0; i < N; i = i+1) for (i = 0; i < 5000; i = i+1) for (j = 0; j < N; j = j+1) x[i][j] = 2 * x[i][j]; d[i][j] = a[i][j] + c[i][j]; /* After */ /* After */ for (k = 0; k < 100; k = k+1) for (i = 0; i < N; i = i+1) for (i = 0; i < 5000; i = i+1) for (j = 0; j < N; j = j+1) for (j = 0; j < 100; j = j+1) { a[i][j] = 1/b[i][j] * c[i][j]; x[i][j] = 2 * x[i][j]; d[i][j] = a[i][j] + c[i][j];} Sequent ial accesses inst ead of st riding 2 misses per access to a & c vs. one miss per t hrough memory every 100 words; improved access; improve spat ial localit y spat ial localit y CS252/ Culler CS252/ Culler 1/ 31/ 02 1/ 31/ 02 Lec 4. 9 Lec 4.10 Blocking Example Blocking Example /* Before */ for (i = 0; i < N; i = i+1) /* After */ for (j = 0; j < N; j = j+1) for (jj = 0; jj < N; jj = jj+B) for (kk = 0; kk < N; kk = kk+B) {r = 0; for (k = 0; k < N; k = k+1){ for (i = 0; i < N; i = i+1) for (j = jj; j < min(jj+B-1,N); j = j+1) r = r + y[i][k]*z[k][j];}; {r = 0; x[i][j] = r; for (k = kk; k < min(kk+B-1,N); k = k+1) { }; r = r + y[i][k]*z[k][j];}; • Two I nner Loops: x[i][j] = x[ i][j] + r; – Read all NxN elements of z[] }; – Read N elements of 1 row of y[] repeatedly • B called Blocking Fact or – Write N elements of 1 row of x[] 3 + N 2 to N 3 / B+2N 2 • Capacit y Misses f rom 2N • Capacit y Misses a f unct ion of N & Cache Size: – 2N 3 + N 2 => (assuming no conf lict; otherwise … • Conf lict Misses Too? ) • I dea: comput e on BxB submat rix t hat f it s CS252/ Culler CS252/ Culler 1/ 31/ 02 1/ 31/ 02 Lec 4.11 Lec 4.12 P age 2

Summary of Compiler Optimizations to Reducing Conf lict Misses by Blocking Reduce Cache Misses (by hand) vpenta (nasa7) 0.1 gmty (nasa7) tomcatv btrix (nasa7) Direct Mapped Cache 0.05 mxm (nasa7) spice cholesky Fully Associative Cache (nasa7) compress 0 0 50 100 150 1 1.5 2 2.5 3 Blocking Factor Performance Improvement • Conf lict misses in caches not FA vs. Blocking size merged loop loop fusion blocking – Lam et al [1991] a blocking f actor of 24 had a f if th the misses arrays interchange vs. 48 despite both f it in cache CS252/ Culler CS252/ Culler 1/ 31/ 02 1/ 31/ 02 Lec 4.13 Lec 4.14 Review: I mproving Cache Summary: Miss Rate Reduction Perf ormance CPUtime = IC × CPI Execution + Memory accesses × Miss rate × Miss penalty × Clock cycle time Instruction 1. Reduce the miss rate, • 3 Cs: Compulsory, Capacity, Conf lict 2. Reduce t he miss penalt y, or 0. Larger cache 1. Reduce Misses via Larger Block Size 3. Reduce t he t ime t o hit in t he cache. 2. Reduce Misses via Higher Associativity 3. Reducing Misses via Victim Cache 4. Reducing Misses via Pseudo - Associativity 5. Reducing Misses by HW Pref etching I nstr, Dat a 6. Reducing Misses by SW Pref et ching Dat a 7. Reducing Misses by Compiler Optimizations • Pref etching comes in two f lavors: – Binding pref et ch : Request s load direct ly int o regist er. » Must be correct address and regist er! – Non- Binding pref etch: Load int o cache. » Can be incorrect. Frees HW/ SW to guess! CS252/ Culler CS252/ Culler 1/ 31/ 02 1/ 31/ 02 Lec 4.15 Lec 4.16 Write Policy: Writ e Policy 2: Writ e- Through vs Write- Back Write Allocate vs Non- Allocate (What happens on write- miss) • Write- through: all writes update cache and underlying memory/ cache – Can always discard cached data - most up- to- date data is in memory – Cache control bit: only a valid bit • Write allocate: allocate new cache line in cache • Write- back: all writes simply update cache – Usually means that you have to do a “read miss” to – Can’t just discard cached data - may have to write it back to memory f ill in rest of the cache- line! – Cache cont rol bit s: bot h valid and dirty bit s • Other Advantages: – Alternative: per/ word valid bits – Writ e- through: • Write non- allocat e (or “writ e- around”): » memory (or ot her processors) always have lat est dat a » Simpler management of cache – Simply send write data through to underlying – Writ e- back: memory/ cache - don’t allocate new cache line! » much lower bandwidt h, since dat a of t en overwrit t en mult iple t imes » Better tolerance to long- lat ency memory? CS252/ Culler CS252/ Culler 1/ 31/ 02 1/ 31/ 02 Lec 4.17 Lec 4.18 P age 3

Recommend

More recommend