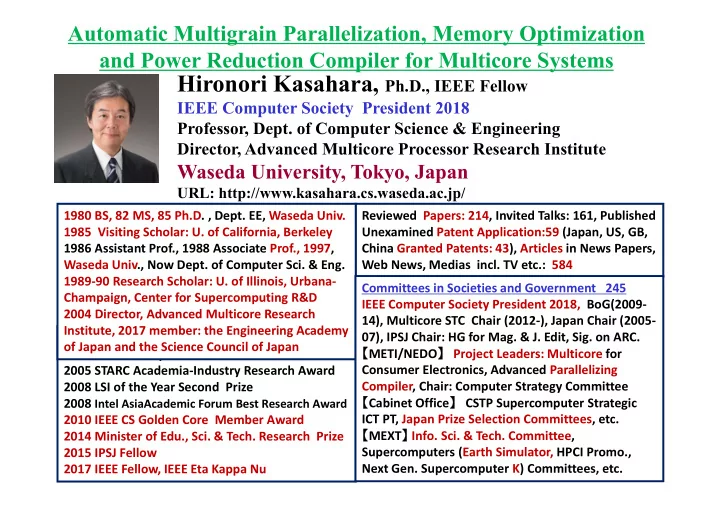

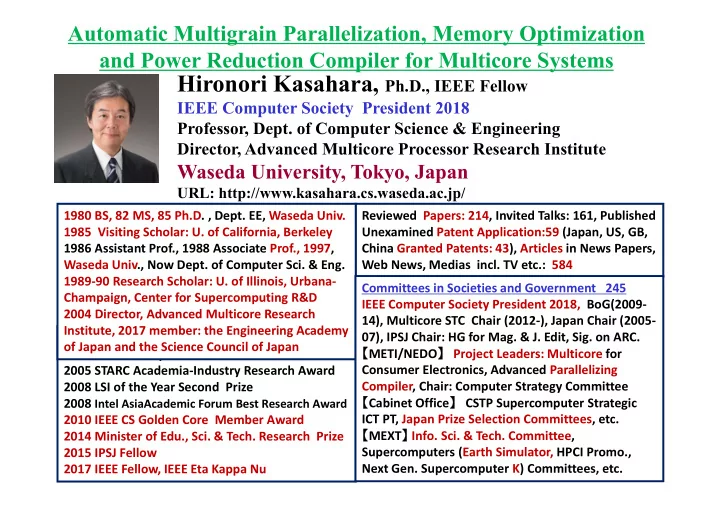

Automatic Multigrain Parallelization, Memory Optimization and Power Reduction Compiler for Multicore Systems

Hironori Kasahara, Ph.D., IEEE Fellow
IEEE Computer Society President 2018
Professor, Dept. of Computer Science & Engineering
Director, Advanced Multicore Processor Research Institute
Waseda University, Tokyo, Japan
URL: http://www.kasahara.cs.waseda.ac.jp/

• 1980 BS, 82 MS, 85 Ph.D., Dept. EE, Waseda Univ.
• 1985 Visiting Scholar: U. of California, Berkeley
• 1986 Assistant Prof., 1988 Associate Prof., 1997 Prof., Waseda Univ., now Dept. of Computer Sci. & Eng.
• 1989-90 Research Scholar: U. of Illinois, Urbana-Champaign, Center for Supercomputing R&D
• 2004 Director, Advanced Multicore Research Institute
• 2017 Member: the Engineering Academy of Japan and the Science Council of Japan

Awards: 1987 IFAC World Congress Young Author Prize; 1997 IPSJ Sakai Special Research Award; 2005 STARC Academia-Industry Research Award; 2008 LSI of the Year Second Prize; 2008 Intel Asia Academic Forum Best Research Award; 2010 IEEE CS Golden Core Member Award; 2014 Minister of Edu., Sci. & Tech. Research Prize; 2015 IPSJ Fellow; 2017 IEEE Fellow, IEEE Eta Kappa Nu

Reviewed Papers: 214; Invited Talks: 161; Published Unexamined Patent Applications: 59 (Japan, US, GB, China); Granted Patents: 43; Articles in Newspapers, Web News, Media incl. TV etc.: 584

Committees in Societies and Government: 245
• IEEE Computer Society President 2018, BoG (2009-14), Multicore STC Chair (2012-), Japan Chair (2005-07); IPSJ Chair: HG for Mag. & J. Edit, Sig. on ARC.
• 【METI/NEDO】 Project Leader: Multicore for Consumer Electronics, Advanced Parallelizing Compiler; Chair: Computer Strategy Committee
• 【Cabinet Office】 CSTP Supercomputer Strategic ICT PT, Japan Prize Selection Committees, etc.
• 【MEXT】 Info. Sci. & Tech. Committee, Supercomputers (Earth Simulator, HPCI Promo., Next Gen. Supercomputer K) Committees, etc.

Multicores for Performance and Low Power

Power consumption is one of the biggest problems for performance scaling, from smartphones to cloud servers and supercomputers (“K” draws more than 10 MW).
• $\text{Power} \propto \text{Frequency} \times \text{Voltage}^2$ and $\text{Voltage} \propto \text{Frequency}$, so $\text{Power} \propto \text{Frequency}^3$
• If Frequency is reduced to 1/4 (e.g. 4 GHz → 1 GHz), Power is reduced to 1/64 and Performance falls to 1/4.
• <Multicores> If 8 cores are integrated on a chip, Power is still 1/8 and Performance becomes 2 times.

[Figure: floorplan of an 8-core chip: Core#0 to Core#7 with I$, D$, ILRAM, DLRAM and URAM; SNC0, SNC1, SHWY, VSWC, CSM, LBSC, DBSC, GCPG and DDRPAD blocks]
IEEE ISSCC08: Paper No. 4.5, M. Ito, … and H. Kasahara, “An 8640 MIPS SoC with Independent Power-off Control of 8 CPUs and 8 RAMs by an Automatic Parallelizing Compiler”
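The arithmetic on this slide can be checked with a small sketch. It assumes the idealized model stated above: per-core power scales as the cube of the frequency ratio, and performance scales linearly with both frequency and core count, which implies perfect parallel speedup. `relative_power_perf` is a hypothetical helper name.

```python
from fractions import Fraction
from typing import Tuple


def relative_power_perf(freq_ratio: Fraction, cores: int) -> Tuple[Fraction, Fraction]:
    """Return (power, performance) relative to one core at full frequency.

    Idealized model (assumption): per-core power ~ f * V^2 with V ~ f, i.e. f^3,
    and performance ~ cores * f (perfect parallel scaling).
    """
    power = cores * freq_ratio ** 3
    perf = cores * freq_ratio
    return power, perf


if __name__ == "__main__":
    quarter = Fraction(1, 4)  # e.g. 4 GHz -> 1 GHz
    print(relative_power_perf(quarter, 1))  # one slow core
    print(relative_power_perf(quarter, 8))  # eight slow cores
```

With a quarter-speed clock, one core runs at 1/64 of the power and 1/4 of the performance. Eight such cores run at 1/8 of the power and twice the performance. Both results match the slide.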

Parallel software is important for scalable performance of multicores (LCPC2015)
• Just adding more cores doesn't give us speedup.
• The development cost and period of parallel software are becoming a bottleneck in the development of embedded systems, e.g. IoT and automobiles.
• Example: earthquake wave propagation simulation GMS, developed by the National Research Institute for Earth Science and Disaster Resilience (NIED), on a Fujitsu M9000 SPARC multicore server
  – The automatic parallelizing compiler available on the market gave no speedup on 64 cores against the execution time on 1 core, and with 128 cores it was slower than 1 core (0.9 times speedup).
  – The advanced OSCAR parallelizing compiler gave 211 times speedup with 128 cores against the execution time on 1 core using the commercial compiler.
  – The OSCAR compiler gave 2.1 times speedup on 1 core against the commercial compiler through global cache optimization.

[Figure: speedup versus number of cores for the OSCAR compiler and the commercial compiler]
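Both reported speedups may use the same baseline, namely the commercial compiler on 1 core. That is an assumption. Under it, the 211 times figure can be split into two parts: the 2.1 times single-core gain from cache optimization, and the speedup of OSCAR-compiled code on 128 cores relative to itself on 1 core. A minimal sketch with illustrative names:

```python
from typing import Tuple


def decompose_speedup(total: float, single_core_gain: float, cores: int) -> Tuple[float, float]:
    """Split an end-to-end speedup into self-relative parallel speedup and efficiency.

    total:            T(commercial, 1 core) / T(OSCAR, cores)
    single_core_gain: T(commercial, 1 core) / T(OSCAR, 1 core)
    Assumes both ratios share the same baseline T(commercial, 1 core).
    """
    parallel = total / single_core_gain  # = T(OSCAR, 1 core) / T(OSCAR, cores)
    efficiency = parallel / cores
    return parallel, efficiency


if __name__ == "__main__":
    parallel, eff = decompose_speedup(211.0, 2.1, 128)
    print(f"self-relative speedup ~ {parallel:.1f}x, efficiency ~ {eff:.0%}")
```

Under that assumption, the OSCAR-compiled code scales by roughly 100 times on 128 cores, about 78% parallel efficiency. The cache optimization accounts for the rest of the 211 times.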

Power Reduction of MPEG2 Decoding to 1/4 on the 8-Core Homogeneous Multicore RP-2 by the OSCAR Parallelizing Compiler

[Figure: power waveforms of MPEG2 decoding with 8 CPU cores, without power control (voltage: 1.4 V) and with power control (frequency control, Resume Standby: power shutdown, and voltage lowering 1.4 V to 1.0 V)]
• Without power control: average power 5.73 W
• With power control: average power 1.52 W
• 73.5% power reduction
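The 73.5% figure follows directly from the two average powers. The helper name below is illustrative.

```python
def reduction(before_w: float, after_w: float) -> float:
    """Fractional power reduction given average power before and after control."""
    return 1.0 - after_w / before_w


if __name__ == "__main__":
    r = reduction(5.73, 1.52)
    print(f"{r:.1%}")  # remaining power 1.52/5.73 is about 0.27, i.e. roughly 1/4
```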

OSCAR Parallelizing Compiler

Goal: improve effective performance, cost-performance and software productivity, and reduce power.
• Multigrain Parallelization (LCPC1991, 2001, 04): coarse-grain parallelism among loops and subroutines (2000 on SMP) and near-fine-grain parallelism among statements (1992), in addition to loop parallelism
• Data Localization: automatic data management for distributed shared memory, cache and local memory (Local Memory 1995, 2016 on RP2; Cache 2001, 03)
• Software Coherent Control (2017)
• Data Transfer Overlapping (2016, partially): data transfer overlapping using Data Transfer Controllers (DMAs)
• Power Reduction (2005 for Multicore, 2011 Multi-processes, 2013 on ARM): reduction of consumed power by compiler-controlled DVFS and power gating with hardware support

[Figure: a macro-task graph of 33 macro-tasks partitioned into Data Localization Groups dlg0 to dlg3]
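The sketch below illustrates the general idea of compiler-controlled DVFS and power gating. For each macro-task with known slack, it picks the lowest frequency level that still meets the task's deadline. It powers a core off only when the core's idle gap is longer than the time needed to resume it. The frequency levels, the resume cost, the slack values and the helper names are all hypothetical. This is not OSCAR's actual algorithm, and the levels are not the RP-2's operating points.

```python
from dataclasses import dataclass

# Hypothetical frequency levels, as fractions of the maximum clock.
FREQ_LEVELS = (1.0, 0.5, 0.25)
# Hypothetical time to resume a powered-off core (same units as task costs).
RESUME_COST = 2.0


@dataclass
class Task:
    name: str
    cost: float   # estimated execution time at full frequency
    slack: float  # extra time before any successor needs this task's result


def choose_frequency(task: Task) -> float:
    """Lowest frequency ratio whose stretched run time still fits in cost + slack."""
    budget = task.cost + task.slack
    feasible = [f for f in FREQ_LEVELS if task.cost / f <= budget]
    return min(feasible)  # 1.0 is always feasible because slack >= 0


def idle_action(gap: float) -> str:
    """Power a core off only if the idle gap exceeds the resume cost."""
    return "shutdown" if gap > RESUME_COST else "stay_on"


if __name__ == "__main__":
    tasks = [Task("mt1", 4.0, 0.0), Task("mt2", 2.0, 2.0), Task("mt3", 1.0, 5.0)]
    for t in tasks:
        print(t.name, choose_frequency(t))  # critical task stays at full speed
    print(idle_action(1.0), idle_action(10.0))
```

A task on the critical path (zero slack) keeps the full clock. Tasks with slack run slower and, under the cubic model, use far less power.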

Generation of Coarse Grain Tasks

Macro-tasks (MTs):
• Block of Pseudo Assignments (BPA): Basic Block (BB)
• Repetition Block (RB): natural loop
• Subroutine Block (SB): subroutine

[Figure: hierarchical macro-task generation. The program (total system) is decomposed into BPAs, RBs and SBs in the 1st layer, and RBs and SBs are decomposed again in the 2nd and 3rd layers. BPAs are handled by near-fine-grain parallelization; RBs by loop-level parallelization, near-fine-grain parallelization of the loop body, or coarse-grain parallelization; SBs by coarse-grain parallelization.]
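The sketch below groups a statement list into the three macro-task kinds. It uses a toy program representation; the classes `Assign`, `Loop` and `Call` are hypothetical. Consecutive assignments form a BPA. Each loop forms an RB whose body is decomposed recursively into the next layer. Each call forms an SB. Real basic blocks would also end at branches and labels, and real SB bodies would be decomposed too; both are omitted here.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class Assign:
    text: str


@dataclass
class Loop:
    header: str
    body: List["Stmt"]


@dataclass
class Call:
    name: str


Stmt = Union[Assign, Loop, Call]


@dataclass
class MacroTask:
    kind: str   # "BPA", "RB" or "SB"
    label: str
    inner: List["MacroTask"] = field(default_factory=list)  # next-layer macro-tasks


def generate(stmts: List[Stmt]) -> List[MacroTask]:
    """Group one layer of statements into macro-tasks, recursing into loop bodies."""
    tasks: List[MacroTask] = []
    block: List[str] = []

    def flush() -> None:
        # Close the current run of assignments as one BPA.
        if block:
            tasks.append(MacroTask("BPA", "; ".join(block)))
            block.clear()

    for s in stmts:
        if isinstance(s, Assign):
            block.append(s.text)
        elif isinstance(s, Loop):
            flush()
            tasks.append(MacroTask("RB", s.header, generate(s.body)))
        else:
            flush()
            tasks.append(MacroTask("SB", s.name))  # subroutine body not decomposed here
    flush()
    return tasks


if __name__ == "__main__":
    prog = [Assign("x = 1"), Assign("y = 2"),
            Loop("DO I=1,N", [Assign("A(I) = x"), Call("SUB1")]),
            Call("SUB2")]
    for mt in generate(prog):
        print(mt.kind, mt.label, [m.kind for m in mt.inner])
```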

Earliest Executable Condition Analysis for Coarse Grain Tasks (Macro-tasks)

[Figure: left, a Macro Flow Graph of macro-tasks 1 to 15 (BPAs and RBs) with data dependency, control flow and conditional branch edges; right, the corresponding Macro Task Graph with data dependency, extended control dependency, conditional branches, AND/OR conditions and original control flow, ending at END]
BPA: Block of Pseudo Assignment Statements; RB: Repetition Block
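An earliest executable condition is commonly described as two conditions joined by AND. The first is control dependence: the branch the macro-task depends on has been decided in its favour. The second covers each data dependence: every predecessor has either completed or been determined never to execute.

The sketch below evaluates that simplified form at run time. The class names, field names and task names are hypothetical, and full OR conditions over several control paths are not modelled.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple


@dataclass
class MacroTaskInfo:
    # (branching macro-task, successor it must branch to), or None if the
    # macro-task is not control dependent on any conditional branch.
    control: Optional[Tuple[str, str]] = None
    data_preds: List[str] = field(default_factory=list)


@dataclass
class RunState:
    completed: Set[str] = field(default_factory=set)
    not_executed: Set[str] = field(default_factory=set)        # ruled out by decided branches
    branch_taken: Dict[str, str] = field(default_factory=dict)  # branch task -> chosen target


def earliest_executable(mt: MacroTaskInfo, st: RunState) -> bool:
    """Simplified EEC: control condition AND, for every data predecessor,
    (predecessor completed OR predecessor determined not to execute)."""
    if mt.control is not None:
        branch, target = mt.control
        if st.branch_taken.get(branch) != target:
            return False
    return all(p in st.completed or p in st.not_executed for p in mt.data_preds)


if __name__ == "__main__":
    # Hypothetical task: runs only if mt_b branches to mt_x; reads data from mt_c and mt_d.
    mt_x = MacroTaskInfo(control=("mt_b", "mt_x"), data_preds=["mt_c", "mt_d"])
    st = RunState(completed={"mt_c"}, branch_taken={"mt_b": "mt_x"})
    print(earliest_executable(mt_x, st))  # mt_d still pending
    st.not_executed.add("mt_d")           # a decided branch rules mt_d out
    print(earliest_executable(mt_x, st))
```

Checking this condition as branches resolve can let a macro-task start before all of its textual predecessors have finished.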

PRIORITY DETERMINATION IN DYNAMIC CP METHOD
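In critical-path (CP) list scheduling, a common priority for a task is its own cost plus the length of the longest path from it to an exit node of the task graph. The sketch below computes that priority on a DAG of estimated costs. Treating this as OSCAR's exact dynamic CP priority is an assumption. A dynamic variant might, for example, recompute it at run time over only the macro-tasks that remain executable once branches are decided.

```python
from functools import lru_cache
from typing import Dict, List


def cp_priorities(cost: Dict[str, float], succ: Dict[str, List[str]]) -> Dict[str, float]:
    """Priority of each task = its cost plus the longest cost path to an exit task.

    Assumes the graph given by `succ` is acyclic.
    """
    @lru_cache(maxsize=None)
    def longest(t: str) -> float:
        return cost[t] + max((longest(s) for s in succ.get(t, [])), default=0.0)

    return {t: longest(t) for t in cost}


if __name__ == "__main__":
    # Hypothetical 4-task graph: a -> {b, c} -> d
    cost = {"a": 2, "b": 3, "c": 1, "d": 4}
    succ = {"a": ["b", "c"], "b": ["d"], "c": ["d"]}
    print(cp_priorities(cost, succ))
```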

Earliest Executable Conditions

Automatic processor assignment in 103.su2cor
• Using 14 processors
• Coarse grain parallelization within DO400
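A minimal list-scheduling sketch shows how macro-tasks could be assigned to a fixed number of processors by priority. It repeatedly takes the highest-priority task whose predecessors are all scheduled and places it on the processor where it can start earliest. The task graph, costs and priorities in the example are hypothetical and are not the 103.su2cor DO400 graph. Data transfer costs are ignored.

```python
from typing import Dict, List, Tuple


def list_schedule(cost: Dict[str, float], preds: Dict[str, List[str]],
                  prio: Dict[str, float], n_procs: int) -> Dict[str, Tuple[int, float, float]]:
    """Return task -> (processor, start, finish). Assumes the graph is acyclic."""
    finish: Dict[str, float] = {}
    placement: Dict[str, Tuple[int, float, float]] = {}
    proc_free = [0.0] * n_procs
    unscheduled = set(cost)
    while unscheduled:
        ready = [t for t in unscheduled if all(p in finish for p in preds.get(t, []))]
        # Highest priority first; ties broken by name for determinism.
        task = min(ready, key=lambda t: (-prio[t], t))
        data_ready = max((finish[p] for p in preds.get(task, [])), default=0.0)
        # Processor on which the task can start earliest (lowest index on ties).
        proc = min(range(n_procs), key=lambda p: (max(proc_free[p], data_ready), p))
        start = max(proc_free[proc], data_ready)
        end = start + cost[task]
        proc_free[proc] = end
        finish[task] = end
        placement[task] = (proc, start, end)
        unscheduled.remove(task)
    return placement


if __name__ == "__main__":
    cost = {"a": 2, "b": 3, "c": 1, "d": 4}
    preds = {"b": ["a"], "c": ["a"], "d": ["b", "c"]}
    prio = {"a": 9, "b": 7, "c": 5, "d": 4}  # e.g. longest path to exit
    for t, (p, s, e) in sorted(list_schedule(cost, preds, prio, 2).items()):
        print(f"{t}: PE{p} [{s}, {e})")
```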

MTG of Su2cor-LOOPS-DO400
• Coarse grain parallelism PARA_ALD = 4.3
[Figure: macro-task graph of DO400; node types: DOALL, Sequential LOOP, SB, BB]
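The slide does not define PARA_ALD. One common measure of coarse-grain parallelism in a task graph is the average degree of parallelism: total work divided by critical-path length. The sketch below computes that measure, on the assumption (not confirmed by the slide) that PARA_ALD is defined similarly.

```python
from functools import lru_cache
from typing import Dict, List


def average_parallelism(cost: Dict[str, float], succ: Dict[str, List[str]]) -> float:
    """Total work divided by critical-path length of an acyclic task graph."""
    @lru_cache(maxsize=None)
    def longest(t: str) -> float:
        return cost[t] + max((longest(s) for s in succ.get(t, [])), default=0.0)

    critical_path = max(longest(t) for t in cost)
    return sum(cost.values()) / critical_path


if __name__ == "__main__":
    # Hypothetical graphs: four independent tasks, then a short chain plus one task.
    print(average_parallelism({"a": 1, "b": 1, "c": 1, "d": 1}, {}))
    print(average_parallelism({"a": 1, "b": 1, "c": 2}, {"a": ["b"]}))
```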

Data-Localization: Loop Aligned Decomposition
• Decompose multiple loops (Doall and Seq) into CARs and LRs considering inter-loop data dependence.
  – Most data in an LR can be passed through LM.
  – LR: Localizable Region, CAR: Commonly Accessed Region

```fortran
      PROGRAM LAD
      INTEGER I
      REAL A(101), B(0:100), C(100)
      B(0) = 0.0
C     RB1 (Doall)
      DO I = 1, 101
        A(I) = 2*I
      ENDDO
C     RB2 (Doseq)
      DO I = 1, 100
        B(I) = B(I-1) + A(I) + A(I+1)
      ENDDO
C     RB3 (Doall)
      DO I = 2, 100
        C(I) = B(I) + B(I-1)
      ENDDO
      END
```

Decomposition of the three loops:

| Loop | LR | CAR | LR | CAR | LR |
|---|---|---|---|---|---|
| RB1 | DO I=1,33 | DO I=34,35 | DO I=36,66 | DO I=67,68 | DO I=69,101 |
| RB2 | DO I=1,33 | DO I=34,34 | DO I=35,66 | DO I=67,67 | DO I=68,100 |
| RB3 | DO I=2,34 | | DO I=35,67 | | DO I=68,100 |

Data Localization
[Figure: left, the MTG after division, with macro-tasks 1 to 33 grouped into Data Localization Groups dlg0 to dlg3; right, a schedule of the MTG for two processors (PE0, PE1)]
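The schedule in the figure appears to keep the macro-tasks of each data localization group together on one processor. That way, data passed between them can stay in that processor's local memory. The sketch below shows only that constraint. It assumes each group's total cost is known and uses a hypothetical greedy rule: heaviest group first, onto the least-loaded processor. The group costs are invented for illustration, and OSCAR's actual scheduler is not reproduced here.

```python
from typing import Dict


def assign_groups(group_cost: Dict[str, float], n_procs: int) -> Dict[str, int]:
    """Map each data localization group to a single processor (greedy, heaviest first)."""
    load = [0.0] * n_procs
    mapping: Dict[str, int] = {}
    for g in sorted(group_cost, key=lambda g: (-group_cost[g], g)):
        p = min(range(n_procs), key=lambda i: (load[i], i))  # least-loaded PE
        mapping[g] = p
        load[p] += group_cost[g]
    return mapping


if __name__ == "__main__":
    # Hypothetical costs for dlg0..dlg3 on two processors (PE0, PE1).
    print(assign_groups({"dlg0": 5, "dlg1": 4, "dlg2": 3, "dlg3": 2}, 2))
```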

Inter-loop data dependence analysis in TLG
• Define the exit-RB in the TLG as the Standard-Loop
• Find the iterations on which an iteration of the Standard-Loop is data dependent
  – e.g. the K-th iteration of RB3 is data dependent on the (K-1)-th and K-th iterations of RB2, and on the (K-1)-th, K-th and (K+1)-th iterations of RB1

[Figure: Example of TLG. Iteration spaces I(RB1), I(RB2) and I(RB3) of RB1 (Doall: DO I=1,101, A(I)=2*I), RB2 (Doseq: DO I=1,100, B(I)=B(I-1)+A(I)+A(I+1)) and RB3 (Doall: DO I=2,100, C(I)=B(I)+B(I-1)), showing the iterations on which iteration K of RB3 depends]
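The dependence chain in the example can be computed mechanically by composing constant offset sets loop by loop. The sketch assumes every inter-loop dependence has the form "iteration K reads iterations K+d of the previous loop". It deliberately ignores RB2's own loop-carried dependence on B(I-1), because only inter-loop dependences are considered here. The function name is hypothetical.

```python
from typing import List, Set


def compose_offsets(chain: List[Set[int]]) -> Set[int]:
    """Offsets (relative to K) of an earlier loop's iterations on which iteration K
    of the Standard-Loop depends, given offset sets from the Standard-Loop backward."""
    result = {0}
    for offsets in chain:
        result = {r + d for r in result for d in offsets}
    return result


if __name__ == "__main__":
    # RB3 -> RB2: C(I) reads B(I-1), B(I)   => offsets {-1, 0}
    # RB2 -> RB1: B(I) reads A(I), A(I+1)   => offsets {0, +1}
    print(sorted(compose_offsets([{-1, 0}])))          # iterations of RB2
    print(sorted(compose_offsets([{-1, 0}, {0, 1}])))  # iterations of RB1
```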

Decomposition of RBs in TLG
• Decompose GCIR into $\mathrm{DGCIR}_p$ ($1 \le p \le n$)
  – n: (multiple) number of PCs; DGCIR: Decomposed GCIR
• Generate a CAR on which $\mathrm{DGCIR}_p$ and $\mathrm{DGCIR}_{p+1}$ are data dependent
• Generate an LR on which only $\mathrm{DGCIR}_p$ is data dependent

[Figure: the GCIR over I(RB1), I(RB2) and I(RB3) decomposed into DGCIR 1, 2 and 3. RB3 is split into RB3_1, RB3_2 and RB3_3; RB1 and RB2 are split into LRs RB_p and CARs RB_<p,p+1>, e.g. RB1_<1,2> = iterations 34 to 35 and RB2_<1,2> = iteration 34]
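The decomposition can be sketched in three steps. First, split the Standard-Loop evenly into n groups. Second, propagate each group's iteration range backward through the dependence offsets. Third, cut each earlier loop into LRs and CARs where neighbouring groups' ranges overlap.

The sketch assumes, as in the example, constant dependence offsets between consecutive loops and overlaps only between neighbouring groups. The function names are hypothetical; this illustrates the idea rather than the OSCAR implementation. With n = 3 it reproduces the ranges of the loop aligned decomposition example.

```python
from typing import List, Sequence, Tuple

Range = Tuple[int, int]  # inclusive iteration range (lo, hi)


def split_even(lo: int, hi: int, n: int) -> List[Range]:
    """Split the Standard-Loop's iterations lo..hi into n nearly equal groups."""
    base, extra = divmod(hi - lo + 1, n)
    groups, start = [], lo
    for p in range(n):
        size = base + (1 if p < extra else 0)
        groups.append((start, start + size - 1))
        start += size
    return groups


def needed(groups: Sequence[Range], offsets: Sequence[int], loop: Range) -> List[Range]:
    """Iterations of the previous loop each group depends on (iteration K reads
    K+d for d in offsets), clipped to that loop's iteration range."""
    dmin, dmax = min(offsets), max(offsets)
    return [(max(lo + dmin, loop[0]), min(hi + dmax, loop[1])) for lo, hi in groups]


def lr_car(ranges: Sequence[Range]) -> List[Tuple[str, int, int]]:
    """Cut per-group ranges into LRs (one group only) and CARs (neighbour overlaps)."""
    regions: List[Tuple[str, int, int]] = []
    last = len(ranges) - 1
    for p, (lo, hi) in enumerate(ranges):
        start = lo if p == 0 else max(lo, ranges[p - 1][1] + 1)
        end = hi if p == last else min(hi, ranges[p + 1][0] - 1)
        if start <= end:
            regions.append(("LR", start, end))
        if p < last and ranges[p + 1][0] <= hi:
            regions.append(("CAR", ranges[p + 1][0], hi))
    return regions


if __name__ == "__main__":
    rb3 = split_even(2, 100, 3)                   # Standard-Loop RB3, n = 3
    rb2 = needed(rb3, offsets=(-1, 0), loop=(1, 100))  # C(I) reads B(I-1), B(I)
    rb1 = needed(rb2, offsets=(0, 1), loop=(1, 101))   # B(I) reads A(I), A(I+1)
    print("RB3", rb3)
    print("RB2", lr_car(rb2))
    print("RB1", lr_car(rb1))
```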
