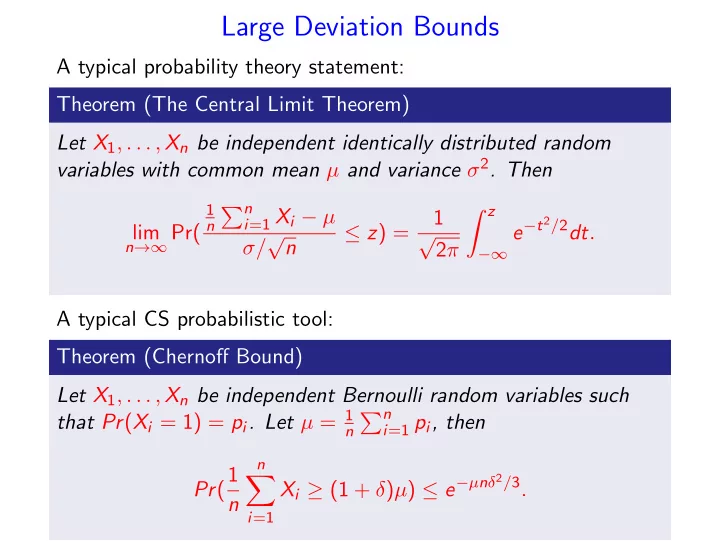

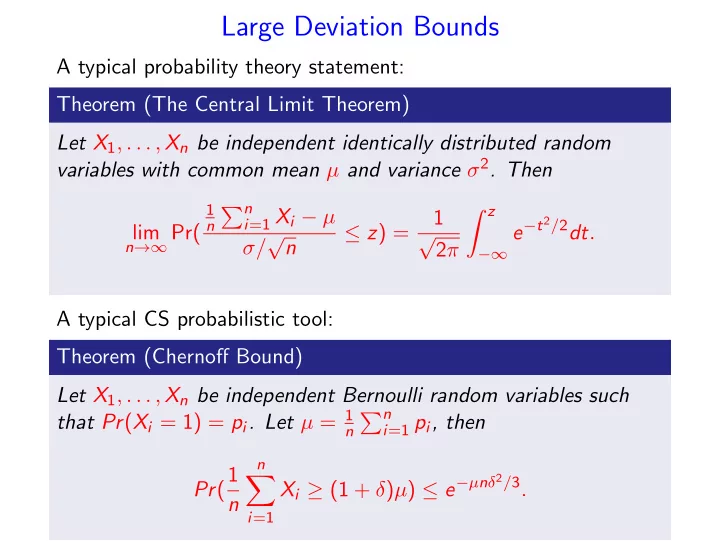

Large Deviation Bounds

A typical probability theory statement:

Theorem (The Central Limit Theorem). Let $X_1, \dots, X_n$ be independent identically distributed random variables with common mean $\mu$ and variance $\sigma^2$. Then

$$\lim_{n \to \infty} \Pr\left( \frac{\frac{1}{n}\sum_{i=1}^{n} X_i - \mu}{\sigma/\sqrt{n}} \le z \right) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{z} e^{-t^2/2}\, dt.$$

A typical CS probabilistic tool:

Theorem (Chernoff Bound). Let $X_1, \dots, X_n$ be independent Bernoulli random variables such that $\Pr(X_i = 1) = p_i$. Let $\mu = \frac{1}{n}\sum_{i=1}^{n} p_i$. Then

$$\Pr\left( \frac{1}{n}\sum_{i=1}^{n} X_i \ge (1+\delta)\mu \right) \le e^{-\mu n \delta^2/3}.$$

We build on basic probability theory. Reminder:

Theorem (Markov Inequality). If a random variable $X$ is non-negative ($X \ge 0$), then for any $a > 0$,

$$\Pr(X \ge a) \le \frac{E[X]}{a}.$$

Theorem (Chebyshev's Inequality). For any random variable $X$ and any $a > 0$,

$$\Pr(|X - E[X]| \ge a) \le \frac{\mathrm{Var}[X]}{a^2}.$$

Both bounds are general but relatively weak.

The Basic Idea of Large Deviation Bounds:

For any random variable $X$, Markov's inequality applied to $e^{tX}$ gives, for any $t > 0$,

$$\Pr(X \ge a) = \Pr(e^{tX} \ge e^{ta}) \le \frac{E[e^{tX}]}{e^{ta}}.$$

Similarly, for any $t < 0$,

$$\Pr(X \le a) = \Pr(e^{tX} \ge e^{ta}) \le \frac{E[e^{tX}]}{e^{ta}}.$$

The General Scheme:

We obtain specific bounds for particular conditions/distributions by

1. computing $E[e^{tX}]$;
2. optimizing over $t$:

   $$\Pr(X \ge a) \le \min_{t > 0} \frac{E[e^{tX}]}{e^{ta}}, \qquad \Pr(X \le a) \le \min_{t < 0} \frac{E[e^{tX}]}{e^{ta}};$$

3. simplifying the resulting expression.
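
The scheme can be tried numerically. The following is a minimal sketch, assuming $X \sim \mathrm{Binomial}(n, p)$ (so that $E[e^{tX}] = (1 - p + p e^t)^n$) and using a plain grid search over $t$ in place of an analytic optimization; the function names, the grid range `t_max`, and the parameter values are illustrative choices, not part of the slides.

```python
import math

def binomial_mgf(n, p, t):
    """Step 1: E[e^{tX}] for X ~ Binomial(n, p), i.e. (1 - p + p e^t)^n."""
    return (1.0 - p + p * math.exp(t)) ** n

def chernoff_upper_tail(n, p, a, t_max=5.0, steps=5000):
    """Step 2: grid-search the minimum over t in (0, t_max] of E[e^{tX}] / e^{ta}."""
    best = 1.0  # the ratio tends to 1 as t -> 0+, and a probability never exceeds 1
    for k in range(1, steps + 1):
        t = t_max * k / steps
        best = min(best, binomial_mgf(n, p, t) * math.exp(-t * a))
    return best

def exact_upper_tail(n, p, a):
    """Pr(X >= a), summed directly from the binomial pmf for comparison."""
    return sum(math.comb(n, k) * p**k * (1 - p) ** (n - k)
               for k in range(math.ceil(a), n + 1))

n, p, a = 100, 0.5, 75  # assumed example values
bound = chernoff_upper_tail(n, p, a)
exact = exact_upper_tail(n, p, a)
print(f"optimized bound = {bound:.3e}, exact tail = {exact:.3e}")
```

Step 3 (simplifying) is what turns the optimized expression into closed forms such as $e^{-\mu\delta^2/3}$; the grid search only illustrates steps 1 and 2.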

Chernoff Bound - Large Deviation Bound

Theorem. Let $X_1, \dots, X_n$ be independent, identically distributed, 0-1 random variables with $\Pr(X_i = 1) = E[X_i] = p$. Let $\bar{X}_n = \frac{1}{n}\sum_{i=1}^{n} X_i$. Then for any $\delta \in [0, 1]$ we have

$$\Pr(\bar{X}_n \ge (1+\delta)p) \le e^{-np\delta^2/3}$$

and

$$\Pr(\bar{X}_n \le (1-\delta)p) \le e^{-np\delta^2/2}.$$

Chernoff Bound - Large Deviation Bound

Theorem. Let $X_1, \dots, X_n$ be independent 0-1 random variables with $\Pr(X_i = 1) = E[X_i] = p_i$. Let $\mu = \sum_{i=1}^{n} p_i$. Then for any $\delta \in [0, 1]$ we have

$$\Pr\left( \sum_{i=1}^{n} X_i \ge (1+\delta)\mu \right) \le e^{-\mu\delta^2/3}$$

and

$$\Pr\left( \sum_{i=1}^{n} X_i \le (1-\delta)\mu \right) \le e^{-\mu\delta^2/2}.$$

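Consider $n$ coin flips. Let $X$ be the number of heads.

Markov's inequality gives

$$\Pr\left( X \ge \frac{3n}{4} \right) \le \frac{n/2}{3n/4} = \frac{2}{3}.$$

Using Chebyshev's bound we have

$$\Pr\left( \left| X - \frac{n}{2} \right| \ge \frac{n}{4} \right) \le \frac{4}{n}.$$

Using the Chernoff bound in this case, we obtain

$$\Pr\left( \left| X - \frac{n}{2} \right| \ge \frac{n}{4} \right) = \Pr\left( X \ge \frac{n}{2}\left(1 + \frac{1}{2}\right) \right) + \Pr\left( X \le \frac{n}{2}\left(1 - \frac{1}{2}\right) \right)$$

$$\le e^{-\frac{1}{3}\cdot\frac{n}{2}\cdot\frac{1}{4}} + e^{-\frac{1}{2}\cdot\frac{n}{2}\cdot\frac{1}{4}} \le 2e^{-n/24}.$$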
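
To see how the three bounds compare in practice, here is a small sketch that evaluates them next to the exact probability of the event $|X - n/2| \ge n/4$ for fair coins. The function name and the chosen values of $n$ are illustrative assumptions; the Chebyshev value uses $\mathrm{Var}[X] = n/4$.

```python
import math

def coin_flip_bounds(n):
    """Return (markov, chebyshev, chernoff, exact) for n fair coin flips."""
    markov = (n / 2) / (3 * n / 4)        # bound on Pr(X >= 3n/4), always 2/3
    chebyshev = (n / 4) / (n / 4) ** 2    # Var[X] / (n/4)^2 = 4/n
    chernoff = 2 * math.exp(-n / 24)      # two-sided Chernoff bound
    # Exact Pr(|X - n/2| >= n/4), counting outcomes with exact integers.
    favourable = sum(math.comb(n, k) for k in range(n + 1)
                     if abs(k - n / 2) >= n / 4)
    exact = favourable / 2**n
    return markov, chebyshev, chernoff, exact

for n in (40, 200, 1000):
    markov, chebyshev, chernoff, exact = coin_flip_bounds(n)
    print(f"n={n:5d}  Markov={markov:.3f}  Chebyshev={chebyshev:.3e}  "
          f"Chernoff={chernoff:.3e}  exact={exact:.3e}")
```

For small $n$ the Chebyshev value $4/n$ can be smaller than $2e^{-n/24}$; the exponential decay of the Chernoff bound takes over as $n$ grows.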

Moment Generating Function

Definition. The moment generating function of a random variable $X$ is defined for any real value $t$ as

$$M_X(t) = E[e^{tX}].$$

Theorem. Let $X$ be a random variable with moment generating function $M_X(t)$. Assuming that exchanging the expectation and differentiation operators is legitimate, then for all $n \ge 1$,

$$E[X^n] = M_X^{(n)}(0),$$

where $M_X^{(n)}(0)$ is the $n$-th derivative of $M_X(t)$ evaluated at $t = 0$.

Proof. Differentiating $n$ times under the expectation gives $M_X^{(n)}(t) = E[X^n e^{tX}]$. Evaluated at $t = 0$ this yields $M_X^{(n)}(0) = E[X^n]$.
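
As a quick worked instance of this theorem, take a single Bernoulli variable with $\Pr(X = 1) = p$ (an illustrative choice; any distribution with a finite moment generating function near $0$ would do):

$$M_X(t) = (1 - p) + p e^{t}, \qquad M_X'(t) = p e^{t}, \qquad M_X''(t) = p e^{t},$$

$$E[X] = M_X'(0) = p, \qquad E[X^2] = M_X''(0) = p, \qquad \mathrm{Var}[X] = E[X^2] - E[X]^2 = p(1 - p).$$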

Theorem. Let $X$ and $Y$ be two random variables. If $M_X(t) = M_Y(t)$ for all $t \in (-\delta, \delta)$ for some $\delta > 0$, then $X$ and $Y$ have the same distribution.

Theorem. If $X$ and $Y$ are independent random variables, then

$$M_{X+Y}(t) = M_X(t)\, M_Y(t).$$

Proof. By independence,

$$M_{X+Y}(t) = E[e^{t(X+Y)}] = E[e^{tX}]\, E[e^{tY}] = M_X(t)\, M_Y(t).$$
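
The product rule can be checked numerically on small discrete distributions. The sketch below is only an illustration: the dictionaries `pmf_x` and `pmf_y` and the helper names are assumptions, and the two variables are made independent by construction (the joint mass is the product of the marginals).

```python
import math
from itertools import product

def mgf(pmf, t):
    """E[e^{tX}] for a finite distribution given as {value: probability}."""
    return sum(prob * math.exp(t * value) for value, prob in pmf.items())

def sum_pmf(pmf_x, pmf_y):
    """Distribution of X + Y when X and Y are independent."""
    out = {}
    for (x, px), (y, py) in product(pmf_x.items(), pmf_y.items()):
        out[x + y] = out.get(x + y, 0.0) + px * py
    return out

pmf_x = {0: 0.7, 1: 0.3}           # Bernoulli(0.3), assumed example
pmf_y = {0: 0.2, 1: 0.5, 2: 0.3}   # another small distribution, assumed example

for t in (-1.0, 0.5, 2.0):
    lhs = mgf(sum_pmf(pmf_x, pmf_y), t)
    rhs = mgf(pmf_x, t) * mgf(pmf_y, t)
    print(f"t={t:+.1f}  M_(X+Y)(t)={lhs:.6f}  M_X(t)*M_Y(t)={rhs:.6f}")
```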

Chernoff Bound for Sum of Bernoulli Trials

Theorem. Let $X_1, \dots, X_n$ be independent Bernoulli random variables such that $\Pr(X_i = 1) = p_i$. Let $X = \sum_{i=1}^{n} X_i$ and $\mu = \sum_{i=1}^{n} p_i$.

• For any $\delta > 0$,

$$\Pr(X \ge (1+\delta)\mu) \le \left( \frac{e^{\delta}}{(1+\delta)^{1+\delta}} \right)^{\mu}. \tag{1}$$

• For $0 < \delta \le 1$,

$$\Pr(X \ge (1+\delta)\mu) \le e^{-\mu\delta^2/3}. \tag{2}$$

• For $R \ge 6\mu$,

$$\Pr(X \ge R) \le 2^{-R}. \tag{3}$$
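
The following sketch compares bounds (1) and (2) with the exact upper tail of a sum of non-identical Bernoulli trials, computed by a simple dynamic program over the number of successes. The success probabilities `ps`, the value of `delta`, and all function names are assumed for illustration only.

```python
import math

def bernoulli_sum_pmf(ps):
    """pmf[k] = Pr(X = k) for X the sum of independent Bernoulli(p_i) trials."""
    pmf = [1.0]
    for p in ps:
        nxt = [0.0] * (len(pmf) + 1)
        for k, mass in enumerate(pmf):
            nxt[k] += mass * (1 - p)   # trial fails
            nxt[k + 1] += mass * p     # trial succeeds
        pmf = nxt
    return pmf

def bound_1(mu, delta):
    """Right-hand side of (1)."""
    return (math.exp(delta) / (1 + delta) ** (1 + delta)) ** mu

def bound_2(mu, delta):
    """Right-hand side of (2); stated for 0 < delta <= 1."""
    return math.exp(-mu * delta**2 / 3)

ps = [0.1, 0.3, 0.5, 0.7, 0.9] * 20   # assumed p_i, so n = 100 and mu = 50
mu = sum(ps)
delta = 0.4
pmf = bernoulli_sum_pmf(ps)
start = math.ceil((1 + delta) * mu - 1e-9)  # small slack against float round-off
exact = sum(pmf[start:])
print(f"exact={exact:.3e}  bound (1)={bound_1(mu, delta):.3e}  "
      f"bound (2)={bound_2(mu, delta):.3e}")
```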

Chernoff Bound for Sum of Bernoulli Trials

Let $X_1, \dots, X_n$ be a sequence of independent Bernoulli trials with $\Pr(X_i = 1) = p_i$. Let $X = \sum_{i=1}^{n} X_i$, and let

$$\mu = E[X] = E\left[ \sum_{i=1}^{n} X_i \right] = \sum_{i=1}^{n} E[X_i] = \sum_{i=1}^{n} p_i.$$

For each $X_i$, using $1 + x \le e^{x}$,

$$M_{X_i}(t) = E[e^{tX_i}] = p_i e^{t} + (1 - p_i) = 1 + p_i(e^{t} - 1) \le e^{p_i(e^{t} - 1)}.$$

Recall that $M_{X_i}(t) = E[e^{tX_i}] \le e^{p_i(e^{t} - 1)}$.

Taking the product of the $n$ generating functions we get, for $X = \sum_{i=1}^{n} X_i$,

$$M_X(t) = \prod_{i=1}^{n} M_{X_i}(t) \le \prod_{i=1}^{n} e^{p_i(e^{t} - 1)} = e^{\sum_{i=1}^{n} p_i(e^{t} - 1)} = e^{(e^{t} - 1)\mu}.$$
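
A short numerical check of this step: the exact moment generating function of the sum, $\prod_i (1 + p_i(e^t - 1))$, should never exceed $e^{(e^t - 1)\mu}$, for positive and negative $t$ alike. The probabilities in `ps` and the function names are assumed for illustration.

```python
import math

def exact_mgf(ps, t):
    """Exact M_X(t) for a sum of independent Bernoulli(p_i) trials."""
    return math.prod(1 + p * (math.exp(t) - 1) for p in ps)

def mgf_upper_bound(ps, t):
    """The bound e^{(e^t - 1) mu}, with mu = sum of the p_i."""
    return math.exp((math.exp(t) - 1) * sum(ps))

ps = [0.2, 0.5, 0.9]  # assumed example probabilities
for t in (-2.0, -0.5, 0.5, 2.0):
    print(f"t={t:+.1f}  exact={exact_mgf(ps, t):.4f}  "
          f"bound={mgf_upper_bound(ps, t):.4f}")
```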

We have $M_X(t) = E[e^{tX}] \le e^{(e^{t} - 1)\mu}$.

Applying Markov's inequality we have, for any $t > 0$,

$$\Pr(X \ge (1+\delta)\mu) = \Pr\left(e^{tX} \ge e^{t(1+\delta)\mu}\right) \le \frac{E[e^{tX}]}{e^{t(1+\delta)\mu}} \le \frac{e^{(e^{t} - 1)\mu}}{e^{t(1+\delta)\mu}}.$$

For any $\delta > 0$, we can set $t = \ln(1+\delta) > 0$ to get

$$\Pr(X \ge (1+\delta)\mu) \le \left( \frac{e^{\delta}}{(1+\delta)^{(1+\delta)}} \right)^{\mu}.$$

This proves (1).

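We show that for $0 < \delta \le 1$,

$$\frac{e^{\delta}}{(1+\delta)^{(1+\delta)}} \le e^{-\delta^2/3},$$

or, equivalently (taking logarithms), that

$$f(\delta) = \delta - (1+\delta)\ln(1+\delta) + \frac{\delta^2}{3} \le 0$$

in that interval. Computing the derivatives of $f(\delta)$ we get

$$f'(\delta) = 1 - \frac{1+\delta}{1+\delta} - \ln(1+\delta) + \frac{2}{3}\delta = -\ln(1+\delta) + \frac{2}{3}\delta,$$

$$f''(\delta) = -\frac{1}{1+\delta} + \frac{2}{3}.$$

$f''(\delta) < 0$ for $0 \le \delta < 1/2$, and $f''(\delta) > 0$ for $\delta > 1/2$, so $f'(\delta)$ first decreases and then increases over the interval $[0, 1]$. Since $f'(0) = 0$ and $f'(1) < 0$, $f'(\delta) \le 0$ in the interval $[0, 1]$. Since $f(0) = 0$, we have that $f(\delta) \le 0$ in that interval. Raising both sides of the first inequality to the power $\mu$ and combining with (1) proves (2).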
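
The calculus argument can be sanity-checked on a grid. This sketch simply evaluates $f$ and $f'$ as defined in the proof; the grid resolution is an arbitrary choice and the check is an illustration, not a substitute for the proof.

```python
import math

def f(d):
    """f(delta) = delta - (1 + delta) ln(1 + delta) + delta^2 / 3."""
    return d - (1 + d) * math.log(1 + d) + d * d / 3

def f_prime(d):
    """f'(delta) = -ln(1 + delta) + (2/3) delta."""
    return -math.log(1 + d) + 2 * d / 3

grid = [k / 1000 for k in range(1001)]  # delta = 0, 0.001, ..., 1
max_f = max(f(d) for d in grid)
max_f_prime = max(f_prime(d) for d in grid)
print(f"max f on [0,1]  = {max_f:.3e}")
print(f"max f' on [0,1] = {max_f_prime:.3e}")
print(f"f'(1) = {f_prime(1.0):.4f}")
```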

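For $R \ge 6\mu$, write $R = (1+\delta)\mu$, so that $\delta \ge 5$. Then

$$\Pr(X \ge (1+\delta)\mu) \le \left( \frac{e^{\delta}}{(1+\delta)^{(1+\delta)}} \right)^{\mu} \le \left( \frac{e}{1+\delta} \right)^{(1+\delta)\mu} \le \left( \frac{e}{6} \right)^{R} \le 2^{-R},$$

where the last step uses $e/6 < 1/2$. This proves (3).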

Theorem. Let $X_1, \dots, X_n$ be independent Bernoulli random variables such that $\Pr(X_i = 1) = p_i$. Let $X = \sum_{i=1}^{n} X_i$ and $\mu = E[X]$. For $0 < \delta < 1$:

• 

$$\Pr(X \le (1-\delta)\mu) \le \left( \frac{e^{-\delta}}{(1-\delta)^{(1-\delta)}} \right)^{\mu}. \tag{4}$$

• 

$$\Pr(X \le (1-\delta)\mu) \le e^{-\mu\delta^2/2}. \tag{5}$$

Using Markov's inequality, for any $t < 0$,

$$\Pr(X \le (1-\delta)\mu) = \Pr\left(e^{tX} \ge e^{(1-\delta)t\mu}\right) \le \frac{E[e^{tX}]}{e^{t(1-\delta)\mu}} \le \frac{e^{(e^{t} - 1)\mu}}{e^{t(1-\delta)\mu}}.$$

For $0 < \delta < 1$, we set $t = \ln(1-\delta) < 0$ to get

$$\Pr(X \le (1-\delta)\mu) \le \left( \frac{e^{-\delta}}{(1-\delta)^{(1-\delta)}} \right)^{\mu}.$$

This proves (4).

We need to show:

$$f(\delta) = -\delta - (1-\delta)\ln(1-\delta) + \frac{1}{2}\delta^2 \le 0.$$

Differentiating $f(\delta)$ we get

$$f'(\delta) = \ln(1-\delta) + \delta,$$

$$f''(\delta) = -\frac{1}{1-\delta} + 1.$$

Since $f''(\delta) < 0$ for $\delta \in (0, 1)$, $f'(\delta)$ is decreasing in that interval. Since $f'(0) = 0$, $f'(\delta) \le 0$ for $\delta \in (0, 1)$. Therefore $f(\delta)$ is nonincreasing in that interval. Since $f(0) = 0$ and $f(\delta)$ is nonincreasing for $\delta \in [0, 1)$, $f(\delta) \le 0$ in that interval, and (5) follows.
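
As an illustration of the lower-tail bounds, the sketch below evaluates (4) and (5) against the exact lower tail of a binomial distribution. The identical-$p$ setting, the parameter values, and the function names are assumptions made to keep the example short.

```python
import math

def binomial_lower_tail(n, p, k_max):
    """Pr(X <= k_max) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, k) * p**k * (1 - p) ** (n - k)
               for k in range(k_max + 1))

def bound_4(mu, delta):
    """Right-hand side of (4)."""
    return (math.exp(-delta) / (1 - delta) ** (1 - delta)) ** mu

def bound_5(mu, delta):
    """Right-hand side of (5)."""
    return math.exp(-mu * delta**2 / 2)

n, p, delta = 100, 0.5, 0.3   # assumed example values
mu = n * p
k_max = math.floor((1 - delta) * mu + 1e-9)  # small slack against float round-off
exact = binomial_lower_tail(n, p, k_max)
print(f"exact={exact:.3e}  bound (4)={bound_4(mu, delta):.3e}  "
      f"bound (5)={bound_5(mu, delta):.3e}")
```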

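Example: Coin flips

Let $X$ be the number of heads in a sequence of $n$ independent fair coin flips.

$$\Pr\left( \left| X - \frac{n}{2} \right| \ge \frac{1}{2}\sqrt{6 n \ln n} \right) = \Pr\left( X \ge \frac{n}{2}\left(1 + \sqrt{\frac{6 \ln n}{n}}\right) \right) + \Pr\left( X \le \frac{n}{2}\left(1 - \sqrt{\frac{6 \ln n}{n}}\right) \right)$$

$$\le e^{-\frac{1}{3}\cdot\frac{n}{2}\cdot\frac{6 \ln n}{n}} + e^{-\frac{1}{2}\cdot\frac{n}{2}\cdot\frac{6 \ln n}{n}} \le \frac{2}{n}.$$

Note that the standard deviation is $\sqrt{n/4}$.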
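
A small sketch that compares the $2/n$ bound from this example with the exact probability, computed with exact integer binomial coefficients. The chosen values of $n$ are assumptions; they are large enough that $\sqrt{6 \ln n / n} \le 1$, as the Chernoff bound requires.

```python
import math

def exact_two_sided(n, deviation):
    """Exact Pr(|X - n/2| >= deviation) for n fair coin flips."""
    favourable = sum(math.comb(n, k) for k in range(n + 1)
                     if abs(k - n / 2) >= deviation)
    return favourable / 2**n

for n in (50, 200, 1000):
    deviation = 0.5 * math.sqrt(6 * n * math.log(n))
    exact = exact_two_sided(n, deviation)
    print(f"n={n:5d}  deviation={deviation:7.2f}  "
          f"std dev={math.sqrt(n / 4):6.2f}  exact={exact:.3e}  2/n={2 / n:.3e}")
```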




Chernoff's vs. Chebyshev's Inequality

Assume for all $i$ we have $p_i = p$ and $1 - p_i = q$. Then

$$\mu = E[X] = np, \qquad \mathrm{Var}[X] = npq.$$

If we use Chebyshev's inequality we get

$$\Pr(|X - \mu| > \delta\mu) \le \frac{npq}{\delta^2\mu^2} = \frac{npq}{\delta^2 n^2 p^2} = \frac{q}{\delta^2\mu}.$$

The Chernoff bound gives

$$\Pr(|X - \mu| > \delta\mu) \le 2e^{-\mu\delta^2/3}.$$
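
To make the comparison concrete, this sketch evaluates both right-hand sides for a few values of $n$. The values $p = 0.1$ and $\delta = 0.5$ are assumptions chosen for illustration, with $\delta \in (0, 1]$ so that the Chernoff bound applies.

```python
import math

def chebyshev_bound(n, p, delta):
    """q / (delta^2 mu), with q = 1 - p and mu = n p."""
    return (1 - p) / (delta**2 * n * p)

def chernoff_bound(n, p, delta):
    """2 exp(-mu delta^2 / 3), with mu = n p; assumes 0 < delta <= 1."""
    return 2 * math.exp(-n * p * delta**2 / 3)

p, delta = 0.1, 0.5  # assumed example values
for n in (100, 1000, 10000):
    print(f"n={n:6d}  mu={n * p:7.1f}  "
          f"Chebyshev={chebyshev_bound(n, p, delta):.3e}  "
          f"Chernoff={chernoff_bound(n, p, delta):.3e}")
```

The Chebyshev bound decays like $1/\mu$ while the Chernoff bound decays exponentially in $\mu$, so for small $\mu$ Chebyshev can be the smaller of the two, and for large $\mu$ Chernoff is far smaller.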

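Set Balancing

Given an $n \times n$ matrix $A$ with entries in $\{0, 1\}$, let

$$\begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nn} \end{pmatrix} \begin{pmatrix} b_1 \\ b_2 \\ \vdots \\ b_n \end{pmatrix} = \begin{pmatrix} c_1 \\ c_2 \\ \vdots \\ c_n \end{pmatrix}.$$

Find a vector $\bar{b}$ with entries in $\{-1, 1\}$ that minimizes

$$\|A\bar{b}\|_{\infty} = \max_{i = 1, \dots, n} |c_i|.$$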
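
The objective can be evaluated directly. The sketch below computes $\|A\bar{b}\|_\infty$ for a given sign vector and tries one uniformly random choice of $\bar{b}$; the random choice is an assumption made for illustration (this section only states the problem), and the matrix, seed, and function names are hypothetical.

```python
import random

def inf_norm_of_product(A, b):
    """Return max_i |c_i| where c = A b."""
    return max(abs(sum(a_ij * b_j for a_ij, b_j in zip(row, b))) for row in A)

def random_sign_vector(n, rng):
    """Pick each entry of b independently and uniformly from {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(n)]

rng = random.Random(0)   # fixed seed so the run is repeatable
n = 8
A = [[rng.randint(0, 1) for _ in range(n)] for _ in range(n)]  # random 0/1 matrix
b = random_sign_vector(n, rng)
print("b =", b)
print("||A b||_inf =", inf_norm_of_product(A, b))
```

Each $c_i$ is a sum of independent $\pm 1$ terms under this random choice, which is the kind of sum the bounds in this section are designed to control.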
