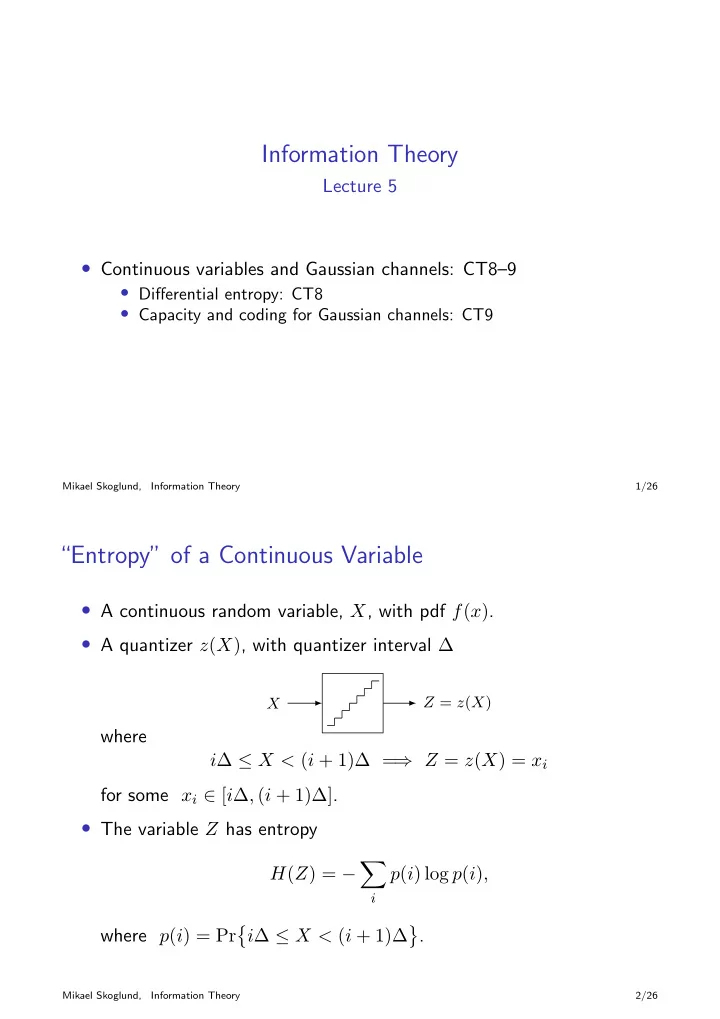

Information Theory Lecture 5

• Continuous variables and Gaussian channels: CT8–9
• Differential entropy: CT8
• Capacity and coding for Gaussian channels: CT9

Mikael Skoglund, Information Theory 1/26

“Entropy” of a Continuous Variable

• A continuous random variable $X$ with pdf $f(x)$.
• A quantizer $z(X)$ with quantization interval $\Delta$, mapping $X$ to $Z = z(X)$, where
$$i\Delta \le X < (i+1)\Delta \implies Z = z(X) = x_i \quad \text{for some } x_i \in [i\Delta, (i+1)\Delta].$$
• The variable $Z$ has entropy
$$H(Z) = -\sum_i p(i)\log p(i), \qquad \text{where } p(i) = \Pr\big(i\Delta \le X < (i+1)\Delta\big).$$

• Notice that
$$p(i) = \int_{i\Delta}^{(i+1)\Delta} f(x)\,dx = f(x_i)\Delta$$
for some $x_i \in [i\Delta, (i+1)\Delta]$. Hence, for small $\Delta$, using $\sum_i f(x_i)\Delta = \sum_i p(i) = 1$,
$$H(Z) = -\sum_i f(x_i)\Delta \log\big(f(x_i)\Delta\big) = -\sum_i f(x_i)\Delta \log f(x_i) - \log\Delta \approx -\int_{-\infty}^{\infty} f(x)\log f(x)\,dx - \log\Delta$$
(if $f(x)\log f(x)$ is Riemann integrable).

• Define the differential entropy $h(X)$, or $h(f)$, of $X$ as
$$h(X) \triangleq -\int f(x)\log f(x)\,dx$$
(if the integral exists).
• Then, for small $\Delta$,
$$H(Z) + \log\Delta \approx h(X).$$
• Note that, in general, $H(Z) \to \infty$ as $\Delta \to 0$, even if $h(X)$ exists and is finite.
• $h(X)$ is not “entropy,” and $H(Z) \to h(X)$ does not hold!
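As a numerical sketch of $H(Z) + \log\Delta \approx h(X)$, the Python snippet below quantizes a Gaussian $X \sim \mathcal{N}(0, \sigma^2)$ with step $\Delta$ and computes $H(Z)$ exactly from the cell probabilities $p(i)$. It assumes base-2 logarithms (bits) and $\sigma = 1$, and ignores cells beyond $\pm 10\sigma$. The function names are illustrative only.

```python
import math

def gaussian_cdf(x, sigma=1.0):
    """CDF of N(0, sigma^2) via the error function."""
    return 0.5 * (1.0 + math.erf(x / (sigma * math.sqrt(2.0))))

def quantized_entropy(delta, sigma=1.0, span=10.0):
    """H(Z) in bits for the uniform quantizer with step delta applied to
    X ~ N(0, sigma^2); cells beyond +/- span*sigma are ignored."""
    n_cells = int(math.ceil(span * sigma / delta))
    h = 0.0
    for i in range(-n_cells, n_cells):
        # p(i) = Pr(i*delta <= X < (i+1)*delta)
        p = gaussian_cdf((i + 1) * delta, sigma) - gaussian_cdf(i * delta, sigma)
        if p > 0.0:
            h -= p * math.log2(p)
    return h

def gaussian_diff_entropy(sigma=1.0):
    """h(X) = 1/2 log2(2 pi e sigma^2) in bits."""
    return 0.5 * math.log2(2.0 * math.pi * math.e * sigma ** 2)

if __name__ == "__main__":
    for delta in (1.0, 0.1, 0.01):
        hz = quantized_entropy(delta)
        print(f"delta={delta}: H(Z)={hz:.4f}, H(Z)+log2(delta)={hz + math.log2(delta):.4f}")
    print(f"h(X) = {gaussian_diff_entropy():.4f}")
```

As $\Delta$ shrinks, $H(Z)$ grows roughly like $-\log_2\Delta$, while $H(Z) + \log_2\Delta$ settles near $h(X) \approx 2.05$ bits for $\sigma = 1$.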

• Maximum differential entropy: for any random variable $X$ with pdf $f(x)$ such that
$$E[X^2] = \int x^2 f(x)\,dx = P,$$
it holds that
$$h(X) \le \frac{1}{2}\log 2\pi e P,$$
with equality iff $f(x) = \mathcal{N}(0, P)$.

Typical Sets for Continuous Variables

• A discrete-time, continuous-amplitude i.i.d. process $\{X_m\}$ with marginal pdf $f(x)$ of support $\mathcal{X}$.
• It holds that
$$\lim_{n\to\infty} -\frac{1}{n}\log f(X_1^n) = -E[\log f(X_1)] = h(f) \quad \text{a.s.}$$
(by the strong law of large numbers, since $f(X_1^n) = \prod_m f(X_m)$).
• Define the typical set $A_\varepsilon^{(n)}$, with respect to $f(x)$, as
$$A_\varepsilon^{(n)} = \left\{ x_1^n \in \mathcal{X}^n : \left| -\frac{1}{n}\log f(x_1^n) - h(f) \right| \le \varepsilon \right\}.$$
• For $A \subset \mathbb{R}^n$, define
$$\mathrm{Vol}(A) \triangleq \int_A dx_1^n.$$
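To make the maximum-entropy bound concrete, the sketch below compares closed-form differential entropies (in bits) of three zero-mean densities with the same second moment $E[X^2] = P$: uniform, Laplace, and Gaussian. The choice of comparison densities is ours, not the slide's. For every $P$ the Gaussian comes out on top, as the bound requires.

```python
import math

# Differential entropies in bits of zero-mean densities with variance P.
def h_gaussian(P):
    """N(0, P): h = 1/2 log2(2 pi e P)."""
    return 0.5 * math.log2(2 * math.pi * math.e * P)

def h_uniform(P):
    """Uniform on [-a, a]: variance a^2/3 = P, so a = sqrt(3P) and h = log2(2a)."""
    return math.log2(2 * math.sqrt(3 * P))

def h_laplace(P):
    """Laplace with scale b: variance 2b^2 = P, so b = sqrt(P/2) and h = log2(2 e b)."""
    return math.log2(2 * math.e * math.sqrt(P / 2))

if __name__ == "__main__":
    for P in (0.5, 1.0, 4.0):
        print(f"P={P}: uniform {h_uniform(P):.4f}, "
              f"Laplace {h_laplace(P):.4f}, Gaussian {h_gaussian(P):.4f}")
```

The gap to the Gaussian does not depend on $P$. For the uniform density it is $\tfrac{1}{2}\log_2(2\pi e/12) \approx 0.25$ bits.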

• For $n$ sufficiently large,
$$\Pr\big(X_1^n \in A_\varepsilon^{(n)}\big) = \int_{A_\varepsilon^{(n)}} f(x_1^n)\,dx_1^n > 1-\varepsilon$$
and
$$\mathrm{Vol}\big(A_\varepsilon^{(n)}\big) \ge (1-\varepsilon)\,2^{n(h(f)-\varepsilon)}.$$
• For all $n$,
$$\mathrm{Vol}\big(A_\varepsilon^{(n)}\big) \le 2^{n(h(f)+\varepsilon)}.$$
• Since $\mathrm{Vol}\big(A_\varepsilon^{(n)}\big) \approx 2^{nh(f)} = \big(2^{h(f)}\big)^n$, $h(f)$ is the logarithm of the side length of a hypercube with the same volume as $A_\varepsilon^{(n)}$.
• Low $h(f)$ $\implies$ $X_1^n$ typically lives in a small subset of $\mathbb{R}^n$.
• Jointly typical sequences: a straightforward extension.

Relative Entropy and Mutual Information

• Define the relative entropy between the pdfs $f$ and $g$ as
$$D(f\|g) = \int f(x)\log\frac{f(x)}{g(x)}\,dx$$
and the mutual information between $(X, Y) \sim f(x,y)$ as
$$I(X;Y) = D\big(f(x,y)\,\|\,f(x)f(y)\big) = \iint f(x,y)\log\frac{f(x,y)}{f(x)f(y)}\,dx\,dy.$$
• While $h(X)$, for a continuous real-valued $X$, does not have an interpretation as “entropy,” both $D(f\|g)$ and $I(X;Y)$ have the same interpretations as in the discrete case.
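The AEP and the typical-set definition can be checked by simulation. This Python sketch uses only the stdlib and base-2 logarithms. The density $f = \mathcal{N}(0, \sigma^2)$ is chosen for illustration, and the helper names are hypothetical. It computes $-\frac{1}{n}\log f(X_1^n)$, tests membership in $A_\varepsilon^{(n)}$, and estimates $\Pr(X_1^n \in A_\varepsilon^{(n)})$ by Monte Carlo. It also prints $2^{h(f)}$, the side length of the equal-volume hypercube.

```python
import math
import random

def gaussian_h(var):
    """h(f) in bits for f = N(0, var)."""
    return 0.5 * math.log2(2 * math.pi * math.e * var)

def empirical_rate(xs, var):
    """-(1/n) log2 f(x_1^n) for the i.i.d. N(0, var) density f."""
    n = len(xs)
    return 0.5 * math.log2(2 * math.pi * var) + sum(x * x for x in xs) / (2 * var * n * math.log(2))

def in_typical_set(xs, var, eps):
    """Membership test for A_eps^(n) with respect to N(0, var)."""
    return abs(empirical_rate(xs, var) - gaussian_h(var)) <= eps

def typical_fraction(n, var, eps, trials, seed=0):
    """Monte Carlo estimate of Pr(X_1^n in A_eps^(n)) for X_m i.i.d. N(0, var)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        xs = [rng.gauss(0.0, math.sqrt(var)) for _ in range(n)]
        hits += in_typical_set(xs, var, eps)
    return hits / trials

if __name__ == "__main__":
    var, eps = 2.0, 0.1
    for n in (10, 100, 2000):
        print(f"n={n}: Pr(typical) ~ {typical_fraction(n, var, eps, trials=200):.3f}")
    print("side length 2^h(f):", 2 ** gaussian_h(var))
```

For small $n$ the estimated probability is well below 1. It approaches 1 as $n$ grows, in line with $\Pr(X_1^n \in A_\varepsilon^{(n)}) > 1-\varepsilon$ for sufficiently large $n$.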

• In fact, both relative entropy and mutual information exist, and their operational interpretations stay intact, under very general conditions.
• Let $X \in \mathcal{X}$ and $Y \in \mathcal{Y}$ be random variables (or “measurable functions”) defined on a common abstract probability space $(\Omega, \mathcal{B}, P)$. Let $q(x)$ and $r(y)$ be “quantizers” that map $\mathcal{X}$ and $\mathcal{Y}$, respectively, into real-valued discrete versions $q(X)$ and $r(Y)$. Then mutual information is defined as
$$I(X;Y) \triangleq \sup I\big(q(X); r(Y)\big),$$
where the supremum is over all quantizers $q$ and $r$. (The two previous definitions of $I(X;Y)$ are then special cases of this general definition.)

The Gaussian Channel

• A continuous-alphabet memoryless channel $(\mathcal{X}, f(y|x), \mathcal{Y})$ maps a continuous real-valued channel input $X \in \mathcal{X}$ to a continuous real-valued channel output $Y \in \mathcal{Y}$, in a stochastic and memoryless manner described by the conditional pdf $f(y|x)$.
• A memoryless Gaussian channel (with noise variance $\sigma^2$) is defined by $\mathcal{X} = \mathcal{Y} = \mathbb{R}$ and
$$f(y|x) = \frac{1}{\sqrt{2\pi\sigma^2}}\exp\left(-\frac{1}{2\sigma^2}(y-x)^2\right).$$
That is, for a given $X = x$ the channel adds zero-mean Gaussian “noise” $Z$ of variance $\sigma^2$, so that $Y = x + Z$ is observed at its output.
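The supremum-over-quantizers definition can be illustrated numerically for the Gaussian channel. The sketch below (Python, stdlib only) takes $X \sim \mathcal{N}(0, P)$ and $Y = X + Z$ and quantizes both with uniform quantizers of step $\Delta$ on $[-T, T]$, giving $Y$ two extra overflow cells. It then computes $I(q(X); r(Y))$ in bits from a numerically integrated joint pmf. The quantizer design, $T$, and the integration grid are illustrative assumptions. Refining nested partitions can only increase $I(q(X); r(Y))$, so the values should climb toward $\tfrac{1}{2}\log_2(1 + P/\sigma^2)$ from below.

```python
import math

def std_normal_cdf(t):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))

def quantized_mi(delta, P=1.0, sigma2=1.0, T=6.0, dx=0.0125):
    """I(q(X); r(Y)) in bits for X ~ N(0, P), Y = X + Z, Z ~ N(0, sigma2).

    q and r are uniform quantizers with step delta on [-T, T]; r also has two
    overflow cells (-inf, -T) and [T, inf). X is integrated on a midpoint grid
    of step dx over [-T, T] (its tails are ignored); delta should be a multiple of dx.
    """
    sigma = math.sqrt(sigma2)
    K = int(round(2 * T / delta))                    # number of interior cells
    y_edges = [-T + j * delta for j in range(K + 1)]
    joint = [[0.0] * (K + 2) for _ in range(K)]      # rows: q(X) cells, columns: r(Y) cells
    for k in range(int(round(2 * T / dx))):
        x = -T + (k + 0.5) * dx
        w = math.exp(-x * x / (2 * P)) / math.sqrt(2 * math.pi * P) * dx  # ~ Pr(X near x)
        i = int((x + T) // delta)                    # q(X) cell containing x
        cdf = [0.0] + [std_normal_cdf((e - x) / sigma) for e in y_edges] + [1.0]
        row = joint[i]
        for j in range(K + 2):
            row[j] += w * (cdf[j + 1] - cdf[j])      # Pr(Y in cell j | X = x)
    total = sum(sum(row) for row in joint)           # normalize away the ignored X tails
    p_x = [sum(row) / total for row in joint]
    p_y = [sum(joint[i][j] for i in range(K)) / total for j in range(K + 2)]
    mi = 0.0
    for i in range(K):
        for j in range(K + 2):
            p = joint[i][j] / total
            if p > 0.0:
                mi += p * math.log2(p / (p_x[i] * p_y[j]))
    return mi

if __name__ == "__main__":
    P, sigma2 = 1.0, 1.0
    for delta in (1.0, 0.5, 0.25, 0.125):
        print(f"delta={delta}: I(q(X); r(Y)) = {quantized_mi(delta, P, sigma2):.4f} bits")
    print("1/2 log2(1 + P/sigma^2) =", 0.5 * math.log2(1 + P / sigma2))
```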

• Coding for a continuous $\mathcal{X}$: if $\mathcal{X}$ is very large, or even $\mathcal{X} = \mathbb{R}$, coding needs to be defined subject to a power constraint.
• An $(M, n)$ code with an average power constraint $P$:
  1. An index set $\mathcal{I}_M \triangleq \{1, \dots, M\}$.
  2. An encoder mapping $\alpha : \mathcal{I}_M \to \mathcal{X}^n$, which defines the codebook
  $$\mathcal{C}_n \triangleq \big\{x_1^n : x_1^n = \alpha(i),\ \forall i \in \mathcal{I}_M\big\} = \big\{x_1^n(1), \dots, x_1^n(M)\big\},$$
  subject to
  $$\frac{1}{n}\sum_{m=1}^n x_m^2(i) \le P, \quad \forall i \in \mathcal{I}_M.$$
  3. A decoder mapping $\beta : \mathcal{Y}^n \to \mathcal{I}_M$.

• A rate $R \triangleq \frac{\log M}{n}$ is achievable (subject to the power constraint $P$) if there exists a sequence of $(\lceil 2^{nR}\rceil, n)$ codes with codewords satisfying the power constraint, such that the maximal probability of error
$$\lambda^{(n)} = \max_i \Pr\big(\beta(Y_1^n) \ne i \,\big|\, X_1^n = x_1^n(i)\big)$$
tends to 0 as $n \to \infty$. The capacity $C$ is the supremum of all rates that are achievable over the channel.
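The code definition and the achievability criterion translate directly into a few helper functions. This Python sketch (function names hypothetical, rates in bits, i.e. $\log = \log_2$) checks the average power constraint for every codeword and converts between $R$ and $M = \lceil 2^{nR}\rceil$. It also evaluates $\lambda^{(n)}$ from given per-codeword error probabilities.

```python
import math

def satisfies_power_constraint(codebook, P):
    """Check (1/n) * sum_m x_m(i)^2 <= P for every codeword x_1^n(i) in the codebook."""
    return all(sum(v * v for v in cw) / len(cw) <= P for cw in codebook)

def codebook_size(R, n):
    """M = ceil(2^{nR}) codewords for a (ceil(2^{nR}), n) code at rate R bits/use."""
    return math.ceil(2 ** (n * R))

def rate(M, n):
    """R = log2(M) / n bits per channel use."""
    return math.log2(M) / n

def max_error_probability(lambdas):
    """lambda^(n) = max_i Pr(beta(Y_1^n) != i | X_1^n = x_1^n(i)), given the per-codeword values."""
    return max(lambdas)

if __name__ == "__main__":
    # Hypothetical toy codebook: M = 4 codewords of length n = 3, each with power exactly 1.
    book = [[1.0, 1.0, 1.0], [1.0, -1.0, -1.0], [-1.0, 1.0, -1.0], [-1.0, -1.0, 1.0]]
    print(satisfies_power_constraint(book, 1.0), rate(len(book), 3))
    print(codebook_size(0.5, 10))
```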

Memoryless Gaussian Channel: Lower Bound for C

• Gaussian random code design: fix the distribution
$$f(x) = \frac{1}{\sqrt{2\pi(P-\varepsilon)}}\exp\left(-\frac{x^2}{2(P-\varepsilon)}\right)$$
and draw the codebook $\mathcal{C}_n = \{X_1^n(1), \dots, X_1^n(M)\}$ i.i.d. according to
$$f(x_1^n) = \prod_{m=1}^n f(x_m).$$
• Encoding: a message $\omega \in \mathcal{I}_M$ is encoded as $X_1^n(\omega)$.
• Transmission: the received sequence is $Y_1^n = X_1^n(\omega) + Z_1^n$, where $\{Z_m\}$ are i.i.d. zero-mean Gaussian with $E[Z_m^2] = \sigma^2$.
• Decoding: declare $\hat\omega = \beta(Y_1^n) = i$ if $X_1^n(i)$ is the only codeword such that
$$\big(X_1^n(i), Y_1^n\big) \in A_\varepsilon^{(n)}$$
and, in addition, $\frac{1}{n}\sum_{m=1}^n X_m^2(i) \le P$; otherwise set $\hat\omega = 0$.
• Average probability of error:
$$\pi_n = \Pr(\hat\omega \ne \omega) = \Pr(\hat\omega \ne 1 \mid \omega = 1) \quad \text{(by symmetry)},$$
with “Pr” over the random codebook and the noise.
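The random-coding experiment can be simulated directly for small parameters. Each trial of the Python sketch below draws a fresh Gaussian codebook with variance $P - \varepsilon$ and sends message 1 (index 0 in the code). It then applies the joint-typicality-plus-power-check decoder, so the error fraction estimates $\pi_n$. The parameter values ($n = 300$, $M = 64$, $P = 1$, $\sigma^2 = 0.1$, $\varepsilon = 0.3$, logs in bits) are assumptions chosen for illustration, and this is not a practical decoder. Joint typicality is tested with the usual three conditions on $-\frac1n\log f(x_1^n)$, $-\frac1n\log f(y_1^n)$ and $-\frac1n\log f(x_1^n, y_1^n)$. That form is our assumption about the "straightforward extension" mentioned earlier.

```python
import math
import random

LOG2_E = 1.0 / math.log(2.0)  # converts nats to bits

def neg_log2_gauss(sum_sq, n, var):
    """-(1/n) log2 f(v_1^n) for i.i.d. N(0, var), given sum_sq = sum_m v_m^2."""
    return 0.5 * math.log2(2.0 * math.pi * var) + sum_sq / (2.0 * var * n) * LOG2_E

def gauss_h(var):
    """Differential entropy 1/2 log2(2 pi e var) in bits."""
    return 0.5 * math.log2(2.0 * math.pi * math.e * var)

def run_trial(rng, n, M, P, sigma2, eps):
    """One draw of codebook and noise; returns True if the decoder errs."""
    px = P - eps                                   # codebook variance P - eps
    book = [[rng.gauss(0.0, math.sqrt(px)) for _ in range(n)] for _ in range(M)]
    sent = 0                                       # by symmetry, always send the first message
    y = [x + rng.gauss(0.0, math.sqrt(sigma2)) for x in book[sent]]
    hx, hy, hz = gauss_h(px), gauss_h(px + sigma2), gauss_h(sigma2)
    y_ok = abs(neg_log2_gauss(sum(v * v for v in y), n, px + sigma2) - hy) <= eps
    candidates = []
    for i, x in enumerate(book):
        sx = sum(v * v for v in x)
        sz = sum((b - a) ** 2 for a, b in zip(x, y))   # squared norm of y - x
        rx = neg_log2_gauss(sx, n, px)
        rxy = rx + neg_log2_gauss(sz, n, sigma2)      # f(x, y) = f(x) f_Z(y - x)
        typical = y_ok and abs(rx - hx) <= eps and abs(rxy - (hx + hz)) <= eps
        if typical and sx / n <= P:                   # power check, as in the decoder
            candidates.append(i)
    decoded = candidates[0] if len(candidates) == 1 else None  # None plays the role of 0
    return decoded != sent

def estimate_error(trials=20, n=300, M=64, P=1.0, sigma2=0.1, eps=0.3, seed=1):
    """Monte Carlo estimate of pi_n over random codebooks and noise."""
    rng = random.Random(seed)
    return sum(run_trial(rng, n, M, P, sigma2, eps) for _ in range(trials)) / trials

if __name__ == "__main__":
    n, M, P, sigma2, eps = 300, 64, 1.0, 0.1, 0.3
    print("R =", math.log2(M) / n, "bits/use")
    print("I(X;Y) =", 0.5 * math.log2(1 + (P - eps) / sigma2), "bits/use")
    print("estimated pi_n:", estimate_error(n=n, M=M, P=P, sigma2=sigma2, eps=eps))
```

With $R$ far below $I(X;Y) - 3\varepsilon$, almost all errors come from the transmitted codeword failing a typicality or power test, not from a wrong codeword looking typical.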

• Let
$$E_0 = \left\{\frac{1}{n}\sum_{m=1}^n X_m^2(1) > P\right\}$$
and
$$E_i = \left\{\big(X_1^n(i), X_1^n(1) + Z_1^n\big) \in A_\varepsilon^{(n)}\right\};$$
then
$$\pi_n = P\big(E_0 \cup E_1^c \cup E_2 \cup \dots \cup E_M\big) \le P(E_0) + P(E_1^c) + \sum_{i=2}^M P(E_i).$$
• Fix a small $\varepsilon > 0$:
  • Law of large numbers: $P(E_0) < \varepsilon$ for sufficiently large $n$, since $\frac{1}{n}\sum_{m=1}^n X_m^2(1) \to P - \varepsilon$ a.s.
  • Joint AEP: $P(E_1^c) < \varepsilon$ for sufficiently large $n$.
  • Definition of joint typicality (with $X_1^n(i)$ independent of $Y_1^n$ for $i \ne 1$): $P(E_i) \le 2^{-n(I(X;Y)-3\varepsilon)}$, $i = 2, \dots, M$.

• For sufficiently large $n$, using $M - 1 \le 2^{nR}$, we thus get
$$\pi_n \le 2\varepsilon + 2^{-n(I(X;Y)-R-3\varepsilon)}$$
with
$$I(X;Y) = \iint f(y|x)f(x)\log\frac{f(y|x)}{\int f(y|x')f(x')\,dx'}\,dx\,dy,$$
where $f(x) = \mathcal{N}(0, P-\varepsilon)$ generated the codebook and $f(y|x)$ is given by the channel. Since $f(y|x) = \mathcal{N}(x, \sigma^2)$, the output is $Y \sim \mathcal{N}(0, P-\varepsilon+\sigma^2)$, and $I(X;Y) = h(Y) - h(Z)$ gives
$$I(X;Y) = \frac{1}{2}\log\left(1 + \frac{P-\varepsilon}{\sigma^2}\right).$$
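The double integral for $I(X;Y)$ and its closed form can be cross-checked numerically. This Python sketch evaluates the integral with a midpoint rule on a truncated grid. The grid spans $\pm 8$ standard deviations with 400 points per axis, both arbitrary choices. The output density $\int f(y|x')f(x')\,dx'$ is computed numerically rather than by using the fact that it is Gaussian. The result is compared with $\tfrac{1}{2}\log_2(1 + (P-\varepsilon)/\sigma^2)$ for hypothetical values $P = 1$, $\varepsilon = 0.3$, $\sigma^2 = 1$.

```python
import math

def gauss_pdf(v, mean, var):
    """N(mean, var) density at v."""
    return math.exp(-(v - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def mutual_information_numeric(px, sigma2, half_width=8.0, steps=400):
    """Midpoint-rule approximation, in bits, of
    I(X;Y) = int int f(y|x) f(x) log2[ f(y|x) / int f(y|x') f(x') dx' ] dx dy
    for f(x) = N(0, px) and f(y|x) = N(x, sigma2)."""
    sx, sy = math.sqrt(px), math.sqrt(px + sigma2)
    dx, dy = 2 * half_width * sx / steps, 2 * half_width * sy / steps
    xs = [-half_width * sx + (k + 0.5) * dx for k in range(steps)]
    ys = [-half_width * sy + (k + 0.5) * dy for k in range(steps)]
    fx = [gauss_pdf(x, 0.0, px) for x in xs]
    total = 0.0
    for y in ys:
        cond = [gauss_pdf(y, x, sigma2) for x in xs]    # f(y|x) on the x grid
        fy = sum(c * f for c, f in zip(cond, fx)) * dx  # output density f(y), integrated numerically
        for c, f in zip(cond, fx):
            if c > 0.0:                                 # skip underflowed terms
                total += c * f * math.log2(c / fy) * dx * dy
    return total

if __name__ == "__main__":
    P, eps, sigma2 = 1.0, 0.3, 1.0
    print("numeric    :", mutual_information_numeric(P - eps, sigma2))
    print("closed form:", 0.5 * math.log2(1 + (P - eps) / sigma2))
```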

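• As long as $R < I(X;Y) - 3\varepsilon$, $\pi_n \to 0$ as $n \to \infty$ $\implies$ there exists at least one code, say $\mathcal{C}_n^*$, with $P_e^{(n)} \to 0$ for $R < I(X;Y) - 3\varepsilon$ (since $\pi_n$ averages over the random codebooks, at least one codebook does at least as well as the average).
• Throw away the worst half of the codewords in $\mathcal{C}_n^*$ to strengthen the result from $P_e^{(n)}$ to $\lambda^{(n)}$. The worst half contains the codewords that do not satisfy the power constraint, since these have $\lambda_i = 1$. Halving $M$ lowers the rate by only $1/n$ $\implies$ all
$$R < \frac{1}{2}\log\left(1 + \frac{P-\varepsilon}{\sigma^2}\right)$$
are achievable for all $\varepsilon > 0$ $\implies$
$$C \ge \frac{1}{2}\log\left(1 + \frac{P}{\sigma^2}\right).$$

Memoryless Gaussian Channel: An Upper Bound for C

• Consider any sequence of codes that can achieve the rate $R$, that is, $\lambda^{(n)} \to 0$ and
$$\frac{1}{n}\sum_{m=1}^n x_m^2(i) \le P, \quad \forall i \in \mathcal{I}_M,\ \forall n.$$
• Assume $\omega \in \mathcal{I}_M$ equally likely. Fano $\implies$
$$R \le \frac{1}{n}\sum_{m=1}^n I\big(x_m(\omega); Y_m\big) + \epsilon_n,$$
where $\epsilon_n = \frac{1}{n} + RP_e^{(n)} \to 0$ as $n \to \infty$, and where
$$I\big(x_m(\omega); Y_m\big) = h(Y_m) - h(Z_m) = h(Y_m) - \frac{1}{2}\log 2\pi e\sigma^2.$$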
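The expurgation step rests on Markov's inequality. If the average of $\lambda_1, \dots, \lambda_M$ is $P_e^{(n)}$, fewer than half of the $\lambda_i$ can exceed $2P_e^{(n)}$. The better half of the codebook therefore has $\lambda^{(n)} \le 2P_e^{(n)}$. The Python sketch below applies this to a hypothetical list of per-codeword error probabilities in which a few codewords violate the power constraint ($\lambda_i = 1$). All numbers are made up for illustration.

```python
import math
import random

def expurgate(lambdas):
    """Keep the ceil(M/2) codewords with the smallest conditional error
    probabilities lambda_i; return (kept indices, largest kept lambda_i)."""
    M = len(lambdas)
    order = sorted(range(M), key=lambda i: lambdas[i])
    kept = order[: (M + 1) // 2]
    return kept, max(lambdas[i] for i in kept)

if __name__ == "__main__":
    rng = random.Random(0)
    M, n = 1024, 20                        # hypothetical code at rate log2(M)/n = 0.5
    lambdas = [rng.uniform(0.0, 0.01) for _ in range(M)]
    for i in (3, 100, 500, 700, 1000):     # hypothetical power-violating codewords
        lambdas[i] = 1.0
    avg = sum(lambdas) / M                 # average error probability P_e^(n)
    kept, worst = expurgate(lambdas)
    print(f"P_e = {avg:.4f}, max kept lambda = {worst:.4f}, 2 P_e = {2 * avg:.4f}")
    print(f"rate {math.log2(M) / n} -> {math.log2(len(kept)) / n}")
```

Halving $M$ lowers the rate by exactly $1/n$, here from 0.5 to 0.45 bits per channel use. This vanishes as $n \to \infty$.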

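• Since $E[Y_m^2] = P_m + \sigma^2$, where $P_m = \frac{1}{M}\sum_{i=1}^M x_m^2(i)$, the maximum differential entropy bound gives
$$h(Y_m) \le \frac{1}{2}\log 2\pi e(\sigma^2 + P_m),$$
and hence
$$I\big(x_m(\omega); Y_m\big) \le \frac{1}{2}\log\left(1 + \frac{P_m}{\sigma^2}\right).$$
Thus,
$$R \le \frac{1}{n}\sum_{m=1}^n \frac{1}{2}\log\left(1 + \frac{P_m}{\sigma^2}\right) + \epsilon_n \le \frac{1}{2}\log\left(1 + \frac{\frac{1}{n}\sum_m P_m}{\sigma^2}\right) + \epsilon_n \le \frac{1}{2}\log\left(1 + \frac{P}{\sigma^2}\right) + \epsilon_n \to \frac{1}{2}\log\left(1 + \frac{P}{\sigma^2}\right)$$
as $n \to \infty$. This holds for all achievable $R$, by Jensen's inequality and the power constraint, since $\frac{1}{n}\sum_m P_m = \frac{1}{M}\sum_i \frac{1}{n}\sum_m x_m^2(i) \le P$ $\implies$
$$C \le \frac{1}{2}\log\left(1 + \frac{P}{\sigma^2}\right).$$

The Coding Theorem for a Memoryless Gaussian Channel

Theorem: A memoryless Gaussian channel with noise variance $\sigma^2$ and power constraint $P$ has capacity
$$C = \frac{1}{2}\log\left(1 + \frac{P}{\sigma^2}\right).$$
That is, all rates $R < C$ and no rates $R > C$ are achievable.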
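The closing inequalities and the theorem can be evaluated numerically. The sketch below (Python, rates in bits, $\log = \log_2$) computes $C = \tfrac12\log_2(1 + P/\sigma^2)$. It also draws a hypothetical random power profile $P_1, \dots, P_n$ with $\frac1n\sum_m P_m = P$ and computes $\frac1n\sum_m \tfrac12\log_2(1 + P_m/\sigma^2)$, which by Jensen's inequality cannot exceed $C$.

```python
import math
import random

def gaussian_capacity(P, sigma2):
    """C = 1/2 log2(1 + P / sigma2) bits per channel use."""
    return 0.5 * math.log2(1.0 + P / sigma2)

def average_rate_bound(powers, sigma2):
    """(1/n) sum_m 1/2 log2(1 + P_m / sigma2) for a per-symbol power profile P_1..P_n."""
    return sum(gaussian_capacity(p, sigma2) for p in powers) / len(powers)

if __name__ == "__main__":
    P, sigma2, n = 1.0, 0.5, 50
    rng = random.Random(0)
    raw = [rng.expovariate(1.0) for _ in range(n)]
    powers = [P * r * n / sum(raw) for r in raw]   # hypothetical profile with (1/n) sum P_m = P
    print("unequal profile:", average_rate_bound(powers, sigma2))
    print("capacity       :", gaussian_capacity(P, sigma2))
```

Equal power $P_m = P$ in every position attains the bound. For example, $P/\sigma^2 = 1$ gives $C = 0.5$ bit per channel use and $P/\sigma^2 = 3$ gives $C = 1$ bit.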
