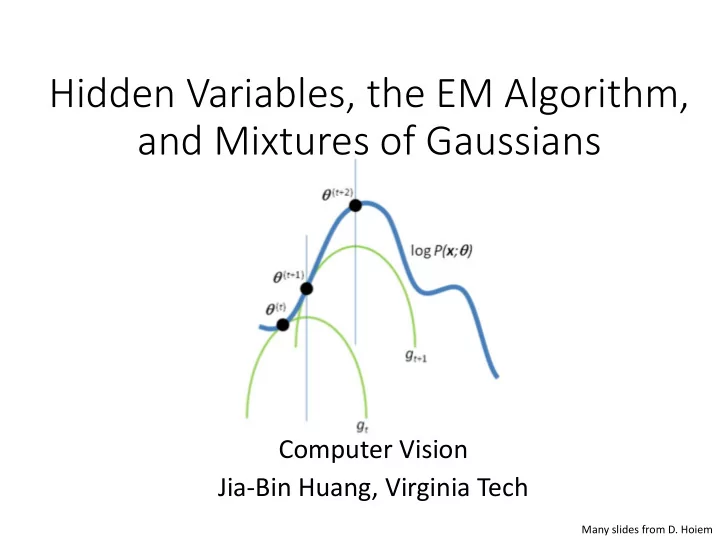

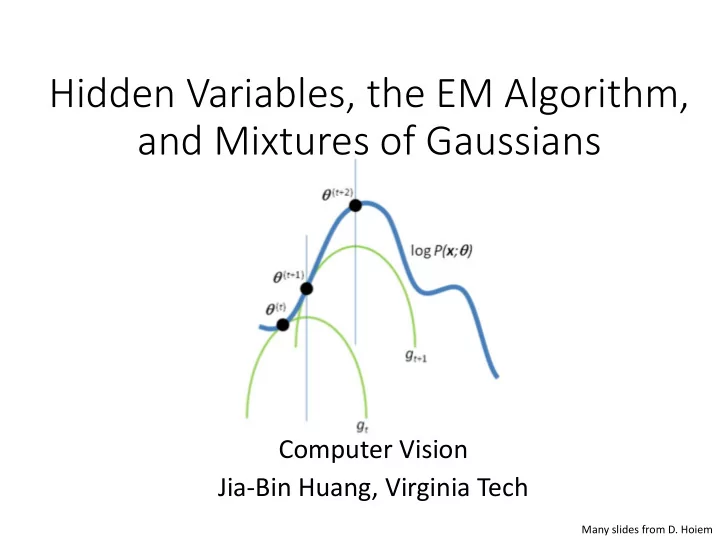

Hidden Variables, the EM Algorithm, and Mixtures of Gaussians
Computer Vision
Jia-Bin Huang, Virginia Tech
Many slides from D. Hoiem

Administrative stuff
• Final project
  • Proposal due soon, extended to Monday, Oct 29
  • Tips for the final project
    • Set up several milestones
    • Think about how you are going to evaluate
    • A demo is highly encouraged
• HW 4 out tomorrow

Sample final projects
• State quarter classification
• Stereo Vision - correspondence matching
• Collaborative monocular SLAM for Multiple Robots in an unstructured environment
• Fight Detection using Convolutional Neural Networks
• Actor Rating using Facial Emotion Recognition
• Fiducial Markers on Bat Tracking Based on Non-rigid Registration
• Im2Latex: Converting Handwritten Mathematical Expressions to Latex
• Pedestrian Detection and Tracking
• Inference with Deep Neural Networks
• Rubik's Cube
• Plant Leaf Disease Detection and Classification
• MBZIRC Challenge-2017
• Multi-modal Learning Scheme for Athlete Recognition System in Long Video
• Computer Vision In Quantitative Phase Imaging
• Aircraft pose estimation for level flight
• Automatic segmentation of brain tumor from MRI images
• Visual Dialog
• PixelDream

Superpixel algorithms
• Goal: divide the image into a large number of regions such that each region lies within object boundaries
• Examples
  • Watershed
  • Felzenszwalb and Huttenlocher graph-based
  • Turbopixels
  • SLIC

Watershed algorithm

Watershed segmentation
(Figure: input image, its gradient, and the resulting watershed boundaries)

Meyer’s watershed segmentation (Meyer 1991)
1. Choose local minima as region seeds
2. Add the seeds' neighbors to a priority queue, sorted by value
3. Take the top-priority (lowest-valued) pixel from the queue
   1. If all of its labeled neighbors have the same label, assign that label to the pixel
   2. Add all non-marked neighbors to the queue
4. Repeat step 3 until finished (all remaining pixels in the queue are on the boundary)
Matlab: seg = watershed(bnd_im)
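The flooding steps above can be sketched in Python with a heap as the priority queue. This is a minimal illustration, not Matlab's `watershed`, and it rests on stated assumptions: 4-connectivity, strict local minima as seeds (flat-bottomed basins get no seed), first-in-first-out tie-breaking among equal values, and only pixels that receive a label push their neighbors. `meyer_watershed` and `bnd` are hypothetical names.

```python
import heapq
import numpy as np


def meyer_watershed(bnd):
    """Priority-flood watershed on a 2D soft-boundary image.

    Returns an int array: 1..K for regions, 0 for boundary/unreached pixels.
    Assumes 4-connectivity and strict local minima as seeds.
    """
    H, W = bnd.shape
    labels = np.zeros((H, W), dtype=int)
    queued = np.zeros((H, W), dtype=bool)   # "marked": seeded or already queued
    heap, counter = [], 0                    # counter breaks ties in FIFO order

    def neighbors(r, c):
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            rr, cc = r + dr, c + dc
            if 0 <= rr < H and 0 <= cc < W:
                yield rr, cc

    def push_unmarked_neighbors(r, c):
        nonlocal counter
        for rr, cc in neighbors(r, c):
            if not queued[rr, cc]:
                queued[rr, cc] = True
                heapq.heappush(heap, (bnd[rr, cc], counter, rr, cc))
                counter += 1

    # Step 1: every strict local minimum becomes a seed with its own label.
    seeds = [(r, c) for r in range(H) for c in range(W)
             if all(bnd[r, c] < bnd[rr, cc] for rr, cc in neighbors(r, c))]
    for k, (r, c) in enumerate(seeds, start=1):
        labels[r, c] = k
        queued[r, c] = True

    # Step 2: queue the seeds' neighbors, keyed by boundary strength.
    for r, c in seeds:
        push_unmarked_neighbors(r, c)

    # Steps 3-4: pop the lowest-valued pixel; label it only if its labeled neighbors agree.
    while heap:
        _, _, r, c = heapq.heappop(heap)
        nbr_labels = {labels[rr, cc] for rr, cc in neighbors(r, c) if labels[rr, cc] > 0}
        if len(nbr_labels) == 1:
            labels[r, c] = nbr_labels.pop()
            push_unmarked_neighbors(r, c)   # assumption: only labeled pixels expand
        # otherwise the pixel touches two regions and stays 0 (boundary)
    return labels
```

For a 1x7 "valley, ridge, valley" profile `[[1, 2, 3, 4, 3, 2, 1]]`, the two ends seed regions 1 and 2, and the ridge pixel with value 4 is reached from both sides, so it stays 0.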

Simple trick
• Use a Gaussian or median filter to smooth the input first: fewer local minima means fewer seeds, and therefore fewer regions
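To see why smoothing helps, the sketch below counts strict 8-neighbor local minima (candidate watershed seeds) before and after a Gaussian blur. It uses random noise as a stand-in for a noisy soft-boundary image and only shows the Gaussian option, not the median filter. `gaussian_smooth` and `count_local_minima` are hypothetical helpers written with NumPy only.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def gaussian_smooth(img, sigma):
    """Separable Gaussian blur with edge-replicated borders."""
    radius = int(np.ceil(3 * sigma))
    t = np.arange(-radius, radius + 1)
    k = np.exp(-t**2 / (2 * sigma**2))
    k /= k.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def count_local_minima(img):
    """Count pixels strictly lower than all 8 neighbors."""
    H, W = img.shape
    padded = np.pad(img.astype(float), 1, constant_values=np.inf)
    nbrs = sliding_window_view(padded, (3, 3)).reshape(H, W, 9).copy()
    nbrs[..., 4] = np.inf          # ignore the center pixel itself
    return int(np.sum(img < nbrs.min(axis=-1)))


rng = np.random.default_rng(0)
noisy = rng.random((64, 64))
print(count_local_minima(noisy), count_local_minima(gaussian_smooth(noisy, 2.0)))
```

Larger `sigma` merges more basins, which trades fewer, larger regions for some loss of weak boundaries.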

Watershed usage
• Use as a starting point for hierarchical segmentation
  – Ultrametric contour map (Arbelaez 2006)
• Works with any soft boundaries
  – Pb (w/o non-max suppression)
  – Canny (w/o non-max suppression)
  – Etc.

Watershed pros and cons
• Pros
  – Fast (< 1 sec for a 512x512 image)
  – Preserves boundaries
• Cons
  – Only as good as the soft boundaries (which may be slow to compute)
  – Not easy to get a variety of regions for multiple segmentations
• Usage
  – Good algorithm for superpixels, hierarchical segmentation

Felzenszwalb and Huttenlocher: Graph-Based Segmentation
http://www.cs.brown.edu/~pff/segment/
+ Good for thin regions
+ Fast
+ Easy to control coarseness of segmentations
+ Can include both large and small regions
- Often creates regions with strange shapes
- Sometimes makes very large errors

Turbopixels: Levinshtein et al. 2009
http://www.cs.toronto.edu/~kyros/pubs/09.pami.turbopixels.pdf
Tries to preserve boundaries like watershed, but produces more regular regions

SLIC (Achanta et al. PAMI 2012)
http://infoscience.epfl.ch/record/177415/files/Superpixel_PAMI2011-2.pdf
1. Initialize cluster centers on a pixel grid in steps of S
   • Features: Lab color, x-y position
2. Move each center to the position in its 3x3 window with the smallest gradient
3. Compare each pixel to the cluster centers within 2S pixel distance and assign it to the nearest
4. Recompute cluster centers as the mean color/position of the pixels belonging to each cluster
5. Stop when the residual error is small
+ Fast: 0.36 s for a 320x240 image
+ Regular superpixels
+ Superpixels fit boundaries
- May miss thin objects
- Large number of superpixels
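The five SLIC steps can be sketched with NumPy as follows. This is a simplified illustration under assumptions, not the authors' implementation: the input is assumed to be already in Lab space (no color conversion), the distance is D = sqrt(d_c² + (d_s/S)² m²) with a hypothetical default compactness `m=10`, each center searches a square 2S x 2S window, "residual error" is taken as the total movement of the centers, and connectivity of superpixels is not enforced. `slic`, `m`, `max_iter`, and `tol` are hypothetical names.

```python
import numpy as np


def slic(lab, S, m=10.0, max_iter=10, tol=1e-3):
    """Simplified SLIC. lab: HxWx3 float image assumed already in Lab space.

    S: grid step; m: compactness weight. Returns an HxW array of cluster ids.
    """
    lab = np.asarray(lab, dtype=float)
    H, W, _ = lab.shape
    gy, gx = np.gradient(lab, axis=(0, 1))
    grad = (gx**2 + gy**2).sum(axis=2)          # squared color gradient magnitude

    # Step 1: seed centers on a regular grid with spacing S.
    # Step 2: nudge each seed to the lowest-gradient pixel in its 3x3 window.
    centers = []
    for y in range(S // 2, H, S):
        for x in range(S // 2, W, S):
            y0, x0 = max(y - 1, 0), max(x - 1, 0)
            win = grad[y0:y + 2, x0:x + 2]
            dy, dx = np.unravel_index(np.argmin(win), win.shape)
            cy, cx = y0 + dy, x0 + dx
            centers.append([*lab[cy, cx], cy, cx])
    centers = np.array(centers, dtype=float)    # columns: L, a, b, y, x

    yy, xx = np.mgrid[0:H, 0:W]
    labels = np.full((H, W), -1)
    for _ in range(max_iter):
        dist = np.full((H, W), np.inf)
        # Step 3: each center only compares pixels in a 2S x 2S window around it.
        for k, (l, a, b, cy, cx) in enumerate(centers):
            y0, y1 = max(int(cy) - S, 0), min(int(cy) + S + 1, H)
            x0, x1 = max(int(cx) - S, 0), min(int(cx) + S + 1, W)
            dc2 = ((lab[y0:y1, x0:x1] - [l, a, b]) ** 2).sum(axis=2)
            ds2 = (yy[y0:y1, x0:x1] - cy) ** 2 + (xx[y0:y1, x0:x1] - cx) ** 2
            d = np.sqrt(dc2 + ds2 * (m / S) ** 2)
            closer = d < dist[y0:y1, x0:x1]
            dist[y0:y1, x0:x1][closer] = d[closer]
            labels[y0:y1, x0:x1][closer] = k
        # Step 4: move each center to the mean color/position of its pixels.
        new_centers = centers.copy()
        for k in range(len(centers)):
            mask = labels == k
            if mask.any():                       # empty clusters keep their old center
                new_centers[k] = [*lab[mask].mean(axis=0), yy[mask].mean(), xx[mask].mean()]
        # Step 5: stop once the centers barely move.
        residual = np.abs(new_centers - centers).sum()
        centers = new_centers
        if residual < tol:
            break
    return labels
```

Restricting the search to a 2S x 2S window is what makes each iteration roughly linear in the number of pixels rather than pixels times clusters; larger `m` gives more compact, grid-like superpixels at the cost of boundary adherence.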

Choices in segmentation algorithms
• Oversegmentation
  • Watershed + structured random forest
  • Felzenszwalb and Huttenlocher 2004 http://www.cs.brown.edu/~pff/segment/
  • SLIC
  • Turbopixels
  • Mean-shift
• Larger regions (object-level)
  • Hierarchical segmentation (e.g., from Pb)
  • Normalized cuts
  • Mean-shift
  • Seed + graph cuts (discussed later)

Multiple segmentations
• Don’t commit to one partitioning
• Hierarchical segmentation
  • Occlusion boundaries hierarchy: Hoiem et al. IJCV 2011 (uses trained classifier to merge)
  • Pb+watershed hierarchy: Arbelaez et al. CVPR 2009
  • Selective search: FH + agglomerative clustering
  • Superpixel hierarchy
• Vary segmentation parameters
  • E.g., multiple graph-based segmentations or mean-shift segmentations
• Region proposals
  • Propose a seed superpixel, then try to segment out the object that contains it (Endres Hoiem ECCV 2010, Carreira Sminchisescu CVPR 2010)

Review: Image Segmentation
• Gestalt cues and principles of organization
• Uses of segmentation
  • Efficiency
  • Provide feature supports
  • Propose object regions
  • Want the segmented object
• Segmentation and grouping
  • Gestalt cues
  • By clustering (k-means, mean-shift)
  • By boundaries (watershed)
  • By graph (merging, graph cuts)
  • By labeling (MRF) <- Next lecture

HW 4: SLIC (Achanta et al. PAMI 2012)
http://infoscience.epfl.ch/record/177415/files/Superpixel_PAMI2011-2.pdf
Same algorithm, steps, and trade-offs as on the SLIC slide above.

Today’s Class
• Examples of Missing Data Problems
  • Detecting outliers
  • Latent topic models
  • Segmentation (HW 4, problem 2)
• Background
  • Maximum Likelihood Estimation
  • Probabilistic Inference
• Dealing with “Hidden” Variables
  • EM algorithm, Mixture of Gaussians
  • Hard EM

Missing Data Problems: Outliers
You want to train an algorithm to predict whether a photograph is attractive. You collect annotations from Mechanical Turk. Some annotators try to give accurate ratings, but others answer randomly.
Challenge: Determine which people to trust and the average rating by accurate annotators.
(Figure: example photo with annotator ratings 10, 8, 9, 2, 8. Photo: Jam343 (Flickr))

Missing Data Problems: Object Discovery
You have a collection of images and have extracted regions from them. Each is represented by a histogram of “visual words”.
Challenge: Discover frequently occurring object categories, without pre-trained appearance models.
http://www.robots.ox.ac.uk/~vgg/publications/papers/russell06.pdf

Missing Data Problems: Segmentation
You are given an image and want to assign foreground/background pixels.
Challenge: Segment the image into figure and ground without knowing what the foreground looks like in advance.
(Figure: example image with foreground and background regions)

Missing Data Problems: Segmentation
Challenge: Segment the image into figure and ground without knowing what the foreground looks like in advance.
Three steps:
1. If we had labels, how could we model the appearance of foreground and background?
   • Maximum Likelihood Estimation
2. Once we have modeled the fg/bg appearance, how do we compute the likelihood that a pixel is foreground?
   • Probabilistic Inference
3. How can we get both labels and appearance models at once?
   • Expectation-Maximization (EM) Algorithm

Maximum Likelihood Estimation
1. If we had labels, how could we model the appearance of foreground and background?
(Figure: labeled background and foreground pixels)

Maximum Likelihood Estimation
Data $\mathbf{x} = \{x_1, \dots, x_N\}$, parameters $\theta$:
$$\hat\theta = \arg\max_\theta p(\mathbf{x} \mid \theta) = \arg\max_\theta \prod_n p(x_n \mid \theta)$$

Maximum Likelihood Estimation: Gaussian distribution
$$p(x_n \mid \mu, \sigma^2) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(x_n-\mu)^2}{2\sigma^2}\right)$$

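Maximum Likelihood Estimation: log-likelihood
Because log is monotonic, maximizing the likelihood is equivalent to maximizing the log-likelihood:
$$\hat\theta = \arg\max_\theta p(\mathbf{x} \mid \theta) = \arg\max_\theta \log p(\mathbf{x} \mid \theta)$$
$$\hat\theta = \arg\max_\theta \sum_n \log p(x_n \mid \theta) = \arg\max_\theta L(\theta)$$
For the Gaussian, with $\theta = (\mu, \sigma)$:
$$L(\theta) = -\frac{N}{2}\log 2\pi - \frac{N}{2}\log \sigma^2 - \frac{1}{2\sigma^2}\sum_n (x_n - \mu)^2$$
Setting the partial derivatives to zero:
$$\frac{\partial L(\theta)}{\partial \mu} = \frac{1}{\sigma^2}\sum_n (x_n - \mu) = 0 \;\Rightarrow\; \hat\mu = \frac{1}{N}\sum_n x_n$$
$$\frac{\partial L(\theta)}{\partial \sigma} = -\frac{N}{\sigma} + \frac{1}{\sigma^3}\sum_n (x_n - \mu)^2 = 0 \;\Rightarrow\; \hat\sigma^2 = \frac{1}{N}\sum_n (x_n - \hat\mu)^2$$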
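The derivation above can be checked numerically. The sketch below (synthetic data, hypothetical helper `log_likelihood`) evaluates L(θ) at the closed-form estimates, confirms with central finite differences that both partial derivatives vanish there, and shows one practical reason to work with the log: the raw product of 1000 densities underflows to 0.0 in double precision.

```python
import numpy as np


def log_likelihood(x, mu, sigma):
    """L(theta) for an i.i.d. Gaussian sample, term by term as in the derivation."""
    N = len(x)
    return (-N / 2 * np.log(2 * np.pi) - N / 2 * np.log(sigma**2)
            - np.sum((x - mu) ** 2) / (2 * sigma**2))


rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=1000)

# Closed-form maximum likelihood estimates.
mu_hat = x.mean()
sigma_hat = np.sqrt(np.mean((x - mu_hat) ** 2))   # divides by N, not N - 1

# Central finite differences: both partial derivatives should be ~0 at the MLE.
h = 1e-5
dL_dmu = (log_likelihood(x, mu_hat + h, sigma_hat)
          - log_likelihood(x, mu_hat - h, sigma_hat)) / (2 * h)
dL_dsigma = (log_likelihood(x, mu_hat, sigma_hat + h)
             - log_likelihood(x, mu_hat, sigma_hat - h)) / (2 * h)

# The product of densities underflows, while the sum of logs stays finite.
pdf = np.exp(-(x - mu_hat) ** 2 / (2 * sigma_hat**2)) / np.sqrt(2 * np.pi * sigma_hat**2)
print(dL_dmu, dL_dsigma, np.prod(pdf), log_likelihood(x, mu_hat, sigma_hat))
```

Note that the maximum likelihood variance divides by N, which matches NumPy's default `np.std` (`ddof=0`) rather than the unbiased N - 1 estimator.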

Maximum Likelihood Estimation: Gaussian estimates
$$\hat\mu = \frac{1}{N}\sum_n x_n, \qquad \hat\sigma^2 = \frac{1}{N}\sum_n (x_n - \hat\mu)^2$$
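To tie these estimates back to steps 1 and 2 of the segmentation recipe, here is a small sketch under explicit assumptions: pixels are 1-D grayscale intensities, the labeled foreground/background samples are synthetic, each class is modeled by a single Gaussian fitted with the closed-form estimates, and the class prior is simply the fraction of labeled pixels in each class. Inference then applies Bayes' rule, P(fg | x) = P(fg) p(x | fg) / (P(fg) p(x | fg) + P(bg) p(x | bg)). All function names are hypothetical.

```python
import numpy as np


def fit_gaussian(x):
    """MLE for a 1-D Gaussian: sample mean and (biased) sample variance."""
    mu = x.mean()
    return mu, np.mean((x - mu) ** 2)


def gaussian_pdf(x, mu, var):
    return np.exp(-(x - mu) ** 2 / (2 * var)) / np.sqrt(2 * np.pi * var)


def posterior_fg(x, fg, bg, prior_fg):
    """P(fg | x) by Bayes' rule from the two fitted appearance models."""
    p_fg = prior_fg * gaussian_pdf(x, *fg)
    p_bg = (1 - prior_fg) * gaussian_pdf(x, *bg)
    return p_fg / (p_fg + p_bg)


# Hypothetical labeled grayscale intensities in [0, 1].
rng = np.random.default_rng(0)
fg_pixels = rng.normal(0.8, 0.05, size=500)
bg_pixels = rng.normal(0.3, 0.10, size=1500)

fg = fit_gaussian(fg_pixels)       # step 1: maximum likelihood estimation
bg = fit_gaussian(bg_pixels)
prior_fg = len(fg_pixels) / (len(fg_pixels) + len(bg_pixels))   # 0.25 here

test_pixels = np.array([0.85, 0.55, 0.2])
print(posterior_fg(test_pixels, fg, bg, prior_fg))   # step 2: probabilistic inference
```

Step 3 of the recipe is exactly what this sketch cannot do: it needs `fg_pixels` and `bg_pixels` to be labeled in advance.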
