Hidden Markov Model

$$p(z_1, w_1, z_2, w_2, \ldots, z_N, w_N) = p(z_1 \mid z_0)\, p(w_1 \mid z_1) \cdots p(z_N \mid z_{N-1})\, p(w_N \mid z_N) = \prod_i p(w_i \mid z_i)\, p(z_i \mid z_{i-1})$$

Goal: maximize the (log-)likelihood.

In practice, we don't actually observe these z values; we just see the words w.

- If we did observe z, estimating the probability parameters would be easy… but we don't! :(
- If we knew the probability parameters, then we could estimate z and evaluate the likelihood… but we don't! :(

Hidden Markov Model Terminology

$$p(z_1, w_1, z_2, w_2, \ldots, z_N, w_N) = p(z_1 \mid z_0)\, p(w_1 \mid z_1) \cdots p(z_N \mid z_{N-1})\, p(w_N \mid z_N) = \prod_i p(w_i \mid z_i)\, p(z_i \mid z_{i-1})$$

- $p(w_i \mid z_i)$: emission probabilities/parameters
- $p(z_i \mid z_{i-1})$: transition probabilities/parameters

Each $z_i$ can take the value of one of K latent states.

Transition and emission distributions do not change from one position to the next.

Q: How many different probability values are there with K states and V vocab items?

A: VK emission values and $K^2$ transition values.

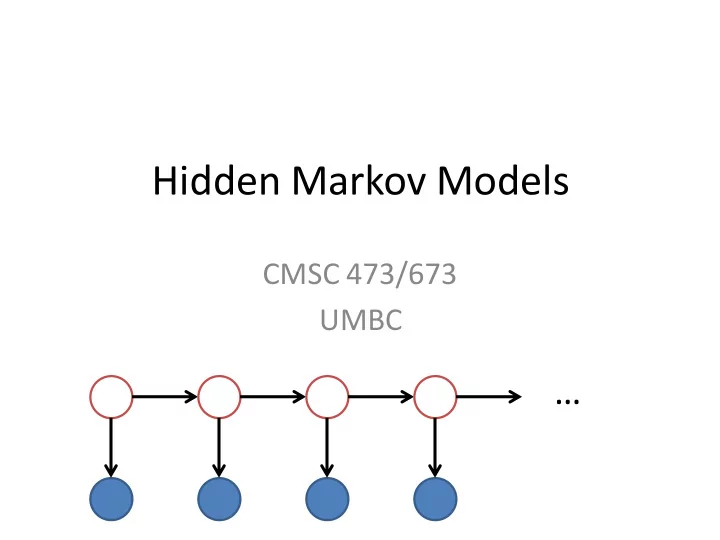

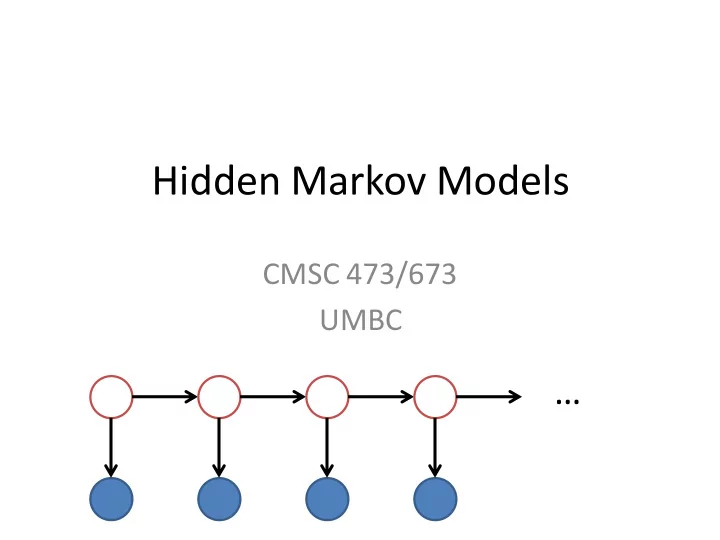

Hidden Markov Model Representation

$$p(z_1, w_1, \ldots, z_N, w_N) = \prod_i \underbrace{p(w_i \mid z_i)}_{\text{emission}}\, \underbrace{p(z_i \mid z_{i-1})}_{\text{transition}}$$

Represent the probabilities and independence assumptions in a graph (Graphical Models; see 478/678).

[Figure: a chain of latent states z_1 → z_2 → z_3 → z_4 → …, with each z_i pointing down to its observed word w_i. The horizontal edges carry the transition probabilities p(z_1 | z_0) (the initial starting distribution, "BOS"), p(z_2 | z_1), p(z_3 | z_2), p(z_4 | z_3). The vertical edges carry the emission probabilities p(w_1 | z_1), p(w_2 | z_2), p(w_3 | z_3), p(w_4 | z_4).]

Each z_i can take the value of one of K latent states. Transition and emission distributions do not change.

Example: 2-state Hidden Markov Model as a Lattice

[Figure: a lattice with one column per position z_1, …, z_4 and two nodes per column, V and N, drawn above the observed words w_1, …, w_4.]

- Emission edges: every node emits its column's word, with probability p(w_i | V) or p(w_i | N).
- Edges out of the start: p(V | start) and p(N | start).
- Edges between adjacent columns: p(V | V), p(N | V), p(V | N), and p(N | N).

Comparison of Joint Probabilities

Unigram Language Model:

$$p(w_1, w_2, \ldots, w_N) = p(w_1)\, p(w_2) \cdots p(w_N) = \prod_i p(w_i)$$

Unigram Class-based Language Model ("K" coins):

$$p(z_1, w_1, z_2, w_2, \ldots, z_N, w_N) = p(z_1)\, p(w_1 \mid z_1) \cdots p(z_N)\, p(w_N \mid z_N) = \prod_i p(w_i \mid z_i)\, p(z_i)$$

Hidden Markov Model:

$$p(z_1, w_1, z_2, w_2, \ldots, z_N, w_N) = p(z_1 \mid z_0)\, p(w_1 \mid z_1) \cdots p(z_N \mid z_{N-1})\, p(w_N \mid z_N) = \prod_i p(w_i \mid z_i)\, p(z_i \mid z_{i-1})$$
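The three factorizations can be compared side by side in a few lines of Python. This is only a sketch: the state names `X`/`Y`, the words `a`/`b`, and every probability below are made-up illustrative values, not numbers from the slides.

```python
from math import prod

# Illustrative parameters only; none of these numbers come from the slides.
P_WORD = {"a": 0.5, "b": 0.5}                       # unigram p(w)
P_CLASS = {"X": 0.25, "Y": 0.75}                    # class prior p(z)
P_EMIT = {"X": {"a": 0.9, "b": 0.1},
          "Y": {"a": 0.2, "b": 0.8}}                # emission p(w | z)
P_TRANS = {"start": {"X": 0.5, "Y": 0.5},
           "X": {"X": 0.1, "Y": 0.9},
           "Y": {"X": 0.6, "Y": 0.4}}               # transition p(z_i | z_{i-1})


def unigram_joint(words):
    """prod_i p(w_i)"""
    return prod(P_WORD[w] for w in words)


def class_unigram_joint(states, words):
    """prod_i p(w_i | z_i) p(z_i)"""
    return prod(P_EMIT[z][w] * P_CLASS[z] for z, w in zip(states, words))


def hmm_joint(states, words, trans=P_TRANS):
    """prod_i p(w_i | z_i) p(z_i | z_{i-1}), with z_0 = start"""
    prev, total = "start", 1.0
    for z, w in zip(states, words):
        total *= trans[prev][z] * P_EMIT[z][w]
        prev = z
    return total


print(unigram_joint(["a", "b"]))
print(class_unigram_joint(["X", "Y"], ["a", "b"]))
print(hmm_joint(["X", "Y"], ["a", "b"]))
```

One way to read the comparison: if every row of the transition table equals the class prior, the HMM collapses to the class-based unigram model, since the previous state then carries no information.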

Estimating Parameters from Observed Data

[Figure: two fully observed paths through the 2-state lattice over w_1 w_2 w_3 w_4. Path 1: start → N → V → V → N → end. Path 2: start → N → V → N → N → end. The emission from "end" is not shown.]

Transition Counts (row = previous state, column = next state)

| | N | V | end |
|---|---|---|---|
| start | 2 | 0 | 0 |
| N | 1 | 2 | 2 |
| V | 2 | 1 | 0 |

Emission Counts (row = state, column = word)

| | w_1 | w_2 | w_3 | w_4 |
|---|---|---|---|---|
| N | 2 | 0 | 1 | 2 |
| V | 0 | 2 | 1 | 0 |

Dividing each count by its row total gives the maximum likelihood estimates (MLE).

Transition MLE

| | N | V | end |
|---|---|---|---|
| start | 1 | 0 | 0 |
| N | .2 | .4 | .4 |
| V | 2/3 | 1/3 | 0 |

Emission MLE

| | w_1 | w_2 | w_3 | w_4 |
|---|---|---|---|---|
| N | .4 | 0 | .2 | .4 |
| V | 0 | 2/3 | 1/3 | 0 |

Smooth these values if needed.
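The count-then-normalize recipe can be sketched in Python as follows. The two tagged sequences are read off the slide's lattice figures (N V V N and N V N N over w1..w4); the function names `count_events` and `normalize` are hypothetical helpers introduced just for this illustration.

```python
from collections import Counter, defaultdict

# The two observed (state, word) sequences, as read from the slide's figures.
TAGGED = [
    [("N", "w1"), ("V", "w2"), ("V", "w3"), ("N", "w4")],
    [("N", "w1"), ("V", "w2"), ("N", "w3"), ("N", "w4")],
]


def count_events(tagged_sequences):
    """Count transitions (previous state -> next state) and emissions (state -> word)."""
    transitions = defaultdict(Counter)
    emissions = defaultdict(Counter)
    for sequence in tagged_sequences:
        prev = "start"
        for state, word in sequence:
            transitions[prev][state] += 1
            emissions[state][word] += 1
            prev = state
        transitions[prev]["end"] += 1   # every sequence finishes with a move to "end"
    return transitions, emissions


def normalize(counts):
    """Divide each count by its row total to get relative-frequency (MLE) estimates."""
    return {
        row: {col: n / sum(cols.values()) for col, n in cols.items()}
        for row, cols in counts.items()
    }


transition_counts, emission_counts = count_events(TAGGED)
transition_mle = normalize(transition_counts)
emission_mle = normalize(emission_counts)

print(transition_mle["N"])   # N -> V and N -> end each get 0.4, N -> N gets 0.2
print(emission_mle["V"])     # w2 gets 2/3, w3 gets 1/3
```

Events that were never observed are simply absent from these dictionaries, which corresponds to the zero entries in the tables. Those zeros are exactly the values that smoothing would adjust.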

Outline

- HMM Motivation (Part of Speech) and Brief Definition
- What is Part of Speech?
- HMM Detailed Definition
- HMM Tasks

Hidden Markov Model Tasks

$$p(z_1, w_1, \ldots, z_N, w_N) = \prod_i \underbrace{p(w_i \mid z_i)}_{\text{emission}}\, \underbrace{p(z_i \mid z_{i-1})}_{\text{transition}}$$

1. Calculate the (log) likelihood of an observed sequence w_1, …, w_N.
2. Calculate the most likely sequence of states (for an observed sequence).
3. Learn the emission and transition parameters.

HMM Likelihood Task

Marginalize over all latent sequence joint likelihoods:

$$p(w_1, w_2, \ldots, w_N) = \sum_{z_1, \ldots, z_N} p(z_1, w_1, z_2, w_2, \ldots, z_N, w_N)$$

Q: In a K-state HMM for a length N observation sequence, how many summands (different latent sequences) are there?

A: $K^N$

Goal: Find a way to compute this exponential sum efficiently (in polynomial time).

Like in language modeling, you need to model when to stop generating. This ending state is generally not included in "K."

2 (3)-State HMM Likelihood

[Figure: the full 2-state lattice over w_1, …, w_4, with all start, transition, and emission edges drawn.]

Q: What are the latent sequences here (EOS excluded)?

A:

- (N, w_1), (N, w_2), (N, w_3), (N, w_4)
- (N, w_1), (V, w_2), (N, w_3), (N, w_4)
- (V, w_1), (N, w_2), (N, w_3), (N, w_4)
- (N, w_1), (N, w_2), (N, w_3), (V, w_4)
- (N, w_1), (V, w_2), (N, w_3), (V, w_4)
- (V, w_1), (N, w_2), (N, w_3), (V, w_4)
- (N, w_1), (N, w_2), (V, w_3), (N, w_4)
- (N, w_1), (V, w_2), (V, w_3), (N, w_4)
- (N, w_1), (N, w_2), (V, w_3), (V, w_4)
- (N, w_1), (V, w_2), (V, w_3), (V, w_4)
- … (six more)
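To see where the count of 16 comes from, the latent sequences can be enumerated mechanically. The sketch below uses only the standard library; the variable names are illustrative.

```python
from itertools import product

STATES = ("N", "V")   # K = 2 latent states (the end state is excluded)
LENGTH = 4            # N = 4 observed words

# Every way of assigning one state to each of the four positions.
latent_sequences = list(product(STATES, repeat=LENGTH))

print(len(latent_sequences))   # K ** N = 2 ** 4 = 16
for sequence in latent_sequences:
    print(" ".join(sequence))
```

With K = 2 and N = 4 the list is short, but the same enumeration has K ** N entries in general, which is why summing over it directly does not scale.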

2 (3)-State HMM Likelihood

Transition probabilities (row = previous state, column = next state)

| | N | V | end |
|---|---|---|---|
| start | .7 | .2 | .1 |
| N | .15 | .8 | .05 |
| V | .6 | .35 | .05 |

Emission probabilities (row = state, column = word)

| | w_1 | w_2 | w_3 | w_4 |
|---|---|---|---|---|
| N | .7 | .2 | .05 | .05 |
| V | .2 | .6 | .1 | .1 |

Q: What's the probability of (N, w_1), (V, w_2), (V, w_3), (N, w_4)?

A: (.7 × .7) × (.8 × .6) × (.35 × .1) × (.6 × .05) = 0.00024696

Q: What's the probability of (N, w_1), (V, w_2), (V, w_3), (N, w_4) with ending included (unique ending symbol "#")?

With the ending symbol, the emission table gains a "#" column and an "end" row:

| | w_1 | w_2 | w_3 | w_4 | # |
|---|---|---|---|---|---|
| N | .7 | .2 | .05 | .05 | 0 |
| V | .2 | .6 | .1 | .1 | 0 |
| end | 0 | 0 | 0 | 0 | 1 |

A: (.7 × .7) × (.8 × .6) × (.35 × .1) × (.6 × .05) × (.05 × 1) = 0.00001235

2 (3)-State HMM Likelihood

Using the same transition and emission tables:

Q: What's the probability of (N, w_1), (V, w_2), (N, w_3), (N, w_4)?

A: (.7 × .7) × (.8 × .6) × (.6 × .05) × (.15 × .05) = 0.00005292

Q: What's the probability of (N, w_1), (V, w_2), (N, w_3), (N, w_4) with ending (unique symbol "#")?

A: (.7 × .7) × (.8 × .6) × (.6 × .05) × (.15 × .05) × (.05 × 1) = 0.000002646
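The per-path arithmetic can be checked with a short Python sketch. The tables are the ones given on the slides; the function `path_probability` and its `include_end` flag are hypothetical names used only for this illustration.

```python
# Transition table p(next | previous) and emission table p(word | state) from the slides.
TRANSITION = {
    "start": {"N": 0.7, "V": 0.2, "end": 0.1},
    "N": {"N": 0.15, "V": 0.8, "end": 0.05},
    "V": {"N": 0.6, "V": 0.35, "end": 0.05},
}
EMISSION = {
    "N": {"w1": 0.7, "w2": 0.2, "w3": 0.05, "w4": 0.05},
    "V": {"w1": 0.2, "w2": 0.6, "w3": 0.1, "w4": 0.1},
}
WORDS = ["w1", "w2", "w3", "w4"]


def path_probability(states, words, include_end=False):
    """Multiply one transition and one emission factor per position."""
    prev, prob = "start", 1.0
    for state, word in zip(states, words):
        prob *= TRANSITION[prev][state] * EMISSION[state][word]
        prev = state
    if include_end:
        # "end" emits "#" with probability 1, so only the transition factor matters.
        prob *= TRANSITION[prev]["end"]
    return prob


print(path_probability("NVVN", WORDS))                     # approximately 0.00024696
print(path_probability("NVVN", WORDS, include_end=True))   # approximately 0.000012348
print(path_probability("NVNN", WORDS))                     # approximately 0.00005292
print(path_probability("NVNN", WORDS, include_end=True))   # approximately 0.000002646
```

Both paths share the factors for the first two positions, (.7 × .7) × (.8 × .6), which is the repeated work that the forward algorithm avoids.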

2 (3)-State HMM Likelihood

[Figure: the two paths just computed, N V V N and N V N N, each drawn on its own copy of the lattice over w_1, …, w_4.]

Up through timestep 2, all the computation was the same for both paths. Let's reuse what computations we can.

Solution: pass information "forward" in the graph, e.g., from timestep 2 to 3...

Issue: these are only two of the 16 paths through the trellis.

Solution: … marginalize (sum) out all information from previous timesteps (0 & 1).

Reusing Computation

[Figure: a three-state lattice (A, B, C) at positions i-2, i-1, and i. The nodes at position i-1 store α(i-1, A), α(i-1, B), and α(i-1, C); all three feed into the node z_i = B, which stores α(i, B).]

Let's first consider "any shared path ending with B (AB, BB, or CB) → B".

Assume any necessary information has been properly computed and stored along these paths: α(i-1, A), α(i-1, B), α(i-1, C).

Marginalize across the previous hidden state values:

$$\alpha(i, B) = \sum_{s} \alpha(i-1, s)\; p(B \mid s)\; p(\text{obs at } i \mid B)$$

Computing α at time i-1 will correctly incorporate paths through time i-2: we correctly obey the Markov property.

Forward Probability

$$\alpha(i, B) = \sum_{s'} \alpha(i-1, s')\; p(B \mid s')\; p(\text{obs at } i \mid B)$$

α(i, B) is the total probability of all paths to that state B from the beginning.

In general, α(i, s) is the total probability of all paths:

1. that start from the beginning
2. that end (currently) in s at step i
3. that emit the observation obs at i

Reading the recurrence term by term:

- the sum over s′: what are the immediate ways to get into state s?
- α(i-1, s′): what's the total probability up until now?
- p(s | s′) × p(obs at i | s): how likely is it to get into state s this way?

2 (3)-State HMM Likelihood with Forward Probabilities

Use dynamic programming to build the α left-to-right, with the same transition and emission tables as before (p(N | start) = .7, p(V | start) = .2, p(N | N) = .15, p(V | N) = .8, p(N | V) = .6, p(V | V) = .35).

- α[1, N] = (.7 × .7) = .49
- α[1, V] = (.2 × .2) = .04
- α[2, V] = α[1, N] × (.8 × .6) + α[1, V] × (.35 × .6) = 0.2436
- α[2, N] = α[1, N] × (.15 × .2) + α[1, V] × (.6 × .2) = .0195
- α[3, V] = α[2, V] × (.35 × .1) + α[2, N] × (.8 × .1)
- α[3, N] = α[2, V] × (.6 × .05) + α[2, N] × (.15 × .05)
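The column-by-column update can be reproduced with a small Python sketch. The tables are the slide values; `forward_step` is a hypothetical helper name, and the end state is left out here to mirror the worked numbers.

```python
TRANSITION = {
    "start": {"N": 0.7, "V": 0.2},
    "N": {"N": 0.15, "V": 0.8},
    "V": {"N": 0.6, "V": 0.35},
}
EMISSION = {
    "N": {"w1": 0.7, "w2": 0.2, "w3": 0.05, "w4": 0.05},
    "V": {"w1": 0.2, "w2": 0.6, "w3": 0.1, "w4": 0.1},
}
STATES = ("N", "V")


def forward_step(prev_alpha, word):
    """Build one column of alpha: sum over previous states, then apply the emission."""
    return {
        state: sum(prev_alpha[old] * TRANSITION[old][state] for old in prev_alpha)
        * EMISSION[state][word]
        for state in STATES
    }


alpha = [{"start": 1.0}]   # alpha[0]: all probability mass sits on the start state
for word in ["w1", "w2", "w3", "w4"]:
    alpha.append(forward_step(alpha[-1], word))

print(alpha[1])   # N: about 0.49, V: about 0.04
print(alpha[2])   # N: about 0.0195, V: about 0.2436
print(alpha[3])   # N: about 0.00745425, V: about 0.010086
```

Each column is computed from the previous column alone, so the cost per position is proportional to K², not to the number of paths.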

Forward Algorithm

α: a 2D table, (N+2) × K*

- N+2: number of observations (+2 for the BOS & EOS symbols)
- K*: number of states

Use dynamic programming to build the α left-to-right.

    α = double[N+2][K*]
    α[0][*] = 0.0
    α[0][START] = 1.0

    for(i = 1; i ≤ N+1; ++i) {
      for(state = 0; state < K*; ++state) {
        p_obs = p_emission(obs_i | state)
        for(old = 0; old < K*; ++old) {
          p_move = p_transition(state | old)
          α[i][state] += α[i-1][old] * p_obs * p_move
        }
      }
    }

We still need to learn p_emission and p_transition (with EM if the states are not observed).

Q: What do we return? (How do we return the likelihood of the sequence?)

A: α[N+1][end]
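A runnable Python sketch of this procedure is given below, under some assumptions: it reuses the 2 (3)-state tables from the earlier slides, treats "#" as the EOS observation emitted only by the end state, and keeps only the most recent column of α instead of the full table. The function names are hypothetical. A brute-force sum over all K^N paths is included purely as a cross-check.

```python
from itertools import product

START, END = "start", "end"
STATES = ("N", "V")
TRANSITION = {
    START: {"N": 0.7, "V": 0.2, END: 0.1},
    "N": {"N": 0.15, "V": 0.8, END: 0.05},
    "V": {"N": 0.6, "V": 0.35, END: 0.05},
    END: {},   # assumption: no transitions leave the end state
}
EMISSION = {
    "N": {"w1": 0.7, "w2": 0.2, "w3": 0.05, "w4": 0.05},
    "V": {"w1": 0.2, "w2": 0.6, "w3": 0.1, "w4": 0.1},
    END: {"#": 1.0},
}


def forward_likelihood(words):
    """Return alpha[N+1][end] for the observed words followed by the EOS symbol '#'."""
    alpha = {START: 1.0}   # alpha[0]
    for obs in list(words) + ["#"]:
        new_alpha = {}
        for state in STATES + (END,):
            p_obs = EMISSION[state].get(obs, 0.0)
            new_alpha[state] = sum(
                alpha[old] * TRANSITION[old].get(state, 0.0) * p_obs for old in alpha
            )
        alpha = new_alpha
    return alpha[END]


def brute_force_likelihood(words):
    """Sum the joint probability of every latent sequence (K ** N terms)."""
    total = 0.0
    for states in product(STATES, repeat=len(words)):
        prob, prev = 1.0, START
        for state, word in zip(states, words):
            prob *= TRANSITION[prev][state] * EMISSION[state][word]
            prev = state
        total += prob * TRANSITION[prev][END]
    return total


words = ["w1", "w2", "w3", "w4"]
print(forward_likelihood(words))       # approximately 6.539e-05
print(brute_force_likelihood(words))   # same value, computed the exponential way
```

The forward version does work proportional to N × K² while the brute-force version visits K^N paths; for this tiny example both are instant, but only the former remains feasible as N grows.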

Interactive HMM Example

https://goo.gl/rbHEoc (Jason Eisner, 2002)

Original: http://www.cs.jhu.edu/~jason/465/PowerPoint/lect24-hmm.xls
