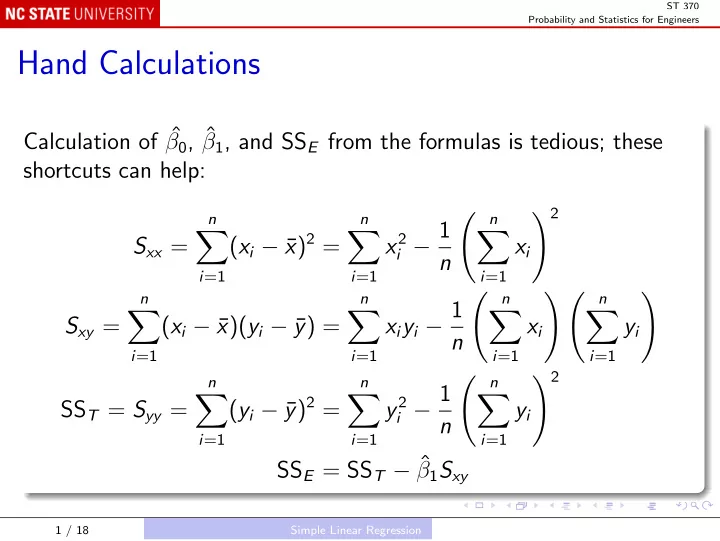

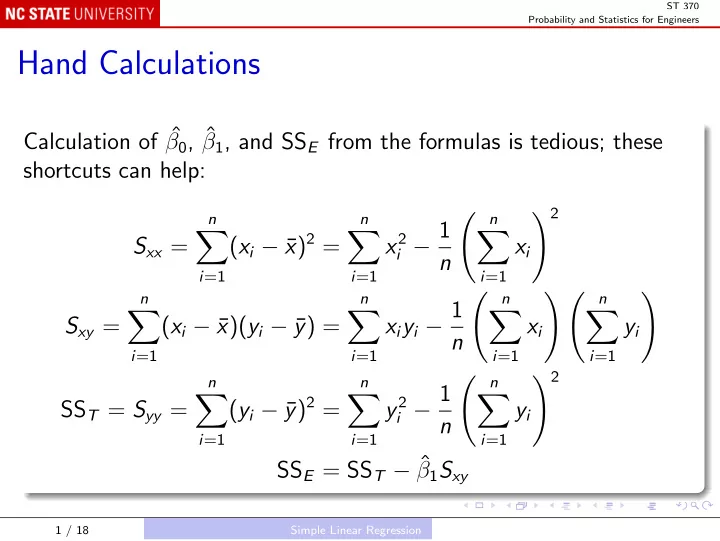

ST 370 Probability and Statistics for Engineers

Simple Linear Regression

Hand Calculations

Calculating $\hat{\beta}_0$, $\hat{\beta}_1$, and $SS_E$ directly from the formulas is tedious; these shortcuts can help:

$$S_{xx} = \sum_{i=1}^{n} (x_i - \bar{x})^2 = \sum_{i=1}^{n} x_i^2 - \frac{1}{n}\left(\sum_{i=1}^{n} x_i\right)^2$$

$$S_{xy} = \sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y}) = \sum_{i=1}^{n} x_i y_i - \frac{1}{n}\left(\sum_{i=1}^{n} x_i\right)\left(\sum_{i=1}^{n} y_i\right)$$

$$SS_T = S_{yy} = \sum_{i=1}^{n} (y_i - \bar{y})^2 = \sum_{i=1}^{n} y_i^2 - \frac{1}{n}\left(\sum_{i=1}^{n} y_i\right)^2$$

$$SS_E = SS_T - \hat{\beta}_1 S_{xy}$$
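The shortcut formulas can be checked numerically. The sketch below uses a small made-up data set (the values of `x` and `y` are hypothetical, not course data) and assumes the usual least squares formulas $\hat{\beta}_1 = S_{xy}/S_{xx}$ and $\hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x}$, then compares the results with `lm()`.

```r
# Hypothetical data, for illustration only (not from the course).
x <- c(1, 2, 3, 4, 5, 6)
y <- c(2.1, 3.9, 6.2, 7.8, 10.1, 11.9)
n <- length(x)

# Shortcut forms: raw sums of squares and products minus a correction term.
Sxx <- sum(x^2)   - sum(x)^2 / n
Sxy <- sum(x * y) - sum(x) * sum(y) / n
SST <- sum(y^2)   - sum(y)^2 / n

# Least squares estimates and the residual sum of squares.
b1  <- Sxy / Sxx
b0  <- mean(y) - b1 * mean(x)
SSE <- SST - b1 * Sxy

# Cross-check against lm(): both comparisons should give TRUE.
fit <- lm(y ~ x)
all.equal(unname(coef(fit)), c(b0, b1))
all.equal(sum(resid(fit)^2), SSE)
```

The shortcut forms subtract two large, nearly equal numbers, so on a computer the centered forms such as `sum((x - mean(x))^2)` are usually the safer choice; the shortcuts are mainly a convenience for hand work.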

Standard errors

Calculations of standard errors begin with an estimate of $\sigma$, the standard deviation of the noise term $\epsilon$.

The least squares residuals are

$$e_i = y_i - (\hat{\beta}_0 + \hat{\beta}_1 x_i)$$

so the residual sum of squares is

$$SS_E = \sum_{i=1}^{n} e_i^2.$$

Because two parameters were estimated in finding the residuals, the residual degrees of freedom are $n - 2$, and the estimate of $\sigma^2$ is

$$\hat{\sigma}^2 = MS_E = \frac{SS_E}{n - 2}.$$
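As a rough illustration of these steps, the following sketch computes the residuals, $SS_E$, and $\hat{\sigma}$ by hand and compares $\hat{\sigma}$ with the value `lm()` reports as the residual standard error. The data are hypothetical.

```r
# Hypothetical data, for illustration only.
x <- c(1, 2, 3, 4, 5, 6)
y <- c(2.1, 3.9, 6.2, 7.8, 10.1, 11.9)
n <- length(y)

fit <- lm(y ~ x)
b   <- unname(coef(fit))        # b[1] = intercept, b[2] = slope

e   <- y - (b[1] + b[2] * x)    # least squares residuals e_i
SSE <- sum(e^2)                 # residual sum of squares
MSE <- SSE / (n - 2)            # two estimated parameters, so n - 2 df
sigma_hat <- sqrt(MSE)

# sigma() extracts the "Residual standard error" from the fit.
all.equal(sigma_hat, sigma(fit))
df.residual(fit) == n - 2
```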

The estimated standard errors of the least squares estimates are

$$\mathrm{se}(\hat{\beta}_1) = \hat{\sigma} \sqrt{\frac{1}{S_{xx}}}$$

and

$$\mathrm{se}(\hat{\beta}_0) = \hat{\sigma} \sqrt{\frac{1}{n} + \frac{\bar{x}^2}{S_{xx}}}.$$
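A minimal sketch of these two formulas, again on hypothetical data, compared against the `Std. Error` column that `summary()` produces for an `lm` fit:

```r
# Hypothetical data, for illustration only.
x <- c(1, 2, 3, 4, 5, 6)
y <- c(2.1, 3.9, 6.2, 7.8, 10.1, 11.9)
n <- length(x)

fit       <- lm(y ~ x)
sigma_hat <- sqrt(sum(resid(fit)^2) / (n - 2))
Sxx       <- sum((x - mean(x))^2)

se_b1 <- sigma_hat * sqrt(1 / Sxx)
se_b0 <- sigma_hat * sqrt(1 / n + mean(x)^2 / Sxx)

# Standard errors as reported by summary(); order is (Intercept), x.
reported <- coef(summary(fit))[, "Std. Error"]
all.equal(unname(reported), c(se_b0, se_b1))
```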

These estimated standard errors, especially $\mathrm{se}(\hat{\beta}_1)$, are used to set up confidence intervals like

$$\hat{\beta}_1 \pm t_{\alpha/2,\nu} \times \text{estimated standard error}$$

and test statistics like

$$t_{\text{obs}} = \frac{\hat{\beta}_1}{\text{estimated standard error}},$$

where $\nu = n - 2$ is the residual degrees of freedom.
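To make the recipe concrete, here is a sketch that builds a 95% confidence interval for the slope and the test statistic for $H_0\colon \beta_1 = 0$ by hand, then checks them against `confint()` and `summary()`. The data and the choice $\alpha = 0.05$ are assumptions made for the example.

```r
# Hypothetical data, for illustration only.
x <- c(1, 2, 3, 4, 5, 6)
y <- c(2.1, 3.9, 6.2, 7.8, 10.1, 11.9)
fit <- lm(y ~ x)

tab <- coef(summary(fit))
est <- tab["x", "Estimate"]
se  <- tab["x", "Std. Error"]
nu  <- df.residual(fit)              # n - 2

alpha  <- 0.05
t_crit <- qt(1 - alpha / 2, df = nu) # upper alpha/2 point of t with nu df

ci      <- c(est - t_crit * se, est + t_crit * se)
t_obs   <- est / se
p_value <- 2 * pt(-abs(t_obs), df = nu)  # two-sided p-value

# Each comparison should give TRUE.
all.equal(ci, unname(confint(fit, "x", level = 1 - alpha)[1, ]))
all.equal(t_obs, tab["x", "t value"])
all.equal(p_value, tab["x", "Pr(>|t|)"])
```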

Predicting a New Observation

Regression equations are often used to predict what the response would be in a new experiment, in which the predictor value is, say, $x_{\text{new}}$.

If we carried out many experiments with $x = x_{\text{new}}$, we would expect the average response to be $\beta_0 + \beta_1 x_{\text{new}}$, which we estimate by

$$\hat{Y}_{\text{new}} = \hat{\beta}_0 + \hat{\beta}_1 x_{\text{new}}.$$

When this mean response is the quantity of interest, you use its estimated standard error

$$\mathrm{se}(\hat{Y}_{\text{new}}) = \hat{\sigma} \sqrt{\frac{1}{n} + \frac{(x_{\text{new}} - \bar{x})^2}{S_{xx}}}$$

to set up confidence intervals.

Note that if $x_{\text{new}} = 0$, $\hat{Y}_{\text{new}}$ is just $\hat{\beta}_0$, and this expression reduces to the estimated standard error given earlier.

Note also that $\mathrm{se}(\hat{Y}_{\text{new}})$ is always at least $\hat{\sigma}/\sqrt{n}$, and increases as $x_{\text{new}}$ gets farther from $\bar{x}$.
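The standard error of the estimated mean response can be reproduced with `predict()` and its `se.fit = TRUE` argument. The sketch below uses hypothetical data and an arbitrary `x_new`, and also checks the two remarks about $x_{\text{new}} = 0$ and the lower bound $\hat{\sigma}/\sqrt{n}$.

```r
# Hypothetical data, for illustration only.
x <- c(1, 2, 3, 4, 5, 6)
y <- c(2.1, 3.9, 6.2, 7.8, 10.1, 11.9)
n <- length(x)

fit       <- lm(y ~ x)
sigma_hat <- sigma(fit)
Sxx       <- sum((x - mean(x))^2)

# Estimated standard error of the mean response at a given x value.
se_mean <- function(x_new) sigma_hat * sqrt(1 / n + (x_new - mean(x))^2 / Sxx)

x_new <- 4.5                                    # arbitrary choice
pred  <- predict(fit, newdata = data.frame(x = x_new), se.fit = TRUE)
all.equal(unname(pred$se.fit), se_mean(x_new))  # should be TRUE

# 95% confidence interval for the mean response, by hand and via predict().
t_crit <- qt(0.975, df = n - 2)
y_hat  <- unname(pred$fit)
ci     <- c(y_hat - t_crit * se_mean(x_new), y_hat + t_crit * se_mean(x_new))
predict(fit, newdata = data.frame(x = x_new), interval = "confidence")

# At x_new = 0 the formula gives se(beta0-hat); at x_new = mean(x) it is minimal.
all.equal(se_mean(0), coef(summary(fit))["(Intercept)", "Std. Error"])
all.equal(se_mean(mean(x)), sigma_hat / sqrt(n))
```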

Often, however, what is wanted is not a confidence interval for the mean response, but a prediction interval for a single new observation $Y_{\text{new}}$ at $x = x_{\text{new}}$.

Now

$$Y_{\text{new}} = \beta_0 + \beta_1 x_{\text{new}} + \epsilon_{\text{new}}$$

so our uncertainty about $Y_{\text{new}}$ comes from two sources:

- We have to use estimates $\hat{\beta}_0$ and $\hat{\beta}_1$ instead of the true values;
- We have no information about the value of $\epsilon_{\text{new}}$.

The best prediction of $Y_{\text{new}}$ is still $\hat{Y}_{\text{new}}$, but the estimated prediction standard error is

$$\mathrm{pse}(Y_{\text{new}}) = \hat{\sigma} \sqrt{1 + \frac{1}{n} + \frac{(x_{\text{new}} - \bar{x})^2}{S_{xx}}}.$$

The extra 1 under the square root accounts for the unknown noise term $\epsilon_{\text{new}}$.

We use the estimated prediction standard error to set up a $100(1 - \alpha)\%$ prediction interval for $Y_{\text{new}}$:

$$\hat{Y}_{\text{new}} \pm t_{\alpha/2,\nu} \times \mathrm{pse}(Y_{\text{new}}).$$
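A sketch of the prediction interval computed by hand and compared with `predict(..., interval = "prediction")`. The data, the value of `x_new`, and the 95% level are assumptions made for the example.

```r
# Hypothetical data, for illustration only.
x <- c(1, 2, 3, 4, 5, 6)
y <- c(2.1, 3.9, 6.2, 7.8, 10.1, 11.9)
n <- length(x)

fit       <- lm(y ~ x)
sigma_hat <- sigma(fit)
Sxx       <- sum((x - mean(x))^2)

x_new <- 4.5                                   # arbitrary choice
y_hat <- sum(coef(fit) * c(1, x_new))          # beta0-hat + beta1-hat * x_new

# Prediction standard error: note the extra "1 +" for the new noise term.
pse    <- sigma_hat * sqrt(1 + 1 / n + (x_new - mean(x))^2 / Sxx)
t_crit <- qt(0.975, df = n - 2)
pi_hand <- c(y_hat - t_crit * pse, y_hat + t_crit * pse)

pi_r <- predict(fit, newdata = data.frame(x = x_new), interval = "prediction")
all.equal(pi_hand, unname(pi_r[1, c("lwr", "upr")]))   # should be TRUE
```

Because of the extra term, the prediction interval is always wider than the confidence interval for the mean response at the same `x_new`.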

In R

The `predict()` method can produce either

- A confidence interval, for the mean response;
- A prediction interval, for a single new observation.

    oxygenLm <- lm(Purity ~ HC, oxygen)
    # confidence interval for mean response:
    predict(oxygenLm, newdata = data.frame(HC = 1.0), interval = "confidence")
    # prediction interval for single observation:
    predict(oxygenLm, newdata = data.frame(HC = 1.0), interval = "prediction")

Indicator variables

Typically, the predictor variable $x$ is a controllable or measured variable; however, sometimes it is an artificial quantity, constructed to convey some information.

An indicator variable is a variable that takes only the values 0 and 1; the observed data can then be divided into two groups: those where $x = 0$ and those where $x = 1$.

When $x = 0$, the regression model

$$Y = \beta_0 + \beta_1 x + \epsilon$$

simplifies to

$$Y = \beta_0 + \epsilon$$

so the mean response for this group is $\beta_0$.

When $x = 1$, the regression model becomes

$$Y = \beta_0 + \beta_1 + \epsilon$$

so the mean response for this group is $\beta_0 + \beta_1$.

So the interpretation of the coefficients $\beta_0$ and $\beta_1$ is:

- $\beta_0$ is the mean response for group 0;
- $\beta_1$ is the difference between the mean response for group 1 and the mean response for group 0.

When we discussed the factorial design with one factor having two levels, we used the model

$$Y_{i,j} = \mu + \tau_i + \epsilon_{i,j}, \quad i = 1 \text{ or } 2, \quad j = 1, 2, \dots, n$$

with the constraint $\tau_1 = 0$; so:

- $\mu$ is the mean response for the first (baseline) level of the factor;
- $\tau_2$ is the difference between the mean response for the second level and the mean response for the first level.
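This interpretation carries over to the fitted coefficients: with a 0/1 predictor, the least squares intercept is the sample mean of group 0 and the slope is the difference of the two sample means. The sketch below checks this on hypothetical data, and also shows that the slope's t statistic matches the pooled two-sample t statistic from `t.test()`.

```r
# Hypothetical two-group data, for illustration only.
x <- c(0, 0, 0, 0, 1, 1, 1, 1)          # indicator: 0 = group 0, 1 = group 1
y <- c(10.2, 9.8, 10.5, 9.9, 12.1, 11.7, 12.4, 12.0)

fit   <- lm(y ~ x)
mean0 <- mean(y[x == 0])
mean1 <- mean(y[x == 1])

# Intercept = group 0 mean; slope = difference of group means.
all.equal(unname(coef(fit)), c(mean0, mean1 - mean0))

# The t statistic for the slope equals the pooled two-sample t statistic.
tt <- t.test(y[x == 1], y[x == 0], var.equal = TRUE)
all.equal(unname(tt$statistic), coef(summary(fit))["x", "t value"])
```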

The two models represent the same features, in a different notation.

That is: the factorial model with a single factor having two levels is essentially the same as the regression model with an indicator variable as the predictor.

That insight is not especially helpful in this context, but when we move on to regression models with more than one predictor it is very useful.

Using indicator variables allows us to put regression models and factorial models into a unified framework, called the general linear model.
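One way to see the equivalence in R is to fit the same hypothetical data twice, once with a two-level factor and once with an explicit 0/1 indicator. With R's default treatment contrasts the first factor level is the baseline, which corresponds to the constraint $\tau_1 = 0$. The level names `"A"` and `"B"` are made up for the example.

```r
# Hypothetical two-level factor data, for illustration only.
level <- factor(c("A", "A", "A", "A", "B", "B", "B", "B"))
y     <- c(10.2, 9.8, 10.5, 9.9, 12.1, 11.7, 12.4, 12.0)
x     <- as.numeric(level == "B")       # explicit indicator for level B

fit_factor    <- lm(y ~ level)          # factorial-style model
fit_indicator <- lm(y ~ x)              # regression on the indicator

# Same estimates: (mu, tau_2) and (beta_0, beta_1).
all.equal(unname(coef(fit_factor)), unname(coef(fit_indicator)))

# The design matrix built for the factor contains the indicator column.
model.matrix(fit_factor)
```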

Coefficient of Determination

How well does a regression model fit a given set of data?

A careful answer must look at the context: does the model give usefully precise predictions? What is "usefully precise" varies from one context to another.

A quick but not careful answer is to look at the coefficient of determination, $R^2$:

$$R^2 = 1 - \frac{SS_E}{SS_T},$$

the fraction of variability in $Y$ explained by the predictor $x$.
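As a quick numerical check on hypothetical data, $R^2$ computed from the two sums of squares agrees with the value stored by `summary()`; in simple linear regression it also equals the squared sample correlation between $x$ and $y$.

```r
# Hypothetical data, for illustration only.
x <- c(1, 2, 3, 4, 5, 6)
y <- c(2.1, 3.9, 6.2, 7.8, 10.1, 11.9)
fit <- lm(y ~ x)

SSE <- sum(resid(fit)^2)          # residual sum of squares
SST <- sum((y - mean(y))^2)       # total sum of squares
R2  <- 1 - SSE / SST

all.equal(R2, summary(fit)$r.squared)   # should be TRUE
all.equal(R2, cor(x, y)^2)              # should be TRUE (one predictor only)
```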

In R

For the Oxygen example, part of the regression output is:

    Coefficients:
                Estimate Std. Error t value Pr(>|t|)
    (Intercept)   74.283      1.593   46.62  < 2e-16 ***
    HC            14.947      1.317   11.35 1.23e-09 ***
    ---
    Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

    Residual standard error: 1.087 on 18 degrees of freedom
    Multiple R-squared:  0.8774, Adjusted R-squared:  0.8706
    F-statistic: 128.9 on 1 and 18 DF,  p-value: 1.227e-09

The $R^2$ is reported as the `Multiple R-squared`, 0.8774.

We might report that "87.74% of the variability in oxygen purity is explained by variations in hydrocarbon levels".
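Several entries in this output are related to each other, and the relations can be checked with a few lines of arithmetic. The sketch below copies the rounded values from the output; the adjusted $R^2$ formula $1 - (1 - R^2)(n - 1)/(n - 2)$ is the standard one for a single predictor and is not stated on the slide. Small discrepancies in the last digit come from working with rounded inputs.

```r
# Values copied (rounded) from the Oxygen regression output.
est      <- c(Intercept = 74.283, HC = 14.947)
se       <- c(Intercept = 1.593,  HC = 1.317)
df_resid <- 18
r2       <- 0.8774

# t value = estimate / standard error.
t_value <- est / se

# Residual df = n - 2, so the sample size is:
n <- df_resid + 2

# Adjusted R-squared for one predictor (standard formula, an addition here).
adj_r2 <- 1 - (1 - r2) * (n - 1) / df_resid

# With one predictor, the F statistic is the square of the slope's t value.
f_from_t <- unname(t_value["HC"]^2)

round(t_value, 2)
round(adj_r2, 4)
round(f_from_t, 1)
```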

Regression with Transformed Variables

In some applications of regression, either the response $Y$ or the predictor $x$ may need to be transformed, and sometimes both.

Example: wind turbine

- Response: DC Output
- Predictor: Wind speed

In R

    turbine <- read.csv("Data/Table-11-05.csv")
    with(turbine, plot(WindSpeed, Output))
    with(turbine, plot(-1 / WindSpeed, Output))

No straight line will give a good fit to the plot of Output versus Wind Speed.

The plot of Output versus (-1 / Wind Speed) looks more appropriate for a straight line fit.

Try both:

    summary(lm(Output ~ WindSpeed, turbine))
    summary(lm(Output ~ I(-1 / WindSpeed), turbine))

Note: many symbols like "-" and "/" that usually represent arithmetic operations have special meanings in a formula, so an expression like `-1 / WindSpeed` must be "wrapped" in the identity function `I(.)`.
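One way to see what `I()` does is to compare it with computing the transformed predictor as its own column first. The sketch below uses a made-up data frame `toy` (the values and the column name `NegInvSpeed` are hypothetical, not the turbine data) and shows that the two approaches give the same fitted coefficients.

```r
# Hypothetical data shaped loosely like a saturating output curve.
toy <- data.frame(
  WindSpeed = c(2.5, 3, 4, 5, 6, 7, 8, 9, 10),
  Output    = c(0.2, 0.6, 1.2, 1.6, 1.8, 2.0, 2.1, 2.2, 2.3)
)

# Option 1: transform inside the formula, protected by I().
fit_wrapped <- lm(Output ~ I(-1 / WindSpeed), data = toy)

# Option 2: compute the transformed predictor as a new column.
toy$NegInvSpeed <- -1 / toy$WindSpeed
fit_column <- lm(Output ~ NegInvSpeed, data = toy)

# Same estimates either way; only the coefficient names differ.
all.equal(unname(coef(fit_wrapped)), unname(coef(fit_column)))
```

Keeping the transformation inside the formula has the practical advantage that `predict()` applies it automatically to a `newdata` frame that contains only `WindSpeed`.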

Note that the second model has $R^2 = 0.98$, while the first has $R^2 = 0.8745$.

That is, using `-1 / WindSpeed` as the predictor accounts for much more of the variability in Output than using `WindSpeed`.

Interestingly, using `-1 / WindSpeed` also results in the model equation

$$\hat{y} = 2.98 - \frac{6.93}{x},$$

where $x$ is the wind speed, which predicts that the Output will never be higher than 2.98, no matter how high the Wind Speed.
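The upper limit can be illustrated by evaluating the fitted equation at increasing wind speeds. This sketch assumes the equation reads as predicted Output = 2.98 + 6.93 × (-1 / WindSpeed), using the rounded coefficients quoted on the slide; the function name `predict_output` and the chosen speeds are made up for the example.

```r
# Rounded coefficients as quoted on the slide (assumed reading of the equation).
b0 <- 2.98   # intercept
b1 <- 6.93   # coefficient on the predictor (-1 / WindSpeed)

# Hypothetical helper: predicted Output at a given wind speed.
predict_output <- function(speed) b0 + b1 * (-1 / speed)

speeds <- c(5, 10, 20, 50, 100, 1000)
data.frame(WindSpeed = speeds, PredictedOutput = predict_output(speeds))

# Predictions increase with speed but stay below the intercept.
all(diff(predict_output(speeds)) > 0)
all(predict_output(speeds) < b0)
```

As the wind speed grows, the term 6.93 / WindSpeed shrinks toward zero, so the prediction approaches 2.98 from below without reaching it.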
