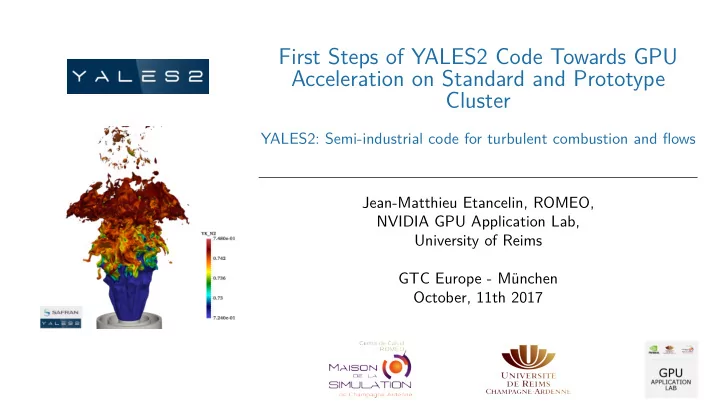

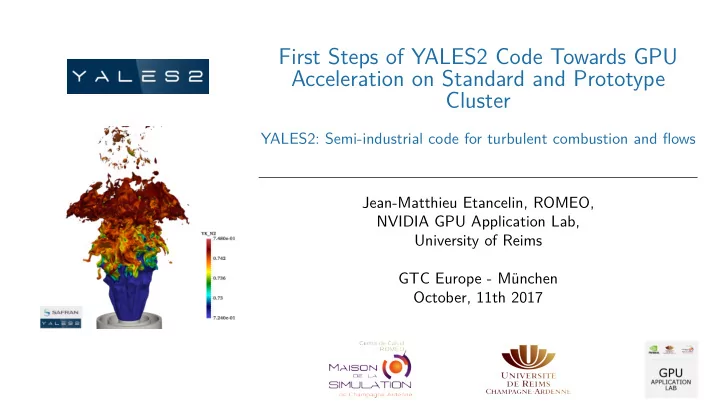

First Steps of YALES2 Code Towards GPU Acceleration on Standard and Prototype Cluster

YALES2: Semi-industrial code for turbulent combustion and flows

Jean-Matthieu Etancelin, ROMEO, NVIDIA GPU Application Lab, University of Reims

GTC Europe - München, October 11th, 2017

1. Introduction: Context
   - ROMEO HPC Center – GPU Application Lab
   - YALES2
2. Existing code profiling
3. Code porting
   - Porting strategies
   - Internal kernel performance
4. Benchmarks
5. Conclusions
   - Limitations and future work

JM Etancelin – First Steps of YALES2 Code Towards GPU Acceleration on Standard and Prototype Cluster

ROMEO HPC Center

University of Reims
- About 25,000 students
- Multidisciplinary university (undergraduate, graduate, PhD, research labs)

ROMEO HPC Center
- HPC resources for both academic and industrial research
- Expertise and teaching in HPC and GPU technologies
- Integrated in the European HPC ecosystem (French Tier 1.5 equip@meso, ETP4HPC)
- Full hybrid cluster (2 × Intel Ivy Bridge + 2 × K20 + IB QDR)

GPU Application Lab

Objectives: intensive exploitation of ROMEO
- Expertise in hybrid HPC, in particular in GPU technologies
- GPU code porting
- Optimization and scaling up to a large number of GPUs
- Training and teaching for ROMEO users

Activities
- Optimization of GPU, hybrid and parallel codes
- Algorithm improvements for the targeted architectures
- Adaptation of numerical methods to hybrid and parallel architectures

Various collaborations
- Local URCA laboratories, and some external collaborations (ONERA, Univ. of Normandy)
- Several application domains (fluid mechanics, chemistry, computer science, applied maths, ...)

Massively parallel solver for multi-physics problems in fluid dynamics, from primary atomisation to pollutant dispersion in complex geometries
- Code developed at CORIA (University of Normandy) since 2007
- V. Moureau, G. Lartigue, P. Bénard (project leaders)
- ∼10 developers (engineers, researchers, PhD students, ...) + contributors

Code
- Two-phase and reactive flow simulations at low Mach number on complex geometries
- LES and DNS solvers on unstructured meshes
- 3D flow simulations on massively parallel architectures
- Used by more than 160 academic and industrial researchers
- 60+ scientific publications

YALES2, a complete library

Main features
- 350,000 lines of code (Fortran 90 and Fortran 2003)
- Portable
- Python interface
- Main solvers:
  - Scalar solver (SCS)
  - Level set solver (LSS)
  - Lagrangian solver (LGS)
  - Incompressible solver (ICS)
  - Variable density solver (VDS)
  - Spray solver (SPS)
  - Magneto-Hydrodynamic solver (MHD)
  - Heat transfer solver (HTS)
  - Chemical reactor solver (CRS)
  - Darcy solver (DCY)
  - Mesh movement solver (MMS)
  - ALE solver (ALE)
  - Linear acoustics solver (ACS)
  - 5+ solvers in progress

HPC with YALES2 in combustion

Multi-scale and multi-physics applications
- More than 85% of the energy used comes from combustion
- Related to many fields (transportation, industry, energy, ...)

Examples in aeronautics:

HPC with YALES2

HPC
- Using up to 10,000 cores on French national clusters (IDRIS, CINES, ...), regional (CRIANN) and local machines
- Using advanced parallel programming techniques (hybrid computing, automatic mesh adaptation, ...)
- Collaborations with Exascale Lab, INTEL/CEA/GENCI/UVSQ
- Code used as a benchmark on prototypes (IDRIS, Ouessant: Power8+P100), Cellule de Veille technologique GENCI
- Collaboration on GPU porting, GPU Application Lab, ROMEO


Profiling the existing code

Specific tools (MAQAO + TAU + PAPI)
- In-depth profiling:
  - Computational time (per function, per internal and external loop)
  - Number of floating-point operations
  - Number of cache misses
  - ...
- Hot spot: matrix-vector product in the Preconditioned Conjugate Gradient (PCG)

Figures: function profiles; external loops profile

Profiling existing code

Identifying the hot spot
- Preconditioned conjugate gradient: 250 lines of code for 55% of total time
- Matrix-vector product: 30 lines of code for 30% of total time


How to port the hot spot to GPU?

Main feature of the code: data-centered structure
- Well-defined hierarchical data structures based on a block decomposition of the mesh
- Every computing loop follows the same skeleton (two levels of nested loops: over mesh blocks, then over vertices, edges or elements)

Code porting

Three major possibilities:
- CUDA/C with Intel compilers
  - Fine management of GPU (code + data)
  - Passing through intermediary C interfaces
  - No deep copy for complex data structures
  - Code rewriting (only for computational loops)
- OpenACC with PGI compilers
  - Non-intrusive for the code (macros)
  - Complementary with the in-progress OpenMP version
  - Strong potential with unified memory
  - No deep copy for complex data structures
- CUDA/Fortran with PGI (not tested)
  - No support for Fortran pointers
  - Similar to CUDA/C without interface

Code porting with CUDA

Key points

Data management
- Exploiting the Fortran/C interoperability for data structures
- Translation of Fortran derived types to C typedefs (automatic, YALES2-specific translation tool)

GPU memory management
- Allocation and management of GPU-specific data and utility arrays
- CPU-GPU transfers optimized with a buffer array (in pinned memory)

Execution model
- Mapping the mesh decomposition and hierarchical data structure to CUDA blocks/threads

Algorithm adaptation: inverse connectivity for mesh exploration
- Loop first over vertices instead of edges (the Finite Volume method works on edges by construction)
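One plausible reading of the buffer array mentioned above is a staging area that gathers many small per-block arrays into a single contiguous region, so that one large transfer replaces many small ones. The sketch below only illustrates that packing idea on the host side, in plain Python. The names `pack_blocks` and `unpack_block` are hypothetical and do not come from YALES2, and no pinned memory or GPU transfer is involved.

```python
from array import array


def pack_blocks(block_arrays):
    """Concatenate per-block arrays into one contiguous buffer.

    Returns the buffer and an offsets list such that the data of block b
    lives in buffer[offsets[b]:offsets[b + 1]].
    Hypothetical helper: it only illustrates the staging-buffer idea.
    """
    offsets = [0]
    buffer = array("d")  # contiguous storage of double-precision values
    for values in block_arrays:
        buffer.extend(values)
        offsets.append(len(buffer))
    return buffer, offsets


def unpack_block(buffer, offsets, b):
    """Return the slice of the packed buffer that belongs to block b."""
    return buffer[offsets[b]:offsets[b + 1]]
```

With such a layout, a single copy of `buffer` would move the data of every block at once, and `offsets` is enough to find each block again on the other side.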

CUDA code porting

Inverse connectivity for mesh exploration

Matrix-vector product computing (op product)

Initial algorithm (not well suited to GPU):

    Foreach block b of mesh                    // blocks
        Foreach edge e of b                    // threads
            vs, ve = vertex(e)
            result(vs) += f(value(e), data(vs), data(ve))
            result(ve) -= f(value(e), data(vs), data(ve))

Algorithm with inverse connectivity:

    Foreach block b of mesh                    // blocks
        Foreach vertex v of b                  // threads
            r = 0                              // register
            Foreach edge e from vertex v
                ve = end(e)
                r += f(value(e), data(v), data(ve))
            Foreach edge e to vertex v
                vs = start(e)
                r -= f(value(e), data(vs), data(v))
            result(v) = r
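The two loop orderings above can be compared on a tiny mesh with a minimal, sequential Python sketch. It is not the YALES2 kernel: `flux` is a hypothetical stand-in for `f`, a single mesh block is assumed, and the inverse connectivity is built on the fly rather than stored. The point is that the vertex-based loop writes each `result[v]` exactly once, which is what removes write conflicts between threads, while producing the same values as the edge-based loop.

```python
def flux(edge_value, data_start, data_end):
    # Hypothetical stand-in for f(value(e), data(vs), data(ve)).
    return edge_value * (data_end - data_start)


def edge_loop(edges, edge_values, data):
    """Initial algorithm: iterate over edges, scatter to both end vertices."""
    result = [0.0] * len(data)
    for e, (vs, ve) in enumerate(edges):
        contribution = flux(edge_values[e], data[vs], data[ve])
        result[vs] += contribution  # two different edges may write the same vertex
        result[ve] -= contribution
    return result


def build_inverse_connectivity(edges, n_vertices):
    """For each vertex, list the edges leaving it and the edges arriving at it."""
    outgoing = [[] for _ in range(n_vertices)]
    incoming = [[] for _ in range(n_vertices)]
    for e, (vs, ve) in enumerate(edges):
        outgoing[vs].append(e)
        incoming[ve].append(e)
    return outgoing, incoming


def vertex_loop(edges, edge_values, data):
    """Inverse-connectivity algorithm: iterate over vertices, gather from edges."""
    outgoing, incoming = build_inverse_connectivity(edges, len(data))
    result = [0.0] * len(data)
    for v in range(len(data)):
        r = 0.0  # local accumulator, playing the role of the register
        for e in outgoing[v]:
            ve = edges[e][1]
            r += flux(edge_values[e], data[v], data[ve])
        for e in incoming[v]:
            vs = edges[e][0]
            r -= flux(edge_values[e], data[vs], data[v])
        result[v] = r  # single write per vertex
    return result
```

The trade-off is that each edge contribution is evaluated twice in the vertex-based version, once from each of its end vertices, in exchange for conflict-free writes.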
