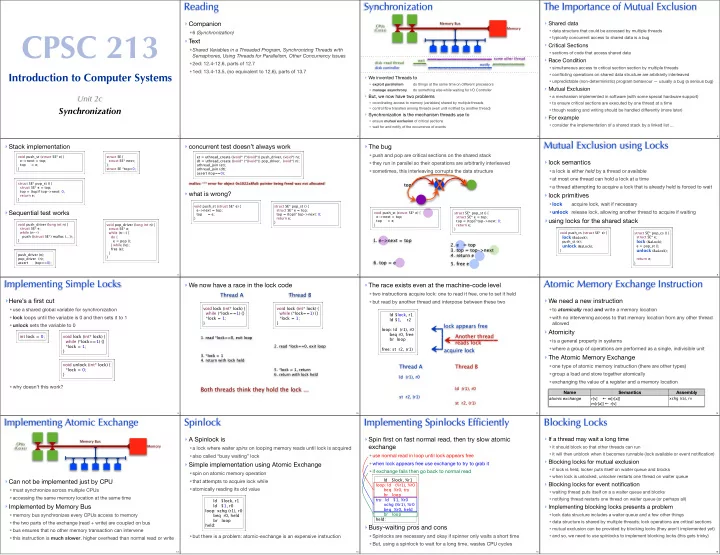

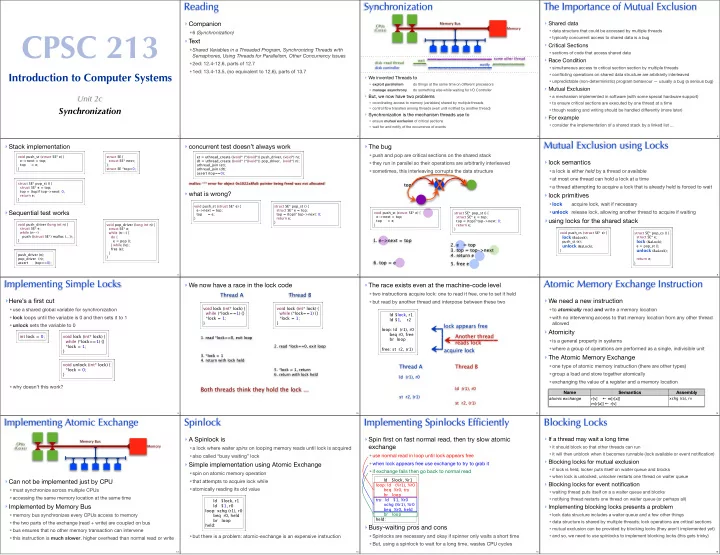

CPSC 213: Introduction to Computer Systems

Unit 2c: Synchronization

Reading
‣ Companion
  • 6 (Synchronization)
‣ Text
  • Shared Variables in a Threaded Program, Synchronizing Threads with Semaphores, Using Threads for Parallelism, Other Concurrency Issues
  • 2ed: 12.4-12.6, parts of 12.7
  • 1ed: 13.4-13.5 (no equivalent to 12.6), parts of 13.7

Synchronization

[Figure: CPUs (cores) and memory on a shared memory bus; a disk-read thread, some other thread, and the disk controller, linked by "wait" and "notify"]

‣ We invented threads to
  • exploit parallelism: do things at the same time on different processors
  • manage asynchrony: do something else while waiting for I/O
‣ But we now have two problems
  • coordinating access to memory (variables) shared by multiple threads
  • control-flow transfers among threads (wait until notified by another thread)
‣ Synchronization is the mechanism threads use to
  • ensure mutual exclusion of critical sections
  • wait for and notify of the occurrence of events

The Importance of Mutual Exclusion
‣ Shared data
  • a data structure that could be accessed by multiple threads
  • typically, concurrent access to shared data is a bug
‣ Critical sections
  • sections of code that access shared data
‣ Race condition
  • simultaneous access to a critical section by multiple threads
  • conflicting operations on a shared data structure are arbitrarily interleaved
  • unpredictable (non-deterministic) program behaviour, usually a bug (a serious bug)
‣ Mutual exclusion
  • a mechanism implemented in software (with some special hardware support)
  • to ensure critical sections are executed by one thread at a time
  • though reading and writing should be handled differently (more later)
‣ For example
  • consider the implementation of a shared stack by a linked list ...
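Both uses of synchronization listed above (mutual exclusion, and waiting for and notifying of events) can be sketched with POSIX threads. This is an assumption: the course uses its own uthread library, whose wait/notify calls are not shown here, so the sketch swaps in `pthread_mutex_t` and `pthread_cond_t`. The names `completer`, `wait_for_read`, and `block_value` are hypothetical; the "disk read" is simulated by a second thread.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>

/* Shared state. The "event" is that a (simulated) disk read has completed. */
static pthread_mutex_t mx      = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t  done_cv = PTHREAD_COND_INITIALIZER;
static bool read_done   = false;
static int  block_value = 0;

/* Stand-in for the disk controller / "some other thread":
   it produces the data and then notifies the waiter. */
static void* completer (void* arg) {
  (void) arg;
  pthread_mutex_lock (&mx);          /* mutual exclusion on the shared state */
  block_value = 42;                  /* hypothetical data "read from disk" */
  read_done   = true;
  pthread_cond_signal (&done_cv);    /* notify one waiting thread */
  pthread_mutex_unlock (&mx);
  return NULL;
}

/* Stand-in for the disk-read thread: start the "I/O", then wait for the event. */
int wait_for_read (void) {
  pthread_t t;
  pthread_create (&t, NULL, completer, NULL);
  pthread_mutex_lock (&mx);
  while (!read_done)                 /* re-check the condition: wakeups can be spurious */
    pthread_cond_wait (&done_cv, &mx);
  int v = block_value;
  pthread_mutex_unlock (&mx);
  pthread_join (t, NULL);
  return v;
}
```

Calling `wait_for_read ()` blocks the caller until `completer` has published the value, then returns 42. The mutex covers the critical section on the shared flag and data; the condition variable covers the wait/notify control transfer.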
‣ Stack implementation

```c
struct SE {
  struct SE* next;
};
struct SE *top = 0;

void push_st (struct SE* e) {
  e->next = top;
  top = e;
}

struct SE* pop_st () {
  struct SE* e = top;
  top = (top)? top->next: 0;
  return e;
}
```

‣ Sequential test works

```c
/* push and pop stand for the stack operations under test (push_st/pop_st here) */
void push_driver (long int n) {
  while (n--)
    push ((struct SE*) malloc (...));
}

void pop_driver (long int n) {
  struct SE* e;
  while (n--) {
    do {
      e = pop ();
    } while (!e);     /* retry until an element is available */
    free (e);
  }
}

push_driver (n);
pop_driver (n);
assert (top==0);
```

‣ Concurrent test doesn't always work

```c
et = uthread_create ((void* (*)(void*)) push_driver, (void*) n);
dt = uthread_create ((void* (*)(void*)) pop_driver, (void*) n);
uthread_join (et);
uthread_join (dt);
assert (top==0);
```

```
malloc: *** error for object 0x1022a8fa0: pointer being freed was not allocated
```

‣ What is wrong? (look again at push_st and pop_st)

‣ The bug
  • push and pop are critical sections on the shared stack
  • they run in parallel, so their operations are arbitrarily interleaved
  • sometimes, this interleaving corrupts the data structure

```
push_st (one thread)        pop_st (another thread)
1. e->next = top
                            2. e = top
                            3. top = top->next
                            4. return e
                            5. free e
6. top = e
```

  • after step 6, push's new element is on top, but its next field still points to the element that pop just freed; a later pop returns that freed element and frees it a second time, which produces the malloc error shown above

Mutual Exclusion using Locks
‣ Lock semantics
  • a lock is either held by a thread or available
  • at most one thread can hold a lock at a time
  • a thread attempting to acquire a lock that is already held is forced to wait
‣ Lock primitives
  • lock: acquire the lock, waiting if necessary
  • unlock: release the lock, allowing another thread to acquire it if one is waiting
‣ Using locks for the shared stack

```c
void push_cs (struct SE* e) {
  lock (&aLock);
  push_st (e);
  unlock (&aLock);
}

struct SE* pop_cs () {
  struct SE* e;
  lock (&aLock);
  e = pop_st ();
  unlock (&aLock);
  return e;
}
```
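The locked stack and its concurrent test can be turned into a runnable sketch. It assumes POSIX threads in place of `uthread_create`/`uthread_join`, and a `pthread_mutex_t` named `aLock` in place of the slides' `lock`/`unlock`; `concurrent_test` is a hypothetical wrapper around the driver code.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>
#include <stdlib.h>

struct SE { struct SE* next; };
static struct SE* top = NULL;
static pthread_mutex_t aLock = PTHREAD_MUTEX_INITIALIZER;   /* assumed lock type */

static void push_st (struct SE* e) { e->next = top; top = e; }
static struct SE* pop_st (void) {
  struct SE* e = top;
  top = top ? top->next : NULL;
  return e;
}

/* Critical sections: at most one thread is inside push_st/pop_st at a time. */
static void push_cs (struct SE* e) {
  pthread_mutex_lock (&aLock);
  push_st (e);
  pthread_mutex_unlock (&aLock);
}
static struct SE* pop_cs (void) {
  pthread_mutex_lock (&aLock);
  struct SE* e = pop_st ();
  pthread_mutex_unlock (&aLock);
  return e;
}

static void* push_driver (void* arg) {
  long n = (long) arg;
  while (n--)
    push_cs (malloc (sizeof (struct SE)));
  return NULL;
}
static void* pop_driver (void* arg) {
  long n = (long) arg;
  while (n--) {
    struct SE* e;
    do { e = pop_cs (); } while (!e);   /* retry until an element is available */
    free (e);
  }
  return NULL;
}

/* Runs push_driver and pop_driver concurrently; returns 1 if the stack ends empty. */
int concurrent_test (long n) {
  pthread_t et, dt;
  pthread_create (&et, NULL, push_driver, (void*) n);
  pthread_create (&dt, NULL, pop_driver, (void*) n);
  pthread_join (et, NULL);
  pthread_join (dt, NULL);
  return top == NULL;
}
```

Because each push and pop now runs as one unit, the interleaving from "The bug" can no longer occur, and `concurrent_test (100000)` should return 1. Switching the drivers back to `push_st`/`pop_st` reintroduces the race.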
Implementing Simple Locks
‣ Here's a first cut
  • use a shared global variable for synchronization
  • lock loops until the variable is 0 and then sets it to 1
  • unlock sets the variable to 0

```c
int aLock = 0;           /* shared lock variable: 0 = available, 1 = held */

void lock (int* lock) {
  while (*lock==1) {}    /* wait until the lock appears free */
  *lock = 1;             /* then claim it */
}

void unlock (int* lock) {
  *lock = 0;
}
```

  • why doesn't this work?

‣ We now have a race in the lock code (both threads run the lock code above)

```
1. Thread A: reads *lock==0, exits loop
2. Thread B: reads *lock==0, exits loop
3. Thread A: *lock = 1
4. Thread A: returns with lock held
5. Thread B: *lock = 1
6. Thread B: returns with lock held
```

  • both threads think they hold the lock ...

‣ The race exists even at the machine-code level
  • two instructions acquire the lock: one to read it free, one to set it held
  • but another thread can read the lock and interpose between these two

```
      ld   $lock, r1      # r1 = address of lock
      ld   $1, r2         # r2 = 1 (the "held" value)
loop: ld   (r1), r0       # read lock
      beq  r0, free       # lock appears free
      br   loop
free: st   r2, (r1)       # acquire lock
```

```
1. Thread A: ld (r1), r0     # lock appears free
2. Thread B: ld (r1), r0     # another thread reads the lock; it also appears free
3. Thread A: st r2, (r1)     # acquires lock
4. Thread B: st r2, (r1)     # also "acquires" lock
```

Atomic Memory Exchange Instruction
‣ We need a new instruction
  • to atomically read and write a memory location
  • with no intervening access to that memory location from any other thread allowed
‣ Atomicity
  • is a general property in systems
  • where a group of operations is performed as a single, indivisible unit
‣ The atomic memory exchange
  • one type of atomic memory instruction (there are other types)
  • groups a load and a store together atomically
  • exchanges the value of a register and a memory location

| Name | Semantics | Assembly |
|---|---|---|
| atomic exchange | r[v] ← m[r[a]]; m[r[a]] ← r[v] (both at once: memory receives the register's previous value) | xchg (ra), rv |
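The exchange in the table can be mimicked in C. This sketch assumes a C11 compiler with `<stdatomic.h>`: `xchg_model` spells out the table's semantics and is deliberately not atomic, while `atomic_exchange` is the standard C11 operation, which compilers typically implement with an atomic instruction (on x86, `xchg`). The names `xchg_model`, `xchg_try_lock`, and `xchg_unlock` are hypothetical.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* The table's semantics as plain C. NOT atomic: another thread
   could run between the two statements, exactly like the broken lock. */
int xchg_model (int* mem, int reg) {
  int old = *mem;   /* register receives the old memory value */
  *mem = reg;       /* memory receives the register's previous value */
  return old;
}

/* C11 atomic_exchange performs the same swap as one indivisible step.
   An old value of 0 means the lock was free and this call has just taken it. */
bool xchg_try_lock (atomic_int* lock) {
  return atomic_exchange (lock, 1) == 0;
}

void xchg_unlock (atomic_int* lock) {
  atomic_store (lock, 0);
}
```

A single `atomic_exchange` both reads the old value and marks the lock held, so there is no gap in which Thread B can read the lock between Thread A's read and Thread A's write.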
Implementing Atomic Exchange

[Figure: CPUs (cores) and memory connected by the memory bus]

‣ Cannot be implemented by the CPU alone
  • must synchronize across multiple CPUs
  • accessing the same memory location at the same time
‣ Implemented by the memory bus
  • the memory bus synchronizes every CPU's access to memory
  • the two parts of the exchange (read + write) are coupled on the bus
  • the bus ensures that no other memory transaction can intervene
  • this instruction is much slower, with higher overhead, than a normal read or write

Spinlock
‣ A spinlock is
  • a lock where the waiter spins on looping memory reads until the lock is acquired
  • also called "busy waiting"
‣ Simple implementation using atomic exchange
  • spin on an atomic memory operation
  • that attempts to acquire the lock while
  • atomically reading its old value

```
      ld   $lock, r1      # r1 = address of lock
      ld   $1, r0         # r0 = 1 (the "held" value)
loop: xchg (r1), r0       # r0 = old lock value; lock = 1
      beq  r0, held       # old value 0: lock was free, now held by us
      br   loop           # old value 1: someone else holds it, retry
held:
```

  • but there is a problem: atomic exchange is an expensive instruction
‣ Busy-waiting pros and cons
  • spinlocks are necessary and okay if the spinner only waits a short time
  • but using a spinlock to wait for a long time wastes CPU cycles

Implementing Spinlocks Efficiently
‣ Spin first on a fast normal read, then try the slow atomic exchange
  • use a normal read in a loop until the lock appears free
  • when the lock appears free, use exchange to try to grab it
  • if the exchange fails, go back to the normal read

```
      ld   $lock, %r1
loop: ld   (%r1), %r0     # fast normal read
      beq  %r0, try       # lock appears free: try to grab it
      br   loop
try:  ld   $1, %r0
      xchg (%r1), %r0     # slow atomic exchange
      beq  %r0, held      # old value 0: lock acquired
      br   loop           # exchange failed: back to normal reads
held:
```
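Both spinlock variants above can be sketched in C, assuming C11 `<stdatomic.h>` and POSIX threads (the slides use SM213 assembly instead). Here `atomic_exchange` plays the role of `xchg`, and a relaxed `atomic_load` plays the role of the fast normal read. The function names and the counter harness are hypothetical.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stddef.h>

/* Lock encoding as in the slides: 0 = free, 1 = held. */

/* Simple spinlock: spin directly on the (expensive) atomic exchange. */
void spin_lock_xchg (atomic_int* l) {
  while (atomic_exchange (l, 1) != 0)
    ;                                        /* old value 1: held by someone else */
}

/* Efficient spinlock: spin on a cheap normal read, exchange only when it looks free. */
void spin_lock_ttas (atomic_int* l) {
  for (;;) {
    while (atomic_load_explicit (l, memory_order_relaxed) != 0)
      ;                                      /* fast read loop */
    if (atomic_exchange (l, 1) == 0)         /* slow atomic grab */
      return;                                /* old value 0: acquired */
    /* exchange failed: another thread won the race; go back to reading */
  }
}

void spin_unlock (atomic_int* l) {
  atomic_store (l, 0);
}

/* Hypothetical harness: threads increment a shared counter under the lock. */
typedef void (*lock_fn) (atomic_int*);
static lock_fn    acquire_fn;
static atomic_int the_lock;                  /* static storage: starts at 0 (free) */
static long       counter;

static void* worker (void* arg) {
  long n = (long) arg;
  for (long i = 0; i < n; i++) {
    acquire_fn (&the_lock);
    counter++;                               /* critical section */
    spin_unlock (&the_lock);
  }
  return NULL;
}

/* Returns the final counter value; nthreads must be at most 8. */
long run_counter (lock_fn f, int nthreads, long per_thread) {
  pthread_t t[8];
  acquire_fn = f;
  counter = 0;
  for (int i = 0; i < nthreads; i++)
    pthread_create (&t[i], NULL, worker, (void*) per_thread);
  for (int i = 0; i < nthreads; i++)
    pthread_join (t[i], NULL);
  return counter;
}
```

Both versions should produce the exact count (for example, 4 threads × 20000 increments gives 80000). The difference is cost: the second version issues the expensive exchange only when the lock already looks free.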
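Blocking Locks
‣ If a thread may wait a long time
  • it should block so that other threads can run
  • it will then unblock when it becomes runnable (lock available or event notification)
‣ Blocking locks for mutual exclusion
  • if the lock is held, the locker puts itself on the waiter queue and blocks
  • when the lock is unlocked, the unlocker restarts one thread on the waiter queue
‣ Blocking locks for event notification
  • the waiting thread puts itself on a waiter queue and blocks
  • the notifying thread restarts one thread on the waiter queue (or perhaps all)
‣ Implementing blocking locks presents a problem
  • the lock data structure includes a waiter queue and a few other things
  • the data structure is shared by multiple threads, so lock operations are themselves critical sections
  • mutual exclusion could be provided by blocking locks, but they aren't implemented yet
  • and so, we need to use spinlocks to implement blocking locks (this gets tricky)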
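The plan above (a spinlock protecting a lock structure that holds a waiter queue) can be sketched with C11 atomics and POSIX threads. User code cannot call the scheduler directly, so this sketch assumes a per-waiter pthread mutex/condition-variable pair as a stand-in for "block" and "restart". It is one possible design, with ownership handed directly to the restarted waiter, and not the course's implementation. All names are hypothetical.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

/* Spinlock (read first, then exchange) guarding the blocking lock's own fields. */
static void spin_acquire (atomic_int* l) {
  for (;;) {
    while (atomic_load_explicit (l, memory_order_relaxed) != 0) ;
    if (atomic_exchange (l, 1) == 0) return;
  }
}
static void spin_release (atomic_int* l) { atomic_store (l, 0); }

/* One queued thread. The mutex/condvar pair is only a stand-in for the
   scheduler's "block this thread" / "make it runnable again" operations. */
struct waiter {
  struct waiter*  next;
  bool            woken;
  pthread_mutex_t m;
  pthread_cond_t  cv;
};
struct blk_lock {
  atomic_int     guard;   /* spinlock: makes lock operations critical sections */
  int            held;    /* 1 while some thread owns the blocking lock */
  struct waiter* head;    /* FIFO waiter queue */
  struct waiter* tail;
};

void blk_acquire (struct blk_lock* bl) {
  spin_acquire (&bl->guard);
  if (!bl->held) {                       /* available: take it */
    bl->held = 1;
    spin_release (&bl->guard);
    return;
  }
  struct waiter w = { .next = NULL, .woken = false };
  pthread_mutex_init (&w.m, NULL);
  pthread_cond_init (&w.cv, NULL);
  if (bl->tail) bl->tail->next = &w; else bl->head = &w;   /* enqueue self */
  bl->tail = &w;
  spin_release (&bl->guard);             /* never block while holding the spinlock */
  pthread_mutex_lock (&w.m);             /* block until restarted by blk_release */
  while (!w.woken)
    pthread_cond_wait (&w.cv, &w.m);
  pthread_mutex_unlock (&w.m);
  pthread_cond_destroy (&w.cv);
  pthread_mutex_destroy (&w.m);
  /* held is still 1: the unlocker handed ownership directly to this thread */
}

void blk_release (struct blk_lock* bl) {
  spin_acquire (&bl->guard);
  struct waiter* w = bl->head;
  if (w) {                               /* dequeue first waiter, keep held = 1 */
    bl->head = w->next;
    if (!bl->head) bl->tail = NULL;
  } else {
    bl->held = 0;                        /* nobody waiting: lock becomes available */
  }
  spin_release (&bl->guard);
  if (w) {                               /* restart the dequeued thread */
    pthread_mutex_lock (&w->m);
    w->woken = true;
    pthread_cond_signal (&w->cv);
    pthread_mutex_unlock (&w->m);
  }
}

/* Hypothetical harness: threads increment a counter under the blocking lock. */
static struct blk_lock the_blk;          /* zero-initialized: free, empty queue */
static long shared_count;

static void* blk_worker (void* arg) {
  for (long i = 0, n = (long) arg; i < n; i++) {
    blk_acquire (&the_blk);
    shared_count++;                      /* critical section */
    blk_release (&the_blk);
  }
  return NULL;
}

long run_blocking (int nthreads, long per_thread) {   /* nthreads <= 8 */
  pthread_t t[8];
  shared_count = 0;
  for (int i = 0; i < nthreads; i++)
    pthread_create (&t[i], NULL, blk_worker, (void*) per_thread);
  for (int i = 0; i < nthreads; i++)
    pthread_join (t[i], NULL);
  return shared_count;
}
```

The spinlock is held only for the few instructions that update `held` and the queue, which is the short wait spinlocks are suited to. The `woken` flag ensures that a restart arriving before the waiter actually blocks is not lost.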
