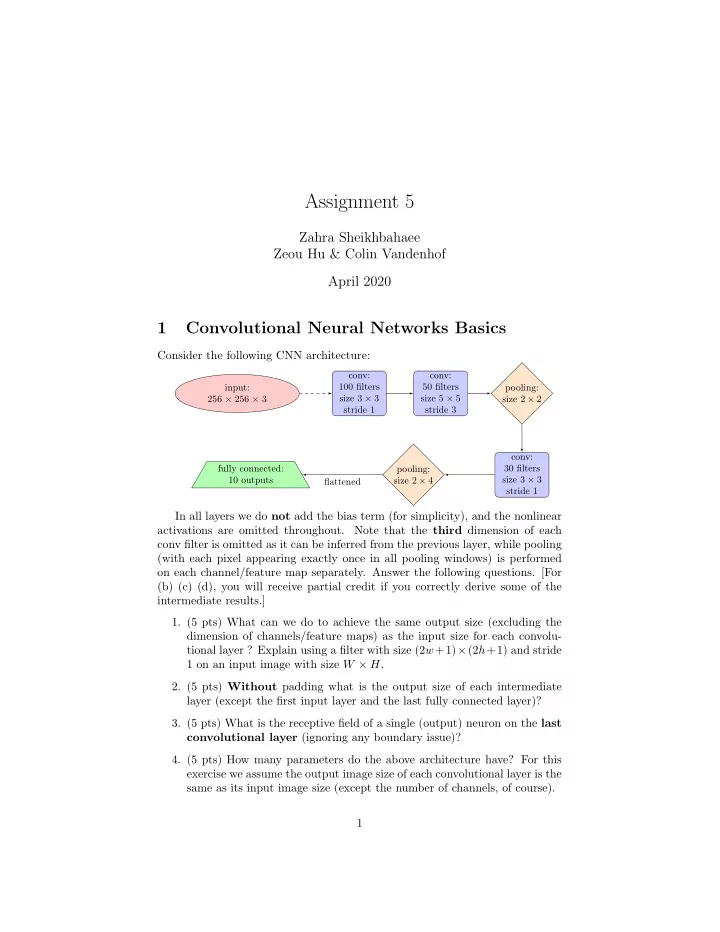

Assignment 5 Zahra Sheikhbahaee Zeou Hu & Colin Vandenhof April 2020 1 Convolutional Neural Networks Basics Consider the following CNN architecture: conv: conv: input: 100 filters 50 filters pooling: size 3 × 3 size 5 × 5 256 × 256 × 3 size 2 × 2 stride 1 stride 3 conv: fully connected: 30 filters pooling: size 3 × 3 10 outputs size 2 × 4 flattened stride 1 In all layers we do not add the bias term (for simplicity), and the nonlinear activations are omitted throughout. Note that the third dimension of each conv filter is omitted as it can be inferred from the previous layer, while pooling (with each pixel appearing exactly once in all pooling windows) is performed on each channel/feature map separately. Answer the following questions. [For (b) (c) (d), you will receive partial credit if you correctly derive some of the intermediate results.] 1. (5 pts) What can we do to achieve the same output size (excluding the dimension of channels/feature maps) as the input size for each convolu- tional layer ? Explain using a filter with size (2 w +1) × (2 h +1) and stride 1 on an input image with size W × H . 2. (5 pts) Without padding what is the output size of each intermediate layer (except the first input layer and the last fully connected layer)? 3. (5 pts) What is the receptive field of a single (output) neuron on the last convolutional layer (ignoring any boundary issue)? 4. (5 pts) How many parameters do the above architecture have? For this exercise we assume the output image size of each convolutional layer is the same as its input image size (except the number of channels, of course). 1

2 CNN Implementation Note : Please mention your Python version (and maybe the version of all other packages) in the code. In this exercise you are going to run some experiments involving CNNs. You need to know Python and install the following libraries: PyTorch, Numpy and all their dependencies. You can find detailed instructions and tutorials for each of these libraries on the respective websites. For all experiments, running on CPU is sufficient. You do not need to run the code on GPUs. Before start, we suggest you review what we learned about each layer in CNN, and read at least this tutorial. 1. Train a VGG11 net on the MNIST dataset. VGG11 was an earlier version of VGG16 and can be found as model A in Table 1 of this paper, whose Section 2.1 also gives you all the details about each layer. The goal is to get as close to 0 loss as possible. Note that our input dimension is different from the VGG paper. You need to resize each image in MNIST from its original size 28 × 28 to 32 × 32 [why?], and it might be necessary to change at least one other layer of VGG11. [This experiment will take up to 1 hour on a CPU, so please be cautious of your time. If this running time is not bearable, you may cut the training set by 1/10, so only have ∼ 600 images per class instead of the regular ∼ 6000.] 2. Once you’ve done the above, the next goal is to inspect the training pro- cess. Create the following plots: (a) test accuracy vs the number of epochs (say 3 ∼ 5) (b) training accuracy vs the number of epochs (c) test loss vs the number of epochs (d) training loss vs the number of epochs [If running more than 1 epoch is computationally infeasible, simply run 1 epoch and try to record the accuracy / loss every few minibatches.] 3 Recurrent Neural Network Implementation In this question, you will implement a recurrent neural network to classify Twitter messages as positive or negative sentiment. You are provided two files, tweets train.csv and tweets test.csv that contain the training data and test data. Each line of the files consists of a tweet and its sentiment, either negative (0) or positive (1). To classify the tweets, implement an LSTM using PyTorch. Each tweet should be treated as a sequence of ASCII characters. The input to the LSTM at each step should be a one-hot encoding of the ASCII character. Since there are 128 ASCII characters, this should be a vector of size 128. 2

The size of the hidden layer should also be 128. Use a linear layer to map the final hidden layer to the output (size 2). Finally, apply the softmax function to obtain class probabilities. Use negative log likelihood as the loss function with stochastic gradient descent or any related optimization algorithm. Train on the training set using a batch size of 32 and a learning rate of 0.0001 for at least 100000 iterations. Plot curves for (a) the test set accuracy vs. number of iterations and (b) the training set accuracy vs. number of iterations. You may compute accuracy every 1000 iterations to speed up the process. 4 Variational Auto-Encoder In Variational autoencoder, we optimize the evidence lower bound � � L ( θ, φ ; x ) = − D KL [ q φ ( z | x ) || p θ ( z )] + E q φ ( z | x ) log p θ ( x | z ) where q φ ( z | x ) is the variational distribution with variational parameter φ which approximates the posterior p ( z | x ). - Given z ∈ R 1 , p ( z ) ∼ N (0 , 1) and q ( z | x ) ∼ N ( µ z , σ 2 z ), write down D KL [ q φ ( z | x ) || p θ ( z )] in terms of µ z and σ z . - Assuming q φ ( z | x ) is a Gaussian, the decoder network computes its mean µ z and its variance σ 2 . Why do we model σ 2 z in log space using neural networks instead of directly model σ 2 z ? Why do we need the reparame- terization trick, instead of directly sampling from the latent distribution N ( µ z , σ 2 z )? - For decoder, we use sometimes a Multi-Layer Perceptions with either Bernoulli (in case of binary data) or Gaussian (in case of real-valued data) outputs. The expected reconstruction error (cross-entropy term) � log p θ ( x ( i ) | z ) � can be estimated by sampling, that is E q φ ( z | x ( i ) ) L = 1 � � � log p θ ( x ( i ) | z ) log p θ ( x ( i ) | z ) � � E q φ ( z | x ( i ) ) L l =1 if data x given z follows a multivariate Bernoulli with dimension D , how should this reconstruction loss term look like? 5 The Final Small-Project This part of assignment should be done by the students who decided to attend the final exam. Collaboration policy : This assignment should be done individually. 3

5.1 Implementation of VAE In the penultimate lecture, we have learned about approximating posteriors with variational inference, using the reparameterization trick for VI, and deep gen- erative models for images using variational autoencoders. We also learnt more about convolutional neural networks in the final lecture and their applications in the computer vision. In this project, you will bring together both constructs to train a model that can generate images of different classes of galaxies. The data is available here. The training images are JPG images of 61578 galaxies. The probability distributions for the classifications for each of the training images are given in solutions training rev1 . There are 37 different classes of galaxy types and at the end of training process, you should be able to generate these categories and compare with the original images. You’ll need to install PyTorch to use the starter code. The objective is to minimize the reconstruction error using cross-entropy and the Kullback–Leibler divergence. In the following you’ll find a code snippet that you can start to complete and make it work for this dataset. import torch import torch.utils.data from torch import nn, optim from torch.nn import functional as F from torchvision import datasets, transforms from torchvision.utils import save_image class VAE(nn.Module): def __init__(self, input_chanel=3, zdim=512, image_size=424): super(VAE, self).__init__() self.z_dim = z_dim # encoder part self.encoder_conv1 = nn.Conv2d(input_chanel, zdim//16, kernel_size=4, stride=2, padding=1) self.encoder_bn1 = nn.BatchNorm2d(zdim//16) #You must extend this part #decoder part self.decoder_conv1 = nn.ConvTranspose2d(zdim, zdim//2, kernel_size=4, stride=1, padding=0) self.decoder_bn1 = nn.BatchNorm2d(zdim//2) #You must extend this part def encode(self, x): x1 = F.leaky_relu(self.encoder_bn1(self.encoder_conv1(x)), negative_slope=0.2) #Fill this part with some CNN architecture def decode(self, z): #complete this part 4

Recommend

More recommend