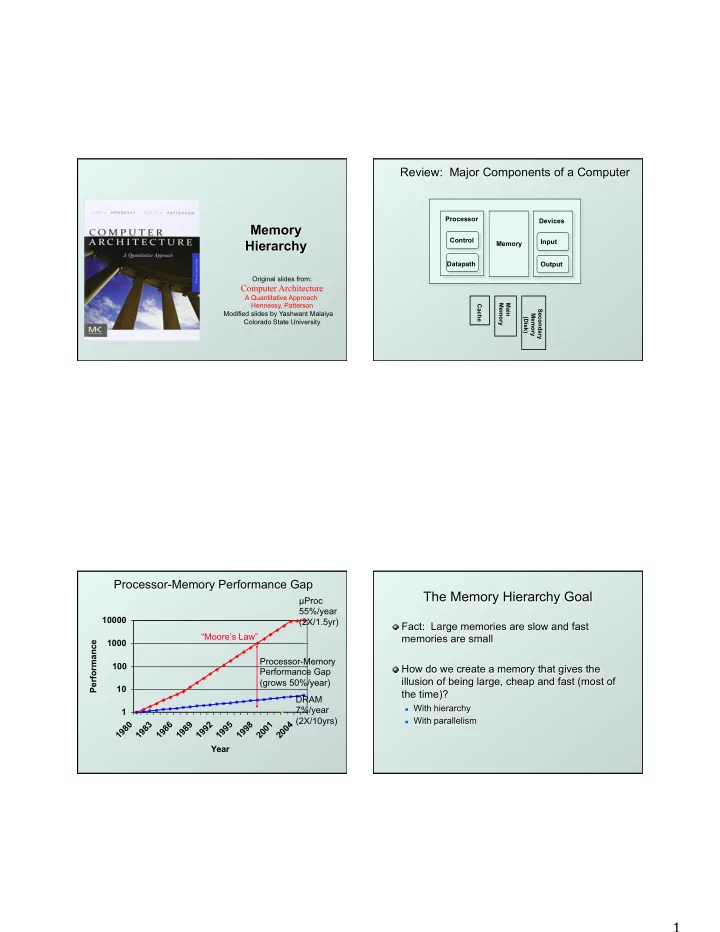

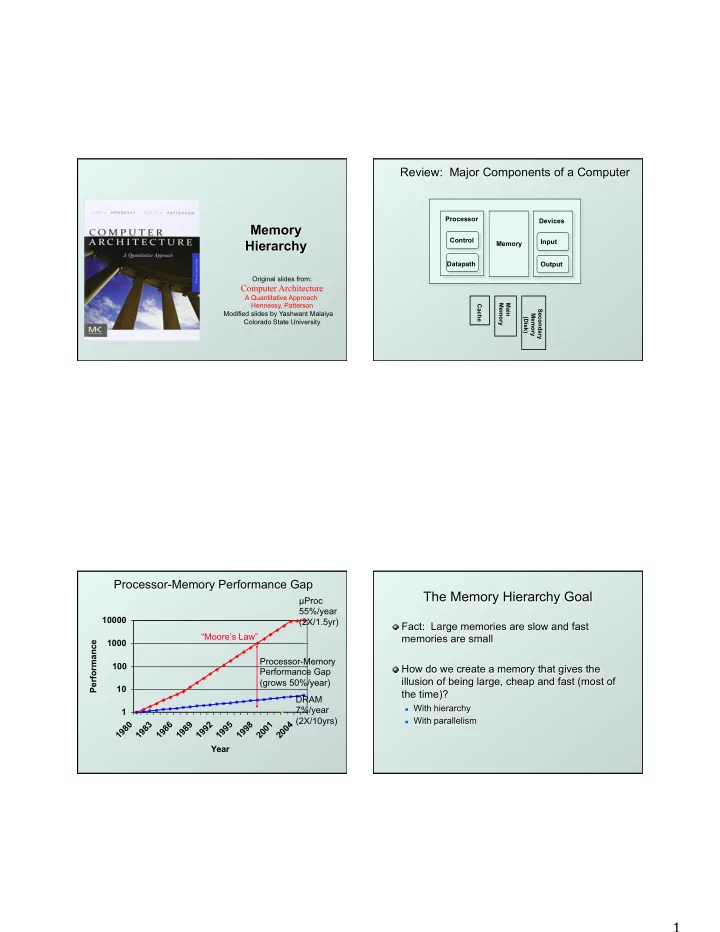

Review: Major Components of a Computer Processor Devices Memory Control Hierarchy Input Memory Datapath Output Original slides from: Computer Architecture A Quantitative Approach Hennessy, Patterson Memory Main Cache Secondary Modified slides by Yashwant Malaiya Memory (Disk) Colorado State University Processor-Memory Performance Gap The Memory Hierarchy Goal µ Proc 55%/year 10000 (2X/1.5yr) Fact: Large memories are slow and fast “Moore’s Law” memories are small 1000 Performance Processor-Memory 100 How do we create a memory that gives the Performance Gap illusion of being large, cheap and fast (most of (grows 50%/year) 10 the time)? DRAM n With hierarchy 7%/year 1 (2X/10yrs) n With parallelism 0 3 6 9 2 5 8 1 4 8 8 8 8 9 9 9 0 0 9 9 9 9 9 9 9 0 0 1 1 1 1 1 1 1 2 2 Year 1

§5.1 Introduction A Typical Memory Hierarchy Memory Technology q Take advantage of the principle of locality to present the user with as much memory as is available in the cheapest technology at the Static RAM (SRAM) speed offered by the fastest technology n 0.5-2.5ns, 2010: $2000–$5000 per GB (2015: same?) Dynamic RAM (DRAM) On-Chip Components n 50-70ns, 2010: $20–$75 per GB (2015: <$10 per GB) Control Flash Memory Cache Secondary Second ITLB DTLB Instr n 70-150ns, 2010: $4-$12 per GB (2015: $.14 per GB) Main Memory Level Memory (Disk) Datapath RegFile Cache Magnetic disk Cache (DRAM) Data (SRAM) n 5ms-20ms, $0.2-$2.0 per GB (2015: $.7 per GB) Ideal memory Speed (%cycles): ½ ’s 1’s 10’s 100’s 10,000’s Size (bytes): 100’s 10K’s M’s G’s T’s n Access time of SRAM Cost: highest lowest Chapter 5 — Large and n Capacity and cost/GB of disk Fast: Exploiting Memory Hierarchy — 6 Principle of Locality Taking Advantage of Locality Programs access a small proportion of their Memory hierarchy address space at any time Store everything on disk Temporal locality Copy recently accessed (and nearby) items from n Items accessed recently are likely to be accessed disk to smaller DRAM memory again soon n Main memory n e.g., instructions in a loop, induction variables Copy more recently accessed (and nearby) Spatial locality items from DRAM to smaller SRAM memory n Items near those accessed recently are likely to be n Cache memory attached to CPU accessed soon n E.g., sequential instruction access, array data Chapter 5 — Large and Chapter 5 — Large and Fast: Exploiting Memory Fast: Exploiting Memory Hierarchy — 7 Hierarchy — 8 2

Memory Hierarchy Levels Characteristics of the Memory Hierarchy Block (aka line): unit of copying Processor Inclusive– n May be multiple words 4-8 bytes (word) what is in L1$ If accessed data is present in is a subset of upper level Increasing L1$ what is in L2$ distance 8-32 bytes (block) is a subset of n Hit: access satisfied by upper level from the L2$ what is in MM Hit ratio: hits/accesses processor that is a If accessed data is absent 1 to 4 blocks in access subset of is in Main Memory time n Miss: block copied from lower level SM 1,024+ bytes (disk sector = page) Time taken: miss penalty Miss ratio: misses/accesses Secondary Memory = 1 – hit ratio n Then accessed data supplied from upper level (Relative) size of the memory at each level Chapter 5 — Large and Fast: Exploiting Memory Hierarchy — 9 §5.2 The Basics of Caches Cache Size Cache Memory Cache memory n The level of the memory hierarchy closest to the CPU hit rate Given accesses X 1 , … , X n–1 , X n 1/(cycle time) n How do we know if the data is present? n Where do we look? optimum Increasing cache size Chapter 5 — Large and Fast: Exploiting Memory 11 Hierarchy — 12 3

Block Size Considerations Increasing Hit Rate Hit rate increases with cache size. Larger blocks should reduce miss rate Hit rate mildly depends on block size. n Due to spatial locality 100% But in a fixed-sized cache 0% n Larger blocks ⇒ fewer of them miss rate = 1 – hit rate 64KB Decreasing Decreasing 16KB hit rate, h More competition ⇒ increased miss rate chances of chances of covering large getting n Larger blocks ⇒ pollution 95% 5% data locality fragmented data Larger miss penalty Cache size = 4KB n Can override benefit of reduced miss rate n Early restart and critical-word-first can help 10% 90% 16B 32B 64B 128B 256B Block size Chapter 5 — Large and Fast: Exploiting Memory Hierarchy — 13 14 Cache Misses Static vs Dynamic RAMs On cache hit, CPU proceeds normally On cache miss n Stall the CPU pipeline n Fetch block from next level of hierarchy n Instruction cache miss Restart instruction fetch n Data cache miss Complete data access Chapter 5 — Large and Chapter 5 — Large and Fast: Exploiting Memory Fast: Exploiting Memory Hierarchy — 15 Hierarchy — 16 4

Random Access Memory (RAM) Six-Transistor SRAM Cell Address bits bit bit Address decoder Memory cell array Read/write circuits Word line Bit line Bit line Data bits 17 18 Dynamic RAM (DRAM) Cell Advanced DRAM Organization Bits in a DRAM are organized as a rectangular Bit array line n DRAM accesses an entire row Word line n Burst mode: supply successive words from a row with reduced latency Double data rate (DDR) DRAM n Transfer on rising and falling clock edges Quad data rate (QDR) DRAM “Single-transistor DRAM cell” Robert Dennard’s 1967 invevention n Separate DDR inputs and outputs Chapter 5 — Large and Fast: Exploiting Memory 19 Hierarchy — 20 5

DRAM Generations Average Access Time 300 Hit time is also important for performance Year Capacity $/GB 1980 64Kbit $1500000 Average memory access time (AMAT) 250 1983 256Kbit $500000 n AMAT = Hit time + Miss rate × Miss penalty 1985 1Mbit $200000 200 Example 1989 4Mbit $50000 Trac 150 Tcac 1992 16Mbit $15000 n CPU with 1ns clock, hit time = 1 cycle, miss penalty = 1996 64Mbit $10000 100 20 cycles, I-cache miss rate = 5% 1998 128Mbit $4000 n AMAT = 1 + 0.05 × 20 = 2ns 50 2000 256Mbit $1000 2 cycles per instruction 2004 512Mbit $250 0 2007 1Gbit $50 '80 '83 '85 '89 '92 '96 '98 '00 '04 '07 Chapter 5 — Large and Chapter 5 — Large and Fast: Exploiting Memory Fast: Exploiting Memory Hierarchy — 21 Hierarchy — 22 Performance Summary Multilevel Caches When CPU performance increased Primary cache attached to CPU n Miss penalty becomes more significant n Small, but fast Can’t neglect cache behavior when evaluating Level-2 cache services misses from primary system performance cache n Larger, slower, but still faster than main memory Main memory services L-2 cache misses Some high-end systems include L-3 cache Chapter 5 — Large and Chapter 5 — Large and Fast: Exploiting Memory Fast: Exploiting Memory Hierarchy — 23 Hierarchy — 24 6

§5.4 Virtual Memory Interactions with Advanced CPUs Virtual Memory Use main memory as a “cache” for secondary Out-of-order CPUs can execute instructions (disk) storage during cache miss n Managed jointly by CPU hardware and the operating n Pending store stays in load/store unit system (OS) n Dependent instructions wait in reservation stations Programs share main memory Independent instructions continue n Each gets a private virtual address space holding its frequently used code and data Effect of miss depends on program data flow n Protected from other programs n Much harder to analyse CPU and OS translate virtual addresses to physical addresses n Use system simulation n VM “block” is called a page n VM translation “miss” is called a page fault Chapter 5 — Large and Chapter 5 — Large and Fast: Exploiting Memory Fast: Exploiting Memory Hierarchy — 25 Hierarchy — 26 §6.3 Disk Storage Disk Storage Disk Sectors and Access Nonvolatile, rotating magnetic storage Each sector records n Sector ID n Data (512 bytes, 4096 bytes proposed) n Error correcting code (ECC) Used to hide defects and recording errors n Synchronization fields and gaps Access to a sector involves n Queuing delay if other accesses are pending n Seek: move the heads n Rotational latency n Data transfer n Controller overhead Chapter 6 — Storage and Chapter 6 — Storage and Other I/O Topics — 27 Other I/O Topics — 28 7

Recommend

More recommend