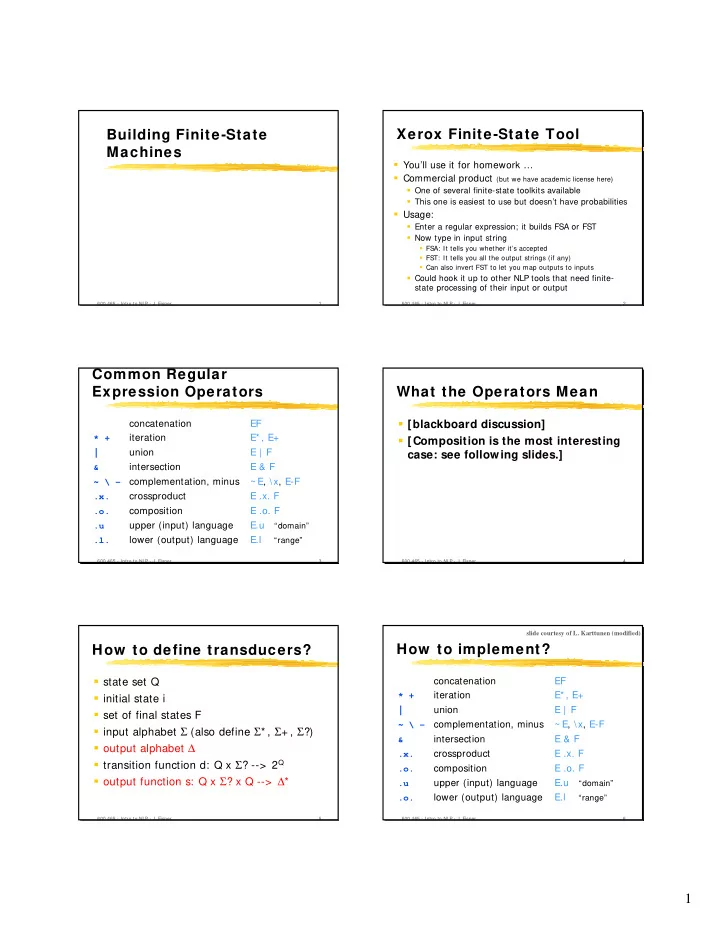

Xerox Finite-State Tool

- You'll use it for homework …
- Commercial product (but we have an academic license here)
- One of several finite-state toolkits available
- This one is the easiest to use but doesn't have probabilities
- Usage:
  - Enter a regular expression; it builds an FSA or FST
  - Now type in an input string
    - FSA: it tells you whether the string is accepted
    - FST: it tells you all the output strings (if any)
    - You can also invert the FST to map outputs back to inputs
  - You could hook it up to other NLP tools that need finite-state processing of their input or output

600.465 - Intro to NLP - J. Eisner

Building Finite-State Machines

Common Regular Expression Operators

| Operator | Meaning | Example |
|---|---|---|
| (juxtaposition) | concatenation | `EF` |
| `*` `+` | iteration | `E*`, `E+` |
| `\|` | union | `E \| F` |
| `&` | intersection | `E & F` |
| `~` `\` `-` | complementation, minus | `~E`, `\x`, `E-F` |
| `.x.` | crossproduct | `E .x. F` |
| `.o.` | composition | `E .o. F` |
| `.u` | upper (input) language ("domain") | `E.u` |
| `.l` | lower (output) language ("range") | `E.l` |

What the Operators Mean

- [blackboard discussion]
- [Composition is the most interesting case: see following slides.]

slide courtesy of L. Karttunen (modified)

How to implement?

The same operators: concatenation `EF`, iteration `E*` and `E+`, union `E | F`, intersection `E & F`, complementation and minus `~E`, `\x`, `E-F`, crossproduct `E .x. F`, composition `E .o. F`, upper (input) language `E.u`, lower (output) language `E.l`.

How to define transducers?

- state set $Q$
- initial state $i$
- set of final states $F$
- input alphabet $\Sigma$ (also define $\Sigma^*$, $\Sigma^+$, $\Sigma^?$)
- output alphabet $\Delta$
- transition function $d: Q \times \Sigma^? \to 2^Q$
- output function $s: Q \times \Sigma^? \times Q \to \Delta^*$
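The seven-part definition above maps directly onto a small data structure. The following is a hypothetical Python encoding, not XFST's API: the class `FST`, its methods `add_arc`, `transduce`, and `accepts`, and the use of `""` for ε are all assumptions made for illustration. `transduce` returns every output string for an input, which is what the XFST usage notes describe for an FST. It assumes the machine has no cycle of ε-input arcs, since such a cycle could yield infinitely many outputs.

```python
from dataclasses import dataclass, field

EPS = ""  # assumption: the empty string stands for ε, so Σ? = Σ ∪ {EPS}


@dataclass
class FST:
    """Hypothetical encoding of a transducer (Q, i, F, Σ, Δ, d, s)."""
    states: set        # Q
    initial: object    # i
    finals: set        # F
    sigma: set         # input alphabet Σ
    delta: set         # output alphabet Δ
    d: dict = field(default_factory=dict)  # d: (q, a) -> set of next states
    s: dict = field(default_factory=dict)  # s: (q, a, q') -> output string in Δ*

    def add_arc(self, q, a, out, r):
        # s is a function of (q, a, r), so re-adding the same triple overwrites out
        self.d.setdefault((q, a), set()).add(r)
        self.s[(q, a, r)] = out

    def transduce(self, x):
        """Return all output strings for input x (assumes no ε-input cycles)."""
        results = set()

        def walk(q, pos, out):
            if pos == len(x) and q in self.finals:
                results.add(out)
            for r in self.d.get((q, EPS), ()):      # ε-input arcs read nothing
                walk(r, pos, out + self.s[(q, EPS, r)])
            if pos < len(x):
                for r in self.d.get((q, x[pos]), ()):
                    walk(r, pos + 1, out + self.s[(q, x[pos], r)])

        walk(self.initial, 0, "")
        return results

    def accepts(self, x):
        # Viewed as an FSA: accepted iff at least one accepting path exists
        return bool(self.transduce(x))


# A toy "Greek" transducer (hypothetical; states and arcs invented for illustration)
greek = FST(states={0, 1, 2, 3, 4, 5}, initial=0, finals={5},
            sigma=set("abcde"), delta=set("αβγδεϵ"))
for q, a, out, r in [(0, "a", "α", 1), (1, "b", "β", 2),
                     (2, "c", "γ", 3), (2, "e", "ε", 3), (2, "e", "ϵ", 4),
                     (3, "d", "δ", 5), (4, "d", "δ", 5)]:
    greek.add_arc(q, a, out, r)

print(sorted(greek.transduce("abed")))  # prints ['αβεδ', 'αβϵδ']
```

Because `e` can go to two different states with different outputs, the input `abed` has two accepting paths and therefore two outputs, while an input with no accepting path (such as `abxd`) yields the empty set.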

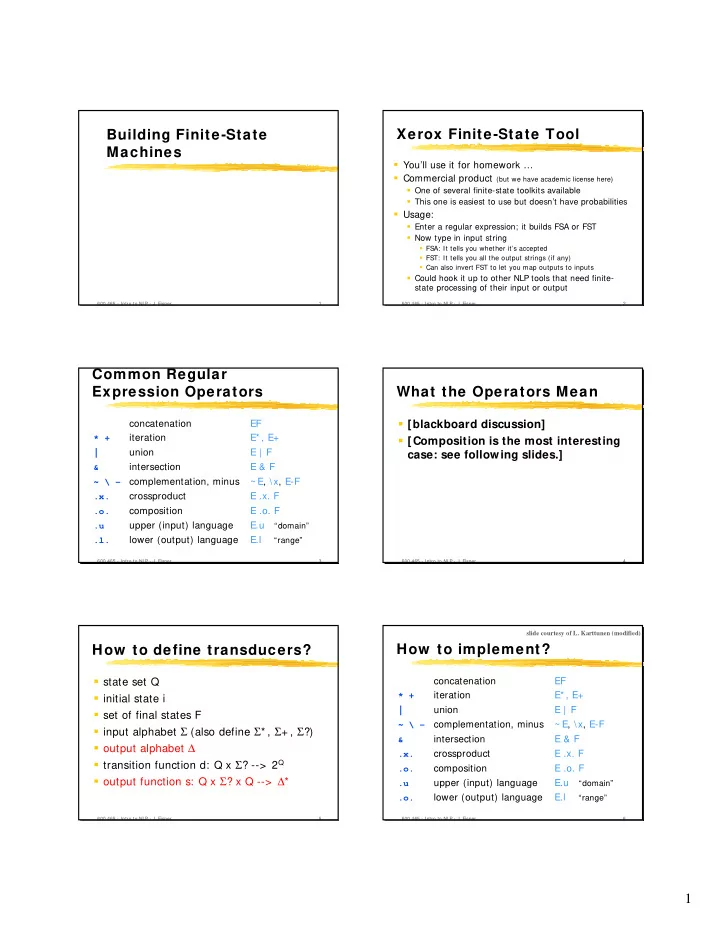

Concatenation (example courtesy of M. Mohri)

[Figure: two machines combined with + into their concatenation.]

Union (example courtesy of M. Mohri)

[Figure: two machines combined with + into their union.]

Closure (this example has outputs too; example courtesy of M. Mohri)

[Figure: a transducer and its closure (`*`).]

- Why add a new start state 4?
- Why not just make state 0 final?

Upper language (domain) (example courtesy of M. Mohri)

[Figure: a transducer and its upper language (`.u`).]

- Similarly, construct the lower language with `.l`
- Also called the input and output languages

Reversal (example courtesy of M. Mohri)

[Figure: a transducer and its reversal (`.r`).]

Inversion (example courtesy of M. Mohri)

[Figure: a transducer and its inversion (`.i`).]
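The constructions above build machines, but what each operator computes is easy to state on a finite relation. The following is a hedged sketch that assumes a relation is a Python set of `(input, output)` string pairs. All function names are hypothetical, and `star_upto` truncates the closure because the real `E*` is usually infinite. The crossproduct `.x.` from the operator table is included as well.

```python
from itertools import product


def concat(R, S):
    # Concatenation: glue every (x1, y1) in R to every (x2, y2) in S, side by side
    return {(x1 + x2, y1 + y2) for (x1, y1), (x2, y2) in product(R, S)}


def union(R, S):
    return R | S


def star_upto(R, k):
    """E* truncated to at most k copies of R (hypothetical helper)."""
    result = {("", "")}   # zero copies: the empty pair
    layer = {("", "")}
    for _ in range(k):
        layer = concat(layer, R)   # one more copy of R
        result |= layer
    return result


def cross(A, B):
    # Crossproduct E .x. F: every string of A paired with every string of B
    return {(x, y) for x in A for y in B}


def upper(R):    # E.u: the domain (input language)
    return {x for x, _ in R}


def lower(R):    # E.l: the range (output language)
    return {y for _, y in R}


def reverse(R):  # E.r: reverse both sides of every pair
    return {(x[::-1], y[::-1]) for x, y in R}


def invert(R):   # E.i: swap input and output
    return {(y, x) for x, y in R}


R = {("a", "x")}
S = {("b", "y"), ("", "z")}
print(sorted(concat(R, S)))  # prints [('a', 'xz'), ('ab', 'xy')]
```

On a machine these same results come from rewiring arcs and states (for example, inversion swaps the two sides of every arc label), but the set view is a convenient way to check what a construction should produce.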

Complementation

- Given a machine M, represent all strings not accepted by M
- Just change final states to non-final and vice versa
- Works only if the machine has been determinized and completed first (why?)

Intersection (example adapted from M. Mohri)

[Figure: two weighted acceptors A and B and their intersection A & B.
A has arcs fat/0.5: 0 → 0, pig/0.3: 0 → 1, eats/0: 1 → 2, sleeps/0.6: 1 → 2, and final state 2/0.8.
B has arcs fat/0.2: 0 → 1, pig/0.4: 1 → 1, eats/0.6: 1 → 0, sleeps/1.3: 1 → 2, and final states 0 and 2/0.5.
A & B has arcs fat/0.7: (0,0) → (0,1), pig/0.7: (0,1) → (1,1), eats/0.6: (1,1) → (2,0), sleeps/1.9: (1,1) → (2,2), and final states (2,0)/0.8 and (2,2)/1.3.]

- Paths 0012 and 0110 both accept *fat pig eats*. So must the new machine: along the path (0,0) (0,1) (1,1) (2,0).
- Paths 00 and 01 both accept *fat*. So must the new machine: along the path (0,0) (0,1).
- Paths 01 and 11 both accept *pig*. So must the new machine: along the path (0,1) (1,1).
- Paths 12 and 12 both accept *sleeps*. So must the new machine: along the path (1,1) (2,2).
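The walkthrough above is the standard product construction. A state of A & B is a pair (p, q), and an arc exists only where both machines read the same word from p and q. The following is a hedged sketch with a hypothetical dictionary encoding, and it rests on three assumptions. First, arc weights add along a path, which is what the example shows (fat/0.5 & fat/0.2 gives fat/0.7). Second, final weights add too. Third, B's unweighted final state 0 is read as having weight 0. The block also includes a `complement` function that completes a DFA with an extra sink state before flipping finality, which illustrates the completion requirement on the Complementation slide.

```python
# Weighted acceptor (hypothetical encoding):
#   arcs[state] = list of (word, weight, next_state); finals[state] = final weight
def intersect(arcs1, finals1, arcs2, finals2, start=(0, 0)):
    arcs, finals = {}, {}
    stack, seen = [start], {start}
    while stack:                      # explore reachable pair states only
        p, q = stack.pop()
        out = arcs.setdefault((p, q), [])
        for lab1, w1, p2 in arcs1.get(p, []):
            for lab2, w2, q2 in arcs2.get(q, []):
                if lab1 == lab2:      # both machines must read the same word
                    out.append((lab1, w1 + w2, (p2, q2)))
                    if (p2, q2) not in seen:
                        seen.add((p2, q2))
                        stack.append((p2, q2))
        if p in finals1 and q in finals2:
            finals[(p, q)] = finals1[p] + finals2[q]
    return arcs, finals


def complement(delta, finals, states, alphabet, sink="SINK"):
    """Complete a DFA (delta: (state, symbol) -> state), then flip finality.
    Assumes `sink` is not already one of the states."""
    full = dict(delta)
    all_states = set(states) | {sink}
    for q in all_states:
        for a in alphabet:
            full.setdefault((q, a), sink)   # missing moves now go to the sink
    return full, all_states - set(finals)


def dfa_accepts(delta, finals, start, word):
    q = start
    for a in word:
        if (q, a) not in delta:
            return False                    # no move available: reject
        q = delta[(q, a)]
    return q in finals


# The two machines from the Intersection example
A_arcs = {0: [("fat", 0.5, 0), ("pig", 0.3, 1)],
          1: [("eats", 0.0, 2), ("sleeps", 0.6, 2)]}
A_finals = {2: 0.8}
B_arcs = {0: [("fat", 0.2, 1)],
          1: [("pig", 0.4, 1), ("eats", 0.6, 0), ("sleeps", 1.3, 2)]}
B_finals = {0: 0.0, 2: 0.5}
AB_arcs, AB_finals = intersect(A_arcs, A_finals, B_arcs, B_finals)

# Complement of a DFA over {a, b} that accepts only "ab"
delta, finals = {(0, "a"): 1, (1, "b"): 2}, {2}
comp_delta, comp_finals = complement(delta, finals, {0, 1, 2}, "ab")
print(dfa_accepts(comp_delta, comp_finals, 0, "ba"))  # prints True
```

Without the sink state, flipping finality would still reject `ba`, because the original machine has no move on `b` from state 0. This is one concrete way the uncompleted construction goes wrong.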

What Composition Means

[Figure: a weighted relation f maps ab?d to abcd, abed, and abjd; a weighted relation g maps abcd to αβγδ, and abed to αβεδ and αβϵδ. Their relation composition f ° g maps ab?d to αβγδ, αβεδ, and αβϵδ.]

Relation = set of pairs

- f = {ab?d → abcd, ab?d → abed, ab?d → abjd, …}
- g = {abcd → αβγδ, abed → αβεδ, abed → αβϵδ, …}; g does not contain any pair of the form abjd → …
- f ° g = {ab?d → αβγδ, ab?d → αβεδ, ab?d → αβϵδ, …}
- $f \circ g = \{x \to z : \exists y\ (x \to y \in f \text{ and } y \to z \in g)\}$, where x, y, z are strings

Intersection vs. Composition

- Intersection: pig/0.3 (0 → 1) & pig/0.4 (1 → 1) = pig/0.7 ((0,1) → (1,1))
- Composition: Wilbur:pig/0.3 (0 → 1) .o. pig:pink/0.4 (1 → 1) = Wilbur:pink/0.7 ((0,1) → (1,1))
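The set-builder definition of f ° g translates almost word for word into code when f and g are finite. This sketch assumes relations are Python sets of `(input, output)` pairs and ignores the weights in the figure. The function name `compose` is hypothetical, and indexing g by its input side is only a convenience so that each middle string y is looked up once.

```python
def compose(f, g):
    """f ° g = {(x, z) : some y has (x, y) in f and (y, z) in g}."""
    g_by_input = {}
    for y, z in g:
        g_by_input.setdefault(y, set()).add(z)
    # A pair (x, y) of f contributes only if g has some pair starting with y
    return {(x, z) for x, y in f for z in g_by_input.get(y, ())}


f = {("ab?d", "abcd"), ("ab?d", "abed"), ("ab?d", "abjd")}
g = {("abcd", "αβγδ"), ("abed", "αβεδ"), ("abed", "αβϵδ")}
for pair in sorted(compose(f, g)):
    print(pair)
```

The pair ab?d → abjd contributes nothing, because g has no pair of the form abjd → …, exactly as the slide notes.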

Intersection vs. Composition

- Intersection mismatch: pig/0.3 (0 → 1) & elephant/0.4 (1 → 1) gives no arc out of (0,1); the would-be pig/0.7 arc is not built.
- Composition mismatch: Wilbur:pig/0.3 (0 → 1) .o. elephant:gray/0.4 (1 → 1) gives no arc out of (0,1); the would-be Wilbur:gray/0.7 arc is not built.

Composition (example courtesy of M. Mohri)

[Figure: two transducers composed with .o.; the walkthrough pairs up their arcs one at a time.]

- a:b .o. b:b = a:b
- a:b .o. b:a = a:a
- b:b .o. b:a = b:a
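Arc by arc, composition pairs an arc a:b of the first machine with an arc b:c of the second and keeps a:c. In the weighted examples it also adds the weights, and if the middle symbols differ, no arc is built. The following is a minimal sketch of that pairwise product construction, and it rests on several assumptions. The transducers are ε-free. Weights add. The encoding is hypothetical: `arcs[state]` is a list of `(input, output, weight, next_state)`. The final weights of 0 in the usage example are invented for illustration.

```python
def compose_fst(arcs1, finals1, arcs2, finals2, start=(0, 0)):
    """Composition of two epsilon-free weighted transducers (a sketch)."""
    arcs, finals = {}, {}
    stack, seen = [start], {start}
    while stack:                          # explore reachable pair states only
        p, q = stack.pop()
        out = arcs.setdefault((p, q), [])
        for a, b, w1, p2 in arcs1.get(p, []):
            for b2, c, w2, q2 in arcs2.get(q, []):
                if b != b2:               # intermediate symbol doesn't match
                    continue
                out.append((a, c, w1 + w2, (p2, q2)))
                if (p2, q2) not in seen:
                    seen.add((p2, q2))
                    stack.append((p2, q2))
        if p in finals1 and q in finals2:
            finals[(p, q)] = finals1[p] + finals2[q]
    return arcs, finals


# Wilbur:pig/0.3 .o. pig:pink/0.4, with states numbered as on the slide
T1 = ({0: [("Wilbur", "pig", 0.3, 1)]}, {1: 0.0})
T2 = ({1: [("pig", "pink", 0.4, 1)]}, {1: 0.0})
T3 = ({1: [("elephant", "gray", 0.4, 1)]}, {1: 0.0})   # mismatch case

match_arcs, _ = compose_fst(*T1, *T2, start=(0, 1))
clash_arcs, _ = compose_fst(*T1, *T3, start=(0, 1))
print(match_arcs[(0, 1)])   # one Wilbur:pink arc, weight about 0.7
print(clash_arcs[(0, 1)])   # prints []
```

The loop has the same shape as weighted intersection. The only change is that the test compares the first machine's output with the second machine's input, and the new arc keeps the outer two symbols.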

Composition (continued)

- a:a .o. a:b = a:b
- b:b .o. a:b = nothing (since the intermediate symbol doesn't match)

Composition with Sets

- We've defined A .o. B where both are FSTs
- Now extend the definition to allow one of them to be an FSA
- Two relations (FSTs): $A \circ B = \{x \to z : \exists y\ (x \to y \in A \text{ and } y \to z \in B)\}$
- Set and relation: $A \circ B = \{x \to z : x \in A \text{ and } x \to z \in B\}$
- Relation and set: $A \circ B = \{x \to z : x \to z \in A \text{ and } z \in B\}$
- Two sets (acceptors), same as intersection: $A \circ B = \{x : x \in A \text{ and } x \in B\}$

Composition and Coercion

- Really just treats a set as the identity relation on that set: {abc, pqr, …} = {abc → abc, pqr → pqr, …}
- Two relations (FSTs): $A \circ B = \{x \to z : \exists y\ (x \to y \in A \text{ and } y \to z \in B)\}$
- Set and relation is now a special case (if ∃y, then y = x): $A \circ B = \{x \to z : x \to x \in A \text{ and } x \to z \in B\}$
- Relation and set is now a special case (if ∃y, then y = z): $A \circ B = \{x \to z : x \to z \in A \text{ and } z \to z \in B\}$
- Two sets (acceptors) is now a special case: $A \circ B = \{x \to x : x \to x \in A \text{ and } x \to x \in B\}$

3 Uses of Set Composition

- Feed a string into the Greek transducer:
  - {abed → abed} .o. Greek = {abed → αβεδ, abed → αβϵδ}
  - {abed} .o. Greek = {abed → αβεδ, abed → αβϵδ}
  - [{abed} .o. Greek].l = {αβεδ, αβϵδ}
- Feed several strings in parallel:
  - {abcd, abed} .o. Greek = {abcd → αβγδ, abed → αβεδ, abed → αβϵδ}
  - [{abcd, abed} .o. Greek].l = {αβγδ, αβεδ, αβϵδ}
- Filter the result via Noε = {αβγδ, αβϵδ, …}:
  - {abcd, abed} .o. Greek .o. Noε = {abcd → αβγδ, abed → αβϵδ}

What are the "basic" transducers?

- The operations on the previous slides combine transducers into bigger ones
- But where do we start?
  - a:ε for a ∈ Σ
  - ε:x for x ∈ Δ
- Q: Do we also need a:x? How about ε:ε?
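The coercion slide suggests a simple implementation: turn a set into its identity relation and reuse ordinary composition. This sketch assumes finite relations stored as Python sets of pairs. A small hypothetical `greek` relation stands in for the slide's Greek transducer. Because Noε is infinite, it is passed as a membership test rather than a set, following the "relation and set" special case. The names `identity`, `compose`, `compose_with_set`, and `no_epsilon` are hypothetical.

```python
def identity(A):
    # Coercion: a set {x, ...} becomes the relation {x -> x, ...}
    return {(x, x) for x in A}


def compose(f, g):
    # f ° g = {(x, z) : some y has (x, y) in f and (y, z) in g}
    return {(x, z) for x, y in f for y2, z in g if y == y2}


def compose_with_set(R, member):
    # Relation and set: {(x, z) : (x, z) in R and z in B}, with B given as a test
    return {(x, z) for x, z in R if member(z)}


def lower(R):
    return {z for _, z in R}


# Toy stand-in for the slide's Greek transducer (finite, for illustration only)
greek = {("abcd", "αβγδ"), ("abed", "αβεδ"), ("abed", "αβϵδ")}


def no_epsilon(z):
    return "ε" not in z   # Noε: strings containing no plain ε (ϵ is allowed)


one = compose(identity({"abed"}), greek)              # feed one string
many = compose(identity({"abcd", "abed"}), greek)     # feed several in parallel
filtered = compose_with_set(many, no_epsilon)         # filter via Noε
print(sorted(lower(one)))
print(sorted(filtered))
```

Composing two identity relations gives the identity relation on their intersection, which matches the "two sets" special case on the slides.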
