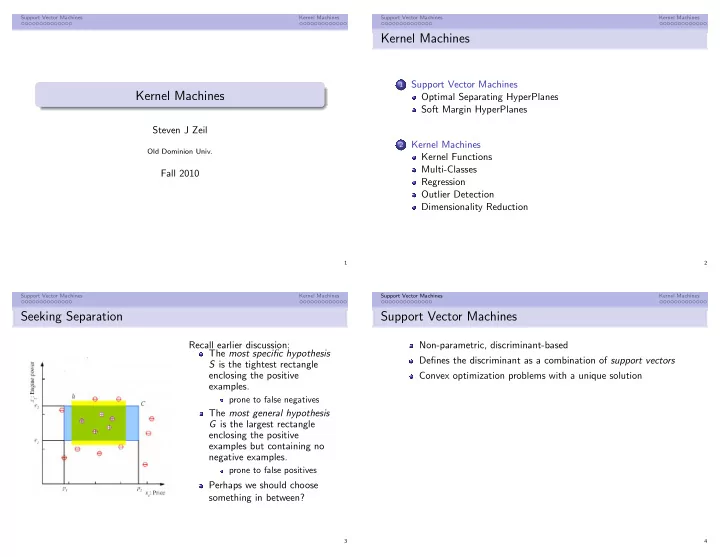

# Kernel Machines

Steven J Zeil, Old Dominion Univ., Fall 2010

1. Support Vector Machines
   - Optimal Separating HyperPlanes
   - Soft Margin HyperPlanes
2. Kernel Machines
   - Kernel Functions
   - Multi-Classes
   - Regression
   - Outlier Detection
   - Dimensionality Reduction

## Seeking Separation

Recall earlier discussion:

- The most specific hypothesis S is the tightest rectangle enclosing the positive examples.
  - prone to false negatives
- The most general hypothesis G is the largest rectangle enclosing the positive examples but containing no negative examples.
  - prone to false positives
- Perhaps we should choose something in between?

## Support Vector Machines

- Non-parametric, discriminant-based
- Defines the discriminant as a combination of support vectors
- Convex optimization problems with a unique solution
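The most specific hypothesis S from the earlier discussion is simply the bounding box of the positive examples. The sketch below shows that directly. It assumes 2-D inputs and labels of +1/-1, and the function names are hypothetical, chosen for illustration only.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Rect = Tuple[float, float, float, float]  # (x_min, x_max, y_min, y_max)


def most_specific_rectangle(points: List[Point], labels: List[int]) -> Rect:
    """Tightest axis-aligned rectangle S enclosing every positive (+1) example."""
    pos = [p for p, r in zip(points, labels) if r == +1]
    xs = [p[0] for p in pos]
    ys = [p[1] for p in pos]
    return (min(xs), max(xs), min(ys), max(ys))


def inside(rect: Rect, p: Point) -> bool:
    """True if p lies in the closed rectangle."""
    x_min, x_max, y_min, y_max = rect
    return x_min <= p[0] <= x_max and y_min <= p[1] <= y_max


points = [(1.0, 1.0), (3.0, 2.0), (2.0, 4.0), (5.0, 5.0)]
labels = [+1, +1, +1, -1]
S = most_specific_rectangle(points, labels)
print(S)                      # (1.0, 3.0, 1.0, 4.0)
print(inside(S, (2.0, 2.0)))  # True
print(inside(S, (3.5, 2.0)))  # False: a positive here would be a false negative
```

Because S hugs the training positives, any new positive just outside it is rejected. That is the "prone to false negatives" behavior noted above.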

## Separating HyperPlanes

- In our earlier linear discrimination techniques, we sought any separating hyperplane.
  - Some of the points could come arbitrarily close to the border.
- Distance from the plane was a measure of confidence.
- If the classes were not linearly separable, too bad.

## Support Vector Machines: Margins

- SVMs seek the plane that maximizes the margin between the plane and the closest instances of each class.
- During testing, we do not insist on the margin.
  - But distance from the plane is still an indication of confidence.
- With minor modification, SVMs can be extended to classes that are not linearly separable.

## Defining the Margin

- The margin is the distance from the discriminant to the closest instances on either side.
- The distance of $\vec{x}^t$ to the hyperplane is $\dfrac{|\vec{w}^T\vec{x}^t + w_0|}{\|\vec{w}\|}$.
- Let $\rho$ denote the margin:
$$\forall t,\quad \frac{r^t(\vec{w}^T\vec{x}^t + w_0)}{\|\vec{w}\|} \ge \rho$$
- If we try to maximize $\rho$, there are infinitely many solutions, formed by simply rescaling $\vec{w}$.
- Fix $\rho\|\vec{w}\| = 1$, and minimize $\|\vec{w}\|$ to maximize $\rho$.

## Maximizing the Margin

Given $\mathcal{X} = \{\vec{x}^t, r^t\}$ where
$$r^t = \begin{cases} +1 & \text{if } \vec{x}^t \in C_1 \\ -1 & \text{if } \vec{x}^t \in C_2 \end{cases}$$
find $\vec{w}$ and $w_0$ such that
$$\vec{w}^T\vec{x}^t + w_0 \ge +1 \quad\text{for } r^t = +1$$
$$\vec{w}^T\vec{x}^t + w_0 \le -1 \quad\text{for } r^t = -1$$
or, equivalently,
$$r^t(\vec{w}^T\vec{x}^t + w_0) \ge +1$$

Minimize $\frac{1}{2}\|\vec{w}\|^2$ subject to $\forall t,\ r^t(\vec{w}^T\vec{x}^t + w_0) \ge +1$.
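A small numeric check of the margin definitions may help. It assumes a hand-picked 2-D toy data set and a hand-picked $(\vec w, w_0)$, and the helper names are hypothetical. The check confirms three things: the canonical constraints $r^t(\vec w^T\vec x^t + w_0) \ge 1$ hold, the margin $\rho = 1/\|\vec w\|$ equals the distance to the closest instance, and rescaling $\vec w$ does not change geometric distances.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def distance_to_hyperplane(w: Vector, w0: float, x: Vector) -> float:
    """|w^T x + w0| / ||w||: geometric distance of x to the plane."""
    return abs(dot(w, x) + w0) / math.sqrt(dot(w, w))


def satisfies_constraints(w: Vector, w0: float, X: List[Vector], r: List[int]) -> bool:
    """True if r^t (w^T x^t + w0) >= 1 for every instance t."""
    return all(rt * (dot(w, xt) + w0) >= 1 for xt, rt in zip(X, r))


X = [[1.0, 1.0], [2.0, 2.0], [-1.0, -1.0]]
r = [+1, +1, -1]
w, w0 = [0.5, 0.5], 0.0

rho = 1 / math.sqrt(dot(w, w))  # with rho * ||w|| = 1, the margin is 1/||w||
closest = min(distance_to_hyperplane(w, w0, xt) for xt in X)
print(satisfies_constraints(w, w0, X, r))  # True
print(rho, closest)                        # both ≈ 1.414, i.e. sqrt(2)

# Rescaling (w, w0) leaves every geometric distance unchanged, which is
# why the scale has to be pinned down before maximizing rho.
print(distance_to_hyperplane([1.0, 1.0], 0.0, X[0]))  # ≈ 1.414 again
```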

## Margin

(figure)

- Circled inputs are the ones that determine the border.
- After training, we could forget about the others.
- In fact, we might be able to eliminate some of the others before training.

## Derivation (1/3)

Minimize $\frac{1}{2}\|\vec{w}\|^2$ subject to $\forall t,\ r^t(\vec{w}^T\vec{x}^t + w_0) \ge +1$.

$$L_p = \frac{1}{2}\|\vec{w}\|^2 - \sum_{t=1}^{N}\alpha^t\left[r^t(\vec{w}^T\vec{x}^t + w_0) - 1\right]$$
$$= \frac{1}{2}\|\vec{w}\|^2 - \sum_{t=1}^{N}\alpha^t r^t(\vec{w}^T\vec{x}^t + w_0) + \sum_{t=1}^{N}\alpha^t$$
$$\frac{\partial L_p}{\partial \vec{w}} = 0 \Rightarrow \vec{w} = \sum_{t=1}^{N}\alpha^t r^t\vec{x}^t$$

## Derivation (2/3)

$$\frac{\partial L_p}{\partial w_0} = 0 \Rightarrow \sum_{t=1}^{N}\alpha^t r^t = 0$$

Minimizing $L_p$ with respect to $\vec{w}$ and $w_0$ is equivalent to maximizing the dual
$$L_d = \frac{1}{2}(\vec{w}^T\vec{w}) - \vec{w}^T\sum_t\alpha^t r^t\vec{x}^t - w_0\sum_t\alpha^t r^t + \sum_t\alpha^t$$
subject to $\sum_t\alpha^t r^t = 0$ and $\alpha^t \ge 0$.

## Derivation (3/3)

Substituting $\vec{w} = \sum_t\alpha^t r^t\vec{x}^t$ and $\sum_t\alpha^t r^t = 0$:
$$L_d = -\frac{1}{2}(\vec{w}^T\vec{w}) + \sum_t\alpha^t$$
$$= -\frac{1}{2}\sum_t\sum_s\alpha^t\alpha^s r^t r^s(\vec{x}^t)^T\vec{x}^s + \sum_t\alpha^t$$
subject to $\sum_t\alpha^t r^t = 0$ and $\alpha^t \ge 0$.

- Solve numerically.
- Most $\alpha^t$ are 0. The small number with $\alpha^t > 0$ are the support vectors:
$$\vec{w} = \sum_{t=1}^{N}\alpha^t r^t\vec{x}^t$$
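To make the dual concrete, the sketch below works through a toy two-point problem. It evaluates $L_d$, maximizes it by a crude grid search, recovers $\vec w = \sum_t\alpha^t r^t\vec x^t$, and checks that the primal objective $\frac{1}{2}\|\vec w\|^2$ equals the dual optimum. The grid search only works here because, with $r = (+1, -1)$, the constraint $\sum_t\alpha^t r^t = 0$ forces $\alpha^1 = \alpha^2$. A real solver would use quadratic programming or an SMO-style method. The data and function names are assumptions for illustration.

```python
from typing import List, Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def dual_objective(alpha: List[float], X, r) -> float:
    """L_d = -1/2 sum_t sum_s a^t a^s r^t r^s (x^t)^T x^s + sum_t a^t"""
    n = len(X)
    quad = sum(alpha[t] * alpha[s] * r[t] * r[s] * dot(X[t], X[s])
               for t in range(n) for s in range(n))
    return -0.5 * quad + sum(alpha)


def weights_from_alpha(alpha, X, r) -> List[float]:
    """w = sum_t a^t r^t x^t"""
    d = len(X[0])
    return [sum(a * rt * xt[k] for a, rt, xt in zip(alpha, r, X)) for k in range(d)]


X = [[1.0, 1.0], [-1.0, -1.0]]
r = [+1, -1]

# sum_t a^t r^t = 0 forces a^1 = a^2 = a >= 0 here, so search over a alone.
grid = [k / 1000 for k in range(1001)]  # a in [0, 1]
a_best = max(grid, key=lambda a: dual_objective([a, a], X, r))
alpha = [a_best, a_best]
w = weights_from_alpha(alpha, X, r)

print(a_best)                                        # 0.25
print(w)                                             # [0.5, 0.5]
print(dual_objective(alpha, X, r), 0.5 * dot(w, w))  # 0.25 0.25: no duality gap
```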

## Support Vectors

(figure: circled inputs are the support vectors)

- $\vec{w} = \sum_{t=1}^{N}\alpha^t r^t\vec{x}^t$
- Compute $w_0$ from the average over the support vectors of $w_0 = r^t - \vec{w}^T\vec{x}^t$.

## Demo

Applet on http://www.csie.ntu.edu.tw/~cjlin/libsvm/

## Soft Margin HyperPlanes

- Suppose the classes are almost, but not quite, linearly separable:
$$r^t(\vec{w}^T\vec{x}^t + w_0) \ge 1 - \xi^t$$
- The $\xi^t \ge 0$ are slack variables, storing deviation from the margin:
  - $\xi^t = 0$ means $\vec{x}^t$ is on or beyond the margin, at least $1/\|\vec{w}\|$ from the hyperplane
  - $0 < \xi^t < 1$ means $\vec{x}^t$ is within the margin, but correctly classified
  - $\xi^t \ge 1$ means $\vec{x}^t$ is misclassified
- $\sum_t\xi^t$ is a measure of error. Add it as a penalty term:
$$L_p = \frac{1}{2}\|\vec{w}\|^2 + C\sum_t\xi^t$$
- $C$ is a penalty factor that trades off complexity against data misfitting.

## Soft Margin Derivation

$$L_p = \frac{1}{2}\|\vec{w}\|^2 + C\sum_t\xi^t$$

- This leads to the same numerical optimization problem, with the new constraint $0 \le \alpha^t \le C$.
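The slides leave "solve numerically" open. One common choice is a simplified, deterministic variant of Platt's SMO; it is swapped in here as an assumption, not as the slides' method. SMO changes two multipliers at a time so that $\sum_t\alpha^t r^t = 0$ keeps holding, and it clips each multiplier to the soft-margin box $0 \le \alpha^t \le C$. After training, the sketch computes $\vec w$ and $w_0$ as on the Support Vectors slide. It averages $r^t - \vec w^T\vec x^t$ over the support vectors with $0 < \alpha^t < C$, the ones that lie exactly on the margin in the soft-margin case. The linear kernel, tolerances, data, and function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def smo_train(X: List[List[float]], r: List[int], C: float, tol: float = 1e-3,
              max_passes: int = 10, max_iter: int = 10_000) -> List[float]:
    """Simplified, deterministic SMO for the soft-margin dual (linear kernel)."""
    n = len(X)
    K = [[dot(X[i], X[j]) for j in range(n)] for i in range(n)]  # Gram matrix
    alpha = [0.0] * n
    b = 0.0  # internal bias estimate, used only while optimizing

    def g(i: int) -> float:  # current discriminant value at x^i
        return sum(alpha[k] * r[k] * K[k][i] for k in range(n)) + b

    passes = iters = 0
    while passes < max_passes and iters < max_iter:
        iters += 1
        changed = 0
        for i in range(n):
            Ei = g(i) - r[i]
            # Only work on i if it violates the KKT conditions (within tol).
            if not ((r[i] * Ei < -tol and alpha[i] < C) or
                    (r[i] * Ei > tol and alpha[i] > 0)):
                continue
            for j in range(n):
                if j == i:
                    continue
                Ej = g(j) - r[j]
                ai, aj = alpha[i], alpha[j]
                # Segment [L, H] for alpha[j] that keeps both multipliers in [0, C].
                if r[i] != r[j]:
                    L, H = max(0.0, aj - ai), min(C, C + aj - ai)
                else:
                    L, H = max(0.0, ai + aj - C), min(C, ai + aj)
                eta = 2 * K[i][j] - K[i][i] - K[j][j]  # curvature along the segment
                if L >= H or eta >= 0:
                    continue
                new_aj = min(H, max(L, aj - r[j] * (Ei - Ej) / eta))
                if abs(new_aj - aj) < 1e-12:
                    continue
                alpha[j] = new_aj
                alpha[i] = ai + r[i] * r[j] * (aj - new_aj)  # keeps sum a^t r^t fixed
                di, dj = alpha[i] - ai, alpha[j] - aj
                b1 = b - Ei - r[i] * di * K[i][i] - r[j] * dj * K[i][j]
                b2 = b - Ej - r[i] * di * K[i][j] - r[j] * dj * K[j][j]
                b = b1 if 0 < alpha[i] < C else b2 if 0 < alpha[j] < C else (b1 + b2) / 2
                changed += 1
                break  # move on to the next i with the refreshed multipliers
        passes = passes + 1 if changed == 0 else 0
    return alpha


def weights_and_bias(alpha, X, r, C: float, eps: float = 1e-8) -> Tuple[List[float], float]:
    """w = sum_t a^t r^t x^t; w0 = mean of r^t - w^T x^t over 0 < a^t < C."""
    d = len(X[0])
    w = [sum(a * rt * xt[k] for a, rt, xt in zip(alpha, r, X)) for k in range(d)]
    on_margin = [t for t, a in enumerate(alpha) if eps < a < C - eps]
    if not on_margin:
        raise ValueError("no support vector strictly inside (0, C)")
    w0 = sum(r[t] - dot(w, X[t]) for t in on_margin) / len(on_margin)
    return w, w0


X = [[1.0, 1.0], [2.0, 2.0], [-1.0, -1.0], [-2.0, -2.0]]
r = [+1, +1, -1, -1]
alpha = smo_train(X, r, C=10.0)
w, w0 = weights_and_bias(alpha, X, r, C=10.0)
support = [t for t, a in enumerate(alpha) if a > 1e-8]
print(alpha)    # [0.25, 0.0, 0.25, 0.0]
print(w, w0)    # [0.5, 0.5] 0.0
print(support)  # [0, 2]
```

Only the two instances closest to the boundary end up with $\alpha^t > 0$, matching "most $\alpha^t$ are 0". With overlapping classes, some multipliers would instead stop at the upper bound $C$.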

## Soft Margin Example

(figure) Applet on http://www.csie.ntu.edu.tw/~cjlin/libsvm/

## Hinge Loss

$$L_{hinge}(y^t, r^t) = \begin{cases} 0 & \text{if } y^t r^t \ge 1 \\ 1 - y^t r^t & \text{otherwise} \end{cases}$$

- Its behavior in the range 0..1 makes this more robust than 0/1 and squared error.
- It is close to cross-entropy over much of its range.

## Non-Linear SVM

Replace inputs $\vec{x}$ by a sequence of basis functions $\vec{\phi}(\vec{x})$.

Linear SVM:
$$\vec{w} = \sum_t\alpha^t r^t\vec{x}^t \qquad g(\vec{x}) = \vec{w}^T\vec{x} = \sum_t\alpha^t r^t(\vec{x}^t)^T\vec{x}$$

Kernel SVM:
$$\vec{w} = \sum_t\alpha^t r^t\vec{\phi}(\vec{x}^t) \qquad g(\vec{x}) = \vec{w}^T\vec{\phi}(\vec{x}) = \sum_t\alpha^t r^t\vec{\phi}(\vec{x}^t)^T\vec{\phi}(\vec{x})$$

## The Kernel

$$g(\vec{x}) = \sum_t\alpha^t r^t\vec{x}^T\vec{x}^t$$

- $\vec{x}^T\vec{x}^t$ is a measure of similarity between $\vec{x}$ and a support vector.

$$g(\vec{x}) = \sum_t\alpha^t r^t\vec{\phi}(\vec{x}^t)^T\vec{\phi}(\vec{x})$$

- $\vec{\phi}(\vec{x}^t)^T\vec{\phi}(\vec{x})$ can be seen as a similarity measure in the non-linear basis space.
- To generalize, let $K(\vec{x}^t, \vec{x}) = \vec{\phi}(\vec{x}^t)^T\vec{\phi}(\vec{x})$:
$$g(\vec{x}) = \sum_t\alpha^t r^t K(\vec{x}^t, \vec{x})$$
- $K$ is a kernel function.
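The sketch below puts the hinge loss and the kernelized discriminant side by side. It assumes labels in $\{+1, -1\}$ and a bias $w_0$ added to $g(\vec x)$, which the slide formula omits. The multipliers are hand-picked toy values rather than trained ones, and all names are hypothetical. With the linear kernel, $g(\vec x) = \sum_t\alpha^t r^t K(\vec x^t, \vec x) + w_0$ reproduces $\vec w^T\vec x + w_0$.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]
Kernel = Callable[[Vector, Vector], float]


def hinge_loss(y: float, r: int) -> float:
    """L_hinge: 0 if y*r >= 1, else 1 - y*r (y = g(x), r in {+1, -1})."""
    return max(0.0, 1.0 - y * r)


def zero_one_loss(y: float, r: int) -> float:
    """1 for a misclassification (y*r <= 0), else 0."""
    return 0.0 if y * r > 0 else 1.0


def linear_kernel(u: Vector, v: Vector) -> float:
    """K(u, v) = u^T v, i.e. phi is the identity."""
    return sum(a * b for a, b in zip(u, v))


def discriminant(x: Vector, support: List[Vector], alpha: List[float],
                 r: List[int], w0: float, K: Kernel) -> float:
    """g(x) = sum_t alpha^t r^t K(x^t, x) + w0, summed over support vectors only."""
    return sum(a * rt * K(xt, x) for a, rt, xt in zip(alpha, r, support)) + w0


support = [[1.0, 1.0], [-1.0, -1.0]]
alpha, r, w0 = [0.25, 0.25], [+1, -1], 0.0
y = discriminant([3.0, 0.0], support, alpha, r, w0, linear_kernel)
print(y)  # 1.5, the same as w^T x + w0 with w = (0.5, 0.5)

# A point inside the margin (y = 0.5) costs nothing under 0/1 loss
# but is penalized linearly by the hinge loss.
for y_t in (1.5, 0.5, -0.5):
    print(y_t, zero_one_loss(y_t, +1), hinge_loss(y_t, +1))
```

Passing a different `K` changes only the similarity measure. The multipliers would have to be retrained for that kernel.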

## Polynomial Kernels

$$K_q(\vec{x}^t, \vec{x}) = (\vec{x}^T\vec{x}^t + 1)^q$$

E.g.,
$$K(\vec{x}, \vec{y}) = (\vec{x}^T\vec{y} + 1)^2 = (x_1y_1 + x_2y_2 + 1)^2$$
$$= 1 + 2x_1y_1 + 2x_2y_2 + 2x_1x_2y_1y_2 + x_1^2y_1^2 + x_2^2y_2^2$$
$$\vec{\phi}(\vec{x}) = [1, \sqrt{2}x_1, \sqrt{2}x_2, \sqrt{2}x_1x_2, x_1^2, x_2^2]^T \quad\text{(FWIW)}$$

## Radial-basis Kernels

$$K(\vec{x}^t, \vec{x}) = \exp\left[-\frac{\|\vec{x}^t - \vec{x}\|^2}{2s^2}\right]$$

- Other options include sigmoidal (approximated as $\tanh$).

## Selecting Kernels

- Kernels can be customized to the application.
  - Choose appropriate measures of similarity:
    - Bag of words (normalized cosines between vocabulary vectors)
    - Genetics: edit distance between strings
    - Graphs: length of shortest path between nodes, or number of connecting paths
- For input sets with very large dimension, it may be cheaper to pre-compute and save the matrix of kernel values (the Gram matrix) rather than keeping all the inputs available.

## Multi-Classes

- 1 versus all
  - $K$ separate $N$-variable problems
- pairwise separation
  - $K(K-1)/2$ separate problems, each using only the instances of two classes
- single multiclass optimization:
$$\text{Minimize } \frac{1}{2}\sum_{i=1}^{K}\|\vec{w}_i\|^2 + C\sum_i\sum_t\xi_i^t$$
subject to
$$\vec{w}_{z^t}^T\vec{x}^t + w_{z^t 0} \ge \vec{w}_i^T\vec{x}^t + w_{i0} + 2 - \xi_i^t, \quad \forall i \ne z^t$$
where $z^t$ is the index of the class of $\vec{x}^t$.
  - one $K \cdot N$-variable problem
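The polynomial-kernel expansion above can be checked numerically. For 2-D inputs and $q = 2$, $K(\vec x, \vec y)$ should equal $\vec\phi(\vec x)^T\vec\phi(\vec y)$ with the explicit six-term basis. The sketch below checks that and also builds a small Gram matrix with the radial-basis kernel. The inputs, the spread $s = 1$, and the function names are assumptions for illustration.

```python
import math
from typing import Callable, List, Sequence

Vector = Sequence[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def poly_kernel(x: Vector, y: Vector, q: int = 2) -> float:
    """K_q(x, y) = (x^T y + 1)^q"""
    return (dot(x, y) + 1.0) ** q


def phi_quadratic(x: Vector) -> List[float]:
    """Explicit basis for q = 2 and 2-D inputs, as on the slide."""
    x1, x2 = x
    s = math.sqrt(2.0)
    return [1.0, s * x1, s * x2, s * x1 * x2, x1 * x1, x2 * x2]


def rbf_kernel(x: Vector, y: Vector, s: float = 1.0) -> float:
    """K(x, y) = exp(-||x - y||^2 / (2 s^2))"""
    d2 = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-d2 / (2.0 * s * s))


def gram_matrix(X: List[Vector], K: Callable[[Vector, Vector], float]) -> List[List[float]]:
    """N x N matrix of kernel values K(x^t, x^s), computed once and reused."""
    return [[K(xt, xs) for xs in X] for xt in X]


x, y = [1.0, 2.0], [3.0, -1.0]
print(poly_kernel(x, y))                        # (1*3 + 2*(-1) + 1)^2 = 4.0
print(dot(phi_quadratic(x), phi_quadratic(y)))  # same value, up to rounding
G = gram_matrix([x, y], rbf_kernel)
print(G[0][0], G[0][1] == G[1][0])              # 1.0 True
```

The kernel evaluation costs one 2-D dot product, while the explicit route builds two 6-D vectors. That gap widens quickly with input dimension and degree $q$, which is the practical point of using $K$ directly.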

## Regression

Linear regression to $f(\vec{x}) = \vec{w}^T\vec{x} + w_0$.

Instead of using the usual error measure
$$e_2(r^t, f(\vec{x}^t)) = [r^t - f(\vec{x}^t)]^2$$
we use a linear, $\epsilon$-sensitive error
$$e_\epsilon(r^t, f(\vec{x}^t)) = \max(0, |r^t - f(\vec{x}^t)| - \epsilon)$$

- Errors of less than $\epsilon$ are tolerated, and larger errors have only a linear effect.
  - This makes the fit more tolerant to noise.

## Linear Regression

$$f(\vec{x}) = \vec{w}^T\vec{x} + w_0 = \sum_t(\alpha_+^t - \alpha_-^t)(\vec{x}^t)^T\vec{x} + w_0$$

- The function is a combination of a limited set of support vectors.

## Kernel Regression

- Again, we can replace the inputs by a basis function, eventually leading to a kernel function as a similarity measure.
- (figure) Shown here: polynomial

## Gaussian Kernel Regression

(figure)
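A short comparison of the two error measures helps here. For a target $r = 3$ and $\epsilon = 0.5$, the sketch evaluates a near miss, a moderate miss, and a large miss. It also evaluates the support-vector form of $f(\vec x)$ using hand-picked multipliers $\alpha_+^t, \alpha_-^t$, which are hypothetical values, not the output of a solver. All names are illustrative.

```python
from typing import List, Sequence


def squared_error(r: float, f: float) -> float:
    """e_2 = (r - f)^2"""
    return (r - f) ** 2


def eps_insensitive_error(r: float, f: float, eps: float) -> float:
    """e_eps = max(0, |r - f| - eps): zero inside the eps-tube, linear outside."""
    return max(0.0, abs(r - f) - eps)


def svr_predict(x: Sequence[float], X: List[Sequence[float]],
                alpha_plus: List[float], alpha_minus: List[float], w0: float) -> float:
    """f(x) = sum_t (a+^t - a-^t) (x^t)^T x + w0 (linear-kernel form)."""
    return sum((ap - am) * sum(a * b for a, b in zip(xt, x))
               for ap, am, xt in zip(alpha_plus, alpha_minus, X)) + w0


for f in (2.9, 2.0, -2.0):
    print(f, squared_error(3.0, f), eps_insensitive_error(3.0, f, 0.5))

# Hypothetical multipliers for two 1-D support vectors:
# (0.5 - 0) * (1*2) + (0 - 0.25) * (2*2) + 1 = 1.0
print(svr_predict([2.0], [[1.0], [2.0]], [0.5, 0.0], [0.0, 0.25], 1.0))  # 1.0
```

For the large miss, the squared error is 25 while the $\epsilon$-sensitive error is 4.5. This is the "only a linear effect" that makes the fit less sensitive to a single noisy target.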

## One-Class Kernel Machines

- Consider a sphere with center $\vec{a}$ and radius $R$:
$$\text{Minimize } R^2 + C\sum_t\xi^t \text{ subject to } \|\vec{x}^t - \vec{a}\|^2 \le R^2 + \xi^t,\ \xi^t \ge 0$$
- A new input $\vec{x}$ is an outlier if it lies outside the sphere.

## Gaussian Kernel One-Class

(figure)

## Dimensionality Reduction

- Kernel PCA does PCA on the kernel matrix, with entries $\vec{\phi}(\vec{x}^t)^T\vec{\phi}(\vec{x}^s)$, instead of on the direct inputs.
- For high-dimension input spaces, we can work on an $N \times N$ problem instead of a $D \times D$ one.
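The outlier test needs $\|\vec\phi(\vec x) - \vec a\|^2$. The sketch assumes the center has the form $\vec a = \sum_t\alpha^t\vec\phi(\vec x^t)$ with $\sum_t\alpha^t = 1$, as the dual of this sphere problem gives. Under that assumption, the squared distance expands into kernel values only: $K(\vec x, \vec x) - 2\sum_t\alpha^t K(\vec x^t, \vec x) + \sum_t\sum_s\alpha^t\alpha^s K(\vec x^t, \vec x^s)$. The multipliers and the squared radius below are made-up toy values, not solver output, and the names are hypothetical.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]
Kernel = Callable[[Vector, Vector], float]


def linear_kernel(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def sq_dist_to_center(x: Vector, X: List[Vector], alpha: List[float], K: Kernel) -> float:
    """||phi(x) - a||^2 with a = sum_t alpha^t phi(x^t), via kernel values only."""
    cross = sum(a * K(xt, x) for a, xt in zip(alpha, X))
    center = sum(at * as_ * K(xt, xs)
                 for at, xt in zip(alpha, X) for as_, xs in zip(alpha, X))
    return K(x, x) - 2.0 * cross + center


def is_outlier(x: Vector, X: List[Vector], alpha: List[float], R2: float, K: Kernel) -> bool:
    """Outlier if phi(x) falls outside the sphere of squared radius R2."""
    return sq_dist_to_center(x, X, alpha, K) > R2


X = [[0.0, 0.0], [2.0, 0.0]]
alpha = [0.5, 0.5]  # hypothetical multipliers: center a = (1, 0) for the linear kernel
R2 = 1.0            # hypothetical squared radius
print(sq_dist_to_center([3.0, 0.0], X, alpha, linear_kernel))  # 4.0
print(is_outlier([3.0, 0.0], X, alpha, R2, linear_kernel))     # True
print(is_outlier([1.0, 0.5], X, alpha, R2, linear_kernel))     # False
```

Swapping `linear_kernel` for a Gaussian kernel turns the sphere into the flexible boundary of the Gaussian Kernel One-Class slide, without ever computing $\vec\phi$ explicitly.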
