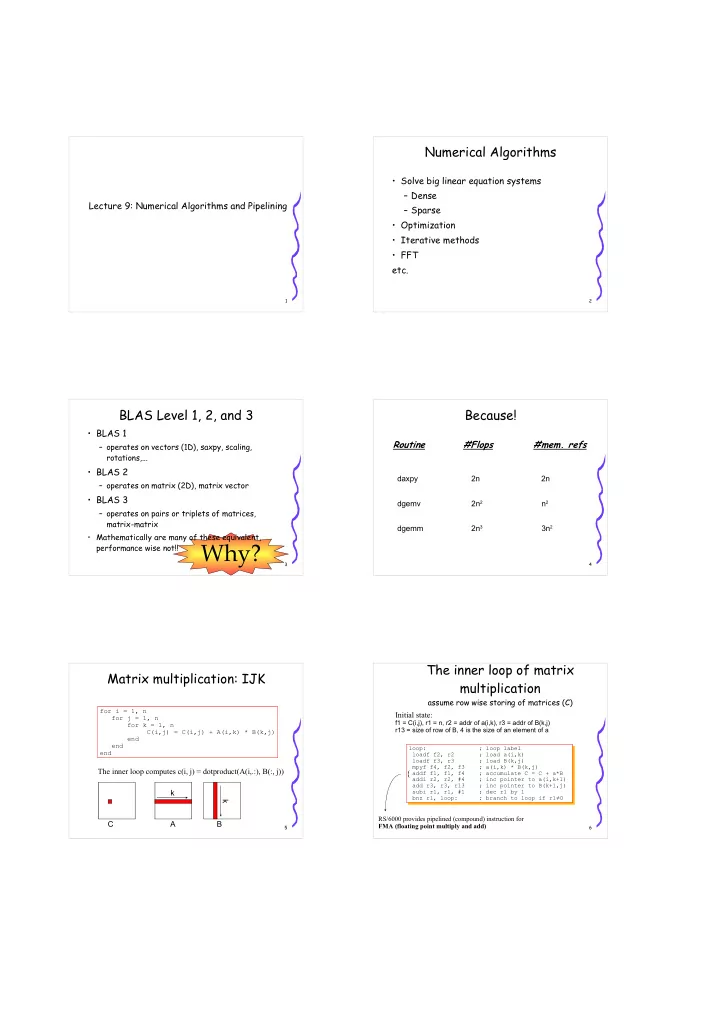

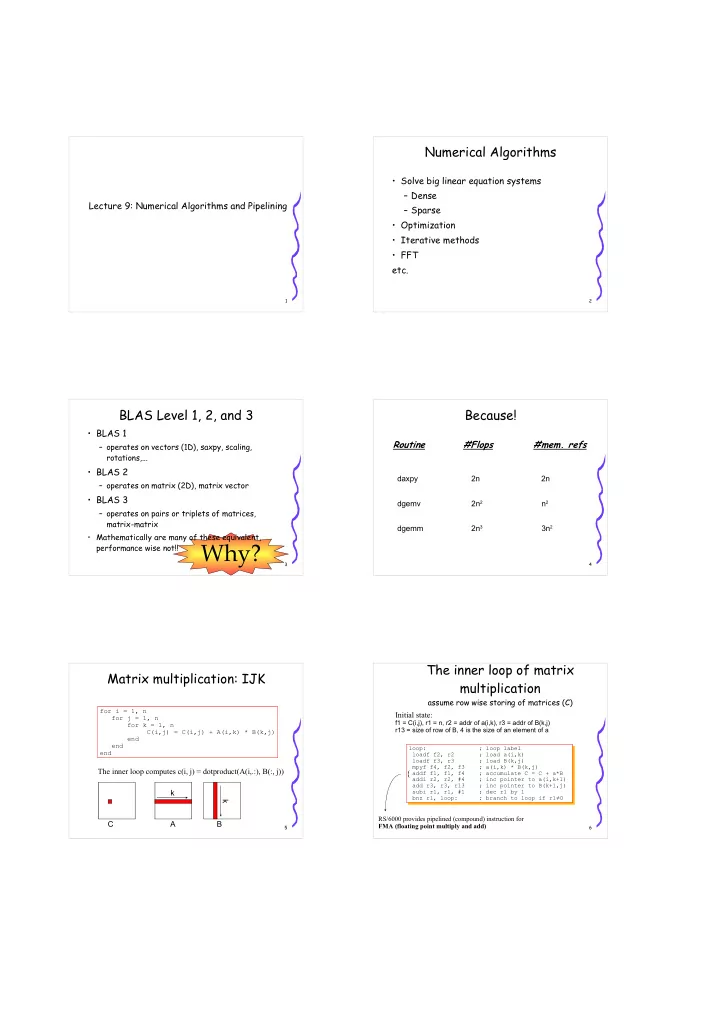

Numerical Algorithms • Solve big linear equation systems – Dense Lecture 9: Numerical Algorithms and Pipelining – Sparse • Optimization • Iterative methods • FFT etc. 1 2 BLAS Level 1, 2, and 3 Because! • BLAS 1 Routine #Flops #mem. refs – operates on vectors (1D), saxpy, scaling, rotations,... • BLAS 2 daxpy 2n 2n – operates on matrix (2D), matrix vector • BLAS 3 dgemv 2n 2 n 2 – operates on pairs or triplets of matrices, matrix-matrix dgemm 2n 3 3n 2 • Mathematically are many of these equivalent, Why? performance wise not!! 3 4 The inner loop of matrix Matrix multiplication: IJK multiplication assume row wise storing of matrices (C) for i = 1, n Initial state: for j = 1, n f1 = C(i,j), r1 = n, r2 = addr of a(i,k), r3 = addr of B(k,j) for k = 1, n r13 = size of row of B, 4 is the size of an element of a C(i,j) = C(i,j) + A(i,k) * B(k,j) end end loop: ; loop label end loadf f2, r2 ; load a(i,k) loadf f3, r3 ; load B(k,j) mpyf f4, f2, f3 ; a(i,k) * B(k,j) The inner loop computes c(i, j) = dotproduct(A(i,.:), B(:, j)) { addf f1, f1, f4 ; accumulate C = C + a*B addi r2, r2, #4 ; inc pointer to a(i,k+1) add r3, r3, r13 ; inc pointer to B(k+1,j) k subi r1, r1, #1 ; dec r1 by 1 bnz r1, loop: ; branch to loop if r1 ≠ 0 k RS/6000 provides pipelined (compound) instruction for C A B FMA (floating point multiply and add) 5 6

Cache Properties Matrix Multiplication: IKJ-optimization gives a better spatial locality • Assume row wise storing of matrices (C) • The inner loop fetches a whole row of A, a for i = 1, n Stride 1 whole column of B for k = 1, n for j = 1, n • One row may be larger than the cache C(i,j) = C(i,j) + A(i,k) * B(k,j) end end – 128KB cache = 32KWords = 16K double end • One column of B occupies s*n words All references in the inner loop are Stride 1; (refer to consecutive – s is the size of the cache line memory addresses) – The inner loop use 1 word from each j j fetched cache line – Fills the cache with lots of data not used C A B 7 8 Matrix Multiplication: Improve Temporal Locality Column Blocked block for better cache usage • Transform one multiplication to do jb = 1, n, bs multiplications of submatrices do i = 1, n do j = jb, jb+bs-1 C11 C12 C13 A11 A12 A13 B11 B12 B13 do k = 1, n C21 C22 C23 A21 A22 A23 B21 B22 B23 = * c(i,j) = c(i,j) + a(i,k)*b(k,j) C31 C32 C33 A31 A32 A33 B31 B32 B33 end C11 = A11*B11 + A12*B21 + A13*B31 C A B • The sizes of the submatrices is chosen such that they fit in the cache Assume the cache can hold one column –m x m submatrices block of C and B and –2m 3 flops for 3m 2 data in cache one row from A • Each word fetched to the cache is used m times 9 10 Blocked Matrix Multiplication Matrix Multiplication: blocked • The ib, jb, kb loops steps between submatrices • The i, j, k loops performs a matrix multiplication do ib = 1, n, bs Want C to be in the cache do jb = 1, n, bs on submatrices as long as possible do kb = 1, n , bs do i = ib, min(ib+bs, n) • bs is the block size do k = kb, min(kb+bs, n) do j = jb, min(jb+bs, n) • The i, j, k loops are optimized for fast execution C(i,j) = C(i,j) + A(i,k) * B(k,j) end • The ib, jb, kb loops are optimized for cache reuse end C A B end – the kb loop reuses one C block ib end kb kb end ib bs – new A and B blocks are fetched to the cache end • For example on performance, see the Master's jb jb Thesis cacheprobe 11 12

Parallel Matrix Multiplication for Parallel Matrix Multiplication Distributed Memory Message Passing Machine Several copies of A, block column of B and C • Static, but optimal load balancing – Each processor computes the same number of elements of C p 1 p 2 p 3 p 4 All • Coordination/synchronizing Let p = 4 Original data distribution • Initial data distribution – 1D (the nodes are organized in 1D, (0: p -1)) • Each node stores A and one block column of B and C A B,C Local computation • Each node stores one block row of A, B, C C = A * B • Each node stores one block row of A and C and one block column of B Extra space required to store a copy of A – 2D (the nodes are organized in 2D, ( i , j ) i = 1: √ P, j = 1: √ P ) • Each node stores n / √ P blocks of A, B, and C 13 14 Parallel Matrix Multiplication Each node stores one block row of A and C and one block column of B Assume 4 processors in a ring Original data distribution A, C B “No extra space needed” However there is need for buffer Local computation space (user or system) Communication Next = mod(me+1,p) Prev = mod(p+me-1,p) B: p send & receive of nx (n/p) for i = 1:p elements C = C + A*B send(B, Next) Overlap communication/computation? receive(B, Prev) end 15 Parallel Matrix Multiplication Parallel Matrix Multiplication 2D-blocking (Cannon’s algorithm) 2D-blocking (Cannon’s algorithm) A 00 A 01 A 02 A 03 A 00 A 01 A 02 A 03 A 01 A 02 A 03 A 00 A 00 A 01 A 02 A 03 B 00 B 01 B 02 B 03 B 00 B 11 B 22 B 33 B 10 B 21 B 32 B 03 B 00 B 01 B 02 B 03 A 10 A 11 A 12 A 13 A 11 A 12 A 13 A 10 A 12 A 13 A 10 A 11 Let p = 16 0 A 10 A 11 A 12 A 13 B 10 B 11 B 12 B 13 1 B 10 B 21 B 32 B 03 2 B 20 B 31 B 02 B 13 Original data distribution B 10 B 11 B 12 B 13 A 20 A 21 A 22 A 23 A 22 A 23 A 20 A 21 A 23 A 20 A 21 A 22 A 20 A 21 A 22 A 23 Processor ( i,j ) stores A ij , B ij , C ij B 20 B 21 B 22 B 23 B 20 B 31 B 02 B 13 B 30 B 01 B 12 B 23 B 20 B 21 B 22 B 23 A 30 A 31 A 32 A 33 A 33 A 30 A 31 A 32 A 30 A 31 A 32 A 33 A 30 A 31 A 32 A 33 B 30 B 31 B 32 B 33 B 30 B 01 B 12 B 23 B 00 B 11 B 22 B 33 B 30 B 31 B 32 B 33 A 02 A 03 A 00 A 01 A 03 A 00 A 01 A 02 B 20 B 31 B 02 B 13 B 30 B 01 B 12 B 23 Idea A 13 A 10 A 11 A 12 A 10 A 11 A 12 A 13 Shift blocks of A along rows 3 4 B 30 B 01 B 12 B 23 B 00 B 11 B 22 B 33 Shift blocks of B along columns A 20 A 21 A 22 A 23 A 21 A 22 A 23 A 20 B 00 B 11 B 22 B 33 B 10 B 21 B 32 B 03 A 31 A 32 A 33 A 30 A 32 A 33 A 30 A 31 B 10 B 21 B 32 B 03 B 20 B 31 B 02 B 13 17 18

Parallel Matrix Multiplication More about uniprocessors 2D-blocking (Cannon’s algorithm) • “No extra space needed” for i = 1, n for j = 1, n – However, there is a for k = 1, n need for buffer space C(i,j) = C(i,j) + A(i,k) * B(k,j) shift(B, MY_COL) end (user or system) shift(A, MY_ROW) end for i = 1:Q • Initial communication end C = C + A*B shift(A, MY_ROW) – shift A, B: shift(B, MY_COL) How do you do this on a super scalar processor?? end ( n / √ p )x( n / √ p ) elements I.e., when we want to do more than one (2-4) operations per cycle? • Additional Communication – shift A, B: ( n / √ p )x( n / √ p ) elements 19 20 Kernel Solution: Outer loop unroll for i = 1, n for i = 1, n, 2 for j = 1, n for j = 1, n, 2 T = C(i,j) T11..T22 = C(i,j)..C(i+1,j+1) for k = 1, n for k = 1, n T += A(i,k) * B(k,j) T11 += A(i ,k) * B(k,j ) end T12 += A(i ,k) * B(k,j+1) C(i,j) = T end T21 += A(i+1,k) * B(k,j ) end T22 += A(i+1,k) * B(k,j+1) end How well is this going on a super scalar C(i,j)..C(i+1,j+1) = T11..T22 processor with a ”long pipe”?? end I.e., when we want to fill the pipeline as much end as possible? 21 22 Preloading Prefetching for i = 1, n • Also called ”algorithmic preloading” for j = 1, n • Load the next set of blocks that are going to T11..T22 = C(i,j)..C(i+1,j+1) for k = 1, n be multiplied – can be made into temporary load A(i,k+1), B(k+1,j)... variables by the programmer, or in the T11 += A(i ,k) * B(k,j ) T12 += A(i+1,k) * B(k,j+1) object code by the compiler T21 += A(i ,k) * B(k,j ) T22 += A(i+1,k) * B(k,j+1) • O ( n 2 ) data accesses / O ( n 3 ) compute steps end C(i,j)..C(i+1,j+1) = T11..T22 end end 23 24

Gaussian elimination Recursion • The research group from Umeå are pioneers • Recursive data format • Pipelining • Recursive algorithms • Broadcast – SMP parallelism appears by it self • Cycling striping 25 26 Iterative Methods Laplace’s Equation • Used to solve sparse equation systems • Utilizes numerical principles • Example: heat distribution (Laplace's Equation) • Wants to find a solution to a partial differential equation • Finite difference method • Computation molecule 27 28 Laplace’s Equation Natural ordering • Put the points as you allocate • After approx. and rewrites (see the book) elements in a matrix • Iteratively: 29 30

Gauss-Seidel Relaxation Relation to linear equation systems 31 32 Multigrid Red-Black Ordering Next step: finite- element-methods 33 34 Pipelining Pipelining – example • Divide a problem into a series of tasks for (i = 0; i < n; i++) • Each task can be solved by one processor sum = sum + a[i] * b[i]; • The base for all sequential programs • Pipeline sum = sum + a[0] * b[0]; • Hide latency/communication sum = sum + a[1] * b[1]; sum = sum + a[2] * b[2]; sum = sum + a[3] * b[3]; sum = sum + a[4] * b[4]; 35 36

Pipelining Type 1 Three cases when pipelining should be considered: 1) More than one instance of the complete problem is to be executed 2) A series of data items must be processed, each requiring multiple operations 3) Information to start the next process can be passed forward before the process has completed all its internal operations An example is vector operations on processors with more than one functional unit 37 38 Type 2 Type 2 Sorting 39 40 Type 3 Type 3 Solving a linear equation system 41 42

Recommend

More recommend