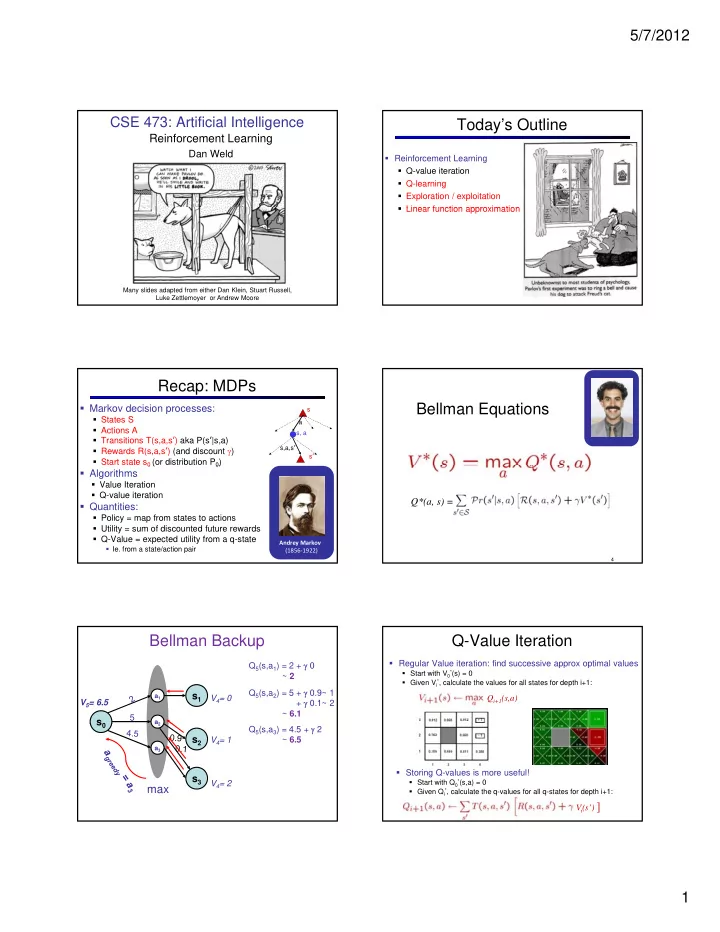

5/7/2012 CSE 473: Artificial Intelligence Today’s Outline Reinforcement Learning Dan Weld Reinforcement Learning Q-value iteration Q-learning Exploration / exploitation Linear function approximation Linear function approximation Many slides adapted from either Dan Klein, Stuart Russell, Luke Zettlemoyer or Andrew Moore 1 Recap: MDPs Bellman Equations Markov decision processes: s States S a Actions A s, a Transitions T(s,a,s ʼ ) aka P(s ʼ |s,a) s,a,s ʼ Rewards R(s,a,s ʼ ) (and discount ) s ʼ Start state s 0 (or distribution P 0 ) 0 ( 0 ) Algorithms Value Iteration Q-value iteration Q*(a, s) = Quantities: Policy = map from states to actions Utility = sum of discounted future rewards Q-Value = expected utility from a q-state Andrey Markov Ie. from a state/action pair (1856 ‐ 1922) 4 Bellman Backup Q-Value Iteration Regular Value iteration: find successive approx optimal values Q 5 (s,a 1 ) = 2 + 0 Start with V 0 * (s) = 0 ~ 2 Given V i * , calculate the values for all states for depth i+1: Q 5 (s,a 2 ) = 5 + 0.9~ 1 s 1 a 1 V 4 = 0 Q i+1 (s,a) + 0.1~ 2 V 5 = 6.5 ~ 6.1 5 s 0 s 0 a 2 a 2 Q 5 (s,a 3 ) = 4.5 + 2 s 2 V 4 = 1 ~ 6.5 a 3 Storing Q-values is more useful! s 3 Start with Q 0 V 4 = 2 * (s,a) = 0 max Given Q i * , calculate the q-values for all q-states for depth i+1: V i (s’) ] 1

5/7/2012 Q-Value Iteration Reinforcement Learning Markov decision processes: Initialize each q-state: Q 0 (s,a) = 0 States S s Actions A Repeat a Transitions T(s,a,s ʼ ) aka P(s ʼ |s,a) For all q-states, s,a s, a Rewards R(s,a,s ʼ ) (and discount ) Compute Q i+1 (s,a) from Q i by Bellman backup at s,a. s,a,s ʼ Start state s 0 (or distribution P 0 ) 0 ( 0 ) Until max s,a |Q i+1 (s,a) – Q i (s,a)| < s ʼ Algorithms Q-value iteration Q-learning Approaches for mixing exploration & exploitation V i (s’) ] -greedy Exploration functions Applications Stanford Autonomous Helicopter http://heli.stanford.edu/ Robotic control helicopter maneuvering, autonomous vehicles Mars rover - path planning, oversubscription planning g g elevator planning Game playing - backgammon, tetris, checkers Neuroscience Computational Finance, Sequential Auctions Assisting elderly in simple tasks Spoken dialog management Communication Networks – switching, routing, flow control War planning, evacuation planning 10 Two main reinforcement learning Two main reinforcement learning approaches approaches Model-based approaches: Model-based approaches: explore environment & learn model, T=P( s ʼ | s , a ) and R( s , a ), Learn T + R (almost) everywhere |S| 2 |A| + |S||A| parameters (40,000) use model to plan policy, MDP-style approach leads to strongest theoretical results h l d t t t th ti l lt often works well when state-space is manageable Model-free approach: Model-free approach: Learn Q don ʼ t learn a model; learn value function or policy directly |S||A| parameters (400) weaker theoretical results often works better when state space is large 2

5/7/2012 Recap: Sampling Expectations Recap: Exp. Moving Average Want to compute an expectation weighted by P(x): Exponential moving average Makes recent samples more important Model-based: estimate P(x) from samples, compute expectation Forgets about the past (distant past values were wrong anyway) Easy to compute from the running average Model-free: estimate expectation directly from samples Decreasing learning rate can give converging averages Why does this work? Because samples appear with the right frequencies! Q-Learning Update Exploration-Exploitation tradeoff You have visited part of the state space and found a Q-Learning = sample-based Q-value iteration reward of 100 is this the best you can hope for??? How learn Q*(s,a) values? Exploitation : should I stick with what I know and find Receive a sample (s a s ʼ r) Receive a sample (s,a,s ,r) a good policy w.r.t. this knowledge? d li hi k l d ? Consider your old estimate: at risk of missing out on a better reward somewhere Consider your new sample estimate: Exploration : should I look for states w/ more reward? Incorporate the new estimate into a running average: at risk of wasting time & getting some negative reward 16 Q-Learning: Greedy Exploration / Exploitation Several schemes for action selection Simplest: random actions ( greedy ) Every time step, flip a coin With probability , act randomly With probability 1- , act according to current policy With b bilit 1 t di t t li QuickTime™ and a H.264 decompressor Problems with random actions? are needed to see this picture. You do explore the space, but keep thrashing around once learning is done One solution: lower over time Another solution: exploration functions 3

5/7/2012 Exploration Functions Q-Learning Final Solution When to explore Q-learning produces tables of q-values: Random actions: explore a fixed amount Better idea: explore areas whose badness is not (yet) established Exploration function Exploration function Takes a value estimate and a count, and returns an optimistic utility , e.g. (exact form not important) Exploration policy π ( s ’ )= vs. Q-Learning – Small Problem Q-Learning Properties Doesn’t work Amazing result: Q-learning converges to optimal policy If you explore enough If you make the learning rate small enough In realistic situations, we can’t possibly learn about … but not decrease it too quickly! every single state! Not too sensitive to how you select actions (!) y ( ) Too many states to visit them all in training Too many states to visit them all in training Too many states to hold the q-tables in memory Neat property: off-policy learning learn optimal policy without following it (some caveats) Instead, we need to generalize : Learn about a few states from experience Generalize that experience to new, similar states (Fundamental idea in machine learning) S E S E Example: Pacman Feature-Based Representations Let ʼ s say we discover Solution: describe a state using a vector of features (properties) through experience Features are functions from states to that this state is bad: real numbers (often 0/1) that capture important properties of the state Example features: Example features: In naïve Q learning, Distance to closest ghost we know nothing Distance to closest dot about related states Number of ghosts 1 / (dist to dot) 2 and their Q values: Is Pacman in a tunnel? (0/1) …… etc. Or even this third one! Can also describe a q-state (s, a) with features (e.g. action moves closer to food) 4

5/7/2012 Linear Feature Functions Function Approximation Using a feature representation, we can write a q function (or value function) for any state Q-learning with linear q-functions: using a linear combination of a few weights: Exact Q ʼ s Advantage: our experience is summed up in Approximate Q ʼ s a few powerful numbers Intuitive interpretation: |S| 2 |A| ? |S||A| ? Adjust weights of active features E.g. if something unexpectedly bad happens, disprefer all states Disadvantage: states may share features but with that state ʼ s features actually be very different in value! Formal justification: online least squares Example: Q-Pacman Linear Regression 40 26 24 20 22 20 20 30 40 20 30 20 10 0 10 0 20 0 0 Prediction Prediction Ordinary Least Squares (OLS) Minimizing Error Imagine we had only one point x with features f(x): Error or “ residual ” Error or residual Observation Prediction Approximate q update: “ target ” “ prediction ” 0 0 20 5

5/7/2012 Overfitting Which Algorithm? 30 Q-learning, no features, 50 learning trials: 25 20 Degree 15 polynomial 15 10 QuickTime™ and a 5 GIF decompressor are needed to see this picture. 0 -5 -10 -15 0 2 4 6 8 10 12 14 16 18 20 Which Algorithm? Which Algorithm? Q-learning, no features, 1000 learning trials: Q-learning, simple features, 50 learning trials: QuickTime™ and a QuickTime™ and a GIF decompressor GIF decompressor are needed to see this picture. are needed to see this picture. Partially observable MDPs A POMDP: Ghost Hunter Markov decision processes: States S Actions A b Transitions P(s ʼ |s,a) (or T(s,a,s ʼ )) Rewards R(s,a,s ʼ ) (and discount ) a Start state distribution b 0 =P(s 0 ) b, a QuickTime™ and a H.264 decompressor are needed to see this picture. o POMDPs, just add: b ʼ Observations O Observation model P(o|s,a) (or O(s,a,o)) 6

Recommend

More recommend