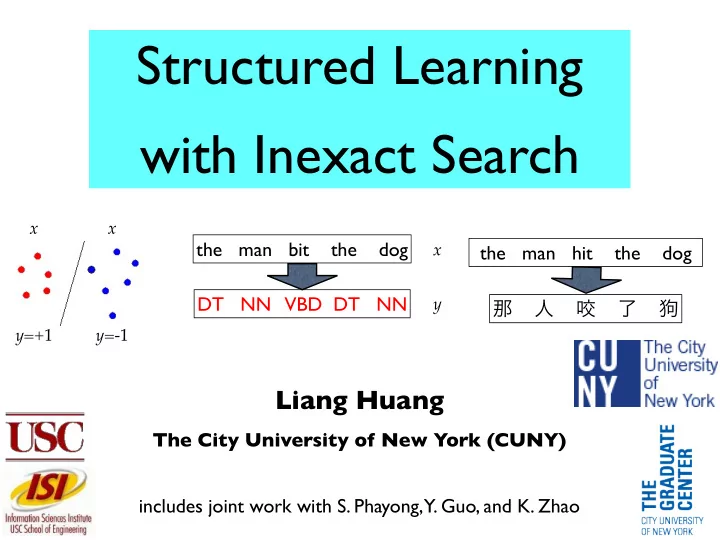

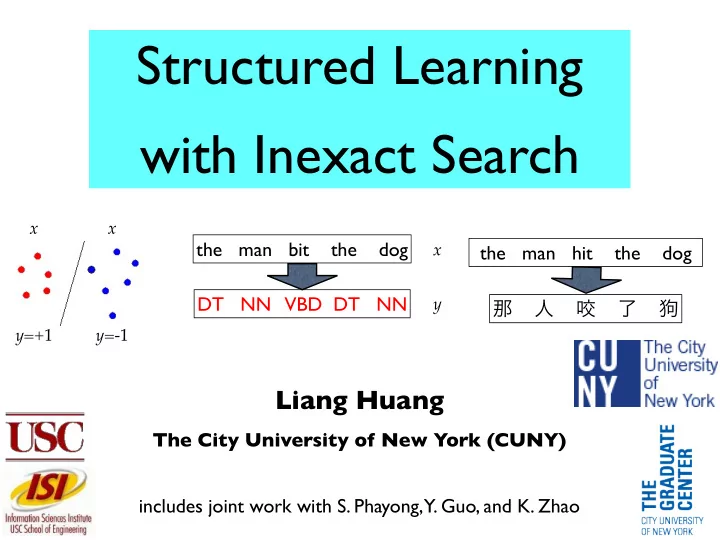

Structured Learning with Inexact Search
Liang Huang, The City University of New York (CUNY)
Includes joint work with S. Phayong, Y. Guo, and K. Zhao.
[Figure: from binary classification (y = +1 / −1) to structured outputs for x = "the man bit the dog": the POS tags DT NN VBD DT NN, and the Chinese translation 那人咬了狗 ("the man bit the dog").]

Structured Perceptron (Collins 02)
[Figure: binary classification has a constant number of classes, so exact inference is trivial; structured classification (e.g. tagging "the man bit the dog" as DT NN VBD DT NN) has exponentially many classes, so exact inference is hard. In both cases, inference finds z for x under weights w, and the weights are updated if y ≠ z.]
• challenge: search efficiency (exponentially many classes)
  • often use dynamic programming (DP)
  • but DP is still too slow for repeated use, e.g. parsing is O(n³)
  • and DP can't use non-local features
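To make the DP bullet concrete, the sketch below contrasts brute-force enumeration with Viterbi decoding for a toy bigram tagger. Everything in it is an illustrative assumption rather than material from the talk: the two-tag set {N, V}, the emission/transition feature keys, and the helper names `seq_score`, `brute_force`, and `viterbi`.

```python
from itertools import product

TAGS = ("N", "V")  # toy tag set (illustrative assumption)


def seq_score(w, words, tags):
    """Score of a tag sequence: emission weights plus bigram transition weights."""
    total, prev = 0.0, "<s>"
    for word, tag in zip(words, tags):
        total += w.get(("emit", word, tag), 0.0) + w.get(("trans", prev, tag), 0.0)
        prev = tag
    return total


def brute_force(w, words):
    """Exact search by enumerating all |TAGS|^n sequences (exponential in n)."""
    return max(product(TAGS, repeat=len(words)), key=lambda tags: seq_score(w, words, tags))


def viterbi(w, words):
    """Exact search by dynamic programming in O(n * |TAGS|^2); assumes len(words) >= 1.

    best[t] holds (score, sequence) of the highest-scoring prefix ending in tag t;
    for brevity each cell stores its whole sequence instead of a backpointer.
    """
    best = {t: (w.get(("emit", words[0], t), 0.0) + w.get(("trans", "<s>", t), 0.0), (t,))
            for t in TAGS}
    for word in words[1:]:
        best = {t: max((s + w.get(("trans", p, t), 0.0) + w.get(("emit", word, t), 0.0),
                        seq + (t,))
                       for p, (s, seq) in best.items())
                for t in TAGS}
    return max(best.values())[1]
```

Both functions return an exact argmax; the DP only works because the bigram features are local, which is the limitation the last bullet points out.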

Perceptron w/ Inexact Inference
[Figure: the same training loop, but with inexact inference (beam search or greedy search): does it still work?]
• routine use of inexact inference in NLP (e.g. beam search)
• how does structured perceptron work with inexact search?
  • so far most structured learning theory assumes exact search
  • would search errors break these learning properties?
  • if so, how should learning be modified to accommodate inexact search?

Idea: Search-Error-Robust Model
[Figure: training and testing run the same inexact inference on x with weights w; training additionally updates w if y ≠ z.]
• train a "search-specific" or "search-error-robust" model
  • we assume the same "search box" in training and testing
  • the model should "live with" search errors from the search box
• exact search ⇒ convergence; greedy search ⇒ no convergence
  • how can we make perceptron converge with greedy search?

Our Contributions
[Figure: greedy or beam search on x, with early update on prefixes y′, z′.]
• theory: a framework for perceptron with inexact search
  • explains previous work (early update, etc.) as special cases
• practice: new update methods within the framework
  • converge faster and better than early update
  • real impact on state-of-the-art parsing and tagging
  • more advantageous when search error is more severe

In this talk...
• Motivations: Structured Learning and Search Efficiency
• Structured Perceptron and Inexact Search
  • perceptron does not converge with inexact search
  • early update (Collins/Roark '04) seems to help, but why?
• New Perceptron Framework for Inexact Search
  • explains early update as a special case
  • convergence theory with arbitrarily inexact search
  • new update methods within this framework
• Experiments

Structured Perceptron (Collins 02)
• simple generalization from binary/multiclass perceptron
• online learning: for each example (x, y) in data
  • inference: find the best output z given the current weights w
  • update weights if y ≠ z
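The loop above can be sketched for a toy tagging task. This is a minimal illustration under assumed details: tags {N, V}, emission and bigram-transition features, the "time flies" example with gold tags N V, and hypothetical helper names (`features`, `exact_inference`, `structured_perceptron`). Exact inference is brute-force enumeration, which is only feasible because the example is tiny.

```python
from collections import Counter
from itertools import product

TAGS = ("N", "V")  # toy tag set (illustrative assumption)


def features(words, tags):
    """Phi(x, y): counts of emission (word, tag) and bigram transition (prev, tag) features."""
    phi = Counter()
    prev = "<s>"
    for word, tag in zip(words, tags):
        phi[("emit", word, tag)] += 1
        phi[("trans", prev, tag)] += 1
        prev = tag
    return phi


def score(w, phi):
    """Sparse dot product w . Phi (missing Counter keys count as 0)."""
    return sum(w[f] * v for f, v in phi.items())


def exact_inference(w, words):
    """Exact argmax by brute-force enumeration (exponential in sentence length)."""
    return max(product(TAGS, repeat=len(words)), key=lambda z: score(w, features(words, z)))


def structured_perceptron(data, epochs=10):
    w = Counter()
    for _ in range(epochs):
        for words, y in data:                     # online: one example (x, y) at a time
            z = exact_inference(w, words)         # inference with the current weights
            if z != tuple(y):                     # update weights if y != z
                w.update(features(words, y))      # w += Phi(x, y)
                w.subtract(features(words, z))    # w -= Phi(x, z)
    return w


data = [(("time", "flies"), ("N", "V"))]
w = structured_perceptron(data)
print(exact_inference(w, ("time", "flies")))  # ('N', 'V')
```

With all-zero weights the first prediction is N N; a single update then makes N V the argmax, and no further updates occur.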

Convergence with Exact Search
• linear classification: converges iff the data is separable
• structured: converges iff the data is separable and search is exact
  • separable: there is an oracle vector that correctly labels all examples
  • one vs. the rest: the correct label scores better than all incorrect labels, by a margin δ
• theorem: if separable, then # of updates ≤ R²/δ² (R: diameter)
[Figure: binary case (Rosenblatt 1957) and structured case (Collins 2002), each with data of diameter R separated by margin δ.]
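The quantities in the theorem can be computed directly on a toy example. The sketch assumes emission/transition features over tags {N, V}, a hand-picked candidate oracle vector `u`, and a hypothetical helper `mistake_bound`; it enumerates every incorrect z, so it only scales to tiny inputs.

```python
import math
from collections import Counter
from itertools import product

TAGS = ("N", "V")  # toy tag set (illustrative assumption)


def features(words, tags):
    """Phi(x, y): emission (word, tag) and bigram transition (prev, tag) counts."""
    phi = Counter()
    prev = "<s>"
    for word, tag in zip(words, tags):
        phi[("emit", word, tag)] += 1
        phi[("trans", prev, tag)] += 1
        prev = tag
    return phi


def mistake_bound(data, u):
    """Return (R, delta, R^2 / delta^2) for a candidate oracle vector u.

    R     = max ||Phi(x, y) - Phi(x, z)|| over all examples and all z != y
    delta = min (u / ||u||) . (Phi(x, y) - Phi(x, z)), the margin of the unit oracle vector
    """
    u_norm = math.sqrt(sum(v * v for v in u.values()))
    R, delta = 0.0, math.inf
    for words, y in data:
        for z in product(TAGS, repeat=len(words)):
            if z == tuple(y):
                continue
            d = features(words, y)
            d.subtract(features(words, z))  # Delta Phi(x, y, z)
            R = max(R, math.sqrt(sum(v * v for v in d.values())))
            delta = min(delta, sum(u.get(f, 0.0) * v for f, v in d.items()) / u_norm)
    if delta <= 0:
        raise ValueError("u does not separate the data with a positive margin")
    return R, delta, (R / delta) ** 2
```

For the single example ("time", "flies") with gold tags N V, and `u` weighting only ("emit", "flies", "V") and ("trans", "N", "V") by 1 each, the largest ΔΦ has norm √8 and the smallest normalized margin is 1/√2, so the bound is 16 updates.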

Convergence with Exact Search
[Figure: training example "time flies" with correct label N V in the output space {N,V} × {N,V}; the update moves the current model w(k) to the new model w(k+1).]
• standard perceptron converges with exact search

No Convergence w/ Greedy Search
[Figure: the same example; greedy search outputs an incorrect label, and the update ΔΦ(x, y, z) moves the current model w(k) to a new model w(k+1).]
• standard perceptron does not converge with greedy search

Early Update (Collins/Roark 2004) to the Rescue
[Figure: the same greedy example, comparing the standard update with the early update ΔΦ(x, y, z) from w(k) to a new model w(k+1).]
• standard perceptron does not converge with greedy search
• early update: stop and update at the first mistake

Why?
• why does inexact search break the convergence property?
• what is required for convergence? exactness?
• why does early update (Collins/Roark 04) work?
  • it works well in practice and is now a standard method
  • but there has been no theoretical justification
• we answer these questions by inspecting the convergence proof

Geometry of Convergence Proof, Part 1
[Figure: exact inference returns the 1-best z ≠ y; the perceptron update ΔΦ(x, y, z) moves the current model w(k) to the new model w(k+1).]
• separation: the unit oracle vector u scores the correct label y higher than any incorrect z by a margin ≥ δ
• so each update increases u · w by at least δ; by induction, after k updates ‖w(k+1)‖ ≥ kδ (part 1: lower bound)

Geometry of Convergence Proof, Part 2
[Figure: the update ΔΦ(x, y, z) from the current model w(k) to the new model w(k+1), drawn against the violation.]
• violation: the incorrect label z scored higher than y under the current model, i.e. w(k) · ΔΦ(x, y, z) ≤ 0
• R: max diameter, i.e. ‖ΔΦ(x, y, z)‖ ≤ R
• so each update adds at most R² to ‖w‖²; by induction, ‖w(k+1)‖² ≤ kR² (part 2: upper bound)
• parts 1 + 2 ⇒ update bound: k ≤ R²/δ²
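Written out, the two parts combine as follows, assuming w(1) = 0 (so w(k+1) is the model after k updates), ΔΦ(x, y, z) = Φ(x, y) − Φ(x, z), ‖u‖ = 1, and violation meaning w(k) · ΔΦ(x, y, z) ≤ 0:

$$
\begin{aligned}
&\text{Part 1 (separation):} && u\cdot w^{(k+1)} = u\cdot w^{(k)} + u\cdot\Delta\Phi(x,y,z) \ge u\cdot w^{(k)} + \delta
\;\Rightarrow\; \|w^{(k+1)}\| \ge u\cdot w^{(k+1)} \ge k\delta\\
&\text{Part 2 (violation, diameter):} && \|w^{(k+1)}\|^2 = \|w^{(k)}\|^2 + 2\,w^{(k)}\cdot\Delta\Phi(x,y,z) + \|\Delta\Phi(x,y,z)\|^2 \le \|w^{(k)}\|^2 + R^2
\;\Rightarrow\; \|w^{(k+1)}\|^2 \le kR^2\\
&\text{Parts 1 + 2:} && k^2\delta^2 \le \|w^{(k+1)}\|^2 \le kR^2 \;\Rightarrow\; k \le R^2/\delta^2
\end{aligned}
$$

Only the middle term of Part 2 uses the search result, and it needs w(k) · ΔΦ(x, y, z) ≤ 0, not that z is the exact 1-best.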

Violation is All We Need!
• exact search is not really required by the proof
  • rather, it is only used to ensure violation!
• the proof only uses 3 facts:
  1. separation (margin)
  2. diameter (always finite)
  3. violation (but no need for exact search)
[Figure: the exact 1-best z is only one of all violations, i.e. incorrect labels scored higher than the correct label y; the update ΔΦ(x, y, z) toward any of them works.]

Violation-Fixing Perceptron
• if we guarantee violation, we don't care about exactness!
  • violation is good because we can at least fix a mistake
• same mistake bound as before!
[Figure: standard perceptron (exact inference returns z; update weights if y ≠ z) vs. violation-fixing perceptron (find a violation (y′, z); update weights if y′ ≠ z). The set of all violations contains all possible updates.]
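A minimal sketch of the violation-fixing loop follows. The search is a pluggable callback, and the update is refused unless it is a violation. The toy tagger (tags {N, V}, emission/transition features) and the names `violation_fixing_perceptron`, `find_violation`, and `exact_violation` are hypothetical illustrations, not the authors' code.

```python
from collections import Counter
from itertools import product

TAGS = ("N", "V")  # toy tag set (illustrative assumption)


def features(words, tags):
    """Phi(x, y); zip() truncates to len(tags), so prefixes get prefix features."""
    phi = Counter()
    prev = "<s>"
    for word, tag in zip(words, tags):
        phi[("emit", word, tag)] += 1
        phi[("trans", prev, tag)] += 1
        prev = tag
    return phi


def score(w, phi):
    return sum(w[f] * v for f, v in phi.items())


def violation_fixing_perceptron(data, find_violation, epochs=10):
    """find_violation(w, words, gold) returns (y_prime, z), two equal-length label
    sequences (possibly prefixes) with y_prime != z, or None if it finds no mistake."""
    w = Counter()
    for _ in range(epochs):
        for words, gold in data:
            pair = find_violation(w, words, gold)
            if pair is None:
                continue
            y_prime, z = pair
            phi_y, phi_z = features(words, y_prime), features(words, z)
            # The mistake bound only needs this inequality, not exact search.
            if score(w, phi_z) < score(w, phi_y):
                raise ValueError("non-violation update: correct (prefix) scores higher")
            w.update(phi_y)    # w += Phi(x, y')
            w.subtract(phi_z)  # w -= Phi(x, z)
    return w


def exact_violation(w, words, gold):
    """Exact search as one special case: the full 1-best z, if it differs from gold."""
    z = max(product(TAGS, repeat=len(words)), key=lambda t: score(w, features(words, t)))
    return None if z == tuple(gold) else (tuple(gold), z)
```

Swapping in a different `find_violation` (for example one built on greedy or beam search that returns prefixes) changes only the search; the update and the violation check stay the same.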

What If We Can’t Guarantee Violation?
• this is why perceptron doesn't work well with inexact search
  • because not every update is guaranteed to be a violation
  • thus the proof breaks; no convergence guarantee
• example: beam or greedy search
  • the model might prefer the correct label (if search were exact)
  • but the search prunes it away
  • such a non-violation update is "bad" because it doesn't fix any mistake
  • the new model still misguides the search
[Figure: the beam prunes the correct label, so the update from the current model is a bad update.]

Standard Update: No Guarantee
[Figure: training example "time flies" with correct label N V in the output space {N,V} × {N,V}; greedy search outputs an incorrect label, and the standard update ΔΦ(x, y, z) moves w(k) to w(k+1).]
• the standard update doesn't converge because it doesn't guarantee violation
• here the correct label scores higher: a non-violation, i.e. a bad update!

Early Update: Guarantees Violation
[Figure: the same example; the standard update on full sequences vs. the early update ΔΦ(x, y, z) on prefixes at the first mistake.]
• the standard update doesn't converge because it doesn't guarantee violation
• early update: the incorrect prefix scores higher, a violation!
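The contrast between the two updates can be reproduced with greedy search on the "time flies" example. The weight values are hypothetical, chosen so that greedy search commits to V for "time" even though the full correct sequence N V scores higher; the feature scheme and helper names are illustrative assumptions.

```python
TAGS = ("N", "V")  # toy tag set (illustrative assumption)


def features(words, tags):
    """Phi over the first len(tags) words: emission and bigram transition counts."""
    phi, prev = {}, "<s>"
    for word, tag in zip(words, tags):
        for f in (("emit", word, tag), ("trans", prev, tag)):
            phi[f] = phi.get(f, 0) + 1
        prev = tag
    return phi


def score(w, words, tags):
    return sum(w.get(f, 0.0) * v for f, v in features(words, tags).items())


def greedy(w, words):
    """Greedy left-to-right tagging: commit to the best next tag given the prefix."""
    z = []
    for _ in words:
        z.append(max(TAGS, key=lambda t: score(w, words, z + [t])))
    return z


def first_mistake(gold, z):
    """Early update: cut both sequences right after their first disagreement."""
    for i, (g, p) in enumerate(zip(gold, z)):
        if g != p:
            return list(gold[:i + 1]), list(z[:i + 1])
    return None


def is_violation(w, words, y_prime, z):
    """True iff the incorrect (prefix) z scores at least as high as y_prime."""
    return score(w, words, z) >= score(w, words, y_prime)


# Hypothetical weights: greedy errs although the gold sequence scores higher.
w = {("emit", "time", "V"): 1.0, ("trans", "N", "V"): 3.0}
words, gold = ("time", "flies"), ["N", "V"]
z = greedy(w, words)                                    # ['V', 'N']
print(is_violation(w, words, gold, z))                  # False: standard update is "bad"
print(is_violation(w, words, *first_mistake(gold, z)))  # True: early update fixes a violation
```

The early prefix is always a violation under greedy search, because at the first mistake both prefixes share the same history and greedy picked the higher-scoring next tag.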

Early Update: from Greedy to Beam
• beam search is a generalization of greedy search (where b = 1)
  • at each stage we keep the top b hypotheses
  • widely used: tagging, parsing, translation...
• early update: update when the correct label first falls off the beam
  • up to this point the incorrect prefix should score higher
• standard update (full update): no guarantee!
[Figure: the correct label falls off the beam (pruned); up to this point the incorrect prefix scores higher, so the early update is a guaranteed violation, while the standard update has no guarantee.]
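A minimal beam-search version of early update follows, assuming the same kind of toy tagger (tags {N, V}, emission and bigram-transition features) and hypothetical helper names; `b = 1` reduces it to greedy search.

```python
from collections import Counter

TAGS = ("N", "V")  # toy tag set (illustrative assumption)


def features(words, tags):
    """Phi over the first len(tags) words: emission and bigram transition counts."""
    phi, prev = Counter(), "<s>"
    for word, tag in zip(words, tags):
        phi[("emit", word, tag)] += 1
        phi[("trans", prev, tag)] += 1
        prev = tag
    return phi


def score(w, words, tags):
    return sum(w.get(f, 0.0) * v for f, v in features(words, tags).items())


def step(w, words, beam, b):
    """Extend every hypothesis by one tag and keep the top b (b = 1 is greedy search)."""
    candidates = [h + (t,) for h in beam for t in TAGS]
    return sorted(candidates, key=lambda h: score(w, words, h), reverse=True)[:b]


def early_update_pair(w, words, gold, b):
    """Return (y_prime, z): the gold prefix and the beam's best item at the step where
    the gold prefix first falls off the beam, else (gold, final best)."""
    beam, gold = [()], tuple(gold)
    for i in range(len(words)):
        beam = step(w, words, beam, b)
        if gold[:i + 1] not in beam:
            # every survivor outscores the pruned gold prefix: a guaranteed violation
            return gold[:i + 1], beam[0]
    # gold survived to the end, so beam[0] scores at least as high as gold
    return gold, beam[0]


def train(data, b, epochs=10):
    w = Counter()
    for _ in range(epochs):
        for words, gold in data:
            y_prime, z = early_update_pair(w, words, gold, b)
            if y_prime != z:
                w.update(features(words, y_prime))  # w += Phi(x, y')
                w.subtract(features(words, z))      # w -= Phi(x, z)
    return w


def decode(w, words, b):
    beam = [()]
    for _ in words:
        beam = step(w, words, beam, b)
    return beam[0]
```

With the hypothetical weights {("emit", "time", "V"): 1, ("trans", "N", "V"): 3}, `early_update_pair(w, ("time", "flies"), ("N", "V"), b=1)` stops after the first word and returns the prefixes ("N",) and ("V",); with `b=2` the correct sequence survives and ranks first, so no update is made.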

Early Update as Violation-Fixing
[Figure: the violation-fixing perceptron (find a violation (y′, z); update weights if y′ ≠ z) with beam search: the early update uses the prefixes y′, z at the step where the correct label falls off the beam (pruned), whereas the standard update is bad.]
• also a new definition of "beam separability": a correct prefix should score higher than any incorrect prefix of the same length (maybe too strong)
  • cf. Kulesza and Pereira, 2007

New Update Methods: max-violation, ...
• we have now established a theory for early update (Collins/Roark)
  • but it learns too slowly due to partial updates
• max-violation: use the prefix where the violation is maximum
  • the "worst mistake" in the search space
• all these update methods are violation-fixing perceptrons
[Figure: update positions along the beam search: early, max-violation, latest, and standard (bad!).]
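Max-violation can be sketched in the same kind of toy setting. This is an illustrative reading of the bullet, not the authors' implementation: search runs to the end without stopping early, and among the steps where the beam's best item differs from the gold prefix, the update uses the one with the largest score gap. Tags, features, weights, and helper names are assumptions.

```python
from collections import Counter

TAGS = ("N", "V")  # toy tag set (illustrative assumption)


def features(words, tags):
    """Phi over the first len(tags) words: emission and bigram transition counts."""
    phi, prev = Counter(), "<s>"
    for word, tag in zip(words, tags):
        phi[("emit", word, tag)] += 1
        phi[("trans", prev, tag)] += 1
        prev = tag
    return phi


def score(w, words, tags):
    return sum(w.get(f, 0.0) * v for f, v in features(words, tags).items())


def step(w, words, beam, b):
    """Extend every hypothesis by one tag and keep the top b."""
    candidates = [h + (t,) for h in beam for t in TAGS]
    return sorted(candidates, key=lambda h: score(w, words, h), reverse=True)[:b]


def max_violation_pair(w, words, gold, b):
    """Compare the gold prefix y[:i+1] with the best beam item z_i at every step and
    return the mismatched pair with the largest gap score(z_i) - score(y[:i+1]),
    or None if z_i equals the gold prefix at every step."""
    beam, gold, best = [()], tuple(gold), None
    for i in range(len(words)):
        beam = step(w, words, beam, b)
        y_prefix, z = gold[:i + 1], beam[0]
        if z != y_prefix:
            gap = score(w, words, z) - score(w, words, y_prefix)
            if best is None or gap > best[0]:
                best = (gap, y_prefix, z)
    return None if best is None else best[1:]


def train(data, b, epochs=10):
    w = Counter()
    for _ in range(epochs):
        for words, gold in data:
            pair = max_violation_pair(w, words, gold, b)
            if pair is not None:
                y_prime, z = pair
                w.update(features(words, y_prime))  # w += Phi(x, y')
                w.subtract(features(words, z))      # w -= Phi(x, z)
    return w
```

With the hypothetical weights {("emit", "time", "V"): 1, ("trans", "V", "N"): 5} and b = 1, the gap is 1 after the first word but 6 after the second, so max-violation updates on the full prefixes ("N", "V") vs. ("V", "N"), where early update would stop at ("N",) vs. ("V",). The step where the gold prefix first falls off the beam (or, if it never does, any mismatched step) already has a non-negative gap, so the maximum is non-negative and the update is still a violation.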

Experiments
• trigram part-of-speech tagging: local features only, exact search tractable (proof of concept)
• incremental dependency parsing: non-local features, exact search intractable (real impact)
[Figure: "the man bit the dog" tagged DT NN VBD DT NN, and its dependency tree with "bit" heading "man" and "dog", each of which heads a "the".]
