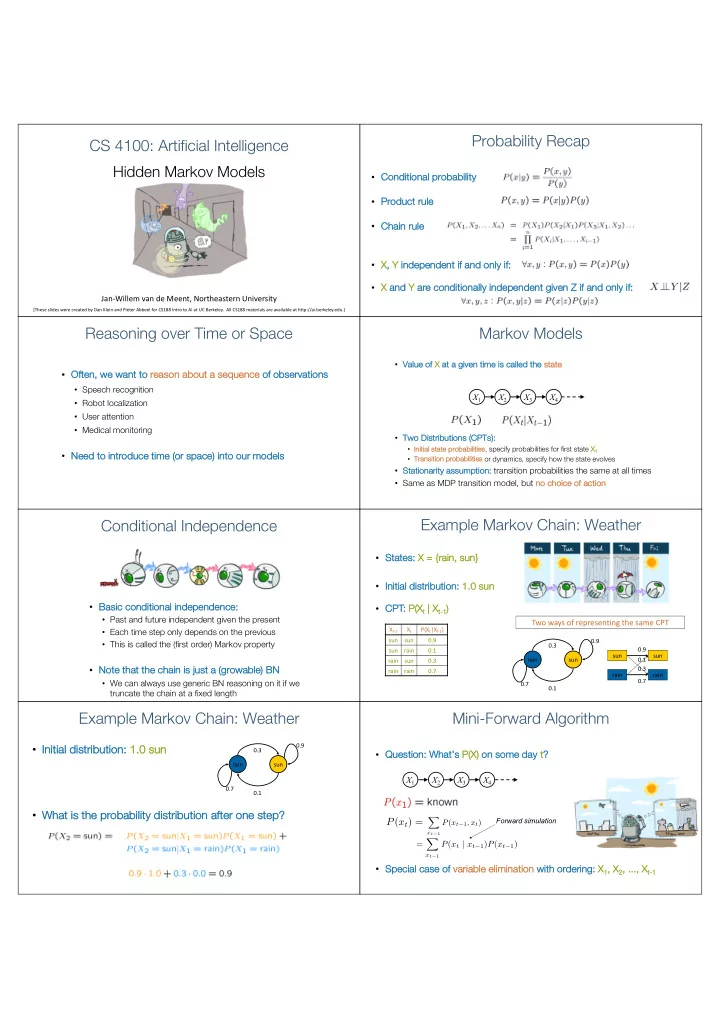

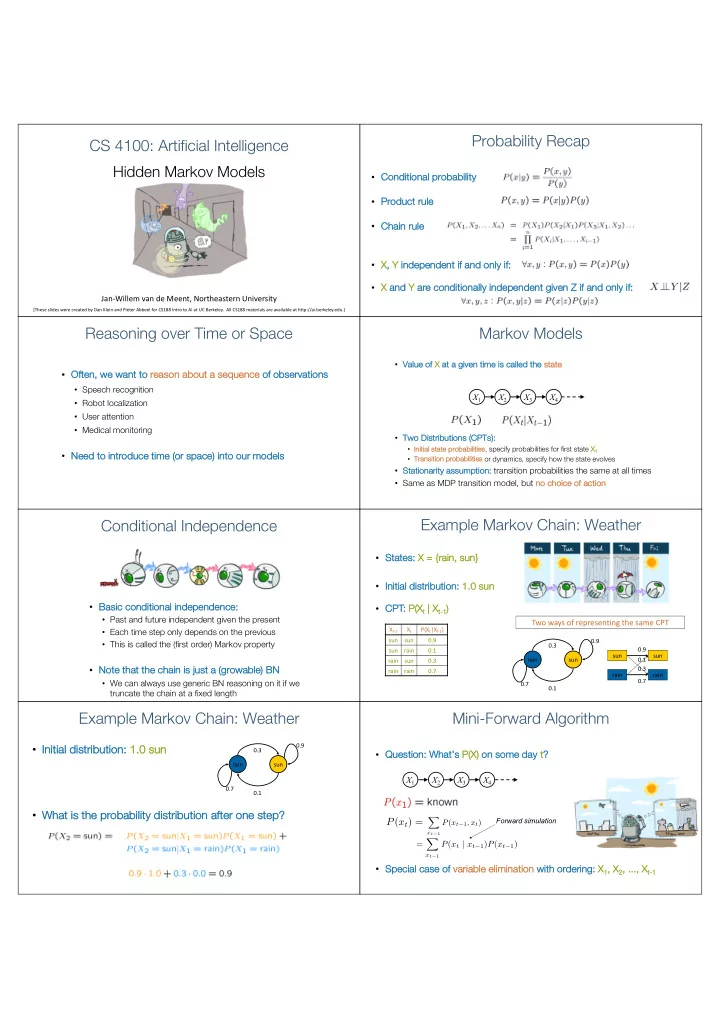

CS 4100: Artificial Intelligence
Hidden Markov Models
Jan-Willem van de Meent, Northeastern University
[These slides were created by Dan Klein and Pieter Abbeel for CS188 Intro to AI at UC Berkeley. All CS188 materials are available at http://ai.berkeley.edu.]

Probability Recap
• Conditional probability: $P(x \mid y) = \frac{P(x, y)}{P(y)}$
• Product rule: $P(x, y) = P(x \mid y)\, P(y)$
• Chain rule: $P(x_1, \dots, x_n) = \prod_{i=1}^{n} P(x_i \mid x_1, \dots, x_{i-1})$
• X, Y independent if and only if: $\forall x, y:\ P(x, y) = P(x)\, P(y)$
• X and Y are conditionally independent given Z if and only if: $\forall x, y, z:\ P(x, y \mid z) = P(x \mid z)\, P(y \mid z)$

Reasoning over Time or Space
• Often, we want to reason about a sequence of observations
  • Speech recognition
  • Robot localization
  • User attention
  • Medical monitoring
• Need to introduce time (or space) into our models

Markov Models
• Value of X at a given time is called the state
  [Diagram: chain $X_1 \to X_2 \to X_3 \to X_4$]
• Two distributions (CPTs):
  • Initial state probabilities, specify probabilities for first state $X_1$
  • Transition probabilities or dynamics, specify how the state evolves
• Stationarity assumption: transition probabilities the same at all times
• Same as MDP transition model, but no choice of action

Conditional Independence
• Basic conditional independence:
  • Past and future independent given the present
  • Each time step only depends on the previous
  • This is called the (first order) Markov property
• Note that the chain is just a (growable) BN
  • We can always use generic BN reasoning on it if we truncate the chain at a fixed length

Example Markov Chain: Weather
• States: X = {rain, sun}
• Initial distribution: 1.0 sun
• CPT: $P(X_t \mid X_{t-1})$
• Two ways of representing the same CPT: a state-transition diagram (sun to sun 0.9, sun to rain 0.1, rain to sun 0.3, rain to rain 0.7) and a table:

| $X_{t-1}$ | $X_t$ | $P(X_t \mid X_{t-1})$ |
|-----------|-------|-----------------------|
| sun       | sun   | 0.9                   |
| sun       | rain  | 0.1                   |
| rain      | sun   | 0.3                   |
| rain      | rain  | 0.7                   |

• What is the probability distribution after one step?

Mini-Forward Algorithm
• Question: What's P(X) on some day t?
  [Diagram: chain $X_1 \to X_2 \to X_3 \to X_4$]
• Forward simulation:

$$P(x_t) = \sum_{x_{t-1}} P(x_{t-1}, x_t) = \sum_{x_{t-1}} P(x_t \mid x_{t-1})\, P(x_{t-1})$$

• Special case of variable elimination with ordering: $X_1, X_2, \dots, X_{t-1}$
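The mini-forward update can be sketched in a few lines of Python using the weather CPT (0.9 / 0.1 / 0.3 / 0.7) and the initial distribution of 1.0 sun. The names `TRANSITION`, `mini_forward_step`, and `mini_forward` are illustrative choices, not part of the slides.

```python
# Transition CPT from the weather example: TRANSITION[x_prev][x_t] = P(x_t | x_prev).
TRANSITION = {
    "sun": {"sun": 0.9, "rain": 0.1},
    "rain": {"sun": 0.3, "rain": 0.7},
}


def mini_forward_step(prior):
    """One update: P(x_t) = sum over x_prev of P(x_t | x_prev) * P(x_prev)."""
    return {
        x_t: sum(TRANSITION[x_prev][x_t] * p for x_prev, p in prior.items())
        for x_t in TRANSITION
    }


def mini_forward(initial, steps):
    """Push the initial distribution through `steps` transitions."""
    dist = dict(initial)
    for _ in range(steps):
        dist = mini_forward_step(dist)
    return dist


initial = {"sun": 1.0, "rain": 0.0}        # initial distribution: 1.0 sun
after_one = mini_forward(initial, 1)       # sun: 0.9*1.0 + 0.3*0.0, rain: 0.1*1.0 + 0.7*0.0
after_many = mini_forward(initial, 100)
```

After one step this gives 0.9 sun and 0.1 rain. With this particular CPT, running many more steps moves the distribution toward 0.75 sun and 0.25 rain.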

Pacman – Sonar (P4)

Hidden Markov Models
• Markov chains not so useful for most agents
  • Need observations to update your beliefs
• Hidden Markov models (HMMs)
  • Underlying Markov chain over states X
  • You observe outputs (effects) at each time step
  [Diagram: hidden chain $X_1 \to \dots \to X_5$, each $X_t$ emitting an observation $E_t$]

Example: Weather HMM
[Diagram: $\text{Rain}_{t-1} \to \text{Rain}_t \to \text{Rain}_{t+1}$ with transitions $P(X_t \mid X_{t-1})$; each $\text{Rain}_t$ emits $\text{Umbrella}_t$ with $P(E_t \mid X_t)$]
• An HMM is defined by:
  • Initial distribution: $P(X_1)$
  • Transitions: $P(X_t \mid X_{t-1})$
  • Emissions: $P(E_t \mid X_t)$

| $R_{t-1}$ | $R_t$ | $P(R_t \mid R_{t-1})$ |
|-----------|-------|-----------------------|
| +r        | +r    | 0.7                   |
| +r        | -r    | 0.3                   |
| -r        | +r    | 0.3                   |
| -r        | -r    | 0.7                   |

| $R_t$ | $U_t$ | $P(U_t \mid R_t)$ |
|-------|-------|-------------------|
| +r    | +u    | 0.9               |
| +r    | -u    | 0.1               |
| -r    | +u    | 0.2               |
| -r    | -u    | 0.8               |

Example: Ghostbusters HMM
• $P(X_1)$: uniform (1/9 in each of the 9 grid cells)
• $P(X \mid X')$: usually move clockwise, but sometimes move in a random direction or stay in place
  • $P(X \mid X' = \langle 1,2 \rangle)$ over the grid, rows top to bottom: [1/6, 1/6, 1/2], [0, 1/6, 0], [0, 0, 0]
• $P(R_{ij} \mid X)$: same sensor model as before; red means close, green means far away.
  [Diagram: hidden chain $X_1 \to \dots \to X_5$, each state emitting readings $R_{i,j}$]
[Demo: Ghostbusters – Circular Dynamics – HMM (L14D2)]

Real HMM Examples
• Speech recognition HMMs:
  • Observations are acoustic signals (continuous valued)
  • States are specific positions in specific words (so, tens of thousands)
• Machine translation HMMs:
  • Observations are words (tens of thousands)
  • States are translation options
• Robot tracking:
  • Observations are range readings (continuous)
  • States are positions on a map (continuous)

Conditional Independence
• HMMs have two important independence properties:
  • Markov hidden process: future depends on past via the present
  • Current observation independent of all else given current state
  [Diagram: hidden chain $X_1 \to \dots \to X_5$ with observations $E_1, \dots, E_5$]
• Quiz: does this mean that evidence variables are guaranteed to be independent?
  • [No, they tend to be correlated by the hidden state]
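The quiz answer can be checked numerically on the weather HMM. The sketch below encodes the transition and emission tables from the example and sums out the hidden states by brute force. The uniform initial distribution `INITIAL` is an assumption made for illustration (the slides do not give one for this example), and the function names are hypothetical.

```python
from itertools import product

# ASSUMPTION: uniform initial distribution; not specified in the slides.
INITIAL = {"+r": 0.5, "-r": 0.5}
# Tables from the weather HMM example.
TRANSITION = {"+r": {"+r": 0.7, "-r": 0.3}, "-r": {"+r": 0.3, "-r": 0.7}}
EMISSION = {"+r": {"+u": 0.9, "-u": 0.1}, "-r": {"+u": 0.2, "-u": 0.8}}


def joint(states, observations):
    """P(x_1..x_T, e_1..e_T) = P(x_1) P(e_1|x_1) * prod_t P(x_t|x_{t-1}) P(e_t|x_t)."""
    p = INITIAL[states[0]] * EMISSION[states[0]][observations[0]]
    for prev, cur, obs in zip(states, states[1:], observations[1:]):
        p *= TRANSITION[prev][cur] * EMISSION[cur][obs]
    return p


def evidence_probability(observations):
    """P(e_1..e_T), summing the joint over every hidden state sequence."""
    return sum(
        joint(states, observations)
        for states in product(INITIAL, repeat=len(observations))
    )


p_u1 = evidence_probability(("+u",))                               # P(U_1 = +u)
p_u2 = sum(evidence_probability((u1, "+u")) for u1 in ("+u", "-u"))  # P(U_2 = +u)
p_both = evidence_probability(("+u", "+u"))                        # P(U_1 = +u, U_2 = +u)
```

Under this assumed prior, `p_both` is about 0.3515 while `p_u1 * p_u2` is about 0.3025, so the two umbrella observations are not independent: seeing an umbrella on day 1 makes rain, and hence an umbrella, more likely on day 2.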

Filtering / Monitoring
• Filtering, or monitoring, is the task of tracking the distribution $B_t(X) = P_t(X_t \mid e_1, \dots, e_t)$ (the belief state) over time
• We start with $B_1(X)$ in an initial setting, usually uniform
• Given $B_t(X)$ and new evidence $e_{t+1}$, we compute $B_{t+1}(X)$
• Basic idea: use interleaved join, sum, and normalize steps that we have also seen in variable elimination:
  • Join: $P(X_{t+1}, X_t \mid e_1, \dots, e_t)$
  • Sum: $P(X_{t+1} \mid e_1, \dots, e_t)$
  • Join: $P(e_{t+1}, X_{t+1} \mid e_1, \dots, e_t)$
  • Normalize: $P(X_{t+1} \mid e_1, \dots, e_t, e_{t+1})$
• The Kalman filter was invented in the 60's and first implemented as a method of trajectory estimation for the Apollo program

Example: Robot Localization
(Example from Michael Pfeiffer)
• Sensor model: can read in which directions there is a wall, never more than 1 mistake
• Motion model: may not execute action with small prob.
• Lighter grey: was possible to get the reading, but less likely because it required 1 mistake
[Figure sequence: belief over the robot's position at t=0, t=1, t=2, t=3, t=4, and t=5, shaded by probability from 0 to 1]

Inference: Base Cases
[Diagrams: a single state $X_1$ with evidence $E_1$; a two-state chain $X_1 \to X_2$]
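The join, sum, join, normalize cycle can be sketched for a small discrete HMM as follows. The tables are the rain/umbrella numbers used in the weather HMM example; the uniform prior over the first state and the names `elapse_time`, `observe`, and `filter_step` are assumptions made for illustration.

```python
# Rain/umbrella tables: TRANSITION[x][x_next] = P(x_next | x), EMISSION[x][e] = P(e | x).
TRANSITION = {"+r": {"+r": 0.7, "-r": 0.3}, "-r": {"+r": 0.3, "-r": 0.7}}
EMISSION = {"+r": {"+u": 0.9, "-u": 0.1}, "-r": {"+u": 0.2, "-u": 0.8}}


def elapse_time(belief):
    """Join with the transition model, then sum out the previous state."""
    return {
        x_next: sum(TRANSITION[x][x_next] * b for x, b in belief.items())
        for x_next in TRANSITION
    }


def observe(predicted, evidence):
    """Join with the emission model, then normalize over the state."""
    weighted = {x: EMISSION[x][evidence] * p for x, p in predicted.items()}
    total = sum(weighted.values())  # equals P(e_{t+1} | e_{1:t})
    return {x: w / total for x, w in weighted.items()}


def filter_step(belief, evidence):
    """One full filtering update: B_t -> B_{t+1} given new evidence."""
    return observe(elapse_time(belief), evidence)


prior = {"+r": 0.5, "-r": 0.5}   # ASSUMPTION: uniform prior over the first state
b1 = observe(prior, "+u")        # belief after the first umbrella observation
b2 = filter_step(b1, "+u")       # belief after a second umbrella observation
```

With these numbers the belief in rain rises from 0.5 to about 0.818 after the first umbrella and to about 0.883 after the second.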

Filtering (join + sum): Elapse Time
• Assume we have current belief P(X | evidence to date)
  [Diagram: $X_1 \to X_2$]
• Then, after one time step passes:

$$P(X_{t+1} \mid e_{1:t}) = \sum_{x_t} P(X_{t+1}, x_t \mid e_{1:t}) = \sum_{x_t} P(X_{t+1} \mid x_t, e_{1:t})\, P(x_t \mid e_{1:t}) = \sum_{x_t} P(X_{t+1} \mid x_t)\, P(x_t \mid e_{1:t})$$

• Or compactly:

$$B'(X_{t+1}) = \sum_{x_t} P(X_{t+1} \mid x_t)\, B(x_t)$$

• Basic idea: beliefs get "pushed" through the transitions
  • With the "B" notation, we have to be careful about what time step t the belief is about, and what evidence it includes

Example: Passage of Time
• As time passes, uncertainty "accumulates"
  (Transition model: ghosts usually go clockwise)
  [Figure: belief grids at T = 1, T = 2, T = 5]

Filtering (join + normalize): Observation
• Assume we have current belief P(X | previous evidence):

$$B'(X_{t+1}) = P(X_{t+1} \mid e_{1:t})$$

  [Diagram: $X_1$ with evidence $E_1$]
• Then, after evidence comes in:

$$P(X_{t+1} \mid e_{1:t+1}) = \frac{P(X_{t+1}, e_{t+1} \mid e_{1:t})}{P(e_{t+1} \mid e_{1:t})} \propto_{X_{t+1}} P(X_{t+1}, e_{t+1} \mid e_{1:t}) = P(e_{t+1} \mid e_{1:t}, X_{t+1})\, P(X_{t+1} \mid e_{1:t}) = P(e_{t+1} \mid X_{t+1})\, P(X_{t+1} \mid e_{1:t})$$

• Or, compactly:

$$B(X_{t+1}) \propto_{X_{t+1}} P(e_{t+1} \mid X_{t+1})\, B'(X_{t+1})$$

• Basic idea: beliefs "reweighted" by likelihood of evidence
• First compute product (join), then normalize
• Unlike passage of time, we have to renormalize

Example: Observation
• As we get observations, beliefs get reweighted, uncertainty "decreases"
  [Figure: belief grids before observation and after observation]

Pacman – Sonar (P4)

Pacman – Sonar (with beliefs)
[Demo: Pacman – Sonar – No Beliefs(L14D1)]

Particle Filtering
• Filtering: approximate solution
• Sometimes |X| is too big to use exact inference
  • |X| may be too big to even store B(X)
  • E.g. X is continuous
• Solution: approximate inference
  • Track samples of X, not all values
  • Assign weights w to all samples (as in likelihood weighting)
  • Weighted samples are called particles
  • In memory: list of particles, not states
• This is how robot localization works in practice
[Figure: example belief over a 3×3 grid, rows top to bottom: [0.0, 0.1, 0.0], [0.0, 0.0, 0.2], [0.0, 0.2, 0.5]]
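A minimal particle-filter sketch makes the "list of particles, not states" idea concrete. It reuses the rain/umbrella tables from the weather HMM example. Two things here are assumptions rather than content of this section: the uniform initial particles, and the choice to resample after weighting (a common variant) instead of carrying explicit weights forward. All function names are illustrative.

```python
import random
from collections import Counter

# Rain/umbrella tables from the weather HMM example.
TRANSITION = {"+r": {"+r": 0.7, "-r": 0.3}, "-r": {"+r": 0.3, "-r": 0.7}}
EMISSION = {"+r": {"+u": 0.9, "-u": 0.1}, "-r": {"+u": 0.2, "-u": 0.8}}


def sample_from(dist, rng):
    """Draw one value from a {value: probability} dictionary."""
    values = list(dist)
    return rng.choices(values, weights=[dist[v] for v in values], k=1)[0]


def elapse_time(particles, rng):
    """Move each particle by sampling its successor from the transition model."""
    return [sample_from(TRANSITION[x], rng) for x in particles]


def observe(particles, evidence, rng):
    """Weight each particle by P(evidence | state), then resample by weight."""
    weights = [EMISSION[x][evidence] for x in particles]
    return rng.choices(particles, weights=weights, k=len(particles))


def belief(particles):
    """Approximate B(X) as the fraction of particles in each state."""
    counts = Counter(particles)
    return {x: counts[x] / len(particles) for x in TRANSITION}


rng = random.Random(0)                      # fixed seed for repeatability
particles = [rng.choice(["+r", "-r"]) for _ in range(10000)]  # ASSUMPTION: uniform start
particles = observe(particles, "+u", rng)   # first umbrella observation
particles = elapse_time(particles, rng)     # one time step passes
particles = observe(particles, "+u", rng)   # second umbrella observation
estimate = belief(particles)
```

With enough particles the estimate for rain should land close to the exact filtering value of roughly 0.883 for two umbrella observations under a uniform prior. The memory cost depends on the number of particles, not on the size of the state space.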
