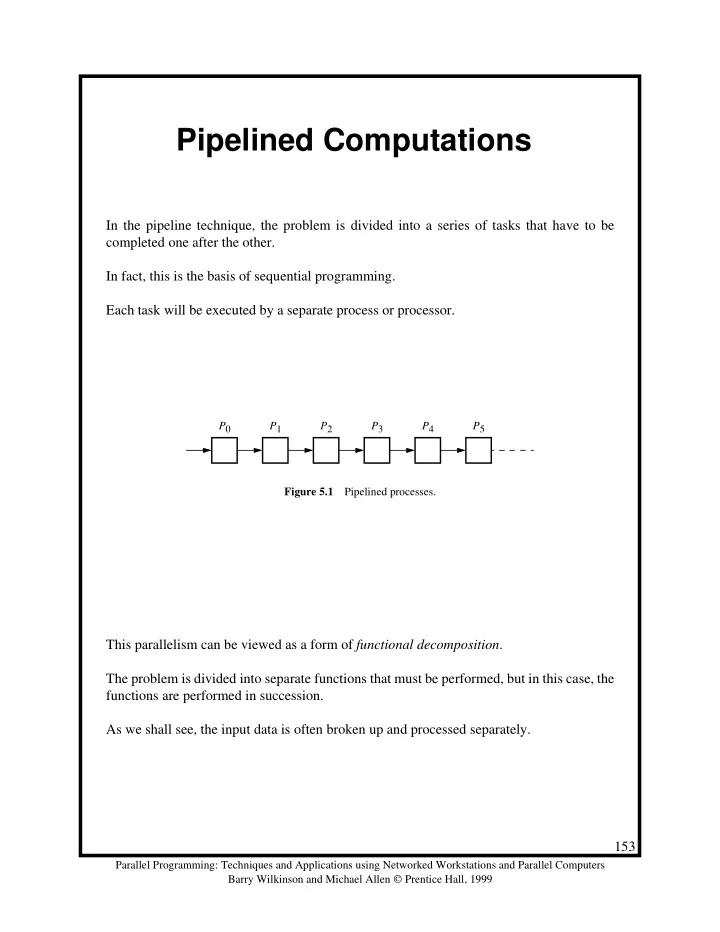

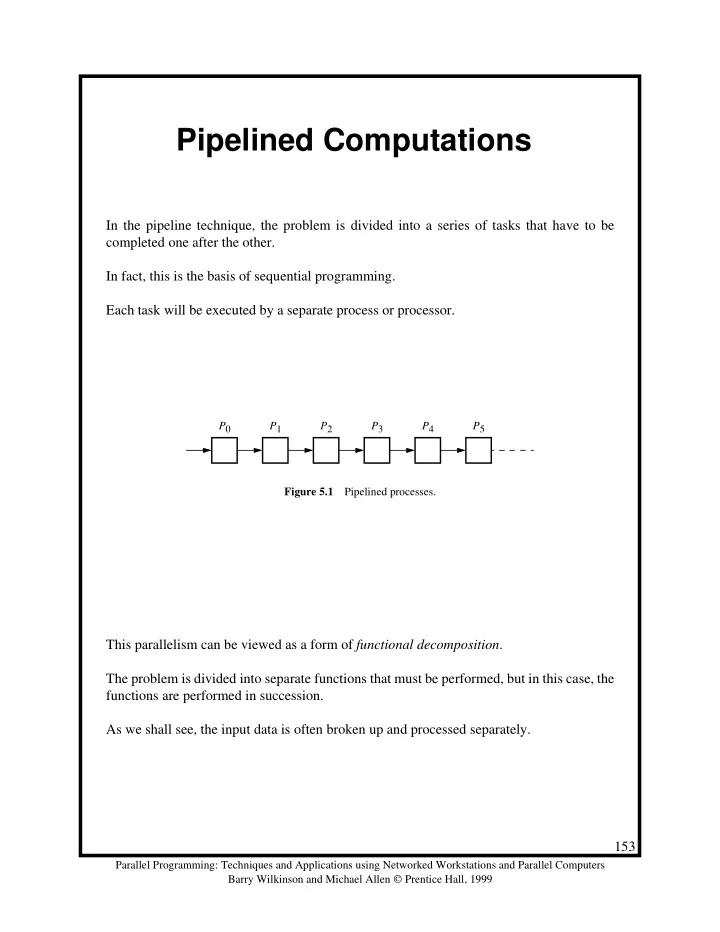

Pipelined Computations

In the pipeline technique, the problem is divided into a series of tasks that have to be completed one after the other. In fact, this is the basis of sequential programming. Each task is executed by a separate process or processor.

Figure 5.1: Pipelined processes ($P_0$, $P_1$, $P_2$, $P_3$, $P_4$, $P_5$ connected in a line).

This parallelism can be viewed as a form of functional decomposition. The problem is divided into separate functions that must be performed, but in this case the functions are performed in succession. As we shall see, the input data is often broken up and processed separately.

Parallel Programming: Techniques and Applications using Networked Workstations and Parallel Computers. Barry Wilkinson and Michael Allen. Prentice Hall, 1999.

Example

Add all the elements of array `a` to an accumulating sum:

```c
for (i = 0; i < n; i++)
    sum = sum + a[i];
```

The loop could be "unfolded" to yield:

```c
sum = sum + a[0];
sum = sum + a[1];
sum = sum + a[2];
sum = sum + a[3];
sum = sum + a[4];
/* ... and so on up to a[n-1] */
```

One pipeline solution:

Figure 5.2: Pipeline for an unfolded loop. Each stage receives $s_{in}$, adds one element (`a[0]`, `a[1]`, ..., `a[4]`), and passes $s_{out}$ to the next stage; the output of the last stage is `sum`.

Stage $i$ performs `s_out = s_in + a[i];`
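As a minimal sequential sketch of the stage computation $s_{out} = s_{in} + a[i]$ (not a parallel implementation), the C code below models each stage as a function call and feeds $s_{out}$ of stage $i$ into $s_{in}$ of stage $i+1$. The names `stage` and `unfolded_sum` are hypothetical; in a real pipeline each call would run in its own process.

```c
#include <assert.h>

/* Stage i of the pipeline: s_out = s_in + a[i]. */
static int stage(int s_in, int a_i) {
    int s_out = s_in + a_i;
    return s_out;
}

/* Chains n stages in order. Passing each stage's s_out to the next stage's
   s_in reproduces the unfolded loop sum = sum + a[0]; sum = sum + a[1]; ... */
int unfolded_sum(const int *a, int n) {
    assert(n >= 0);
    int s = 0;                    /* the sum entering the first stage */
    for (int i = 0; i < n; i++)
        s = stage(s, a[i]);       /* output of stage i feeds stage i + 1 */
    return s;
}
```

For example, `unfolded_sum` on the array `{3, 1, 4, 1, 5}` returns 14, the same value the original loop produces.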

Example

A frequency filter: the objective here is to remove specific frequencies (say the frequencies $f_0$, $f_1$, $f_2$, $f_3$, etc.) from a (digitized) signal, $f(t)$. The signal could enter the pipeline from the left:

Figure 5.3: Pipeline for a frequency filter. The signal $f(t)$ enters the stage for $f_0$; each stage takes $f_{in}$ and passes on $f_{out}$, so the successive outputs are the signal without frequency $f_0$, without $f_1$, without $f_2$, and without $f_3$. The last stage ($f_4$) outputs the filtered signal.
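The slides do not say how each stage removes its frequency. As one possible choice (an assumption, not the book's method), the sketch below gives each stage a second-order FIR notch filter, $y[k] = x[k] - 2\cos(\omega)\,x[k-1] + x[k-2]$, which cancels a sinusoid of angular frequency $\omega$ (radians per sample) once two samples have passed. The type `NotchStage` and all function names are hypothetical, and the caller passes $\cos\omega$ directly so the code needs no math library. Samples stream through the stages one at a time, each stage keeping its own state.

```c
#include <assert.h>

/* One stage of the frequency-filter pipeline: a second-order FIR notch
   y[k] = x[k] - 2cos(w) x[k-1] + x[k-2], which cancels a sinusoid of
   angular frequency w (radians per sample) after two samples. */
typedef struct {
    double two_cos_w;   /* 2cos(w) for the frequency this stage removes */
    double x1, x2;      /* previous inputs x[k-1] and x[k-2] */
} NotchStage;

/* cos_w is cos(w), supplied by the caller (e.g. computed with cos() from <math.h>). */
void notch_init(NotchStage *st, double cos_w) {
    assert(cos_w >= -1.0 && cos_w <= 1.0);
    st->two_cos_w = 2.0 * cos_w;
    st->x1 = 0.0;
    st->x2 = 0.0;
}

/* Processes one sample: f_in -> f_out for this stage. */
double notch_step(NotchStage *st, double x) {
    double y = x - st->two_cos_w * st->x1 + st->x2;
    st->x2 = st->x1;
    st->x1 = x;
    return y;
}

/* Passes one sample through every stage in order. In a real pipeline each
   stage would run on its own processor, working on a different sample. */
double filter_pipeline_step(NotchStage *stages, int num_stages, double sample) {
    for (int i = 0; i < num_stages; i++)
        sample = notch_step(&stages[i], sample);
    return sample;
}
```

For instance, with $\cos\omega = 0$ ($\omega = \pi/2$) in the first stage and $\cos\omega = 0.5$ ($\omega = \pi/3$) in the second, the signal $\cos(\pi k/2) + \cos(\pi k/3)$ comes out as exactly zero from the fifth sample on. This simple notch also scales the frequencies it does not remove; a practical filter would use a sharper design.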

Given that the problem can be divided into a series of sequential tasks, the pipelined approach can provide increased speed in the following three types of computation:

1. If more than one instance of the complete problem is to be executed.
2. If a series of data items must be processed, each requiring multiple operations.
3. If information to start the next process can be passed forward before the process has completed all its internal operations.

“Type 1” Pipeline Space-Time Diagram

Figure 5.4: Space-time diagram of a pipeline. Time runs horizontally and processes $P_0$ to $P_5$ vertically. Each process works on instances 1, 2, 3, ... in successive cycles, starting one cycle after its predecessor, so $P_5$ starts $p - 1$ cycles after $P_0$; $m$ instances are processed in all.
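The timing in the Type 1 space-time diagram can be expressed directly. In this sketch (hypothetical helper names; instances and cycles are numbered from 0 rather than from 1 as in the figure), instance $k$ enters $P_0$ in cycle $k$ and reaches $P_s$ in cycle $k + s$, so stage $s$ works on instance $t - s$ in cycle $t$, and $m$ instances need $m + p - 1$ cycles on $p$ stages.

```c
#include <assert.h>

/* Cycles needed for m instances on p stages: p - 1 cycles to fill the
   pipeline, then m cycles in which one instance completes per cycle. */
int type1_cycles(int m, int p) {
    assert(m > 0 && p > 0);
    return m + p - 1;
}

/* Instance (from 0) that stage s (from 0) works on in cycle t (from 0),
   or -1 if the stage is idle because the pipeline is filling or draining. */
int type1_instance_at(int s, int t, int m) {
    assert(s >= 0 && t >= 0 && m > 0);
    int k = t - s;   /* instance k reaches stage s exactly s cycles after entering P0 */
    return (k >= 0 && k < m) ? k : -1;
}
```

With $m = 5$ instances on six processes, `type1_cycles(5, 6)` is 10, and `type1_instance_at(5, t, 5)` is -1 for $t = 0, \ldots, 4$ while $P_5$ waits for the first instance.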

Figure 5.5: Alternative space-time diagram. Each instance (0 to 4) is drawn as a row passing through $P_0$ to $P_5$ in turn, with each instance starting one cycle after the previous one.

“Type 2” Pipeline Space-Time Diagram

Figure 5.6: Pipeline processing 10 data elements. (a) Pipeline structure: the input sequence $d_0, d_1, \ldots, d_9$ enters $P_0$ ($d_0$ first) and flows through $P_0$ to $P_9$. (b) Timing diagram: each process $P_i$ handles $d_0$ to $d_9$ in successive cycles, starting $i$ cycles after $P_0$, so the whole sequence takes $p - 1 + n$ cycles.
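To see where the $p - 1 + n$ cycles of a Type 2 pipeline come from, the sketch below simulates one cycle by cycle. Each stage has an output latch; in every cycle each item moves forward one stage and a new item enters $P_0$ while input remains. The per-stage operation (add 1) is a placeholder assumption, and the function name and the `MAX_STAGES` limit are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_STAGES 32

/* Placeholder per-stage operation (an assumption for illustration). */
static int stage_op(int x) {
    return x + 1;
}

/* Simulates a Type 2 pipeline of p stages fed with the n items d[0..n-1],
   one new item per cycle. Results are written to out[0..n-1] in order.
   Returns the number of cycles until the last item leaves the last stage. */
int run_type2_pipeline(const int *d, int n, int p, int *out) {
    assert(n > 0 && p > 0 && p <= MAX_STAGES);
    int latch[MAX_STAGES];             /* value held at the output of each stage */
    bool full[MAX_STAGES] = { false }; /* whether that latch holds a valid item */
    int cycle = 0, produced = 0;

    while (produced < n) {
        /* Update from the last stage backwards so that every stage reads what
           its predecessor held at the end of the previous cycle. */
        for (int s = p - 1; s > 0; s--) {
            full[s] = full[s - 1];
            if (full[s])
                latch[s] = stage_op(latch[s - 1]);
        }
        full[0] = cycle < n;           /* a new item enters P0 while input remains */
        if (full[0])
            latch[0] = stage_op(d[cycle]);
        cycle++;
        if (full[p - 1])               /* an item leaves the last stage */
            out[produced++] = latch[p - 1];
    }
    return cycle;
}
```

With $n = 10$ items and $p = 10$ stages, as in Figure 5.6, it reports 19 cycles, and every item leaves with 10 added (one increment per stage).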

“Type 3” Pipeline Space-Time Diagram

Figure 5.7: Pipeline processing where information passes to the next stage before the end of the process. (a) Processes with the same execution time; (b) processes without the same execution time. In both, each of $P_0$ to $P_5$ passes information to the next stage partway through its execution, and that information transfer is sufficient to start the next process.

If a pipeline has more stages than there are processors, a group of stages can be assigned to each processor:

Figure 5.8: Partitioning processes onto processors. The twelve processes $P_0$ to $P_{11}$ are divided among Processor 0, Processor 1, and Processor 2.
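One common way to form the groups (an assumption; the slide only shows the grouping) is a block partition that gives each processor a contiguous run of stages, so that most stage-to-stage messages stay inside one processor and only the links between groups cross processors. The function name is hypothetical.

```c
#include <assert.h>

/* Block partitioning of pipeline stages onto processors: each processor gets a
   contiguous group of stages. If num_procs does not divide num_stages, the first
   (num_stages % num_procs) processors each take one extra stage. */
int processor_of_stage(int stage, int num_stages, int num_procs) {
    assert(num_procs > 0 && stage >= 0 && stage < num_stages);
    int base = num_stages / num_procs;   /* stages per processor, rounded down */
    int extra = num_stages % num_procs;  /* processors that get base + 1 stages */
    int cut = extra * (base + 1);        /* stages owned by those larger groups */
    if (stage < cut)
        return stage / (base + 1);
    return extra + (stage - cut) / base; /* here base > 0, since stage >= cut */
}
```

For twelve processes on three processors, this puts $P_0$ to $P_3$ on Processor 0, $P_4$ to $P_7$ on Processor 1, and $P_8$ to $P_{11}$ on Processor 2; with ten processes the groups become four, three, and three stages.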

Computing Platform for Pipelined Applications

Figure 5.9: Multiprocessor system with a line configuration, connected to a host computer.

Pipeline Program Examples

Adding Numbers

Figure 5.10: Pipelined addition. Processes $P_0$ to $P_4$ form a line; each adds its own number to the partial sum it receives and passes the result on, so $P_k$ outputs the sum of the first $k + 1$ numbers.

The basic code for process $P_i$:

```
recv(&accumulation, P[i-1]);
accumulation = accumulation + number;
send(&accumulation, P[i+1]);
```

except for the first process, $P_0$, which is

```
send(&number, P[1]);
```

and the last process, $P_{n-1}$, which is

```
recv(&accumulation, P[n-2]);
accumulation = accumulation + number;
```

SPMD program, in which every process runs the same code using its own rank `i`:

```
accumulation = number;                  /* P0 starts the sum with its own number */
if (i > 0) {
    recv(&accumulation, P[i-1]);        /* partial sum of the numbers held by P0..P(i-1) */
    accumulation = accumulation + number;
}
if (i < n-1)
    send(&accumulation, P[i+1]);
```

The final result is in the last process. Instead of addition, other arithmetic operations could be done.
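Below is a minimal runnable sketch of the SPMD addition program, assuming POSIX threads stand in for the processes and POSIX pipes for the links between them. `send_int` and `recv_int` are hypothetical helpers playing the roles of `send()` and `recv()`, and, unlike the SPMD program, the last process sends its result on to the caller (as a master process would) instead of keeping it. Compile with `-pthread`.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>

#define MAX_PROCS 64

/* Hypothetical stand-ins for send()/recv(): one int over a POSIX pipe. */
static void send_int(int fd, int value) {
    if (write(fd, &value, sizeof value) != (ssize_t)sizeof value) {
        perror("write");
        exit(EXIT_FAILURE);
    }
}

static int recv_int(int fd) {
    int value;
    if (read(fd, &value, sizeof value) != (ssize_t)sizeof value) {
        perror("read");
        exit(EXIT_FAILURE);
    }
    return value;
}

typedef struct {
    int rank;    /* i: position of this process in the pipeline */
    int number;  /* the number held by P_i */
    int in_fd;   /* read end of the pipe from P_{i-1}; unused by P_0 */
    int out_fd;  /* write end of the pipe to P_{i+1} (to the caller for the last one) */
} Proc;

/* SPMD body: every P_i runs the same code, branching on its rank. */
static void *proc_main(void *arg) {
    const Proc *p = arg;
    int accumulation = p->number;
    if (p->rank > 0)
        accumulation += recv_int(p->in_fd);   /* partial sum of P_0..P_{i-1} */
    send_int(p->out_fd, accumulation);
    return NULL;
}

/* Runs n threads as a linear pipeline and returns the sum of numbers[0..n-1]. */
int pipelined_sum(const int *numbers, int n) {
    int fds[MAX_PROCS][2];
    Proc procs[MAX_PROCS];
    pthread_t threads[MAX_PROCS];

    if (n < 1 || n > MAX_PROCS) {
        fprintf(stderr, "pipelined_sum: n must be in 1..%d\n", MAX_PROCS);
        exit(EXIT_FAILURE);
    }
    for (int k = 0; k < n; k++) {
        if (pipe(fds[k]) != 0) {
            perror("pipe");
            exit(EXIT_FAILURE);
        }
    }
    for (int i = 0; i < n; i++) {
        procs[i] = (Proc){ .rank = i, .number = numbers[i],
                           .in_fd = i > 0 ? fds[i - 1][0] : -1,
                           .out_fd = fds[i][1] };
        if (pthread_create(&threads[i], NULL, proc_main, &procs[i]) != 0) {
            fprintf(stderr, "pthread_create failed\n");
            exit(EXIT_FAILURE);
        }
    }
    int sum = recv_int(fds[n - 1][0]);   /* the result comes out of the last process */
    for (int i = 0; i < n; i++)
        pthread_join(threads[i], NULL);
    for (int k = 0; k < n; k++) {
        close(fds[k][0]);
        close(fds[k][1]);
    }
    return sum;
}
```

Each pipe carries a single `int`, well under the size for which POSIX guarantees atomic pipe writes, so a receiving stage simply blocks in `read` until its predecessor has sent its partial sum.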

Figure 5.11: Pipelined addition of numbers with a master process and ring configuration. The master process and the slaves $P_0, P_1, \ldots, P_{n-1}$ form a ring: the master feeds the numbers $d_0, d_1, \ldots, d_{n-1}$ into the pipeline and receives the sum from $P_{n-1}$.

Figure 5.12: Pipelined addition of numbers with direct access to slave processes. The master process sends the numbers $d_0, d_1, \ldots, d_{n-1}$ directly to the slaves $P_0, P_1, \ldots, P_{n-1}$, and the sum returns to the master.

Analysis

Our first pipeline example is Type 1. We assume that each process performs similar actions in each pipeline cycle, and work out the computation and communication required in one pipeline cycle. The total execution time is

$$t_{total} = (\text{time for one pipeline cycle})(\text{number of cycles})$$

$$t_{total} = (t_{comp} + t_{comm})(m + p - 1)$$

where there are $m$ instances of the problem and $p$ pipeline stages (processes). The average time for a computation is given by

$$t_a = \frac{t_{total}}{m}$$

Single Instance of Problem

With one number per stage ($p = n$), each stage performs one addition, taken as one time unit, plus one receive and one send:

$$t_{comp} = 1, \qquad t_{comm} = 2(t_{startup} + t_{data})$$

$$t_{total} = (2(t_{startup} + t_{data}) + 1)\,n$$

The time complexity is $O(n)$.

Multiple Instances of Problem

$$t_{total} = (2(t_{startup} + t_{data}) + 1)(m + n - 1)$$

$$t_a = \frac{t_{total}}{m} \approx 2(t_{startup} + t_{data}) + 1 \quad \text{for large } m$$

That is, one pipeline cycle.

Data Partitioning with Multiple Instances of Problem

Each stage now adds $d$ numbers, so there are $n/d$ stages:

$$t_{comp} = d, \qquad t_{comm} = 2(t_{startup} + t_{data})$$

$$t_{total} = (2(t_{startup} + t_{data}) + d)(m + n/d - 1)$$

As we increase $d$, the data partition, the impact of the communication diminishes. But increasing the data partition decreases the parallelism and often increases the execution time.
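The execution-time formulas of the analysis can be evaluated with a few lines of C. The sketch below (hypothetical function names; time is measured in units of one addition, as in the analysis) computes $t_{total}$ for the data-partitioned case, with $d = 1$ giving the unpartitioned one. It assumes $d$ divides $n$ evenly.

```c
#include <assert.h>

/* t_total = (t_comp + t_comm)(m + p - 1): m instances on p stages. */
double pipeline_total_time(double t_comp, double t_comm, int m, int p) {
    assert(m > 0 && p > 0);
    return (t_comp + t_comm) * (m + p - 1);
}

/* One receive and one send per stage: t_comm = 2(t_startup + t_data). */
double addition_comm_time(double t_startup, double t_data) {
    return 2.0 * (t_startup + t_data);
}

/* Pipelined addition of n numbers with d numbers per stage (so n/d stages
   and t_comp = d), for m instances. d = 1 is the unpartitioned case. */
double partitioned_addition_time(double t_startup, double t_data,
                                 int n, int d, int m) {
    assert(d > 0 && n % d == 0);   /* assume d divides n evenly */
    return pipeline_total_time((double)d, addition_comm_time(t_startup, t_data),
                               m, n / d);
}

/* Average time per instance, t_a = t_total / m. */
double average_time(double t_total, int m) {
    assert(m > 0);
    return t_total / m;
}
```

With made-up costs $t_{startup} = 10$ and $t_{data} = 1$, $n = 8$ and $m = 100$, it gives 2461 time units for $d = 1$, 2472 for $d = 2$, and 3000 for $d = 8$, so in this case a larger partition makes the total time longer even though communication is a smaller share of each cycle.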
