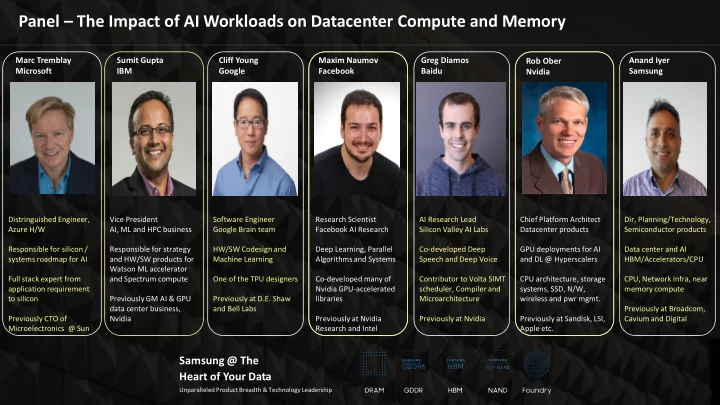

Panel – The Impact of AI Workloads on Datacenter Compute and Memory

| Panelist | Company | Role | Focus | Previously |
|---|---|---|---|---|
| Marc Tremblay | Microsoft | Distinguished Engineer, Azure H/W | Responsible for silicon / systems roadmap for AI. Full stack expert from application requirement to silicon | CTO of Microelectronics @ Sun |
| Sumit Gupta | IBM | Vice President, AI, ML and HPC business | Responsible for strategy and HW/SW products for Watson ML accelerator and Spectrum compute | GM AI & GPU data center business, Nvidia |
| Cliff Young | Google | Software Engineer, Google Brain team | HW/SW Codesign and Machine Learning. One of the TPU designers | D.E. Shaw and Bell Labs |
| Maxim Naumov | Facebook | Research Scientist, Facebook AI Research | Deep Learning, Parallel Algorithms and Systems. Co-developed many of Nvidia GPU-accelerated libraries | Nvidia Research and Intel |
| Greg Diamos | Baidu | AI Research Lead, Silicon Valley AI Labs | Co-developed Deep Speech and Deep Voice. Contributor to Volta SIMT scheduler, Compiler and Microarchitecture | Nvidia |
| Rob Ober | Nvidia | Chief Platform Architect, Datacenter products | GPU deployments for AI and DL @ Hyperscalers. CPU architecture, storage systems, SSD, N/W, wireless and pwr mgmt. | Sandisk, LSI, Apple etc. |
| Anand Iyer | Samsung | Dir, Planning/Technology, Semiconductor products | Data center and AI HBM/Accelerators/CPU. CPU, Network infra, near memory compute | Broadcom, Cavium and Digital |

Samsung @ The Heart of Your Data: Unparalleled Product Breadth & Technology Leadership

Virtuous cycle of big data vs compute growth chasm

[Figure: Data vs. Compute]

• 350M photos
• 2 Trillion searches / year
• 4PB / day

Data is being created faster than our ability to make sense of it.

Flu Outbreak: Google knows before CDC

ML/DL Systems – Market Demand and Key Application Domains

DL inference in data centers [1]: NMT, Vision, Recommenders

[Figure: model diagrams. Recommenders: dense features and sparse features (embedding lookup) feed NNs and interactions that output the probability of a click. Vision: image/video input passes through convolutions and NNs (example label: cat). NMT: hidden state and attention translate "\start I like cats \end" into "мне нравятся коты" with output probabilities p1, p2, p3.]

[1] “Deep Learning Inference in Data Centers: Characterization, Performance Optimizations and Hardware Implications”, ArXiv, 2018

https://code.fb.com/ai-research/scaling-neural-machine-translation-to-bigger-data-sets-with-faster-training-and-inference

https://code.fb.com/ml-applications/expanding-automatic-machine-translation-to-more-languages

Resource Requirements

| Category | Model Types | Model Size (W) | Max. Activations | Op. Intensity (W) | Op. Intensity (X and W) |
|---|---|---|---|---|---|
| RecSys | FCs | 1-10M | > 10K | 20-200 | 20-200 |
| RecSys | Embeddings | >10 Billion | > 10K | 1-2 | 1-2 |
| CV | ResNeXt101-32x4-48 | 43-829M | 2-29M | Avg. 380 / Min. 100 | Avg. 188 / Min. 28 |
| CV | Faster-RCNN-ShuffleNet | 6M | 13M | Avg. 3.5K / Min. 2.5K | Avg. 145 / Min. 4 |
| CV | ResNeXt3D-101 | 21M | 58M | Avg. 22K / Min. 2K | Avg. 172 / Min. 6 |
| NLP | seq2seq | 100M-1B | >100K | 2-20 | 2-20 |
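As a rough illustration of the two operational-intensity columns, the sketch below counts operations per element read for a single fully connected layer. It assumes an activation matrix X of shape M×K, a weight matrix W of shape K×N, and 2·M·K·N operations per layer; the function name, the operation count, and the example sizes are illustrative assumptions, not values taken from the slide.

```python
def fc_op_intensity(m: int, k: int, n: int) -> tuple[float, float]:
    """Return (ops per weight element, ops per activation+weight element)."""
    ops = 2 * m * k * n       # one multiply and one add per (m, k, n) triple
    weight_elems = k * n      # elements of W
    act_elems = m * k         # elements of X (input activations only, an assumption)
    return ops / weight_elems, ops / (act_elems + weight_elems)


# Hypothetical 1024x1024 layer at three batch sizes.
for batch in (1, 10, 100):
    w_int, xw_int = fc_op_intensity(batch, 1024, 1024)
    print(f"batch={batch:>3}  ops/W={w_int:.0f}  ops/(X+W)={xw_int:.1f}")
```

Under these assumptions the intensity relative to W is simply 2M, so small inference batches keep it low. That would be consistent with the 20-200 range in the FC row if batch sizes were around 10 to 100, although the slide does not state the batch sizes behind the table.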

AI Application Roofline Performance

For the foreseeable future, off-chip memory bandwidth will often be the constraining resource in system performance.

System balance: Memory <-> Compute <-> Communication

Memory Access for:
• Network / program config and control flow
• Training data mini-batch compute flow

Compute consumes:
• Mini-batch data

Communication for:
• All reduce
• Embedding table insertion

Research + Development
• Time to train and accuracy
• Multiple runs for exploration, sometimes overnight

Production
• Optimal work/$
• Optimal work/watt
• Time to train
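The point about off-chip memory bandwidth being the constraining resource can be sketched with the standard roofline model, in which attainable performance is the smaller of peak compute and memory bandwidth multiplied by operational intensity. The accelerator numbers below (100 TFLOP/s peak, 1 TB/s bandwidth) and the function name are hypothetical, chosen only to make the arithmetic easy to follow.

```python
def attainable_flops(peak_flops: float, mem_bw_bytes: float, intensity: float) -> float:
    """Roofline: performance is capped by compute or by memory traffic."""
    return min(peak_flops, mem_bw_bytes * intensity)


# Hypothetical accelerator: 100 TFLOP/s peak, 1 TB/s off-chip bandwidth.
PEAK = 100e12
BW = 1e12
ridge = PEAK / BW  # intensity (FLOP/byte) at which the two limits meet

for oi in (1, 10, 100, 1000):
    perf = attainable_flops(PEAK, BW, oi)
    bound = "memory" if oi < ridge else "compute"
    print(f"OI={oi:>4} FLOP/byte -> {perf / 1e12:6.1f} TFLOP/s ({bound}-bound)")
```

With these assumed numbers, any workload below 100 FLOP/byte cannot reach peak compute, no matter how fast the matrix engines are.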

Hardware Trends

• High memory bandwidth and capacity for embeddings
• Support for powerful matrix and vector engines
• Large on-chip memory for inference with small batches
• Support for half-precision floating-point computation

[Figure: Time spent in caffe2 operators in data centers [1]]

[Figure: Common activation and weight matrix shapes (X: M×K, W^T: K×N) [1]]

[1] “Deep Learning Inference in Data Centers: Characterization, Performance Optimizations and Hardware Implications”, ArXiv, 2018

Sample Workload Characterization

[Figure: Embedding table hit rates and access histograms [2]]

[2] “Bandana: Using Non-Volatile Memory for Storing Deep Learning Models”, SysML, 2019
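To make "hit rate" and "access histogram" concrete, here is a minimal sketch that replays a trace of embedding row IDs through a small LRU cache. The LRU policy, the function name, and the toy trace are assumptions for illustration; the slide does not say which caching policy or traces were measured.

```python
from collections import Counter, OrderedDict


def lru_hit_rate(accesses, capacity):
    """Fraction of accesses served from an LRU cache holding `capacity` rows."""
    cache = OrderedDict()
    hits = 0
    for row in accesses:
        if row in cache:
            hits += 1
            cache.move_to_end(row)         # mark as most recently used
        else:
            cache[row] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used row
    return hits / len(accesses) if accesses else 0.0


# Hypothetical skewed trace: row 0 is hot, rows 1..4 are touched once each.
trace = [0, 1, 0, 2, 0, 3, 0, 4]
print(Counter(trace).most_common(2))   # access histogram, hottest rows first
print(lru_hit_rate(trace, capacity=2))
```

The more skewed the access histogram, the smaller the fast tier needed to reach a given hit rate.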

MLPerf

The Move to The Edge

CPU, Memory, Storage

By 2022, 7 out of every 10 bytes of data created will never see a data center.

Let Data Speak for Itself!

Considerations
• Compute closer to Data
• Smarter Data Movement
• Faster Time to Insight

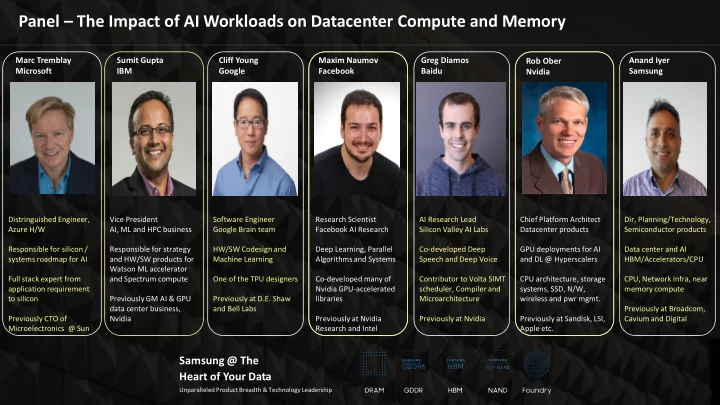


Cloud AI, Edge computing AI, On-device AI

HBM, GDDR6, LPDDR5

• 1.5x bandwidth
• 6x faster training time
• 2x faster data access
• 8x training cost effectiveness
• 2x hot data feeding
• privacy & fast response

Visit us at Booth #726
