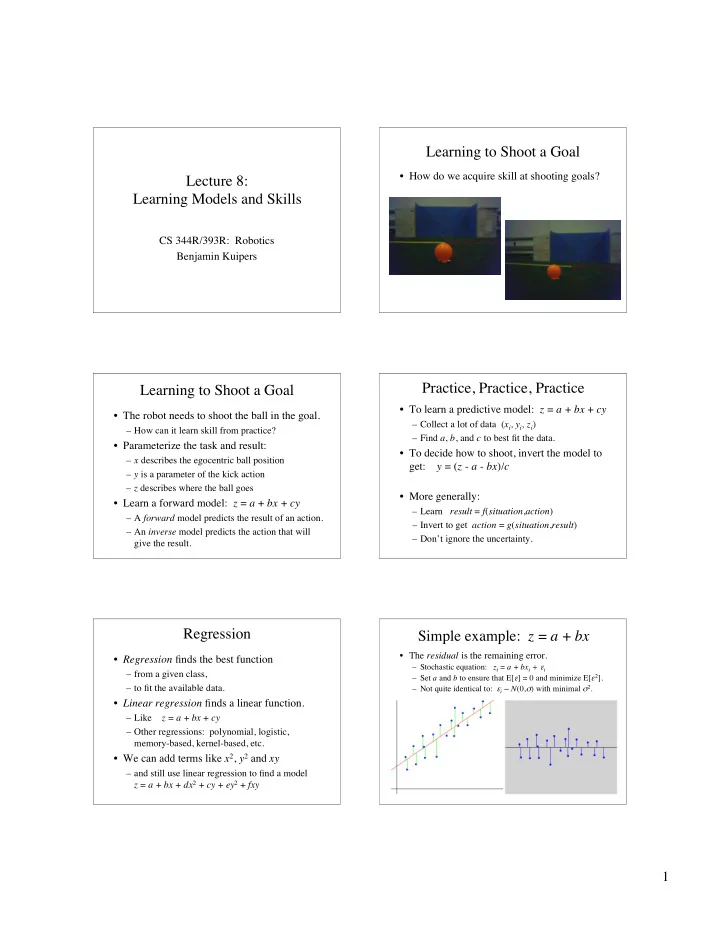

Lecture 8: Learning Models and Skills
CS 344R/393R: Robotics
Benjamin Kuipers

Learning to Shoot a Goal
• How do we acquire skill at shooting goals?

Learning to Shoot a Goal
• The robot needs to shoot the ball in the goal.
  – How can it learn skill from practice?
• Parameterize the task and result:
  – $x$ describes the egocentric ball position
  – $y$ is a parameter of the kick action
  – $z$ describes where the ball goes
• Learn a forward model: $z = a + bx + cy$
  – A forward model predicts the result of an action.
  – An inverse model predicts the action that will give the result.

Practice, Practice, Practice
• To learn a predictive model: $z = a + bx + cy$
  – Collect a lot of data $(x_i, y_i, z_i)$
  – Find $a$, $b$, and $c$ to best fit the data.
• To decide how to shoot, invert the model to get: $y = (z - a - bx)/c$
• More generally:
  – Learn result = $f$(situation, action)
  – Invert to get action = $g$(situation, result)
  – Don’t ignore the uncertainty.

Regression
• Regression finds the best function
  – from a given class,
  – to fit the available data.
• Linear regression finds a linear function.
  – Like $z = a + bx + cy$
  – Other regressions: polynomial, logistic, memory-based, kernel-based, etc.
• We can add terms like $x^2$, $y^2$ and $xy$
  – and still use linear regression to find a model $z = a + bx + dx^2 + cy + ey^2 + fxy$

Simple example: $z = a + bx$
• The residual is the remaining error.
  – Stochastic equation: $z_i = a + bx_i + \varepsilon_i$
  – Set $a$ and $b$ to ensure that $E[\varepsilon] = 0$ and minimize $E[\varepsilon^2]$.
  – Not quite identical to: $\varepsilon_i \sim N(0, \sigma)$ with minimal $\sigma^2$.
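The forward model and its inverse can be sketched in a few lines of Python. This is only an illustration: the function names `forward` and `inverse`, the `eps` guard, and the coefficient values are assumptions made for the example, not part of the lecture.

```python
def forward(x, y, a, b, c):
    """Forward model: predict where the ball goes (z) for ball position x and kick parameter y."""
    return a + b * x + c * y


def inverse(x, z, a, b, c, eps=1e-9):
    """Inverse model: kick parameter y expected to send the ball to z from position x."""
    if abs(c) < eps:
        # If c is ~0, the kick parameter has no effect on z, so there is nothing to invert.
        raise ValueError("c is approximately zero; the model cannot be inverted for y")
    return (z - a - b * x) / c


# Hypothetical coefficients, standing in for values learned by regression.
a, b, c = 0.1, 0.5, 2.0
y = inverse(x=0.3, z=1.0, a=a, b=b, c=c)
print(y, forward(0.3, y, a, b, c))
```

The guard on `c` reflects the one place where inversion breaks down: if the data show that $y$ barely influences $z$, the computed $y$ becomes very large and unreliable.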

First, the simple case …
• Suppose we have $n$ data points $(x_i, z_i)$
  – and we want to learn a model $z = a + bx + \varepsilon$
• We need to find $a$ and $b$ to minimize the squared error:

$$E = \sum_{i=1}^{n} (z_i - a - bx_i)^2$$

• Shift the mean to the origin: $(x_i - \bar{x},\ z_i - \bar{z})$
• First we find $b$ such that $(z_i - \bar{z}) = b(x_i - \bar{x})$
  – Once we have $b$, we will get $a = \bar{z} - b\bar{x}$

After shifting the data to the origin
• Given the data set $(x_i - \bar{x},\ z_i - \bar{z})$
  – look for the best fit $b$ for $(z - \bar{z}) = b(x - \bar{x})$
  – that minimizes

$$E = \sum_{i=1}^{n} \left(z_i - \bar{z} - b(x_i - \bar{x})\right)^2$$

• Look for a local minimum of $E$:

$$\frac{dE}{db} = \sum_{i=1}^{n} -2\left(z_i - \bar{z} - b(x_i - \bar{x})\right)(x_i - \bar{x}) = -2 \sum_{i=1}^{n} \left((z_i - \bar{z})(x_i - \bar{x}) - b(x_i - \bar{x})^2\right) = 0$$

$$b = \frac{\sum (z_i - \bar{z})(x_i - \bar{x})}{\sum (x_i - \bar{x})^2}$$

• It’s a minimum because

$$\frac{d^2E}{db^2} = +2 \sum (x_i - \bar{x})^2 > 0$$

Summary
• Given $n$ data points $(x_i, z_i)$
  – The best fitting line $z = a + bx$ is given by

$$b = \frac{\sum (z_i - \bar{z})(x_i - \bar{x})}{\sum (x_i - \bar{x})^2}, \qquad a = \bar{z} - b\bar{x}$$

Up to more dimensions …
• Suppose our dataset is $(x_i, y_i, z_i)$
  – (For simplicity, assume data centered at origin)
  – We want to fit the plane $z = bx + cy$
• The error term is

$$E = \sum_{i=1}^{n} (z_i - bx_i - cy_i)^2$$

• Find a minimum

$$\frac{\partial E}{\partial b} = \sum -2x_i(z_i - bx_i - cy_i) = 0$$

$$\frac{\partial E}{\partial c} = \sum -2y_i(z_i - bx_i - cy_i) = 0$$

• Solve for $b$ and $c$

$$b \sum x_i^2 + c \sum x_i y_i = \sum x_i z_i$$

$$b \sum x_i y_i + c \sum y_i^2 = \sum y_i z_i$$

Caution!
• It’s easy to listen to this, and even read it carefully, and think it all makes sense.
  – But you still don’t understand it!
• Your dataset $(x_i, y_i, z_i)$ will not be centered around the origin.
  – You need to fit the plane $z = a + bx + cy$
• Work through the math for this, by hand.

Cleaning the data
• Given the data $(x_i, y_i, z_i)$
  – find the best-fitting plane $z = a + bx + cy$
• Compute the residuals: $r_i = z_i - a - bx_i - cy_i$
  – The mean of the $r_i$ should be zero.
  – Compute the standard deviation $\sigma_r$
• A data point is an outlier if $|r_i| > 3\sigma_r$
  – Discard the outliers
• Recompute the regression, using only inliers.
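The fit-and-clean procedure can be sketched as follows. This is one possible reading, not the lecture's own code: it handles uncentered data by subtracting the means first, solves the 2×2 normal equations for $b$ and $c$ by Cramer's rule, and recovers $a = \bar{z} - b\bar{x} - c\bar{y}$. The names `fit_plane` and `clean_and_refit`, the single cleaning pass, and the use of the population standard deviation are all assumptions.

```python
import math


def fit_plane(points):
    """Least-squares fit of z = a + b*x + c*y to a list of (x, y, z) triples."""
    n = len(points)
    mx = sum(x for x, _, _ in points) / n
    my = sum(y for _, y, _ in points) / n
    mz = sum(z for _, _, z in points) / n
    # Sums over the mean-centered data: the entries of the 2x2 normal equations.
    sxx = sum((x - mx) ** 2 for x, _, _ in points)
    syy = sum((y - my) ** 2 for _, y, _ in points)
    sxy = sum((x - mx) * (y - my) for x, y, _ in points)
    sxz = sum((x - mx) * (z - mz) for x, _, z in points)
    syz = sum((y - my) * (z - mz) for _, y, z in points)
    det = sxx * syy - sxy ** 2
    if abs(det) < 1e-12:
        raise ValueError("x and y do not span a plane; the fit is not determined")
    b = (sxz * syy - sxy * syz) / det
    c = (sxx * syz - sxy * sxz) / det
    a = mz - b * mx - c * my  # un-center to recover the intercept
    return a, b, c


def clean_and_refit(points, k=3.0):
    """Fit once, drop points with |r_i| > k * sigma_r, then refit on the inliers."""
    a, b, c = fit_plane(points)
    residuals = [z - a - b * x - c * y for x, y, z in points]
    # The residual mean is zero for a least-squares fit with an intercept.
    sigma_r = math.sqrt(sum(r * r for r in residuals) / len(residuals))
    inliers = [p for p, r in zip(points, residuals) if abs(r) <= k * sigma_r]
    return fit_plane(inliers), inliers


# Synthetic example: an exact plane on a 6x5 grid, with one corrupted point.
data = [(x, y, 1.0 + 2.0 * x - 0.5 * y) for x in range(6) for y in range(5)]
data[12] = (2, 2, data[12][2] + 50.0)
print(clean_and_refit(data)[0])
```

With only a handful of points, no residual can exceed $3\sigma_r$ (a z-score cannot exceed $\sqrt{n-1}$ when $\sigma_r$ is the population standard deviation), so the test only becomes meaningful once a reasonable amount of data has been collected.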

Discarding Outliers
• Why is it OK to discard outliers if $|r_i| > 3\sigma_r$?
  – Outliers are still data, aren’t they?
• A model explains data by saying that some causes are relevant, and others are negligible.
• If a data point has $p < .001$ according to the model, it is more likely explained as a modeling error, than as an unlikely outcome.
  – An unlikely outcome isn’t helpful in fitting model parameters, anyway.

Hough Transform
• Representing a line

$$x \cos\theta + y \sin\theta = r$$

$$(x, y) \cdot (\cos\theta, \sin\theta) = r$$

• For fixed $(r, \theta)$, represent all points $(x, y)$ on a given line.
• For fixed $(x, y)$, represent all lines $(r, \theta)$ through $(x, y)$.

Hough Space: $(r, \theta)$ representations
• Each observed point $(x, y)$ votes for all lines $(r, \theta)$ passing through it.

Votes From Three Points
• Each point contributes a curve of votes.

Lines Get the Most Votes
• Votes from three very strong lines.
• Identify local max in Hough Space to define a line in Image Space.
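The voting scheme can be sketched with a dictionary as the accumulator. This is a minimal illustration under stated assumptions: the names `hough_votes` and `best_line`, the 1-degree step in $\theta$, and the cell width `r_step` are choices made for the example, and it returns only the single strongest cell rather than all local maxima.

```python
import math
from collections import Counter


def hough_votes(points, n_theta=180, r_step=1.0):
    """Accumulate votes in (r, theta) space for a list of (x, y) points."""
    votes = Counter()
    for x, y in points:
        # Each point votes once per theta: the curve r = x cos(theta) + y sin(theta).
        for k in range(n_theta):
            theta = math.pi * k / n_theta
            r = x * math.cos(theta) + y * math.sin(theta)
            votes[(round(r / r_step), k)] += 1
    return votes


def best_line(points, n_theta=180, r_step=1.0):
    """Return (r, theta, votes) for the accumulator cell with the most votes."""
    votes = hough_votes(points, n_theta, r_step)
    (r_bin, k), count = votes.most_common(1)[0]
    return r_bin * r_step, math.pi * k / n_theta, count


# Ten points on the horizontal line y = 5, i.e. r = 5 and theta = pi/2.
r, theta, count = best_line([(x, 5.0) for x in range(0, 100, 10)])
print(r, theta, count)
```

The cell size matters: larger `r_step` or fewer `n_theta` steps collect more votes per cell, at the cost of a coarser description of the line.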

Hough Transform Issues
• Hough Transform works with any parameterized model: circle, rectangle, etc.
  – But in a high-dimensional Hough Space, each cell gets few votes
• To maximize votes, use large cells.
  – But they give low resolution model descriptions.

RANSAC: Random Sample Consensus
• A method for robust model-fitting.
  – Separating inliers from outliers.

RANSAC to Find Line Models
• Repeat $k$ times:
  – Select 2 points from data, to define a model $M$.
  – Collect all points from data, within tolerance $t$ of the model $M$. These are the inliers.
    • If # inliers < $d$, give up on model $M$.
  – Find the model $M'$ that best fits the inliers.
    • In this case, by linear regression.
  – Record the error of the inliers from model $M'$.
• Return the model $M'$ with the lowest error.

RANSAC Pros and Cons
• Very robust search for models.
  – The model classifies data as inliers and outliers
• Can estimate probability of failure as a function of $k$. But no upper bound.
• Can find multiple models by deleting data explained by current best model.
  – But this can fail if current best model is bad.

Back to Learning a Skill!
Ball position $x$. Goal position $z$. Kick param $y$.

Learning to Shoot a Goal
• Remember:
  – $x$ describes the egocentric ball position
  – $y$ is a parameter of the kick action
  – $z$ describes where the ball goes
• Learn a forward model: $z = a + bx + cy$
  – Practice to collect the data $(x_i, y_i, z_i)$
  – Do regression to find $a$, $b$, and $c$
• To decide how to shoot, invert the model to get: $y = (z - a - bx)/c$
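The RANSAC loop for line models can be sketched directly from the steps listed. Several details here are assumptions rather than anything stated in the lecture: lines are written as $z = a + bx$, the tolerance $t$ is measured as vertical distance, samples with equal $x$ are skipped, the recorded error is the mean squared residual, and the names `fit_line` and `ransac_line` and the default parameter values are hypothetical.

```python
import random


def fit_line(points):
    """Least-squares line z = a + b*x through a list of (x, z) pairs."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    mz = sum(z for _, z in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points)
    b = sum((x - mx) * (z - mz) for x, z in points) / sxx
    return mz - b * mx, b


def ransac_line(data, k=100, t=0.5, d=8, seed=0):
    """Return (error, (a, b), inliers) for the best model found, or None."""
    rng = random.Random(seed)
    best = None
    for _ in range(k):
        # Two sampled points define the candidate model M.
        (x1, z1), (x2, z2) = rng.sample(data, 2)
        if x1 == x2:
            continue  # a vertical line cannot be written as z = a + b*x
        b = (z2 - z1) / (x2 - x1)
        a = z1 - b * x1
        inliers = [(x, z) for x, z in data if abs(z - a - b * x) <= t]
        if len(inliers) < d:
            continue  # too little support: give up on M
        # Refit M' on the inliers by linear regression and record its error.
        a2, b2 = fit_line(inliers)
        err = sum((z - a2 - b2 * x) ** 2 for x, z in inliers) / len(inliers)
        if best is None or err < best[0]:
            best = (err, (a2, b2), inliers)
    return best


# Twenty points on z = 2 + 0.5x plus five gross outliers.
line = [(x, 2.0 + 0.5 * x) for x in range(20)]
outliers = [(1, 20.0), (5, -15.0), (9, 30.0), (13, -20.0), (17, 25.0)]
result = ransac_line(line + outliers)
print(result[1] if result else "no model found")
```

Choosing by lowest error alone favors small, tight inlier sets, so the minimum support $d$ carries a lot of weight; some variants rank models by inlier count instead.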

Egocentric Ball Position: $x$
• I assume that you can position your robot so that the ball position can be described by a single parameter $x$.
  – You can use $(x_1, x_2)$, but more variables require more data.
• When you’re close enough to kick the ball, you’ll be too close to be sure where it is!
  – Pick a position farther away, for accurate $x$.

Kick Action parameter: $y$
• The built-in kicks have no parameters.
• Your kick action includes a step or two to approach the ball, then a built-in kick.
  – Embed the parameter $y$ in the approach.
• Try various parameterizations:
  – Sideways component of the walk
  – Turning while walking forward
  – etc.
• Avoid gaps in the search space of $y$ values.

Where the ball goes: $z$
• Track the ball after the kick.
  – “Keep your eye on the ball!”
• After a suitable period of time (1 second?), record the direction the ball went.
  – Body-centered egocentric frame of reference

Planning the shot
• You have a forward model: $z = a + bx + cy$
• Invert the model: $y = (z - a - bx)/c$
  – $z$ is where you want the ball to go
  – $x$ is where you see the ball right now
  – $a$, $b$, $c$ have been learned
  – Compute $y$: how to control the kick action
• Q: Would it help to have a Bayesian model of the distribution $p(z \mid x, y)$?

Next
• Kalman filters: tracking dynamic systems.
• Extended Kalman filters: handling nonlinearity by local linearization.
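One way to explore the question about $p(z \mid x, y)$ is to treat the regression residuals as Gaussian, so that $z$ given $(x, y)$ is normal with mean $a + bx + cy$ and standard deviation $\sigma_r$. That Gaussian assumption, the function names, and the numbers below are illustrative assumptions only; the lecture poses the question without answering it.

```python
import math


def normal_cdf(u):
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + math.erf(u / math.sqrt(2.0)))


def prob_in_goal(x, y, a, b, c, sigma_r, z_lo, z_hi):
    """P(z_lo <= z <= z_hi | x, y), assuming z ~ N(a + b*x + c*y, sigma_r^2)."""
    mean = a + b * x + c * y
    return normal_cdf((z_hi - mean) / sigma_r) - normal_cdf((z_lo - mean) / sigma_r)


# Hypothetical learned model and goal: aim at the goal center z = 0.
a, b, c, sigma_r = 0.1, 0.5, 2.0, 0.2
x = 0.3
y = (0.0 - a - b * x) / c  # inverted model
print(prob_in_goal(x, y, a, b, c, sigma_r, z_lo=-0.2, z_hi=0.2))
```

Under this assumption the inverted model still picks the same $y$ for a target at the center of the goal, but the probability tells the robot how much to trust the shot, for example whether to reposition for a more accurate $x$ first.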
