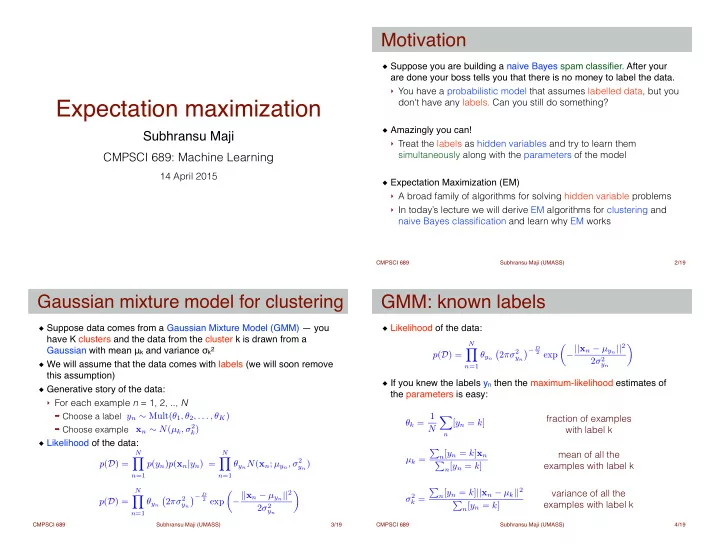

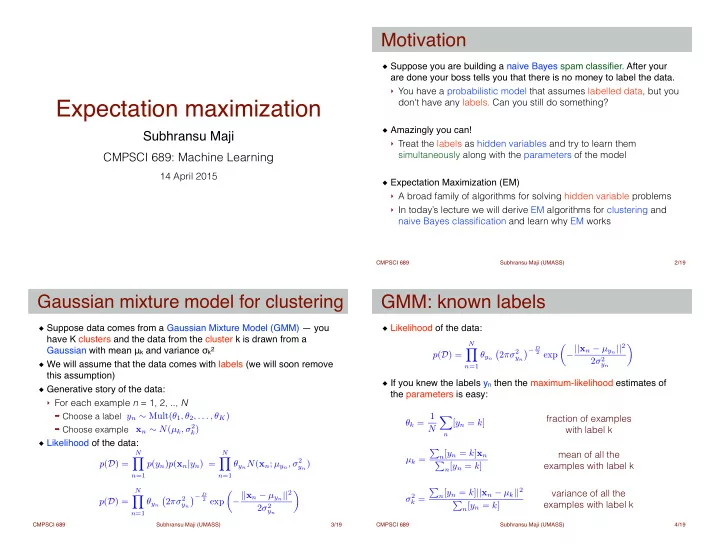

Expectation maximization

Subhransu Maji (UMASS)

CMPSCI 689: Machine Learning

14 April 2015

Motivation

Suppose you are building a naive Bayes spam classifier. After you are done, your boss tells you that there is no money to label the data.

- You have a probabilistic model that assumes labelled data, but you don't have any labels. Can you still do something?

Amazingly, you can!

- Treat the labels as hidden variables and try to learn them simultaneously along with the parameters of the model.

Expectation Maximization (EM)

- A broad family of algorithms for solving hidden-variable problems.
- In today's lecture we will derive EM algorithms for clustering and naive Bayes classification and learn why EM works.

Gaussian mixture model for clustering

Suppose the data come from a Gaussian Mixture Model (GMM): there are $K$ clusters, and the data from cluster $k$ are drawn from a Gaussian with mean $\mu_k$ and variance $\sigma_k^2$. Each $x_n \in \mathbb{R}^D$, and each cluster is an isotropic Gaussian (covariance $\sigma_k^2 I$). We will assume that the data come with labels (we will soon remove this assumption).

Generative story of the data:

- For each example $n = 1, 2, \ldots, N$:
  - Choose a label $y_n \sim \mathrm{Mult}(\theta_1, \theta_2, \ldots, \theta_K)$
  - Choose an example $x_n \sim \mathcal{N}(\mu_{y_n}, \sigma_{y_n}^2)$

Likelihood of the data:

$$
p(\mathcal{D}) = \prod_{n=1}^{N} p(y_n)\, p(x_n \mid y_n) = \prod_{n=1}^{N} \theta_{y_n}\, \mathcal{N}(x_n; \mu_{y_n}, \sigma_{y_n}^2) = \prod_{n=1}^{N} \theta_{y_n} \left(2\pi\sigma_{y_n}^2\right)^{-D/2} \exp\left(-\frac{\lVert x_n - \mu_{y_n} \rVert^2}{2\sigma_{y_n}^2}\right)
$$

GMM: known labels

If you knew the labels $y_n$, then the maximum-likelihood estimates of the parameters would be easy to compute:

$$
\theta_k = \frac{1}{N}\sum_n [y_n = k] \qquad \text{(fraction of examples with label } k\text{)}
$$

$$
\mu_k = \frac{\sum_n [y_n = k]\, x_n}{\sum_n [y_n = k]} \qquad \text{(mean of all the examples with label } k\text{)}
$$

$$
\sigma_k^2 = \frac{\sum_n [y_n = k]\, \lVert x_n - \mu_k \rVert^2}{D \sum_n [y_n = k]} \qquad \text{(per-dimension variance of all the examples with label } k\text{)}
$$

The factor $D$ in the variance comes from the isotropic model: $\sigma_k^2$ is shared by all $D$ coordinates, so the squared distances are averaged over the dimensions as well as over the examples. For $D = 1$ it disappears.
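The known-label estimates amount to counting and averaging within each cluster. The sketch below illustrates this in plain Python, assuming points are given as lists of $D$ floats and labels as integers $0, \ldots, K-1$; the function names `sample_gmm` and `mle_known_labels` are hypothetical, chosen here for illustration. `sample_gmm` follows the generative story, and `mle_known_labels` applies the three formulas, including the division by $D$ for the isotropic variance.

```python
import math
import random


def sample_gmm(theta, mu, var, N, seed=0):
    """Generative story: y_n ~ Mult(theta), then x_n ~ N(mu[y_n], var[y_n] * I)."""
    rng = random.Random(seed)
    X, y = [], []
    for _ in range(N):
        k = rng.choices(range(len(theta)), weights=theta)[0]        # choose a label
        X.append([rng.gauss(m, math.sqrt(var[k])) for m in mu[k]])  # choose an example
        y.append(k)
    return X, y


def mle_known_labels(X, y, K):
    """Maximum-likelihood (theta, mu, var) for an isotropic GMM with known labels.

    Assumes every cluster 0..K-1 has at least one example (otherwise n_k = 0).
    """
    N, D = len(X), len(X[0])
    theta, mu, var = [], [], []
    for k in range(K):
        members = [x for x, label in zip(X, y) if label == k]
        n_k = len(members)                      # sum_n [y_n = k]
        theta.append(n_k / N)                   # fraction of examples with label k
        mean = [sum(x[d] for x in members) / n_k for d in range(D)]
        mu.append(mean)                         # mean of the examples with label k
        sq = sum(sum((x[d] - mean[d]) ** 2 for d in range(D)) for x in members)
        var.append(sq / (D * n_k))              # per-dimension variance
    return theta, mu, var


# Usage: sample labelled data from a known 2-cluster GMM and re-estimate it.
X, y = sample_gmm([0.3, 0.7], [[0.0, 0.0], [5.0, 5.0]], [1.0, 0.25], N=5000)
theta, mu, var = mle_known_labels(X, y, K=2)
print(theta, mu, var)
```

With a few thousand samples the estimates should land close to the parameters used to generate the data.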

GMM: unknown labels

Now suppose you don't have the labels $y_n$. Analogous to k-means, one solution is to iterate: start by guessing the parameters and then repeat two steps:

- Estimate the labels given the parameters.
- Estimate the parameters given the labels.

In k-means we assigned each point to a single cluster, which is called a hard assignment (e.g., point 10 goes to cluster 2). In expectation maximization (EM) we will use a soft assignment (e.g., point 10 goes half to cluster 2 and half to cluster 5).

Let's define a random variable $z_n = [z_{n,1}, z_{n,2}, \ldots, z_{n,K}]$ to denote the assignment vector for the $n$-th point:

- Hard assignment: exactly one $z_{n,k}$ is 1 and the rest are 0.
- Soft assignment: each $z_{n,k}$ is non-negative and they sum to 1.

GMM: parameter estimation

Formally, $z_{n,k}$ is the probability that the $n$-th point goes to cluster $k$:

$$
z_{n,k} = p(y_n = k \mid x_n) = \frac{p(y_n = k, x_n)}{p(x_n)} \propto p(y_n = k)\, p(x_n \mid y_n = k) = \theta_k\, \mathcal{N}(x_n; \mu_k, \sigma_k^2)
$$

Given a set of parameters $(\theta_k, \mu_k, \sigma_k^2)$, $z_{n,k}$ is easy to compute: evaluate $\theta_k\, \mathcal{N}(x_n; \mu_k, \sigma_k^2)$ for every $k$ and normalize so that the values sum to 1 over $k$. Given $z_{n,k}$, we can update the parameters $(\theta_k, \mu_k, \sigma_k^2)$ as:

$$
\theta_k = \frac{1}{N}\sum_n z_{n,k} \qquad \text{(fraction of examples with label } k\text{)}
$$

$$
\mu_k = \frac{\sum_n z_{n,k}\, x_n}{\sum_n z_{n,k}} \qquad \text{(mean of all the fractional examples with label } k\text{)}
$$

$$
\sigma_k^2 = \frac{\sum_n z_{n,k}\, \lVert x_n - \mu_k \rVert^2}{D \sum_n z_{n,k}} \qquad \text{(per-dimension variance of all the fractional examples with label } k\text{)}
$$

GMM: example

We have replaced the indicator variable $[y_n = k]$ with $p(y_n = k \mid x_n)$, which is the expected value of $[y_n = k]$ under the current parameters. This is our guess of the labels.

Just like k-means, EM is susceptible to local optima (here, local maxima of the likelihood).

Clustering example: k-means vs. GMM (figure; source: http://nbviewer.ipython.org/github/NICTA/MLSS/tree/master/clustering/)

The EM framework

We have data with observations $x_n$ and hidden variables $y_n$, and would like to estimate parameters $\theta$. The likelihood of the data and hidden variables is:

$$
p(\mathcal{D}) = \prod_n p(x_n, y_n \mid \theta)
$$

Only the $x_n$ are known, so we compute the data likelihood by marginalizing out the $y_n$:

$$
p(X \mid \theta) = \prod_n \sum_{y_n} p(x_n, y_n \mid \theta)
$$

Parameter estimation by maximizing the log-likelihood:

$$
\theta_{ML} \leftarrow \arg\max_\theta \sum_n \log \sum_{y_n} p(x_n, y_n \mid \theta)
$$

This is hard to maximize since the sum is inside the log.
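A minimal sketch of the GMM iteration in plain Python, under the same assumptions as before (points as lists of floats; all function names are hypothetical). The E step computes $z_{n,k}$ in log space and normalizes with a log-sum-exp, which is also a way to evaluate the data log-likelihood $\sum_n \log \sum_k \theta_k \mathcal{N}(x_n; \mu_k, \sigma_k^2)$ without underflow. The M step reuses the known-label formulas with $[y_n = k]$ replaced by $z_{n,k}$. The initialization (user-supplied or randomly chosen data points as means, unit variances, uniform weights) and the small variance floor are choices made for this sketch, not part of the lecture, and there is no guard against a component whose soft count drops to zero.

```python
import math
import random


def log_gauss(x, mean, var):
    """log N(x; mean, var * I) for an isotropic Gaussian in D dimensions."""
    D = len(x)
    sq = sum((a - b) ** 2 for a, b in zip(x, mean))
    return -0.5 * D * math.log(2 * math.pi * var) - sq / (2 * var)


def logsumexp(vals):
    """Numerically stable log(sum(exp(v) for v in vals))."""
    m = max(vals)
    return m + math.log(sum(math.exp(v - m) for v in vals))


def e_step(X, theta, mu, var):
    """z[n][k] = p(y_n = k | x_n); also returns sum_n log sum_k theta_k N(x_n; mu_k, var_k I)."""
    Z, loglik = [], 0.0
    for x in X:
        scores = [math.log(t) + log_gauss(x, m, v) for t, m, v in zip(theta, mu, var)]
        norm = logsumexp(scores)                # log p(x_n)
        loglik += norm
        Z.append([math.exp(s - norm) for s in scores])
    return Z, loglik


def m_step(X, Z, var_floor=1e-6):
    """Known-label formulas with [y_n = k] replaced by the soft assignment z[n][k]."""
    N, D, K = len(X), len(X[0]), len(Z[0])
    theta, mu, var = [], [], []
    for k in range(K):
        r = [z[k] for z in Z]
        n_k = sum(r)                            # soft count of cluster k
        theta.append(n_k / N)
        mean = [sum(w * x[d] for w, x in zip(r, X)) / n_k for d in range(D)]
        mu.append(mean)
        sq = sum(w * sum((x[d] - mean[d]) ** 2 for d in range(D)) for w, x in zip(r, X))
        var.append(max(sq / (D * n_k), var_floor))  # floor keeps the variance away from 0
    return theta, mu, var


def em_gmm(X, K, init_means=None, iters=100, tol=1e-8, seed=0):
    """Alternate E and M steps; returns the parameters and the log-likelihood history."""
    rng = random.Random(seed)
    theta = [1.0 / K] * K
    mu = [list(m) for m in init_means] if init_means else [list(x) for x in rng.sample(X, K)]
    var = [1.0] * K
    history = []
    for _ in range(iters):
        Z, loglik = e_step(X, theta, mu, var)   # E step at the current parameters
        history.append(loglik)
        if len(history) > 1 and history[-1] - history[-2] < tol:
            break                               # negligible improvement: stop
        theta, mu, var = m_step(X, Z)           # M step
    return theta, mu, var, history
```

EM never decreases the data log-likelihood, so the recorded `history` should be non-decreasing up to floating-point error; a decrease usually points to a bug in one of the two steps.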

Jensen’s inequality

Given a concave function $f$ and weights $\lambda_i \ge 0$ with $\sum_i \lambda_i = 1$, Jensen’s inequality states that

$$
f\Big(\sum_i \lambda_i x_i\Big) \ge \sum_i \lambda_i f(x_i)
$$

This is a direct consequence of concavity:

- $f(ax + by) \ge a f(x) + b f(y)$ when $a \ge 0$, $b \ge 0$, $a + b = 1$

[Figure: a concave curve $f$ through $f(x)$ and $f(y)$; at the point $ax + by$ the curve value $f(ax+by)$ lies above the chord value $a f(x) + b f(y)$.]

The EM framework

Construct a lower bound on the log-likelihood using Jensen’s inequality, with the concave function $\log$ and weights $q(y_n)$, where $q$ is any probability distribution over $y_n$:

$$
\begin{aligned}
L(X \mid \theta) &= \sum_n \log \sum_{y_n} p(x_n, y_n \mid \theta) \\
&= \sum_n \log \sum_{y_n} q(y_n)\, \frac{p(x_n, y_n \mid \theta)}{q(y_n)} \\
&\ge \sum_n \sum_{y_n} q(y_n) \log \frac{p(x_n, y_n \mid \theta)}{q(y_n)} \\
&= \sum_n \sum_{y_n} \big[ q(y_n) \log p(x_n, y_n \mid \theta) - q(y_n) \log q(y_n) \big] \triangleq \hat{L}(X \mid \theta)
\end{aligned}
$$

Maximize the lower bound. The second term, $-\sum_{y_n} q(y_n) \log q(y_n)$, is independent of $\theta$, so:

$$
\theta \leftarrow \arg\max_\theta \sum_n \sum_{y_n} q(y_n) \log p(x_n, y_n \mid \theta)
$$

Lower bound illustrated

Maximizing the lower bound increases the value of the original function if the lower bound touches the function at the current value.

[Figure: the log-likelihood $L(X \mid \theta)$ and the lower bound $\hat{L}(X \mid \theta)$, which touch at $\theta_t$; maximizing the bound moves the estimate to $\theta_{t+1}$.]

In symbols: $L(X \mid \theta_{t+1}) \ge \hat{L}(X \mid \theta_{t+1}) \ge \hat{L}(X \mid \theta_t) = L(X \mid \theta_t)$.

An optimal lower bound

Any choice of the probability distribution $q(y_n)$ is valid as long as the lower bound touches the function at the current estimate of $\theta$:

$$
L(X \mid \theta_t) = \hat{L}(X \mid \theta_t)
$$

We can then pick the optimal $q(y_n)$ by maximizing the lower bound:

$$
\arg\max_q \sum_{y_n} \big[ q(y_n) \log p(x_n, y_n \mid \theta_t) - q(y_n) \log q(y_n) \big]
$$

This gives us

$$
q(y_n) \leftarrow p(y_n \mid x_n, \theta_t)
$$

- Proof: use Lagrange multipliers with the "sum to one" constraint.

This is the distribution of the hidden variables conditioned on the data and the current estimate of the parameters.

- This is exactly what we computed in the GMM example.
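To see the bound numerically, the following sketch evaluates $\log \sum_{y_n} p(x_n, y_n \mid \theta)$ and the per-example bound $\sum_{y_n} [q(y_n) \log p(x_n, y_n \mid \theta) - q(y_n) \log q(y_n)]$ for a single example, using made-up values of $p(x_n, y_n = k \mid \theta_t)$ for $K = 3$ (the numbers are purely illustrative and the helper names are hypothetical). Every $q$ should give a value no larger than the log-likelihood, and choosing $q$ equal to the posterior should make the bound tight.

```python
import math


def log_likelihood(joint):
    """log sum_y p(x_n, y | theta) for one example; joint[k] = p(x_n, y_n = k | theta)."""
    return math.log(sum(joint))


def lower_bound(joint, q):
    """Jensen bound: sum_y q(y) log p(x_n, y | theta) - sum_y q(y) log q(y), with 0 log 0 = 0."""
    return sum(qk * (math.log(pk) - math.log(qk)) for pk, qk in zip(joint, q) if qk > 0)


def posterior(joint):
    """q(y_n) = p(y_n | x_n, theta): normalize the joint over y_n."""
    total = sum(joint)
    return [p / total for p in joint]


joint = [0.02, 0.10, 0.03]   # illustrative values of p(x_n, y_n = k | theta_t), K = 3
L = log_likelihood(joint)
for q in ([1 / 3, 1 / 3, 1 / 3], [0.7, 0.2, 0.1], [1.0, 0.0, 0.0], posterior(joint)):
    print(f"bound = {lower_bound(joint, q):.6f}   log-likelihood = {L:.6f}")
```

The tightness at the posterior follows directly from the algebra: with $q(y_n) = p(x_n, y_n \mid \theta_t) / \sum_{y} p(x_n, y \mid \theta_t)$, every log-ratio inside the bound equals $\log \sum_{y} p(x_n, y \mid \theta_t)$.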

The EM algorithm

We have data with observations $x_n$ and hidden variables $y_n$, and would like to estimate parameters $\theta$ of the distribution $p(x \mid \theta)$.

EM algorithm:

- Initialize the parameters $\theta$ randomly.
- Iterate between the following two steps:
  - E step: compute the probability distribution over the hidden variables, $q(y_n) \leftarrow p(y_n \mid x_n, \theta)$
  - M step: maximize the lower bound, $\theta \leftarrow \arg\max_\theta \sum_n \sum_{y_n} q(y_n) \log p(x_n, y_n \mid \theta)$

The EM algorithm is a great candidate when the M step can be done easily but $p(x \mid \theta)$ cannot be easily optimized over $\theta$.

- E.g., for GMMs it was easy to compute means and variances given the memberships.

Naive Bayes: revisited

Consider the binary prediction problem. Let the data be distributed according to a probability distribution:

$$
p_\theta(y, x) = p_\theta(y, x_1, x_2, \ldots, x_D)
$$

We can simplify this using the chain rule of probability:

$$
\begin{aligned}
p_\theta(y, x) &= p_\theta(y)\, p_\theta(x_1 \mid y)\, p_\theta(x_2 \mid x_1, y) \cdots p_\theta(x_D \mid x_1, x_2, \ldots, x_{D-1}, y) \\
&= p_\theta(y) \prod_{d=1}^{D} p_\theta(x_d \mid x_1, x_2, \ldots, x_{d-1}, y)
\end{aligned}
$$

Naive Bayes assumption:

$$
p_\theta(x_d \mid x_{d'}, y) = p_\theta(x_d \mid y), \quad \forall\, d' \ne d
$$

E.g., the words "free" and "money" are independent given spam.

Naive Bayes: a simple case

Case: binary labels and binary features:

$$
\begin{aligned}
p_\theta(y) &= \mathrm{Bernoulli}(\theta_0) \\
p_\theta(x_d \mid y = +1) &= \mathrm{Bernoulli}(\theta_d^+) \\
p_\theta(x_d \mid y = -1) &= \mathrm{Bernoulli}(\theta_d^-)
\end{aligned}
$$

Here $p_\theta(y = +1) = \theta_0$, giving $1 + 2D$ parameters in total.

Probability of the data:

$$
\begin{aligned}
p_\theta(y, x) &= p_\theta(y) \prod_{d=1}^{D} p_\theta(x_d \mid y) \\
&= \theta_0^{[y=+1]} (1 - \theta_0)^{[y=-1]} \\
&\quad \times \prod_{d=1}^{D} (\theta_d^+)^{[x_d=1,\, y=+1]} (1 - \theta_d^+)^{[x_d=0,\, y=+1]} \qquad \text{(label } +1\text{)} \\
&\quad \times \prod_{d=1}^{D} (\theta_d^-)^{[x_d=1,\, y=-1]} (1 - \theta_d^-)^{[x_d=0,\, y=-1]} \qquad \text{(label } -1\text{)}
\end{aligned}
$$

Naive Bayes: parameter estimation

Given data, we can estimate the parameters by maximizing the data likelihood. The maximum-likelihood estimates are:

$$
\hat\theta_0 = \frac{\sum_n [y_n = +1]}{N} \qquad \text{(fraction of the data with label } +1\text{)}
$$

$$
\hat\theta_d^+ = \frac{\sum_n [x_{d,n} = 1,\, y_n = +1]}{\sum_n [y_n = +1]} \qquad \text{(fraction of the instances with } x_d = 1 \text{ among label } +1\text{)}
$$

$$
\hat\theta_d^- = \frac{\sum_n [x_{d,n} = 1,\, y_n = -1]}{\sum_n [y_n = -1]} \qquad \text{(fraction of the instances with } x_d = 1 \text{ among label } -1\text{)}
$$
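The estimates above are weighted counts. The sketch below assumes binary feature vectors given as lists of 0/1 and encodes the labels through weights $r_n$, where $r_n = [y_n = +1]$ for labelled data; the helper names and the tiny dataset are hypothetical. Letting $r_n$ be a soft value $q(y_n = +1)$ instead is one way an EM variant could plug E-step posteriors into the same formulas, but that extension is an assumption of this sketch, not something derived on these slides. No smoothing is applied, so any estimate equal to 0 or 1 makes the log-probabilities undefined for some inputs.

```python
import math


def nb_fit(X, r):
    """Bernoulli naive Bayes estimates (theta0, theta_pos, theta_neg).

    X: N binary feature vectors (lists of 0/1), each of length D.
    r: N weights; r_n = [y_n = +1] for labelled data (hypothetical soft use: q(y_n = +1)).
    """
    N, D = len(X), len(X[0])
    n_pos = sum(r)                               # sum_n [y_n = +1]
    n_neg = N - n_pos                            # sum_n [y_n = -1]
    theta0 = n_pos / N                           # fraction of the data with label +1
    theta_pos = [sum(rn * x[d] for rn, x in zip(r, X)) / n_pos for d in range(D)]
    theta_neg = [sum((1 - rn) * x[d] for rn, x in zip(r, X)) / n_neg for d in range(D)]
    return theta0, theta_pos, theta_neg


def nb_log_joint(x, y, theta0, theta_pos, theta_neg):
    """log p_theta(y, x) = log p(y) + sum_d log p(x_d | y), with y in {+1, -1}."""
    prior = theta0 if y == +1 else 1 - theta0
    theta = theta_pos if y == +1 else theta_neg
    lp = math.log(prior)
    for xd, t in zip(x, theta):
        lp += math.log(t if xd == 1 else 1 - t)
    return lp


def nb_posterior_pos(x, params):
    """p(y = +1 | x), computed stably from the two log joints."""
    a = nb_log_joint(x, +1, *params)
    b = nb_log_joint(x, -1, *params)
    m = max(a, b)
    return math.exp(a - m) / (math.exp(a - m) + math.exp(b - m))


# Hypothetical features: x_1 = contains "free", x_2 = contains "money"; y = +1 means spam.
X = [[1, 0], [1, 1], [0, 1], [1, 0], [0, 1], [0, 0], [1, 1], [0, 1]]
y = [+1, +1, +1, +1, -1, -1, -1, -1]
params = nb_fit(X, [1 if label == +1 else 0 for label in y])
print(params, nb_posterior_pos([1, 0], params))
```

The posterior `nb_posterior_pos` is the quantity the E step would need if the labels were hidden.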
