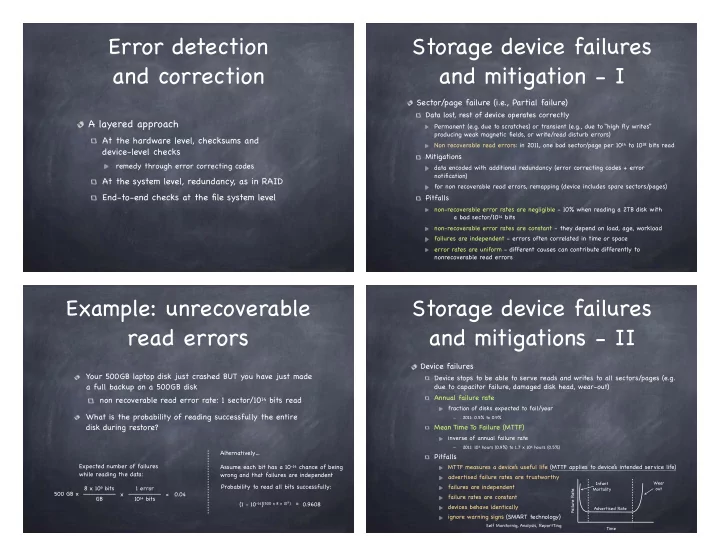

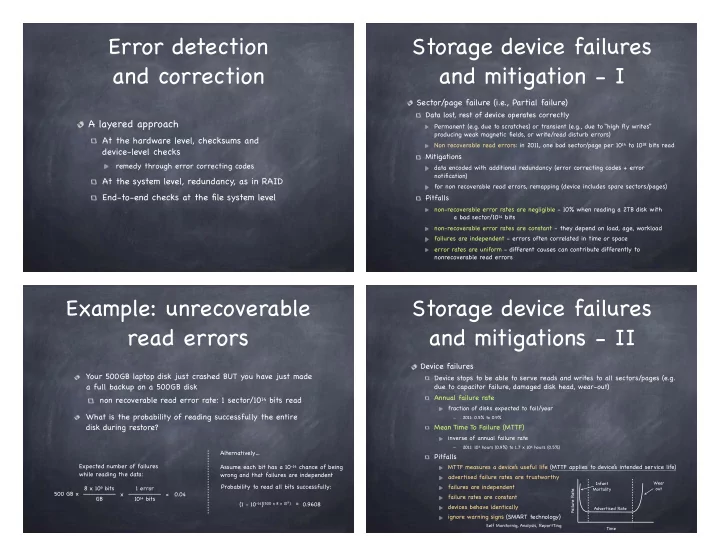

Error detection Storage device failures and correction and mitigation - I Sector/page failure (i.e., Partial failure) Data lost, rest of device operates correctly A layered approach Permanent (e.g. due to scratches) or transient (e.g., due to “high fly writes” producing weak magnetic fields, or write/read disturb errors) At the hardware level, checksums and Non recoverable read errors: in 2011, one bad sector/page per 10 14 to 10 18 bits read device-level checks Mitigations remedy through error correcting codes data encoded with additional redundancy (error correcting codes + error notification) At the system level, redundancy, as in RAID for non recoverable read errors, remapping (device includes spare sectors/pages) End-to-end checks at the file system level Pitfalls non-recoverable error rates are negligible - 10% when reading a 2TB disk with a bad sector/10 14 bits non-recoverable error rates are constant - they depend on load, age, workload failures are independent - errors often correlated in time or space error rates are uniform - different causes can contribute differently to nonrecoverable read errors Example: unrecoverable Storage device failures read errors and mitigations - II Device failures Your 500GB laptop disk just crashed BUT you have just made Device stops to be able to serve reads and writes to all sectors/pages (e.g. a full backup on a 500GB disk due to capacitor failure, damaged disk head, wear-out) Annual failure rate non recoverable read error rate: 1 sector/10 14 bits read fraction of disks expected to fail/year What is the probability of reading successfully the entire 2011: 0.5% to 0.9% disk during restore? Mean Time To Failure (MTTF) inverse of annual failure rate 2011: 10 6 hours (0.9%) to 1.7 x 10 6 hours (0.5%) Alternatively… Pitfalls Expected number of failures Assume each bit has a 10 -14 chance of being MTTF measures a device’ s useful life (MTTF applies to device’ s intended service life) while reading the data: wrong and that failures are independent advertised failure rates are trustworthy Infant Wear Probability to read all bits successfully: failures are independent 8 x 10 9 bits 1 error Mortality out Failure Rate 500 GB x x = 0.04 failure rates are constant GB 10 14 bits = 0.9608 (1 - 10 -14 ) (500 x 8 x 10 ) 9 devices behave identically Advertised Rate ignore warning signs (SMART technology) Self Monitornig, Analysis, ReportTing Time

Example: disk failures in RAID a large system Redundant Array of Inexpensive* Disks File server with 100 disks * In industry, “inexpensive” has been replaced by “independent” :-) Disks are cheap, so put many (10s to 100s) of them MTTF for each disk: 1.5 x 10 6 hours in one box to increase storage, performance, and What is the expected time before one disk fails? reliability data plus some redundant information striped across disks Assuming independent failures and constant failure rates: performance and reliability depend on how precisely it is MTTF for some disk = MTTF for single disk / 100 = 1.5 x 10 4 hours striped key feature: transparency Probability that some disk will fail in a year: to the host system it all looks like a single, large, highly 1 errors (365 x 24) hours x = 58.5% performant and highly reliable single disk 1.5 x 10 4 hours key issue: mapping Pitfalls: actual failure rate may be higher than advertised from logical block to location on one or more disks failure rate may not be constant RAID-1 RAID-0: mirrored disks High throughput, low reliability Disk striping (RAID-0) Data written in two places higher disk bandwidth through larger effective block size on failure, use surviving disk 4 blocks for the price of 1! On read, choose fastest to read 0 1 2 3 poor reliability OS 4 5 6 7 Expensive disk block 8 9 10 11 any disk failure causes data loss 12 13 14 15 Physical disk blocks 0 1 1 0 0 1 1 0 1 1 1 0 1 1 1 0 1 5 9 13 2 6 10 14 3 7 11 15 0 4 8 12 0 1 0 1 0 1 0 1

RAID-3 RAID-4 Bit striped, with parity Block striped, with parity given G disks, Combines RAID-0 and RAID-3 parity = data 0 ⊕ data 1 ⊕ ... ⊕ data G-1 reading a block accesses a single disk data 0 = parity ⊕ data 1 ⊕ ... ⊕ data G-1 writing always accesses parity disk Reads access all data disks Heavy load on parity disk Writes accesses all data disks plus parity disk Data disks Parity disk Data disks Parity disk Disk controller can identify faulty disk Disk controller can identify faulty disk single parity disk can detect and correct errors single parity disk can detect and correct errors RAID-5 RAID-5 Block Interleaved Distributed Parity Block Interleaved Distributed Parity no single disk dedicated to parity no single disk dedicated to parity Strip*: Sequence of sequential blocks that defines the unit of striping parity and data distributed across all disks parity and data distributed across all disks 4 blocks Strip* (0,0) Strip (1,0) Strip (2,0) Strip (3,0) Strip (4,0) Data Block 8 Data Block 12 Parity (0,0,0) Data Block 0 Data Block 4 Data Block 9 Data Block 13 Parity (1,0,0) Data Block 1 Data Block 5 Stripe 0 Data Block 10 Data Block 14 Parity (2,0,0) Data Block 2 Data Block 6 Data Block 11 Data Block 15 Parity 0-3 Data 0 Data 1 Data 2 Data 3 Parity (3,0.0) Data Block 3 Data Block 7 Strip (0,1) Strip (1,1) Strip (2,1) Strip (3,1) Strip (4,1) Data 4 Parity 4-7 Data 5 Data 6 Data 7 Data Block 16 Parity (0,1,1) Data Block 20 Data Block 24 Data Block 28 Data Block 17 Parity (1,1,1) Data Block 21 Data Block 25 Data Block 29 Stripe 1 Data 8 Data 9 Parity 8-11 Data 10 Data 11 Data Block 18 Parity (2,1,1) Data Block 22 Data Block 26 Data Block 30 Data Block 19 Parity (3.1,1) Data Block 23 Data Block 27 Data Block 31 Data 12 Data 13 Data 14 Parity 12-15 Data 15 Strip (0,2) Strip (1,2) Strip (2,2) Strip (3,2) Strip (4,2) Data Block 32 Data Block 36 Parity (0,2,2) Data Block 40 Data Block 44 Data 16 Data 17 Data 18 Data 19 Parity 16-19 Data Block 33 Data Block 37 Parity (1,2,3) Data Block 41 Data Block 45 Stripe 2 Data Block 34 Parity (2,2,2) Data Block 42 Data Block 38 Data Block 46 Data Block 35 Parity (3,2,2) Data Block 43 Data Block 39 Data Block 47

The File System Example: Updating a abstraction RAID with rotating parity Presents applications with persistent, named data Two main components: 4 blocks files directories Strip (0,0) What I/O ops to update block 21? Strip (1,x) Strip (2,0) Strip (3,0) Strip (4,0) Parity (0,0,0) Data Block 0 Data Block 4 Data Block 8 Data Block 12 Stripe read data block 21 Data Block 9 Data Block 13 Parity (1,0,0) Data Block 1 Data Block 5 Data Block 10 Data Block 14 0 Parity (2,0,0) Data Block 2 Data Block 6 Parity (3,0,0) Data Block 3 read parity block (1,1,1) Data Block 7 Data Block 11 Data Block 15 Strip (0,1) Strip (1,1) Strip (2,1) Strip (3,1) Strip (4,1) Data Block 16 Parity (0,1,1) compute P tmp = P 1,1,1 ⊕ D 21 to remove D 21 from parity Data Block 20 Data Block 24 Data Block 28 Stripe Data Block 17 Parity (1,1,1) Data Block 21 Data Block 25 Data Block 29 calculations Data Block 18 Parity (2,1,1) Data Block 22 Data Block 26 Data Block 30 1 Data Block 19 Parity (3,1,1) Data Block 23 Data Block 27 Data Block 31 compute P’ 1,1,1 = P tmp ⊕ D’ 21 Strip (0,2) Strip (1,2) Strip (2,2) Strip (3,2) Strip (4,2) Data Block 32 Parity (0,2,2) Data Block 40 Data Block 36 Data Block 44 write D’ 21 to disk 2 Stripe Data Block 33 Data Block 37 Parity (1,2,2) Data Block 41 Data Block 45 2 Data Block 34 Parity (2,2,2) Data Block 42 Data Block 38 Data Block 46 write P’ 1,1,1 to disk 1 Data Block 35 Parity (3,2,2) Data Block 43 Data Block 39 Data Block 47 The File The Directory A file is a named collection of data. The directory provides names for files a list of human readable names A file has two parts a mapping from each name to a specific underlying file data – what a user or application puts in it or directory (hard link) array of untyped bytes (in MacOS HFS, multiple streams per a soft link is instead a mapping from a file name to file) another file name metadata – information added and managed by the OS alias: a soft link that continues to remain valid when the size, owner, security info, modification time (path of) the target file name changes

Recommend

More recommend