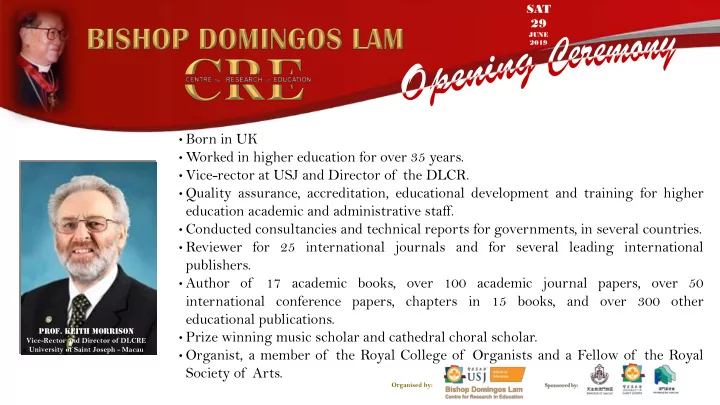

SAT 29 JUNE 2019
Prof. KEITH MORRISON
Vice-Rector and Director of DLCRE, University of Saint Joseph - Macau
• Born in the UK.
• Worked in higher education for over 35 years.
• Vice-rector at USJ and Director of the DLCRE.
• Quality assurance, accreditation, educational development and training for higher education academic and administrative staff.
• Conducted consultancies and technical reports for governments in several countries.
• Reviewer for 25 international journals and for several leading international publishers.
• Author of 17 academic books, over 100 academic journal papers, over 50 international conference papers, chapters in 15 books, and over 300 other educational publications.
• Prize-winning music scholar and cathedral choral scholar.
• Organist, a member of the Royal College of Organists and a Fellow of the Royal Society of Arts.

RESEARCH EVIDENCE IN EDUCATION
Keith Morrison

SEARCHING FOR CERTAINTY
• ‘Knowledge is not so precise a concept as is commonly thought . . . A great many things which have been thought to be certain turn out to be untrue, and there’s no shortcut to knowledge. . . . [W]e ought not to be dogmatic’ (Bertrand Russell).

EXTRACTS FROM THE MISSION, VISION AND AIMS
• Mission statement: “. . . to have an impact on education and society in Macau and beyond”.
• Vision statement: “. . . a centre of excellence in research in education, through quality research training, dissemination, uptake and impact”.
• Aims:
  • To have an impact on educational work and society in Macau and beyond, linking research and educational practice, policy making, decision making and action.
  • To disseminate the results of research through a range of channels and to be a resource for educational research findings and their dissemination.
  • To run training and development on research in education.
  • To provide seminars, workshops, conferences etc. on research in education.

Evidence-based learning and ‘What Works’ 2019

“In education, new policies and interventions are rarely based on good prior evidence of effectiveness and of their side effects. Many policy areas are evidence-resistant . . . .” (p. 3).

TWO WORLDS
• POLICY MAKERS: Agendas
• RESEARCHERS: Evidence

• School students tend to already know 40-50 per cent of what we teach them, but the 40-50 per cent differs across students.
• Roughly one third of what students learn will be unique to them, i.e. not known by any other student.
• We have little or no idea of what is going on inside a student’s mind.
• If students encounter a concept on at least 3 different occasions, there is an 80% chance that they will know it after 6 months.
• 80% of the feedback that students get is from each other, and 80% of that is wrong.
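The last claim compounds two proportions. Taking both 80% figures at face value, the share of all the feedback a student receives that is wrong feedback from peers works out as:

```latex
P(\text{peer} \cap \text{wrong}) = P(\text{peer}) \times P(\text{wrong} \mid \text{peer}) = 0.80 \times 0.80 = 0.64
```

On these figures, roughly two-thirds (64%) of all feedback would be incorrect feedback from other students.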

REFORMULATE ‘WHAT WORKS’
• ‘What works, for whom, in the presence of which factors and conditions and in the absence of which factors and conditions, singly or in combination, in what ways and with what side effects, following what causal chains, how successfully, based on what and whose evidence and criteria, with what level of trustworthiness, and in whose eyes?’

TEN CAUTIONS IN USING EDUCATIONAL RESEARCH
1. The need for robust research (internal validity).
2. External validity, transferability and generalizability.
3. Generalizability and transferability through research syntheses, meta-analysis and meta-meta-analysis.
4. Appropriate and secure methods of data analysis.
5. Educational research and certainty.
6. ‘Evidence’ and neutrality.
7. Educational research, policy making and practice: ‘is’ and ‘ought’, ethics, morals and values.
8. Privileging certain types of educational research.
9. Teachers as decision makers in the ‘what works’ agenda.
10. Teachers’ professional responsibility in using research.

THE NEED FOR ROBUST RESEARCH (INTERNAL VALIDITY)
• The research design does not fit the research purposes or the research questions.
• Causality is confused with correlation.
• Insufficient controls are placed on the data and the data analysis.
• Counterfactuals, comparison groups and counterfactual analysis are absent.
• Claims for causality are spurious.
• The specific contingencies of specific situations are excluded, overlooked or neglected.
• Studies are too small for adequate statistical power, sound statistical analysis or secure generalization.
• Missing data and attrition make the data analysis unreliable.
• Replication studies are few and unreliable (unrepeatable).
• Measurements are not as exact as they are taken to be.
• Conflicts of interest may suppress negative findings.
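To make the point about statistical power concrete, here is a minimal Python sketch of the standard normal-approximation formula for the sample size per group needed to detect a standardized mean difference d in a two-group comparison, n ≈ 2(z₁₋α/₂ + z₁₋β)²/d². The function name `n_per_group` and the defaults α = 0.05 and power = 0.80 are illustrative assumptions, not figures from the presentation.

```python
import math
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate sample size per group for a two-sided, two-sample comparison.

    Normal approximation: n = 2 * (z_{1-alpha/2} + z_{power})**2 / d**2,
    rounded up to the next whole participant. (Hypothetical helper.)
    """
    if d <= 0:
        raise ValueError("effect size d must be positive")
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for a two-sided test
    z_beta = z.inv_cdf(power)           # quantile matching the desired power
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / d ** 2)


for d in (0.8, 0.5, 0.2):
    print(f"d = {d}: about {n_per_group(d)} participants per group")
```

On this approximation, about 25 participants per group suffice to detect a large effect (d = 0.8), but a small effect (d = 0.2) needs about 393 per group. A study with a few dozen students per arm can therefore reliably detect only large effects.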

EXTERNAL VALIDITY, TRANSFERABILITY AND GENERALIZABILITY
• Just because research has shown that such-and-such might ‘work’ in such-and-such a research setting, this is no reason to believe that it will work in a different temporal, locational or contextual setting, or even in the same setting a second time.
• Findings don’t always travel well. ‘Causal roles do not travel well’ (p. 88). Contexts, causal conditions and their relative strengths differ.
N. Cartwright & J. Hardie (2012) Evidence-Based Policy: A Practical Guide to Doing It Better. New York, NY: Oxford University Press.

MANY WORLDS
RESEARCHERS | POLICY MAKERS | PARENTS | STUDENTS | EDUCATIONISTS
The Alliance for Useful Evidence (2018): ‘We identified examples of different organisations reaching different conclusions about the same intervention; one thought it worked well, and the other was less confident’.

GOVERNMENT HEALTH WARNING
• ‘What works’ should indicate the limits of its applicability, its negative as well as positive effects, the limits on what can and cannot be safely concluded from the evidence, the conditions under which it might or might not ‘work’, and a covering statement to say that, actually, it might not ‘work’ in specific contexts.

GENERALIZABILITY AND TRANSFERABILITY THROUGH RESEARCH SYNTHESES, META-ANALYSIS AND META-META-ANALYSIS
• Meta-analysis
• Meta-meta-analyses
• Research syntheses
• Systematic reviews

EDUCATION ENDOWMENT FOUNDATION

• A synthesis of over 800 meta-analyses of over 50,000 studies, 150,000 effect sizes, and circa 240 million students.
• Later increased to over 1,500 meta-analyses.
Heavily criticised:
• Bergeron (2017): ‘pseudo-science’.
• Slavin (2018):
  • Overlooks the importance of smaller effects achieved by different groups;
  • Uses effect sizes from studies that are correlational, have insufficient controls, lack control groups, or use pre-post designs;
  • Uses studies without sufficient attention to their meaning or quality, i.e. does not screen them sufficiently.
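Slavin's point about pre-post designs can be illustrated with a small worked example. The Python sketch below uses invented scores (hypothetical, for illustration only) in which a treated group and an untreated comparison group both improve over time, for instance through ordinary maturation. An effect size computed from the treated group's pre-post gain alone credits all of that growth to the intervention. Subtracting the comparison group's gain isolates the part that could be attributed to the intervention. The function names and the common standard deviation of 10 are assumptions.

```python
def pre_post_effect_size(pre_mean: float, post_mean: float, sd: float) -> float:
    """Gain of a single group divided by the SD: no comparison group."""
    return (post_mean - pre_mean) / sd


def controlled_effect_size(treat_gain: float, control_gain: float, sd: float) -> float:
    """Difference in gains between treated and comparison groups, divided by the SD."""
    return (treat_gain - control_gain) / sd


# Hypothetical data: both groups start at 50; the comparison group gains
# 8 points without the intervention, the treated group gains 10.
SD = 10.0
naive = pre_post_effect_size(pre_mean=50.0, post_mean=60.0, sd=SD)
controlled = controlled_effect_size(treat_gain=10.0, control_gain=8.0, sd=SD)
print(f"pre-post effect size:   {naive:.2f}")       # 1.00
print(f"controlled effect size: {controlled:.2f}")  # 0.20
```

On these invented numbers the pre-post design reports an effect five times larger than the controlled comparison. That inflation carries straight into any synthesis that pools such studies uncritically.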

1. Meta-analysis is simply ‘statistical alchemy for the 21st century. Important inconsistencies are ignored and buried in statistical slurry.’
   Feinstein, A. (1995). Meta-analyses: statistical alchemy for the 21st century. Journal of Clinical Epidemiology, 48(1), pp. 71-79.
2. Hattie ‘is merely shovelling meta-analyses containing massive bias into meta-meta-analyses that reflect the same biases’.
   Slavin, R. E. (2018). John Hattie is Wrong. Robert Slavin’s Blog, 21 June 2018. https://robertslavinsblog.wordpress.com/2018/06/21/john-hattie-is-wrong/
3. See, B.H. (2018): quality problems; sampling problems; scope; conflicts of interest; biased reporting; insufficient warrants for the conclusions drawn and claims made; data quality; lack of trials; non-experimental designs; lack of clarity; data analysis.
   See, B.H. (2018). 'Evaluating the evidence in evidence-based policy and practice: examples from systematic reviews of literature.' Research in Education, 102(1), pp. 37-61.
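Feinstein's complaint that inconsistencies are ‘buried in statistical slurry’ can be seen in how a standard inverse-variance (fixed-effect) pooled estimate behaves. The Python sketch below pools five hypothetical study effect sizes (invented for illustration) that disagree sharply. It then computes Cochran's Q and the I² heterogeneity statistic. The function name `fixed_effect_pool` and the data are assumptions. A random-effects model would widen the uncertainty but would still report a single average.

```python
import math


def fixed_effect_pool(effects, variances):
    """Inverse-variance weighted mean effect, its standard error, Cochran's Q and I^2."""
    weights = [1.0 / v for v in variances]
    total_w = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_w
    se = math.sqrt(1.0 / total_w)
    # Cochran's Q: weighted squared deviations of each study from the pooled mean
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))
    df = len(effects) - 1
    # I^2: share of total variation attributed to between-study heterogeneity (floored at 0)
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return pooled, se, q, i2


# Hypothetical studies: two find large benefits, one finds little, two find harm.
effects = [0.9, 0.8, 0.1, -0.3, -0.4]
variances = [0.02, 0.02, 0.02, 0.02, 0.02]
pooled, se, q, i2 = fixed_effect_pool(effects, variances)
print(f"pooled d = {pooled:.2f} (SE {se:.3f}), Q = {q:.1f}, I^2 = {i2:.0%}")
```

On these invented figures the pooled d is about 0.22, which reads like a modest positive effect. I² is about 95%, however, signalling that almost all of the variation between studies is genuine disagreement rather than sampling error. The single headline number hides exactly that disagreement.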

APPROPRIATE AND SECURE METHODS OF DATA ANALYSIS
• Statistical analysis uses the wrong statistics.
• Null hypothesis significance testing is discredited.
• Effect size is used incorrectly.
• Coding damages the integrity of qualitative data.
• Data, and what they (claim to) show, are misinterpreted.
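One reason significance testing misleads is that a p-value mixes effect size with sample size. The Python sketch below holds a very small standardized effect fixed (d = 0.05) and varies only the number of students per group. It assumes a two-sided z-test for the difference between two equal-sized groups with a known common standard deviation. The function name `two_sample_z_p_value` is hypothetical.

```python
import math


def two_sample_z_p_value(d: float, n_per_group: int) -> float:
    """Two-sided p-value for a standardized mean difference d between two groups of size n.

    Assumes known, equal standard deviations, so z = d * sqrt(n / 2).
    """
    z = d * math.sqrt(n_per_group / 2)
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))


d = 0.05  # the same, very small, effect in every row
for n in (50, 500, 5_000, 20_000):
    p = two_sample_z_p_value(d, n)
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"n = {n:>6} per group: p = {p:.3g} ({verdict} at 0.05)")
```

The effect is identical in every row, yet the verdict flips from ‘not significant’ (p ≈ 0.80 with 50 per group) to ‘significant’ (p ≈ 0.012 with 5,000 per group). Only n changes. This is one reason effect sizes with confidence intervals are often reported alongside, or instead of, p-values, although effect sizes carry their own pitfalls.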

EDUCATIONAL RESEARCH AND CERTAINTY
‘The enormous problem faced in basing policy on research is that it is almost impossible to make educational policy that is not based on research. Almost every educational practice that has ever been pursued has been supported with data by somebody. I don’t know a single failed policy, ranging from the naturalistic teaching of reading, to the open classroom, to the teaching of abstract set-theory in third-grade math that hasn’t been research-based. Experts have advocated almost every conceivable practice short of inflicting permanent bodily harm.’ (E. D. Hirsch, Jr., Address to California State Board of Education, April 10, 1997. https://www.mathematicallycorrect.com/edh2cal.htm)
