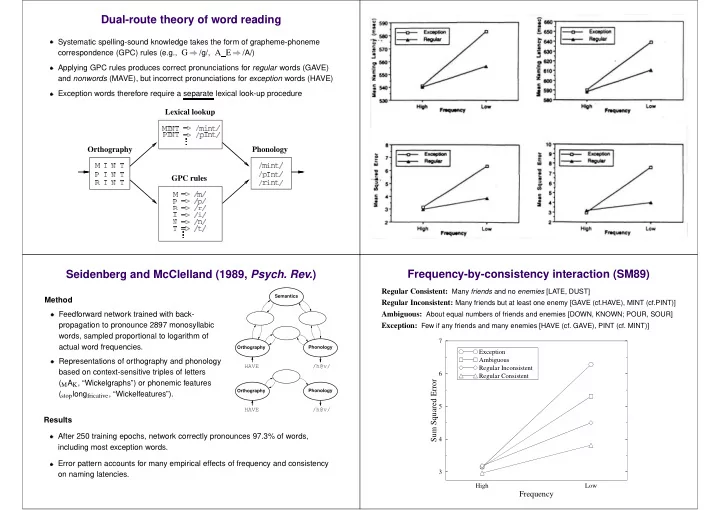

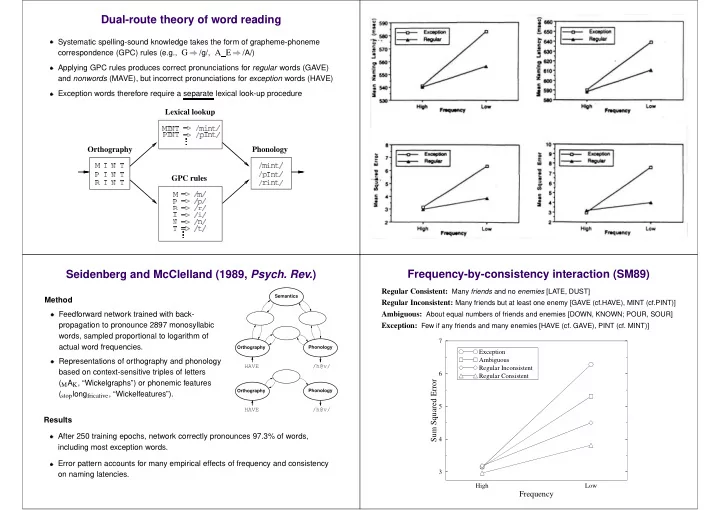

Dual-route theory of word reading

Systematic spelling-sound knowledge takes the form of grapheme-phoneme correspondence (GPC) rules (e.g., G → /g/, A_E → /A/). Applying GPC rules produces correct pronunciations for regular words (GAVE) and nonwords (MAVE), but incorrect pronunciations for exception words (HAVE). Exception words therefore require a separate lexical look-up procedure.

Seidenberg and McClelland (1989, Psych. Rev.)

Method: Feedforward network trained with back-propagation to pronounce 2897 monosyllabic words, sampled proportional to logarithm of actual word frequencies. Representations of orthography and phonology based on context-sensitive triples of letters (MAK, "Wickelgraphs") or phonemic features (stop long fricative, "Wickelfeatures").

Results: After 250 training epochs, network correctly pronounces 97.3% of words, including most exception words. Error pattern accounts for many empirical effects of frequency and consistency on naming latencies.

Frequency-by-consistency interaction (SM89)

- Regular Consistent: Many friends and no enemies [LATE, DUST]
- Regular Inconsistent: Many friends but at least one enemy [GAVE (cf. HAVE), MINT (cf. PINT)]
- Ambiguous: About equal numbers of friends and enemies [DOWN, KNOWN; POUR, SOUR]
- Exception: Few if any friends and many enemies [HAVE (cf. GAVE), PINT (cf. MINT)]

[Figure: sum squared error by frequency (High, Low) for Exception, Ambiguous, Regular Inconsistent, and Regular Consistent words.]
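The dual-route account described above can be illustrated with a very small sketch. Everything in it is an assumption made for illustration: the rule table covers only four consonants and the A_E vowel pattern, the lexicon holds a single exception word, and the phoneme strings ("A" for the long vowel, "a" for the short one) are ad hoc. It is not the rule set of any published model.

```python
# Toy dual-route reader (illustrative assumptions only).

# Hypothetical GPC rules for single consonant letters.
CONSONANT_RULES = {"g": "g", "m": "m", "h": "h", "v": "v"}

# Hypothetical lexicon: exception words are stored whole.
LEXICON = {"have": "hav"}


def gpc_route(word: str) -> str:
    """Pronounce a consonant-A-consonant-E string by rule (A_E -> /A/)."""
    w = word.lower()
    if len(w) != 4 or w[1] != "a" or w[3] != "e":
        raise ValueError("toy rules only cover the C-A-C-E pattern")
    # The final E is silent; it only marks the vowel as long.
    return CONSONANT_RULES[w[0]] + "A" + CONSONANT_RULES[w[2]]


def dual_route(word: str) -> str:
    """Use lexical look-up for stored words, otherwise apply GPC rules."""
    w = word.lower()
    if w in LEXICON:
        return LEXICON[w]
    return gpc_route(w)


for item in ("GAVE", "MAVE", "HAVE"):
    print(item, "rules:", gpc_route(item), "dual-route:", dual_route(item))
```

On its own, the rule route gives HAVE the same vowel as GAVE, which is a regularization error; only the look-up route recovers the stored pronunciation.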

Nonword reading (SM89)

Fails to pronounce nonwords as well as skilled readers.

Representation and generalization: Condensing regularities

The "dispersion" problem (Wickelgraph triples, with `_` marking a word boundary):

| Word | Triples |
| --- | --- |
| LOG | `_LO` `LOG` `OG_` |
| GLAD | `_GL` `GLA` `LAD` `AD_` |
| SPLIT | `_SP` `SPL` `PLI` `LIT` `IT_` |

Capturing orthographic and phonological structure

- Phonotactic constraints: Possible phoneme sequences are strongly constrained by the structure of the articulatory system and by language-specific learning.
- Alphabetic principle: Parts of written words (graphemes) correspond to parts of pronunciations (phonemes).
- Ordering of graphemes and phonemes is (virtually) unambiguous within clusters of consonants/vowels.

| | Onset | Vowel | Coda |
| --- | --- | --- | --- |
| Units | S P G L | O A I | G D T |
| LOG | L | O | G |
| GLAD | G L | A | D |
| SPLIT | S P L | I | T |
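The contrast between the two coding schemes above can be made concrete with a short sketch. The function names, the `_` boundary marker, and the vowel set are assumptions chosen for illustration; the splitter only handles strings with a single vowel cluster.

```python
VOWELS = set("AEIOU")  # assumption: plain vowel letters only


def wickelgraphs(word: str) -> list[str]:
    """Context-sensitive letter triples, with '_' as the word boundary."""
    padded = f"_{word}_"
    return [padded[i:i + 3] for i in range(len(padded) - 2)]


def onset_vowel_coda(word: str) -> tuple[str, str, str]:
    """Split a monosyllable into onset, vowel, and coda letter clusters."""
    first = next(i for i, ch in enumerate(word) if ch in VOWELS)
    last = first
    while last < len(word) and word[last] in VOWELS:
        last += 1
    return word[:first], word[first:last], word[last:]


# Dispersion: the L in LOG and the L in GLAD share no triple at all ...
shared_triples = set(wickelgraphs("LOG")) & set(wickelgraphs("GLAD"))

# ... but in the slot-based code both words use the same onset letter L.
shared_onset = set(onset_vowel_coda("LOG")[0]) & set(onset_vowel_coda("GLAD")[0])

print(wickelgraphs("GLAD"), onset_vowel_coda("GLAD"))
print(shared_triples, shared_onset)
```

Because the triple code spreads what is known about a letter across many unrelated units, learning about L in one context does not transfer to another; the onset/vowel/coda code condenses it onto one unit per cluster position.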

[Figures: Representations; Nonword reading.]

Simulation: Feedforward network

Training: Trained with back-propagation on 2998 monosyllabic words (SM89 corpus plus additional 101 words) using log-frequencies to scale weight changes. Error measured by cross-entropy between states and targets:

$$C = -\sum_j \left[ t_j \log(a_j) + (1 - t_j) \log(1 - a_j) \right]$$

Adaptation of connection-specific learning rates (delta-bar-delta; Jacobs, 1988). After 300 training epochs, network pronounces the entire training corpus correctly (100% correct).

[Figure: Frequency-by-consistency interaction. Cross entropy (0.00 to 0.20) by frequency (High, Low) for Exception, Ambiguous, Regular Inconsistent, and Regular Consistent words.]
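As a minimal sketch of the error measure named above, the function below computes the cross-entropy between a vector of unit states and a vector of binary targets. The function name and the clipping constant `eps` are assumptions added for numerical safety; they are not taken from the slides.

```python
import math


def cross_entropy(targets, activations, eps=1e-12):
    """C = -sum_j [t_j log(a_j) + (1 - t_j) log(1 - a_j)]."""
    total = 0.0
    for t, a in zip(targets, activations):
        # Clip activations away from 0 and 1 so log() stays finite.
        a = min(max(a, eps), 1.0 - eps)
        total -= t * math.log(a) + (1.0 - t) * math.log(1.0 - a)
    return total


# Uninformative outputs give a large error; near-correct outputs a small one.
print(cross_entropy([1, 0], [0.5, 0.5]))
print(cross_entropy([1, 0], [0.99, 0.01]))
```

Unlike summed squared error, this measure keeps growing as a unit approaches the wrong extreme, so strongly wrong outputs are penalized heavily.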

Analytic account of frequency/consistency effects

Train with Hebbian learning on set of patterns indexed by p:

$$w_{ij} = \sum_p \mathrm{freq}^{(p)} \, a_i^{(p)} a_j^{(p)}$$

Response of output unit j to test pattern t:

$$
\begin{aligned}
a_j^{(t)} &= \sigma\Big( \sum_i a_i^{(t)} w_{ij} \Big) \\
&= \sigma\Big( \sum_p \mathrm{freq}^{(p)} a_j^{(p)} \sum_i a_i^{(p)} a_i^{(t)} \Big) \\
&= \sigma\Big( \sum_p \mathrm{freq}^{(p)} \, \mathrm{sim}^{(p,t)} \, a_j^{(p)} \Big) \\
&= \sigma\Big( \mathrm{freq}^{(t)} + \sum_f \mathrm{freq}^{(f)} \, \mathrm{sim}^{(f,t)} - \sum_e \mathrm{freq}^{(e)} \, \mathrm{sim}^{(e,t)} \Big)
\end{aligned}
$$

where f indexes friends of pattern t and e indexes enemies. The last step takes the target on unit j to be +1 for the test pattern and its friends and -1 for its enemies, with the test pattern's similarity to itself equal to 1.

The additive combination of frequency and consistency has a nonlinear (asymptotic) effect on output activations.

[Figure: Frequency-by-consistency interaction (raw frequencies). Cross entropy (0.00 to 0.20) by frequency (High, Low) for Exception, Ambiguous, Regular Inconsistent, and Regular Consistent words.]

[Figure: Nonword reading (raw frequencies).]
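A small numeric sketch can show why adding frequency and consistency inside a sigmoid produces an interaction. All numbers below are invented for illustration (they are not simulation results), and the function name is hypothetical; friends and enemies are passed as (frequency, similarity) pairs.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def output_activation(freq_t, friends, enemies):
    """sigma(freq_t + sum_f freq_f * sim_f - sum_e freq_e * sim_e)."""
    net = freq_t
    net += sum(freq * sim for freq, sim in friends)
    net -= sum(freq * sim for freq, sim in enemies)
    return sigmoid(net)


# Made-up neighborhoods: a consistent word has only friends,
# an exception word has only enemies.
consistent = {"friends": [(2.0, 1.0)], "enemies": []}
exception = {"friends": [], "enemies": [(1.0, 1.0)]}

# Made-up word frequencies.
high, low = 3.0, 1.5

gap_high = output_activation(high, **consistent) - output_activation(high, **exception)
gap_low = output_activation(low, **consistent) - output_activation(low, **exception)

print(gap_high, gap_low)
```

With these values the consistency gap is larger for the low-frequency word, because the high-frequency word already sits near the asymptote of the sigmoid where extra input changes the activation very little.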

Simulation: Attractor network

$$\eta_j^{(t)} = \tau \sum_i w_{ij} \, a_i^{(t-\tau)} + (1 - \tau) \, \eta_j^{(t-\tau)}, \qquad a_j^{(t)} = \frac{1}{1 + \exp\big(-\eta_j^{(t)}\big)}$$

Training: Trained with a continuous version of back-propagation through time (Pearlmutter, 1989), using actual word frequencies, cross-entropy, and delta-bar-delta. Run for 2.0 units of time, receiving no error before time 1.0; discretization τ = 0.2, reduced to 0.01 at end of training. During testing, network responds when phoneme states stop changing. After 1900 training epochs, the network pronounces all but 25 words correctly (99.2% correct).

[Figure: Nonword reading (attractor network).]

[Figure: Frequency-by-consistency interaction. Time to settle (1.65 to 1.95) by frequency (High, Low) for Exception, Ambiguous, Regular Inconsistent, and Regular Consistent words.]

Generalization with componential attractors

Very strong orthography-phonology systematicity within consonant clusters (less for vowels); relative independence between clusters (except for vowels in exception words). Connectionist learning is sensitive to which parts of the input reliably predict (e.g., are correlated with) each part of the output. Network develops componential attractors for words that can recombine to support nonword reading.
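The settling process described above can be sketched as a discretized update in which each unit's net input is a running average of its summed input, and the network "responds" once activations stop changing. This is a toy under stated assumptions: the `external` vector stands in for input arriving from clamped units, the stopping tolerance `tol` is arbitrary, and the weights in the usage example are invented.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def settle(weights, external, tau=0.2, max_time=2.0, tol=1e-3):
    """Iterate eta_j <- tau * input_j + (1 - tau) * eta_j until stable.

    weights[i][j] is the connection from unit i to unit j.
    Returns (activations, time at which the states stopped changing).
    """
    n = len(weights)
    eta = [0.0] * n
    act = [sigmoid(x) for x in eta]
    steps = int(round(max_time / tau))
    for step in range(1, steps + 1):
        new_eta = []
        for j in range(n):
            summed = sum(weights[i][j] * act[i] for i in range(n)) + external[j]
            new_eta.append(tau * summed + (1.0 - tau) * eta[j])
        new_act = [sigmoid(x) for x in new_eta]
        change = max(abs(a - b) for a, b in zip(new_act, act))
        eta, act = new_eta, new_act
        if change < tol:
            return act, step * tau
    return act, max_time


# Two mutually excitatory units driven by positive input (made-up values).
states, time_to_settle = settle([[0.0, 1.0], [1.0, 0.0]], [1.0, 1.0])
print(states, time_to_settle)
```

The time at which the loop exits plays the role of the settling time plotted in the attractor simulations; a smaller `tau` gives a finer approximation of the continuous dynamics at the cost of more update steps.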

[Figure: Orthographic activity boundary (0.0 to 0.6) by phonological cluster (Onset, Vowel, Coda) for orthographic onset, vowel, and coda. Panels: Regular Consistent (n=46), Ambiguous (n=38), Exception (n=42).]

Impaired reading in "surface" dyslexia

Brain damage to left temporal lobe (stroke, head injury, or degenerative disease) in premorbidly literate adult. Severe impairment to semantics, or to mapping from semantics to phonology.

Word reading accuracy influenced by frequency and consistency (correct performance; HFR/LFR = high-/low-frequency regular words, HFE/LFE = high-/low-frequency exception words, NW = nonwords):

| Patient | HFR | LFR | HFE | LFE | %Reg's | NW |
| --- | --- | --- | --- | --- | --- | --- |
| MP | 95 | 98 | 93 | 73 | 90 | 95.5 |
| KT | 100 | 89 | 47 | 26 | 85 | 100 |

Exception words produce regularization errors: DEAF "deef", FLOOD "flude", SAID "sayed", GONE "goan", BROAD "brode", STEAK "steek", SHOE "show", SEW "sue", ONE "own", SOOT "suit".

Nonword reading accuracy is normal. Word and nonword naming latencies are normal.

Similarity structure among representations

Two sets of representations are structured similarly if their pairwise similarities are correlated. Tested with 48 body-matched triples of nonwords, regular words, and exception words (MAVE, GAVE, HAVE).

[Figure: Correlation (0.5 to 1.0) by representation pair (Orth-Phon, Hidden-Orth, Hidden-Phon) for Nonwords, Regular Words, and Exception Words.]

Surface dyslexia: Damage to phonological pathway?

Attractor network: Lesioning procedure. Remove specified proportion of connections between two groups of units. Results averaged over 50 different instances of lesion at given severity/location.

[Figure: Percent correct (0 to 100) on HF Reg, LF Reg, HF Exc, LF Exc, Reg's, and Nonwords for Patient MP, Patient KT, Lesion 5% GH-conns, and Lesion 30% GH-conns.]
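The lesioning procedure described above can be sketched as follows. This is a minimal illustration, assuming that a connection is "removed" by zeroing its weight and that performance is summarized by some caller-supplied `score` function; both function names and the seed handling are hypothetical.

```python
import random


def lesion(weights, proportion, rng):
    """Return a copy of the weight matrix with a random proportion of
    connections removed (set to 0.0)."""
    coords = [(i, j) for i, row in enumerate(weights) for j in range(len(row))]
    n_remove = round(proportion * len(coords))
    removed = set(rng.sample(coords, n_remove))
    return [
        [0.0 if (i, j) in removed else w for j, w in enumerate(row)]
        for i, row in enumerate(weights)
    ]


def average_over_lesions(weights, proportion, score, n_instances=50, seed=0):
    """Average a score over independent random lesions of the same severity."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_instances):
        total += score(lesion(weights, proportion, rng))
    return total / n_instances


def fraction_intact(matrix):
    """Stand-in score: share of connections that are still nonzero."""
    cells = [w for row in matrix for w in row]
    return sum(1 for w in cells if w != 0.0) / len(cells)


intact_weights = [[1.0] * 10 for _ in range(10)]
print(average_over_lesions(intact_weights, 0.30, fraction_intact))
```

In an actual simulation the score would be reading accuracy on a word set after the lesion; averaging over many random instances separates the effect of severity and location from the luck of which particular connections were cut.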
