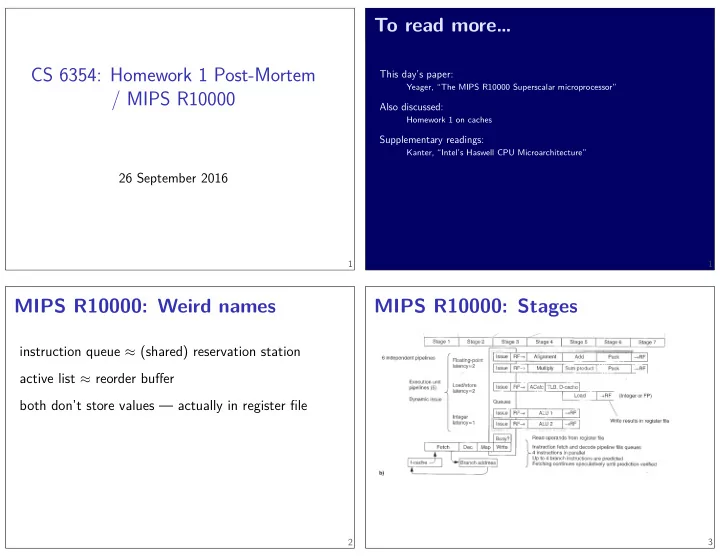

CS 6354: Homework 1 Post-Mortem / MIPS R10000
26 September 2016

To read more…
- This day's paper: Yeager, "The MIPS R10000 Superscalar Microprocessor"
- Also discussed: Homework 1 on caches
- Supplementary readings: Kanter, "Intel's Haswell CPU Microarchitecture"

MIPS R10000: Stages

MIPS R10000: Weird names
- instruction queue ≈ (shared) reservation station
- active list ≈ reorder buffer
- both don't store values: the values are actually in the register file

MIPS R10000: Register Renaming
- explicit register map data structure

MIPS R10000: Register Renaming/Queues

MIPS R10000: Instruction Queue

MIPS R10000: Instruction Queue v. Reservation Station
- shared register file
- queue only tracks register numbers
- metadata:
  - branch mask (for branch mispredicts)
  - ready bits (local copy of busy bits)
  - pointer to active list (ROB)
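A minimal sketch of renaming with an explicit register map, a free list, and busy bits may help make the data structures concrete. The names (`RenameState`, `rename_instr`, and so on) and the table sizes are assumptions chosen for illustration; this is not the R10000's actual hardware organization.

```c
#include <assert.h>
#include <stdbool.h>

#define NUM_LOGICAL  32   /* architectural registers (assumed) */
#define NUM_PHYSICAL 64   /* physical registers (assumed) */

typedef struct {
    int  map[NUM_LOGICAL];         /* register map: logical -> physical */
    int  free_list[NUM_PHYSICAL];  /* stack of unassigned physical registers */
    int  free_count;
    bool busy[NUM_PHYSICAL];       /* busy bit: result not yet written */
} RenameState;

typedef struct { int dest, src1, src2; } RenamedInstr;

/* Start with logical register i mapped to physical register i. */
void rename_init(RenameState *s) {
    for (int i = 0; i < NUM_LOGICAL; ++i) s->map[i] = i;
    for (int p = 0; p < NUM_PHYSICAL; ++p) s->busy[p] = false;
    s->free_count = 0;
    for (int p = NUM_PHYSICAL - 1; p >= NUM_LOGICAL; --p)
        s->free_list[s->free_count++] = p;   /* lowest number ends on top */
}

/* Rename "dest <- src1 op src2". The previous mapping of dest is returned
 * through old_dest so it can be freed when the instruction graduates. */
RenamedInstr rename_instr(RenameState *s, int dest, int src1, int src2,
                          int *old_dest) {
    assert(s->free_count > 0);        /* a real pipeline would stall instead */
    RenamedInstr r;
    r.src1 = s->map[src1];            /* read sources before remapping dest */
    r.src2 = s->map[src2];
    *old_dest = s->map[dest];
    r.dest = s->free_list[--s->free_count];
    s->map[dest] = r.dest;
    s->busy[r.dest] = true;           /* value not produced yet */
    return r;
}

/* The functional unit wrote the result: dependents may now issue. */
void rename_complete(RenameState *s, int phys) { s->busy[phys] = false; }

/* The instruction graduated: the old physical register can be reused. */
void rename_graduate(RenameState *s, int old_dest) {
    s->free_list[s->free_count++] = old_dest;
}
```

Because the queue entries hold only the physical register numbers in `RenamedInstr`, values never need to be copied into the queue; readiness is checked through the busy bits.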

MIPS R10000: Functional Units

MIPS R10000: Memory requests
- 16 entry address queue
- kept in program order
- tracks dependencies (overlapping memory accesses)
- special-case for two accesses to same cache set
- load to store forwarding

MIPS R10000: Synchronization
- execute memory accesses in order
- … in case other processors are listening
- match cache accesses against all loads
- treat like exception if other processors are listening

Moving load/stores around
- program order: store X, store Y, load Z
- desired (fast) order: load Z, store X, store Y
- what if X == Z or Y == Z?
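The "what if X == Z or Y == Z?" question amounts to an address-overlap check against every earlier store. A minimal sketch follows, assuming all earlier store addresses are already known; `MemOp` and `load_may_bypass` are hypothetical names, and the real address queue tracks these dependencies in hardware rather than with a loop.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    bool     is_store;
    uint64_t addr;   /* starting byte address */
    unsigned size;   /* access size in bytes */
} MemOp;

/* True if the byte ranges [addr, addr + size) of a and b intersect. */
static bool overlaps(const MemOp *a, const MemOp *b) {
    return a->addr < b->addr + b->size && b->addr < a->addr + a->size;
}

/* queue[0..n) is in program order. Returns true if the load at index idx
 * can safely execute before every earlier store in the queue. */
bool load_may_bypass(const MemOp *queue, size_t n, size_t idx) {
    assert(idx < n);
    assert(!queue[idx].is_store);
    for (size_t i = 0; i < idx; ++i) {
        if (queue[i].is_store && overlaps(&queue[i], &queue[idx]))
            return false;   /* X == Z or Y == Z: must wait (or forward) */
    }
    return true;
}
```

When the check fails, the load either waits for the store or takes its data from the store directly (load to store forwarding).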

MIPS R10000: Weird Tricks
- predecoding in instruction cache: opcode preprocessed
- instruction cache specialized for unaligned accesses within a block
- multibanked data cache: half the sets in one cache, half in another

SGI's workload
- graphics
- lots of floating point
- big images

core storage (approx sizes)
- data: approx 8 Kbit
  - register files: 8192 bits
- metadata: approx 4 Kbit
  - register map tables: 390 bits
  - free list: 192 bits
  - busy bits: 128 bits
  - instruction queues: 1600 bits
  - address queue: 1232 bits
  - active list: 672 bits

LL/SC atomic increment

    retry:
        ll   $t0, value      // $t0 ← value
        addi $t0, $t0, 1     // $t0 ← $t0 + 1
        sc   $t0, value      // value ← $t0 if memory unchanged
                             // $t0 ← 1 if stored, 0 otherwise
        beqz $t0, retry      // if sc unsuccessful, goto retry
        nop                  // (delay slot)
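For comparison, the same retry loop can be written portably with C11 atomics. This is a sketch, not the slide's code: compare-and-swap is a different primitive from LL/SC, although compilers targeting MIPS typically lower it to an `ll`/`sc` loop. The function name `atomic_increment` is chosen here for illustration.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>

/* Atomically add 1 to *value and return the new value. */
int atomic_increment(_Atomic int *value) {
    assert(value != NULL);
    int old = atomic_load(value);   /* plays the role of ll: read the value */
    /* Plays the role of sc + beqz: store old + 1 only if *value still
     * equals old. On failure, old is refreshed with the current contents
     * and the loop retries with the new old + 1. */
    while (!atomic_compare_exchange_weak(value, &old, old + 1)) {
        /* retry */
    }
    return old + 1;
}
```

In practice `atomic_fetch_add(value, 1)` does the same job in one call; the explicit loop is shown only because it mirrors the structure of the assembly.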

evolution of modern processors

| | MIPS R10000 (1996) | Intel Haswell (2013) |
|---|---|---|
| fetch/cycle | 4 instructions | 5 instructions |
| reorder buffer | 32 entry | 192 entry |
| instruction queue | 16 int + 16 FP + 16 mem | 60 unified |
| execute/cycle | 2 int + 2 FP | 2 int/FP + 2 int |
| memory/cycle | 1 load or store | 2 load + 1 store |
| operand width | 32 bit | 32 bit to 256 bit |
| L1 cache | 32K I, 32K D | 32K I, 32K D |
| L2 cache | off-chip | 256K |
| L3 cache | none | 1+MB |
| L1 TLB | 64 entry | 64 entry D, 64 entry I |
| L2 TLB | none | 1024 entry |
| predecoding | in I-cache | extra decoding step |
| micro-op cache | | |
| branch pred. | local, 512 entry | ??? |
| cores/package | 1 | 2–18 |
| threads/core | 1 | 2 |

Micro-ops
- complex instructions can't go to a single functional unit
- trick: split into micro-ops
- complex instruction encodings don't allow pre-decode trick
- Intel Haswell: cache for micro-ops

Homework 1: Rubric
- 140 points total
- For each thing benchmarked:
  - 10 points: tested system described, report parts clear
  - 5 points: raw results and code are included, match description, interpreted plausibly
  - 5 points: benchmark description, including how to read results

Homework 1: General Concerns: Benchmarking Discipline
- how consistent are your measurements?
- is that really an increase?
- be honest
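One way to act on "how consistent are your measurements?" is to repeat each timing several times and report the minimum, median, and maximum rather than a single number. The sketch below is an assumption about how that could be done, not part of the assignment; `Summary`, `summarize`, `clearly_slower`, and `MAX_SAMPLES` are hypothetical names, and the non-overlapping-range rule is a deliberately crude test.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

#define MAX_SAMPLES 64   /* assumed upper bound on repetitions */

typedef struct { double min, median, max; } Summary;

static int cmp_double(const void *a, const void *b) {
    double x = *(const double *)a, y = *(const double *)b;
    return (x > y) - (x < y);
}

/* Summarize n repeated timings of the same benchmark. */
Summary summarize(const double *samples, size_t n) {
    assert(n > 0 && n <= MAX_SAMPLES);
    double sorted[MAX_SAMPLES];
    memcpy(sorted, samples, n * sizeof sorted[0]);
    qsort(sorted, n, sizeof sorted[0], cmp_double);
    Summary s;
    s.min = sorted[0];
    s.max = sorted[n - 1];
    s.median = (n % 2) ? sorted[n / 2]
                       : (sorted[n / 2 - 1] + sorted[n / 2]) / 2.0;
    return s;
}

/* Crude check for "is that really an increase?": b counts as slower than a
 * only if every run of b was slower than every run of a. */
int clearly_slower(Summary a, Summary b) {
    return b.min > a.max;
}
```

If the ranges of two configurations overlap, the difference between them may be noise, and the honest report says so.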

Homework 1: General Concerns: Latency v. Bandwidth
- measuring sizes? better to measure latency
- better to avoid prefetching, e.g. random access pattern, pointer chasing
- memory system relies on overlapping many memory accesses
- bandwidths much better than latencies (everywhere)

Next Time: SMT
- multiple threads on one core
- later: multiple processors/cores

Multithreading

    thread_one_func() {
        for (int i = 0; i < N / 2; ++i)
            sum1 += array[i];
    }

    thread_two_func() {
        for (int i = N / 2; i < N; ++i)
            sum2 += array[i];
    }

    compute_sum() {
        thread_one = thread_create(thread_one_func);
        thread_two = thread_create(thread_two_func);
        wait_for_thread(thread_one);
        wait_for_thread(thread_two);
        sum = sum1 + sum2;
    }

Definition: Thread
- stream of program execution
- own registers
- own program counter (current instruction pointer)
- may or may not share memory
- appears to execute at same time as other threads
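The pointer-chasing pattern recommended for latency measurements can be sketched as follows. Each load depends on the previous one, so accesses cannot overlap, and the random order makes the next address hard to prefetch. The function names are hypothetical, and the use of `rand()` assumes the array has fewer than `RAND_MAX` elements.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Fill next[] so that following it from any index visits all n indices in a
 * random order before returning to the start (Sattolo's algorithm, which
 * yields a permutation consisting of a single cycle). */
void build_cycle(size_t *next, size_t n, unsigned seed) {
    assert(n > 0);
    for (size_t i = 0; i < n; ++i) next[i] = i;
    srand(seed);
    for (size_t i = n - 1; i > 0; --i) {
        size_t j = (size_t)rand() % i;   /* 0 <= j < i, never i itself */
        size_t tmp = next[i];
        next[i] = next[j];
        next[j] = tmp;
    }
}

/* Follow the chain for the given number of steps. Each iteration's load
 * address comes from the previous load, so the loop is latency bound. */
size_t chase(const size_t *next, size_t start, size_t steps) {
    size_t p = start;
    for (size_t s = 0; s < steps; ++s)
        p = next[p];
    return p;
}
```

To estimate latency, one would time a long call to `chase` and divide by `steps`, using the returned index afterwards so the compiler cannot discard the loop. Varying `n` moves the working set between cache levels.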

Flynn's Taxonomy

| | Single instruction | Multiple instruction |
|---|---|---|
| Single data | serial | ??? |
| Multiple data | vectors | threads |

Different Parallelism
- instruction-level parallelism
  - sequential sequence of instructions
  - not actually sequential
  - transparent to programmer
- next up: thread-level parallelism
  - multiple sequential sequences of instructions
  - run in parallel (apparently)
  - exposed to programmer
- later: vectorization
  - sequential sequence of instructions that each does multiple copies of the same thing
  - exposed to programmer
